Verification Data Analytics

Hardware Verification is a highly data-intensive problem. Verification Engineers recognise this and spend much of their time dealing with large and complex datasets arising from verification processes.

In “The Dilemmas of Hardware Verification” we explored the key challenges around verification of complex hardware IP and systems. The “completeness dilemma” leads engineering teams to be heavily dependent on data and data analytics, to make incomplete processes measurable and bounded and allow product development teams to make data-driven decisions about signoff quality for product releases.

So, in fact, one of the core skillsets of a good Verification Engineer is data analytics.

Great Verification Engineers need to be great Data Analysts.

Engineers deal with huge volumes of data: suites of tests, test results, coverage results, resource planning and utilisation data, version control data, waiver analysis, bug and defect tracking, and ongoing optimisation and continuous improvement through trend analysis and root-cause analysis.

In so doing, Verification Engineers utilise many different data sources to ensure projects are on track and progressing towards project goals whilst ensuring accurate information is available to support signoff decisions at key quality milestones occurring during the product development lifecycle.

Verification data also presents a huge opportunity to optimise and streamline verification workflows.

The ROI of the final delivered product is heavily determined by the development costs, and it’s been well documented that 70% or more of these costs are attributable to verification activities. So, care must be taken to ensure that verification activities are effective and efficient and not wasteful.

Of course, a healthy degree of paranoia is helpful from a Verification Engineer’s perspective. There is a strong compulsion to run more and more verification cycles, because a bug escape that reaches the customer or end user can be extremely costly, impactful, and reputationally damaging. See “The Cost of Bugs” where we explore the balance between the “cost of finding bugs” (the verification costs) versus the “cost of not finding bugs” (the impact costs of bug escapes).

Insights from Data

The value of verification data is realised when it yields key insights.

Think of insights as questions.

An insight might be a high-level question that an Engineering Manager is asking of the engineering team to understand how effective or efficient the product development process is. It could also be a question asked by the senior leadership team, the quality team or the sales and revenue team.

Insights can also drive a strategy of continuous improvement enabled by an understanding of effectiveness and efficiency.

In some cases, insights can be unpredictable or unexpected. Curiosity and an analytical approach to cleaning, understanding, exploring, and validating the data, and to reviewing the analytical views, can reveal observations that were not previously available. These unexpected insights present opportunities to challenge the status quo and re-think established practices. However, care must be taken to challenge and validate the assumptions behind them.

Beware that it’s sometimes possible to make the analytics fit the narrative rather than the other way round.

It’s useful to think of insights in the context of a data-value stack, as illustrated in Figure 1 The Analytics Inverse Pyramid.

Insights enable Data-Driven Decision making.

Insights are made possible by good Data Analytics, which are in turn enabled by Data Models constructed from the Data Sources loaded into the Data Lake. The point is to figure out what Data-Driven Decisions are required by the business first and let this drive the data capture, the data pipelines, and the data analytics, not vice-versa!

Figure 1 The Analytics Inverse Pyramid

The raw data at the base of the pyramid has little value unless it is clean and accurate and is fed through a data pipeline driving powerful analytics driving high value insights.

The anatomy of data and why we should care…

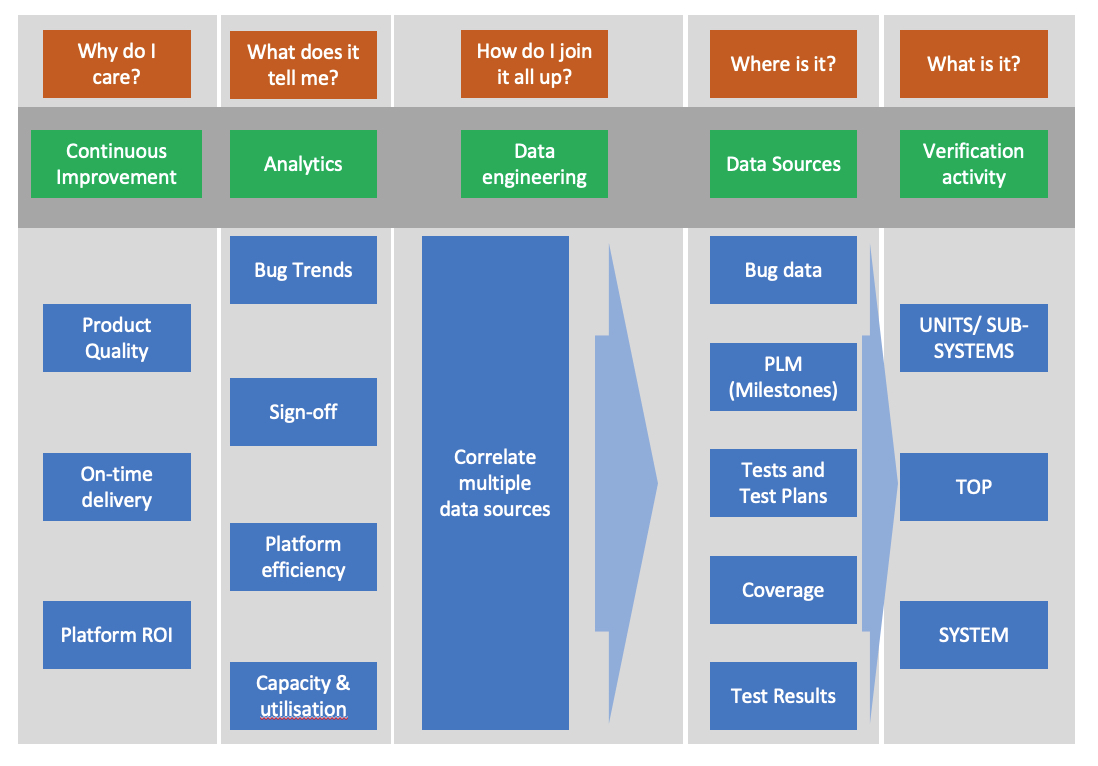

If we follow Exec-Level care-abouts driving verification excellence all the way through to verification engineering reality – daily activities – we can better describe what is happening at each stage.

From the CFO’s and CEO’s viewpoint there are multiple issues to worry about, but when it comes to the engineering development of the company’s all-important, revenue-bearing products, it boils down to these.

Figure 2 Cost, Quality, Delivery

Customers want the same outcomes from their supplier, meaning the verification effort you put in must be effective and efficient, to drive cost effective solutions for them. To achieve this, your design and verification processes must be well instrumented to avoid the so-called “black box” syndrome, whereby products arrive without a clear idea of just how good the verification effort has been at finding bugs and perhaps without a good handle on costs, or project timescales.

Excellence depends on good data and an engineering culture that knows how to exploit it.

Figure 3 Data Pipelines, below, indicates the importance of analytics to provide insights into the verification effort to assess effectiveness and efficiency. Useful analytics require the correlation of information from various data sets generated by the daily activity of design and Verification Engineers.

Figure 3 Data Pipelines

It’s a useful thought-experiment to measure where your verification effort sits in relation to the questions in orange, at each of the data pipeline stages above. Perhaps surprisingly, not all engineering teams have a good enough handle on what data they have, where it’s located, how clean it is and how to exploit it. Later in the paper we explore creating the culture of curiosity and the competences necessary to make this transition possible.

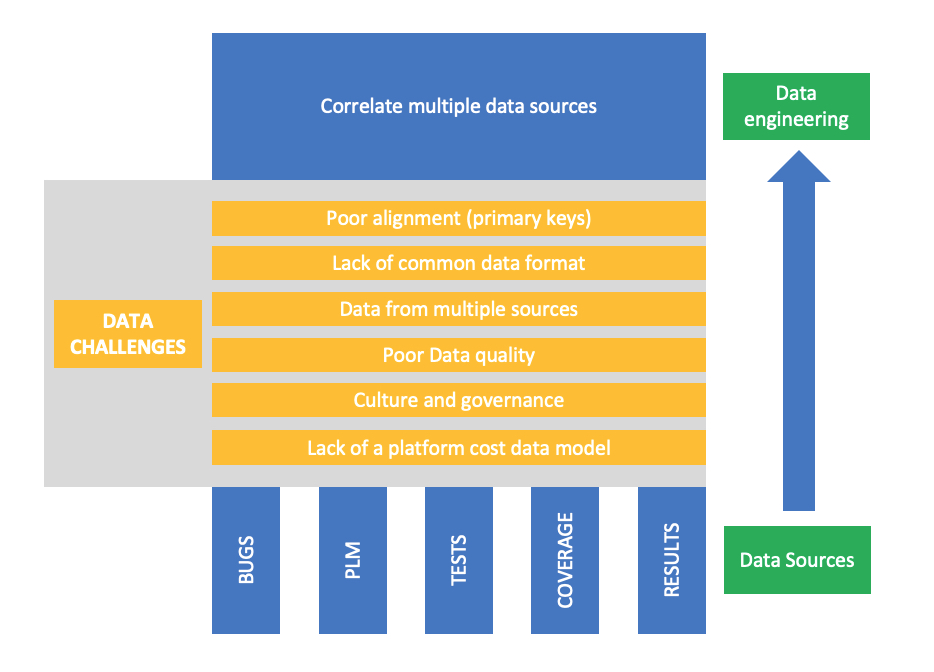

Figure 4 Data Challenges, below, illustrates some of the challenges teams are likely to encounter when developing the analytics needed for good decision making, to drive important improvements to verification processes and to indicate necessary investments in tools and hardware.

Figure 4 Data Challenges

These challenges are not unique to hardware verification but must be overcome to reach basic levels of analytics capability.

Deriving analytics from diverse data sets can be extremely complex, particularly when it comes to correlating them. A simple example would be to illustrate bug discovery at different stages of the product life cycle phases so you can assess progress against your Verification Plan.

Other insight questions require more complex data engineering to provide the information required. In smaller companies this task could fall to the engineering team, or it might be outsourced. As good “data engineers”, the verification team need to be comfortable with thinking around these problems.

Larger teams may have the luxury of internal data engineering/analyst resource to make these developments in-house. In both cases, Verification teams need to be fluent with the data challenges, to ensure they get what is needed if analytics are to be developed, or improved. See Step1: Train your engineers to think like Data Analysts.

The data quality, data volume trap…

Our focus for this white paper is to discuss “Data Analytics” in the context of organising, automating, cleaning, and visualising verification datasets that most teams already have. However, you can’t discuss this topic without raising the question: –

What about AI? Can I use it?

Everyone is aware of the potential offered by Machine Learning (ML) currently being embedded in EDA tools (see Step2: Exploit Advanced EDA Tooling), as well as the opportunities offered by data science to improve the targeting of coverage and parsing of data to make for easier analysis. Although this paper will touch on these subjects, it is primarily focussed on how to make the best use of data to drive better insights into the verification process.

Figure 5 Low quality, small datasets are barriers to developing analytics or successfully deploying advanced ML/AI techniques.

Although there are no publicly available numbers showing how many engineering teams have successfully implemented ML and AI, it is likely many will have encountered problems with data quality or size of datasets.

In their thought-provoking article, “A survey of machine learning applications in functional verification”, Yu, Foster and Fitzpatrick asserted, “Due to the lack of large datasets, much research has to settle for relatively primitive ML techniques that demand only small training datasets with hundreds of samples. The situation has prevented advanced ML techniques and algorithms from being applied”.

In Figure 5 (Data Quality), above, small amounts of unreliable verification data are difficult to analyse with any degree of confidence – you find yourself in Trap 1. In this case, the plausible option is to invest in cleaning up your data and developing excellent analytics – there is no easy jump to ML/AI in Level 2 from Trap 1.

Large amounts of low-quality information may be very difficult to manage and understand, making it unsuited to ML or AI techniques, let alone any necessary data engineering needed to produce good analytics – This is Trap 2. As with smaller data sets, a large-scale clean-up operation is indicated. For these reasons, bad data quality and smaller data sets present significant challenges for companies wishing to move to ML enabled EDA tools and more advanced AI techniques.

A more plausible and necessary step for many organisations is to make better use of the data they do have to create useful analytics to enable great decision making and continuous improvement.

Although “just” creating good analytics may seem less exciting than going straight to ML/AI in Level 2, they may still be difficult to implement until your data has been cleaned and some of the challenges explored in Figure 4 have been overcome.

Assuming you have your data engineering organised, built on a foundation of high-quality data and stunning analytics to shine the light into those dark and bug-rich corners, it’s time to think about what insights to look for.

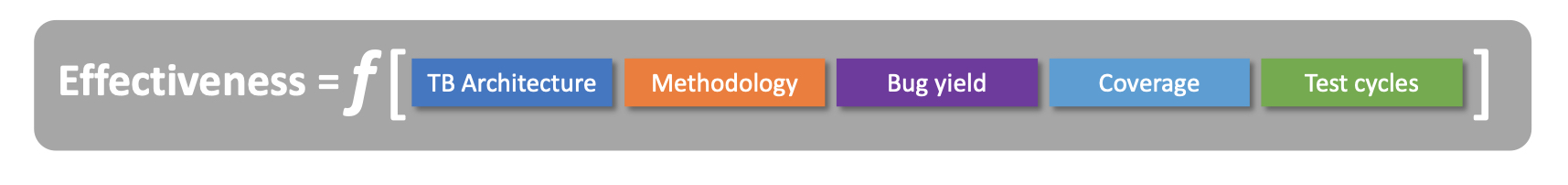

Insight: “Is my Verification Effective?”

Returning to “Insights”, many of the insights from verification datasets can be classified as effectiveness or efficiency insights. Let’s start with effectiveness. What does that mean for a verification team, and who else wants to know about it?

Effectiveness can be described as a function in the following way: –

Each of the variables in the formula is quite probably captured in a separate database and is described by a set of data schemas.

The richness of the data schemas used to collect the data has a direct impact on the quality of analytics that can be generated from it.

A “data model” connects these sources using primary keys to allow correlation of the data. Once the team have identified what analytics are required, there may be a need to elaborate the data schemas.
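As a minimal sketch of such a data model, the example below links a testbench table and a bug table through a shared key and counts bugs per testbench. The table names, column names, and rows are illustrative assumptions, not a schema taken from any particular tool; an in-memory SQLite database stands in for whatever store a team actually uses.

```python
import sqlite3

# Hypothetical two-table data model. Table and column names are
# illustrative assumptions, not a schema from any particular tool.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE testbench (
        tb_id INTEGER PRIMARY KEY,
        name  TEXT NOT NULL,
        level TEXT NOT NULL            -- e.g. unit, subsystem, top
    );
    CREATE TABLE bug (
        bug_id   INTEGER PRIMARY KEY,
        tb_id    INTEGER NOT NULL REFERENCES testbench(tb_id),
        severity TEXT NOT NULL
    );
""")
conn.executemany("INSERT INTO testbench VALUES (?, ?, ?)",
                 [(1, "alu_tb", "unit"), (2, "soc_tb", "top")])
conn.executemany("INSERT INTO bug VALUES (?, ?, ?)",
                 [(10, 1, "major"), (11, 1, "minor"), (12, 2, "critical")])

# Join on the shared key. LEFT JOIN keeps testbenches that found no bugs.
bugs_per_tb = conn.execute("""
    SELECT t.name, t.level, COUNT(b.bug_id) AS bugs
    FROM testbench AS t
    LEFT JOIN bug AS b ON b.tb_id = t.tb_id
    GROUP BY t.tb_id
    ORDER BY t.name
""").fetchall()
print(bugs_per_tb)
```

If the bug table did not record which testbench found each bug, this correlation would not be possible, which is the kind of schema elaboration the paragraph above refers to.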

The effectiveness insight requires analytics that show testbench effectiveness in terms of ability to make verification progress, defined as increasing coverage and/or finding bugs. If a testbench is not advancing coverage or finding bugs, then it might be ineffective, unless verification goals are already fully met.

The utility of good analytics is the ability to analyse testbench effectiveness in a visual fashion so that development teams can make targeted improvements to testbench implementations. Continuous improvements are achieved through iterations of code refactoring, performance optimisations, or re-architecting, with a view to increasing the ability of the testbench to hit bugs and coverage with fewer seeds or cycles. Analytics are used at each stage to demonstrate actual improvements.

INSIGHT: “Does my Testbench find Bugs?”

For this, we need data schemas that enable analytics to visualise and drill into the bug curve over time. We expect to see a cumulative bug curve that flattens and saturates over time; or a bug rate curve that peaks and then falls towards zero.
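Both curves can be derived from nothing more than the bug discovery dates. The sketch below bins bugs by week since the first discovery and accumulates a running total; the function name and the sample dates are made up for illustration.

```python
from collections import Counter
from datetime import date

def bug_curves(discovery_dates):
    """Return (weeks, weekly_rate, cumulative) from bug discovery dates."""
    if not discovery_dates:
        return [], [], []
    start = min(discovery_dates)
    # Bin each bug into a week number counted from the first discovery.
    per_week = Counter((d - start).days // 7 for d in discovery_dates)
    weeks = list(range(max(per_week) + 1))
    rate = [per_week.get(w, 0) for w in weeks]
    cumulative = []
    total = 0
    for r in rate:
        total += r
        cumulative.append(total)
    return weeks, rate, cumulative

# Made-up discovery dates, for illustration only.
dates = [date(2024, 1, 1), date(2024, 1, 3), date(2024, 1, 9),
         date(2024, 1, 10), date(2024, 1, 11), date(2024, 1, 24)]
weeks, rate, cumulative = bug_curves(dates)
print(rate)        # bugs found in each week
print(cumulative)  # running total of bugs found
```

Plotting `rate` and `cumulative` against `weeks` gives the two views described above; weeks with no bugs are kept as zeros so that flat regions of the curve remain visible.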

Better still is to correlate these bug curves with verification effort to give a true indication of verification effort versus bugs found.

And with a hierarchy of verification such as Unit->Sub-system->Top->System, the analytics need to be able to present the bugs versus effort data at each level and enable users to see how different levels and different units or sub-systems compare. Such analysis capability offers insights into which verification environments are effective, and which are apparently ineffective. From this, teams can make decisions about where to invest engineering effort for the greatest return.

What does that mean in terms of data?

To do this we need to join the bug data with the verification results data so that we can explore how many cycles of verification are running between finding bugs – and to look at this over the product development lifecycle since it will vary according to what stage of development the product is at.
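One way to picture this join is sketched below, assuming each regression run record and each bug record carries a lifecycle stage and a testbench name. The field names, stage names, and numbers are hypothetical.

```python
from collections import defaultdict

# Hypothetical records; the field names and values are assumptions.
runs = [
    {"stage": "alpha", "testbench": "alu_tb", "cycles": 4_000_000},
    {"stage": "alpha", "testbench": "lsu_tb", "cycles": 6_000_000},
    {"stage": "beta",  "testbench": "alu_tb", "cycles": 30_000_000},
    {"stage": "beta",  "testbench": "lsu_tb", "cycles": 20_000_000},
]
bugs = [
    {"bug_id": 1, "stage": "alpha", "testbench": "alu_tb"},
    {"bug_id": 2, "stage": "alpha", "testbench": "alu_tb"},
    {"bug_id": 3, "stage": "alpha", "testbench": "alu_tb"},
    {"bug_id": 4, "stage": "alpha", "testbench": "lsu_tb"},
    {"bug_id": 5, "stage": "beta",  "testbench": "alu_tb"},
    {"bug_id": 6, "stage": "beta",  "testbench": "lsu_tb"},
]

def cycles_per_bug(runs, bugs, key="stage"):
    """Average cycles run per bug found, grouped by `key`.

    Groups that ran cycles but found no bugs map to None.
    """
    cycles = defaultdict(int)
    found = defaultdict(int)
    for run in runs:
        cycles[run[key]] += run["cycles"]
    for bug in bugs:
        found[bug[key]] += 1
    return {k: (cycles[k] / found[k] if found.get(k) else None)
            for k in cycles}

print(cycles_per_bug(runs, bugs))                   # grouped by stage
print(cycles_per_bug(runs, bugs, key="testbench"))  # grouped by testbench
```

Grouping by stage shows how the cost of finding each bug changes through the lifecycle, while grouping by testbench supports the comparison between environments discussed earlier.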

INSIGHT: “Does my Testbench increase Coverage?”

The analytics also need to correlate coverage data with verification effort data. If the analytics reveal that the bug curve is saturated and the coverage is saturated, the engineering team can use this information to make decisions about what to do next: Run more cycles? Run fewer cycles? Improve the verification environment?
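A simple saturation check can be sketched as follows, assuming coverage is sampled as a percentage after each regression. The window size and the minimum-gain threshold are arbitrary assumptions that a team would tune for its own data.

```python
def is_saturated(coverage_by_run, window=3, min_gain=0.1):
    """True if coverage rose by less than `min_gain` percentage points
    over the last `window` samples. Both defaults are assumed values."""
    if len(coverage_by_run) <= window:
        return False  # not enough history to judge
    gain = coverage_by_run[-1] - coverage_by_run[-1 - window]
    return gain < min_gain

# Made-up coverage percentages after successive regressions.
history = [62.0, 78.5, 88.0, 93.1, 93.15, 93.15, 93.18]
print(is_saturated(history))      # coverage has flattened
print(is_saturated(history[:4]))  # coverage is still climbing
```

A flat coverage curve on its own does not say whether goals have been met, so a check like this is only a prompt to look at the bug curve and the verification plan together.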

Further, with bug and coverage data collected across the whole product development lifecycle and all verification methodologies applied, you can reason about the relative effectiveness of each methodology. Effectiveness must be judged in the context of the stage you are at. For example, Unit testing might appear to be ineffective (does not find many bugs) because earlier top-level or formal verification has already done a good job of cleaning out most bugs. So, you must consider the whole lifecycle of verification and the order in which the various methodologies are executed.

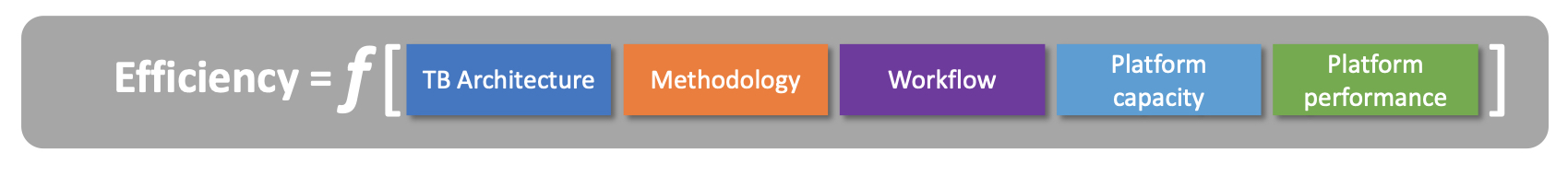

Insight: “Is my Verification Efficient?”

The second most important question relates to efficiency. You may have effective stimulus and checking, but can verification be done with the minimum amount of human and platform resources, and can it be delivered in the shortest possible time?

Efficiency is a function of the following: –

To understand efficiency, you must look at the details of:

- Individual testbenches to understand if they have been architected and implemented in an optimal way for the highest performance with the given methodology.

- Regression workflows to understand if they are running jobs optimally and not wasting resources by needlessly re-running full regression sets when more targeted runs are more efficient.

- The available platform capacities which may be shared across multiple teams. Is there a shortage of resources that leads to inefficiencies in utilisation?

- The performance of the platform, both the hardware (compute, storage, and network) and the EDA tools that are running the verification workloads.

This insight tells us how efficiently simulation testbenches are implemented. If a testbench is very slow, it will consume much greater levels of compute and simulation license resources. Slow testbenches might need to be re-implemented to make them run faster. This question relates to Unit or Sub-system testbench architecture and methodology.

Efficiency insights require analytics that reveal relative performances of verification environments tracked over time so that changes to performances can be identified and outliers can be observed and investigated. Since testbenches will vary by architecture and implementation, some degree of performance variability is to be expected, but having good analytics dashboards available to monitor these environments enables early detection of performance impacts that may arise from bad coding practices or platform/environment/tools degradations. When teams can see this data – they can fix these problems.
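As an illustration of the kind of check such a dashboard might run, the sketch below flags any regression run whose simulation throughput falls well below the median of the runs preceding it. The metric (cycles per second), the history length, and the 20% drop threshold are all assumptions.

```python
from statistics import median

def flag_slowdowns(throughput, history=5, drop=0.2):
    """Return indices of runs whose throughput is more than `drop`
    (as a fraction) below the median of the preceding `history` runs."""
    flagged = []
    for i in range(history, len(throughput)):
        baseline = median(throughput[i - history:i])
        if throughput[i] < (1 - drop) * baseline:
            flagged.append(i)
    return flagged

# Made-up cycles-per-second figures for one testbench, oldest first.
cps = [1000, 1020, 990, 1010, 1005, 1000, 700, 995]
print(flag_slowdowns(cps))
```

Using a median rather than a mean keeps a single slow run from dragging the baseline down, so normal run-to-run variability is tolerated while a genuine step change stands out.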

Without analytics, teams are flying blind regarding efficiency.

Collecting bug data is the most important step towards Level 1 analytics capability!

We have discussed the value of bugs in The Quest for Bugs series of articles, but it is worthwhile to restate here why Bug Data is one of the richest sources of verification data and can drive the most useful insights such as verification effectiveness.

Bugs are a fantastic source of insights and learning, BUT only if you collect them!

…and the collection of good quality bug data is the challenging bit.

With enough accurate bug data, you can glean insights into both the effectiveness of your verification strategies, and the quality of your design (Level 1). If you look across the entire design, do some units or functions yield more bugs than others and if so, what is the cause of this? Maybe steps can be taken to reduce the number of bugs being introduced into the code? Does the bug data point at hotspots of complexity? What is the underlying root cause of these bugs and can bugs be avoided in the first place? From a verification effectiveness perspective, which methodologies are the most effective at finding bugs quickly? Are you spending vast resources running verification cycles that do not find bugs?

Can you “shift-left” and find those bugs earlier in the product development lifecycle and saturate the bug curve sooner, meaning release the product sooner?

To answer these questions, you need to ensure you are collecting enough bug data and that you have an adequate bug schema that captures the right information about bug discovery, bug impacts, and bug root causes. If you have a rich bug dataset, you will be able to drill bug analytics to answer many of these questions and perhaps expose some unexpected insights. Welcome to Level 1 Analytics!
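To make the idea of a bug schema concrete, here is one possible shape for a bug record, grouped into discovery, impact, and root-cause fields. Every field name and category value is a hypothetical example rather than a recommended standard; the helper simply reports which fields are still empty so that incomplete records can be chased up.

```python
from dataclasses import dataclass, asdict
from datetime import date
from enum import Enum
from typing import Optional

class Severity(Enum):
    CRITICAL = "critical"
    MAJOR = "major"
    MINOR = "minor"

@dataclass
class BugRecord:
    """Hypothetical bug schema; all field names are assumptions."""
    bug_id: str
    # Discovery: when, how, and where the bug was found.
    found_on: date
    found_by: str          # e.g. "unit_sim", "formal", "top_sim"
    testbench: str
    lifecycle_stage: str
    # Impact: how serious it is and what it affects.
    severity: Severity
    affected_unit: str
    # Root cause: often only known after analysis, so optional.
    root_cause: Optional[str] = None

    def missing_fields(self):
        """Names of fields that are still empty."""
        return [name for name, value in asdict(self).items()
                if value in (None, "")]

bug = BugRecord(
    bug_id="BUG-001",
    found_on=date(2024, 1, 9),
    found_by="unit_sim",
    testbench="alu_tb",
    lifecycle_stage="alpha",
    severity=Severity.MAJOR,
    affected_unit="alu",
)
print(bug.missing_fields())
```

Constraining fields such as severity to a fixed set of values is one way to keep the data clean at the point of capture rather than repairing free-text entries later.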

The challenge is often persuading your engineering teams to get the bug-logging habit.

It’s an engineering practices or engineering culture thing. Some teams just do this as a natural part of their job, other teams are less willing and see bug-logging as an overhead to making forward progress.

Engineering teams need to see concrete value from the bug analytics as a motivation to collect the data. But of course, it’s a “chicken and egg” problem; no bug data or poor-quality bug data = no analytics or low value analytics.

When is the right time to start bug-logging? How do you ensure that the bug data is complete and accurate?

There are 3 key motivators for bug-logging: –

- Teamwork and communication: the task list to develop complex products (hardware or software), is long and likely to involve multiple people. Unless bugs are diligently logged and tracked there is a risk of bugs slipping through due to poor practice. It’s often the case that the bug reporter and the bug solver are not the same person, so you need to record and track the bug communications (triage, analysis, and solutions) to ensure nothing slips through the net.

- Progress tracking and sign-off: As the project transitions through the product development lifecycle there is a need to understand what the bug-curve looks like at any one point in time. What is the current bug rate? How many bugs are outstanding at each sign-off point? Is the bug curve trending in the right direction as expected? How many critical bugs do we have versus major and minor bugs?

- Continuous Improvement: By analysing the bug discovery data and the bug root causes, we can use these insights to improve the effectiveness and efficiency of our design and verification methodologies. This is where continuous learning from bugs, both within a project and between projects, can really reduce costs, improve quality, and reduce time-to-market for complex products.

If you can collect bug data accurately and consistently, then many of the above insights will be available to you. Furthermore, if you can join this bug data with other interesting data sources such as test execution data, project milestone data, or resource consumption data, then there are additional powerful insights that are possible that will illuminate the cost-benefit of your engineering efforts.

Step1: Train your engineers to think like Data Analysts

In Figure 5 we described routes out of data/volume traps towards Level 1 and 2 capabilities. We can also identify three more specific stages that will need to be attained to make progress.

As we mentioned, data analysis is a core skill for Verification Engineers, whether they realise it or not. Sometimes however, the basics of data fluency are not there, and this is something you can train your engineers in. Often, data analysis can be quite basic; maybe a static extract of data that is visualised as an Excel table, or better as an Excel chart. These basic analytics are static views of data that maybe need to be updated manually and regularly and are presented as snapshots in time for project reporting or progress tracking.

Live and fully automated analytics is the way to go. Engineers and managers need to be able to access data analytics at any time and trust that what they are seeing is the latest complete and accurate data. They need to be able to self-serve these analytics and not rely on engineers or data-analysts to refresh and serve the analytics on request. This requirement leads to the need to deliver user-friendly visualisations underpinned by automated data pipelines that consume data at source and clean and transform that data into reliable data models upon which interactive visualisations can be built.

So, more skills are required here than a basic competence with spreadsheets and charts.

We advocate the training of some core data skills for engineers that will enable them to understand and present their data in a way that leads to powerful insights. Some of these activities can be outsourced to trained data analysts, but a core knowledge in this area ensures that Verification Engineers gather and analyse the right datasets and understand what data is needed and how to interpret it. It also engenders a data perspective (or data fluency) where engineers start to understand how to read data, how to manipulate and transform it, and how to be wary of pitfalls that can produce misleading results, such as many-to-many relationships between data elements.

- Data Capture: Where is your data coming from? What is the provenance of the data, and is it all being collected? This usually entails some instrumentation of verification workflows to capture data and send it to a Data Lake. In turn, that means that you need to figure out the correct data schema that will capture all the required fields needed to support the analytics. This should be an automated process so that data capture is on by default. Capture the data, regardless of whether you then need to filter and sample it later for the analytics.

- Data Cleaning: Most raw data needs some level of cleaning or processing, for example to remove nulls or duplicates, to correct errors or bad entries, or to backfill data gaps. This can be done interactively but is best done as automated batch processing wherever possible. Data cleaning can be scripted with the Python NumPy and pandas libraries, for example, where powerful data operations can be performed on data frames in just a few steps. (Many Verification Engineers will already be using Python for verification workflow scripting and processing, so the addition of these data analysis libraries and the concepts around data frame manipulations should not be a difficult step.)

- Data Engineering: This is the step where data is transformed and manipulated into a format suitable for data visualisation. This may involve joining and merging different data sources so that important correlations are possible that will deliver key insights from the data. See Figure 4 Data Challenges. Sometimes called the data model, it is the schema that controls how different data tables are joined, using common elements (primary keys) that link them together. It may also involve pivots, aggregations, summarisations, or the generation of derived or calculated data elements. For example, verification teams might want to correlate simulation testbench execution result data with bug tracking data to understand how effective different testbenches are at finding bugs in the RTL. Additionally, data engineering competence might extend to databases – how to set up structured databases such as MySQL, or unstructured databases (or Data Lakes) such as MongoDB or Hadoop, for example. There is much to learn in this domain, and it’s an area where data engineers and data analysts will specialise, so as a Verification Engineer or Design Engineer, it may be good to understand this discipline but to outsource the data engineering work to data specialists.

- Data Querying: This may be more of a data engineering skill set, but some basic SQL capability may be useful to support early exploration of datasets, before full data visualisations are available. Exploring datasets is a key competence when presented with new data and prior to establishing any automated analytics. SQL is a core competence for most Data Analysts.

- Data Visualisation: Finally, the bit that will deliver results and key insights is where the data is visualised, and the end user can interact with the data. This is sometimes referred to as “Business Intelligence” since it presents intelligence or insights into the state of the business (or the state of a product development project). The importance of learning good data visualisation skills should not be underestimated, and there are multiple good tooling options that are fun to learn and can deliver impressive visualisations very quickly e.g., PowerBI or Tableau. Learning to use these tools effectively generates real interest and excitement around data so it’s a worthwhile core skill to add to the Design or Verification Engineer’s skillset.
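The data cleaning step in the list above might look something like the following pandas sketch. The column names and the messy sample rows are invented for illustration, and replacing a missing severity with "unknown" is just one possible backfill policy.

```python
import pandas as pd

# Invented raw bug export with typical problems: a missing key,
# a duplicated row, inconsistent case, and stray whitespace.
raw = pd.DataFrame({
    "bug_id":    ["B1", "B2", "B2", "B3", None],
    "severity":  ["Major", " minor", " minor", None, "critical"],
    "testbench": ["alu_tb", "ALU_TB ", "ALU_TB ", "lsu_tb", "lsu_tb"],
})

clean = (
    raw.dropna(subset=["bug_id"])        # a bug without an ID cannot be joined
       .assign(
           # Normalise text, then backfill missing severities.
           severity=lambda df: df["severity"].str.strip().str.lower()
                                             .fillna("unknown"),
           testbench=lambda df: df["testbench"].str.strip().str.lower(),
       )
       .drop_duplicates(subset=["bug_id"])  # keep one row per bug
       .reset_index(drop=True)
)
print(clean)
```

Normalising the text before removing duplicates means that rows differing only in case or whitespace are treated as the same record. Run as a batch step in the pipeline, the same few lines apply to every new extract without manual effort.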

Step2: Exploit Advanced EDA Tooling

The EDA industry has been working on ways to exploit AI and ML to enhance their tool offerings for several years now. This is enabled by both the large volumes of data generated by many EDA verification tools, and the emergence and maturing of general ML algorithms which can be suitable to many verification data problems. These tools are often offered as new versions of the existing tools that can be licensed, or enhancements of existing tools where performance and efficiencies are improved thanks to ML under-the-hood. The end user may not need to know that ML is being utilised by the tools, or change the way that they use the tools, but the tools will perform better. This presents a low barrier to adopting more advanced tooling by your verification teams should you choose to do so, and without the need to train as data scientists or learn ML. We are not going to discuss the specific offerings of the EDA vendors or attempt to survey the market here. Our point is this:

Verification teams should be encouraged to explore and evaluate the available offerings…

… to see if the cost-benefit is there for their workflows and their product development lifecycles. The EDA industry is constantly evolving the tools that are on offer, and verification tooling has been an area of high innovation for some time. It is the responsibility of the verification team to keep abreast of the latest developments and engage with the EDA vendors to ensure their current and future requirements can be met by the vendors’ technology roadmaps.

Some of the ways (but not all) that ML is enhancing EDA tool offerings are in the following areas: –

- Debug acceleration using automated failure clustering and signature analysis.

- Execution optimisation to ensure optimal tool settings are used for simulation runs.

- Optimisation of formal engine selections for formal verification.

- Coverage closure acceleration by test selection ranking and optimisation.

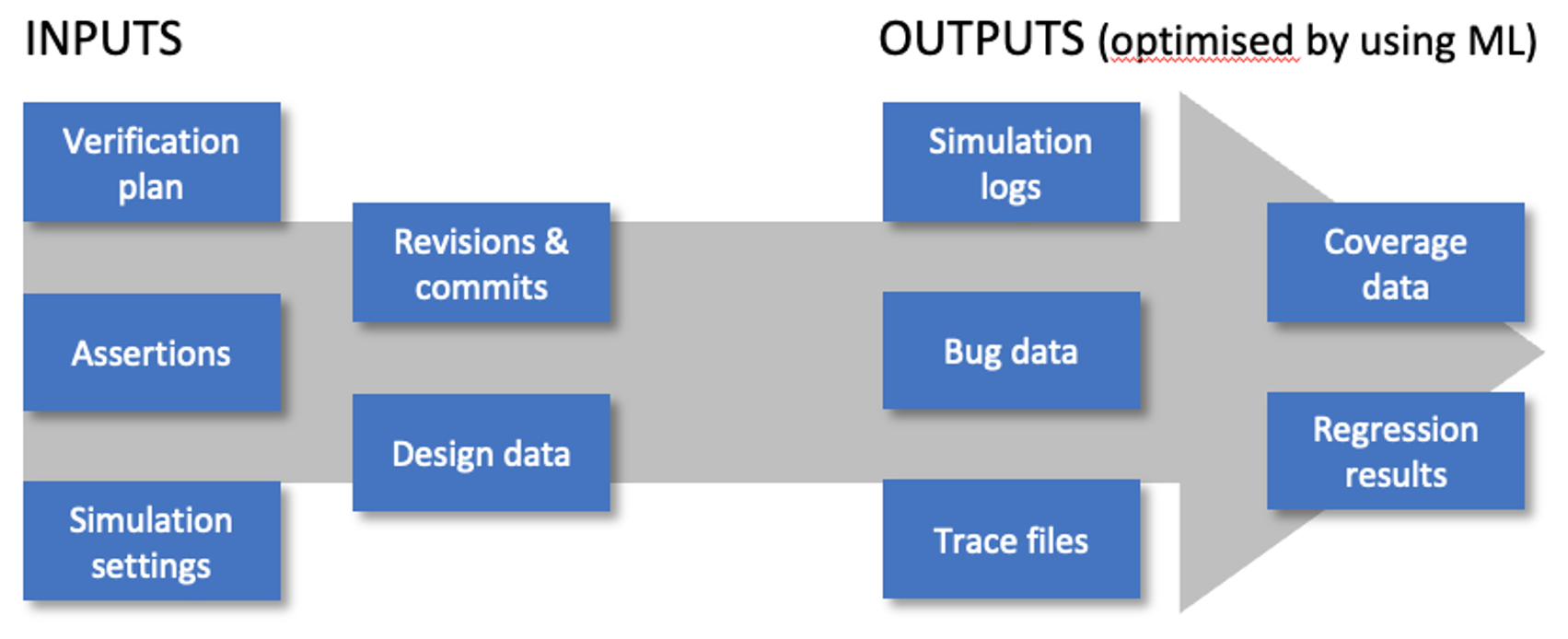

You can think about verification workflows as a set of data inputs and data outputs, as shown below. Both input data sets and the generated output data sets can be candidates for ML opportunities. We know how much effort can be expended on coverage analysis and parsing of test results. Even small improvements in efficiency and effectiveness in these key areas can yield worthwhile savings in cost, quality, and time to market.

Figure 6 ML for EDA tooling

Step3: Train your engineers to think like Data Scientists

So far we have talked about the core skills required to perform competent data analytics, but of course there is a whole branch of data analytics that is often referred to as Data Science, which is exciting and appealing because it offers us opportunities to exploit our data in different ways and yield further insights from the data that may not be achievable with data visualisations alone. Often referred to as ML or Machine Learning, there is a well-established discipline that is accessible to all with a bit more basic training. There are libraries of ready-made algorithms available; you can find many of these conveniently bundled in Python’s scikit-learn library for example. Curious Verification Engineers love to innovate and problem-solve around verification efficiency and effectiveness. These are engaging and challenging problems and solving them by learning and applying new ML skills can be highly motivating. Learning these new skills is also fun and enjoyable and there are many excellent on-line learning platforms that can get you from zero-to-hero in a very short time e.g., Dataquest, DataCamp, Udemy, Coursera, Codecademy, to name a few.

If your engineering team has mastered basic data analytics and visualisation skills, your data pipeline is clean and accurate, and you are collecting enough data, then there are many optimisation problems in verification that may be ripe for an ML approach – e.g., regression set reduction and optimisation, prediction modelling for resource demands, coverage closure optimisation etc.
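Before reaching for ML, it can help to have a simple baseline to compare against. The sketch below is not an ML technique: it is a plain greedy set-cover heuristic for regression set reduction, which repeatedly picks the test that adds the most not-yet-covered coverage points. The test names and coverage points are made up, and real coverage data would be far larger.

```python
def reduce_regression(test_coverage):
    """Greedy set cover: keep picking the test that adds the most new
    coverage points until no remaining test adds anything."""
    remaining = {test: set(points) for test, points in test_coverage.items()}
    covered = set()
    selected = []
    while remaining:
        # sorted() makes tie-breaking deterministic (alphabetical).
        best = max(sorted(remaining),
                   key=lambda t: len(remaining[t] - covered))
        gain = remaining.pop(best) - covered
        if not gain:
            break  # nothing left adds new coverage
        selected.append(best)
        covered |= gain
    return selected, covered

# Made-up mapping of tests to the coverage points they hit.
coverage = {
    "t1": {"a", "b", "c"},
    "t2": {"b", "c"},
    "t3": {"c", "d"},
    "t4": {"a"},
}
selected, covered = reduce_regression(coverage)
print(selected)
```

Here two of the four tests reach the same coverage as the full set. Any learned model for regression optimisation would need to improve on a baseline like this to justify its added complexity, and coverage alone says nothing about which tests are best at finding bugs.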

Beyond this, there is much excitement about AI today, especially the application of Generative AI to problems such as test generation or code writing. We are not going to explore that topic here but, when Verification Engineers start to think and act like data scientists, there may be many opportunities to make tangible improvements to the way that complex designs are verified using fewer resources, in a shorter time, and delivering higher quality products.

Summary

Hardware Verification is a data-heavy problem.

Verification Engineers have known this for some time, and their day-to-day work involves the gathering, processing, and reporting on some large datasets. The reason it is a data-heavy problem is that verification is intrinsically an open-ended problem. Engineering teams need insightful analytics to make this open-ended process measurable and finite. Some engineering teams are still working with spreadsheet level analysis and visualisation, often using static snapshots of data, and manual data manipulations that are time-consuming to update. There may be many different data sources contained in many different systems which makes it difficult to join data and make insightful correlations.

For many, the challenge is how to exploit verification data with data analytics that will reveal significant opportunities to improve hardware verification.

There are mature disciplines available to assist with this, especially in the areas of data engineering, data analytics and data visualisation. Engineering teams need to either up-skill in modern data analytics, or engage professional data engineers, data analysts, data scientists, to bring these capabilities to the product development process. The end point is a set of interactive and real-time analytics that are intuitive, accessible, accurate, and self-service. Consumers of analytics should no longer need to raise a request to see an updated report. They should access the visualisations themselves and understand how to drill-down and filter to the view they require, which they can save or embed as a favourite view, knowing that this is real-time data, and trusting that the data is accurate. Report generation becomes a less onerous task when you have live analytics at your fingertips. The improved availability, and accessibility means analysis is devolved to those that need the data, and what’s more, curiosity should reveal previously unknown insights when the data is so much easier to see and explore.

If you do nothing else, refine your bug data capture behaviours and processes…

… because bug analytics can reveal insights that can be acted on in the near term.

That’s the baseline verification data analytics to aim for. Do this first. Establish a clean, accurate and complete data pipeline where the end point is fantastic explorable data visualisations. Beyond that, there are further possibilities to explore datasets more deeply and exploit more advanced techniques such as ML or AI to understand previously unseen patterns in data and build feedback loops into processes and workflows to optimise and reduce time, effort, and cost. We note that all the mainstream EDA verification tool vendors are already building ML under the hood for many of their advanced tool offerings. These can be exploited today without the need to train your engineers as data scientists. Most verification activities involve some sort of iteration or refinement towards a result. You may be able to get there with an acceptable level of accuracy in a fraction of the time using ML/AI. More advanced teams or teams who are engaging trained data scientists may be able to realise these gains as data maturity grows and engineering teams adopt a strong data culture.

Authors:

Bryan Dickman, Valytic Consulting Ltd.,

Joe Convey, Acuerdo Ltd.

Also Read:

The Quest for Bugs: “Shift-Left, Right?”

The Quest for Bugs: “Correct by Design!”

The Quest for Bugs: Bugs of Power

The Quest for Bugs: “Deep Cycles”

The Quest for Bugs: Dilemmas of Hardware Verification


- Source: https://semiwiki.com/eda/335551-the-quest-for-bugs-verification-is-a-data-problem/