Introduction

Sam Altman said something big was loading, and we wondered whether OpenAI would release a new search engine or even GPT-5. The wait is over and the rumors have been put to rest: GPT-4o is out, and everyone is stunned by its capabilities!

I would say it is ABSOLUTELY wild. What a time to be alive!

Every new OpenAI flagship model sparks excitement and speculation, and the latest sensation in the AI community is GPT-4o. With promises of enhanced capabilities and accessibility, GPT-4o is poised to revolutionize how we interact with AI systems.

With the Spring Update, it is clear that GPT-4o is a step towards a much more natural form of human-computer interaction. Its response speed, intelligence, ability to talk about images, price, and help with solving equations make me say that with GPT-4o, Sam Altman is trying to remind me of the movie “Her.”

GPT-4o (the “o” stands for “omni”) brings GPT-4-level intelligence but is faster and works not just with text but also with voice and images. This launch shows OpenAI’s commitment to making high-level AI available to everyone, with tools that help users everywhere increase their productivity and creativity. For those using GPT-3.5, there’s no more missing out: with GPT-4o, you can expect results as good as, or even better than, GPT-4. Now that we have a new model in the market, let’s dig in, shall we?


Who Can Access GPT-4o?

Now comes the real question: GPT-4o is great and everything, but who can access it? The answer is EVERYONE.

- ChatGPT Free Users: GPT-4o is now available to free-tier users with usage limits. Once a user reaches the message cap, ChatGPT automatically switches to GPT-3.5 so the conversation can continue seamlessly.

- Plus Users: Plus subscribers benefit from up to 5x more messages with GPT-4o compared to free-tier users.

- Team and Enterprise Users: Team and Enterprise users will enjoy even higher usage limits, making GPT-4o a valuable tool for collaborative work.

New Features for ChatGPT Free Users

That’s not all; there’s more coming your way for free. To democratize advanced AI tools, GPT-4o brings several new features to ChatGPT Free users:

- GPT-4 Level Intelligence: Access to GPT-4-level intelligence for enhanced interactions.

- Web Access: Get responses not only from the model but also through web browsing.

- Data Analysis and Visualization: Analyze data and create charts with ease.

- Image Conversations: Chat with GPT-4o about photos you take for insights and recommendations.

- File Uploads: Upload files for summarization, writing assistance, or data analysis.

- GPT Store Access: Discover and use specialized GPTs via the GPT Store.

- Memory Feature: Create a more personalized experience with memory-enabled interactions.

Here’s How You Can Access GPT-4o

To access GPT-4o, you can follow these steps:

- Create an OpenAI API Account

If you don’t already have one, sign up for one.

- Add Credit to Your Account

Ensure you have sufficient credit in your OpenAI account. You need to add at least $5 in credit to access the models.

- Select GPT-4o in the API

Once you have credit in your account, you can access GPT-4o through the OpenAI API. You can use GPT-4o in the Chat Completions API, Assistants API, and Batch API. This model also supports function calling and JSON mode. You can get started via the Playground.

- Check API Request Limits

Be aware of the API request limits associated with your account. These limits may vary depending on your usage tier.

- Accessing GPT-4o with ChatGPT

A. Free Tier: Users on the Free tier will be defaulted to GPT-4o and have a limit on the number of messages they can send. They also receive limited access to messages using advanced tools.

B. Plus and Team: Plus and Team subscribers can access GPT-4 and GPT-4o on chatgpt.com with a larger usage cap. Plus and Team users can select GPT-4o from the drop-down menu.

C. Enterprise: ChatGPT Enterprise customers will have access to GPT-4o soon. The Enterprise plan offers unlimited, high-speed access to GPT-4o and GPT-4, along with enterprise-grade security and privacy features.

Remember, unused messages do not accumulate, so use your message quota effectively based on your subscription tier. GPT-4o is now available as a text and vision model in the Chat Completions API, Assistants API, and Batch API!
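To make the API route above concrete, here is a minimal Python sketch that assembles a Chat Completions request body for `gpt-4o` with JSON mode turned on. It only builds the payload, so it runs without an API key or network access; the helper name `build_json_mode_request` and the prompt are hypothetical. With the official `openai` Python package installed and `OPENAI_API_KEY` set, the same fields could be sent with `OpenAI().chat.completions.create(**payload)`.

```python
import json


def build_json_mode_request(user_prompt: str, model: str = "gpt-4o") -> dict:
    """Build (but do not send) a Chat Completions request body with JSON mode on.

    Hypothetical helper for illustration only.
    """
    return {
        "model": model,
        "messages": [
            # JSON mode requires the word "JSON" somewhere in the messages,
            # so the system prompt asks for it explicitly.
            {"role": "system", "content": "You are a helpful assistant. Reply in JSON."},
            {"role": "user", "content": user_prompt},
        ],
        # Asks the API to return a single syntactically valid JSON object.
        "response_format": {"type": "json_object"},
    }


if __name__ == "__main__":
    payload = build_json_mode_request(
        "Give three everyday uses of GPT-4o as a list under the key 'uses'."
    )
    print(json.dumps(payload, indent=2))
```

The system message mentions JSON on purpose: the API rejects JSON-mode requests whose messages never ask for JSON.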

Key Highlights of GPT-4o

Unified Multimodal Model

GPT-4o is a single model that can understand and respond using any combination of text, audio, and images. This means you can talk to it, show it pictures, or type messages in the same conversation. For example, if you’re talking to it in a noisy room, it can pick out what you’re saying despite the background noise, and it might even respond with a laugh or a song if that fits the conversation!

Real-Time Audio and Voice Conversations

GPT-4o can respond to audio input in as little as 232 milliseconds, with an average of about 320 milliseconds, which is similar to human response time in a conversation. This makes talking to it feel like chatting with a friend who answers with barely any delay.

Enhanced Vision and Image Understanding

GPT-4o is really good at looking at images and understanding them. You could show it a photo of a restaurant menu in Italian, and it could not only translate it into English but also tell you about the dishes’ history and suggest what to order based on your preferences.
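A photo like that menu reaches the model as part of an ordinary Chat Completions message. The sketch below assumes the image is embedded as a base64 data URL, one of the input forms the API accepts alongside plain `https` image URLs; the `image_message` helper and the placeholder bytes are hypothetical, and nothing is sent over the network.

```python
import base64
import json


def image_message(question: str, image_bytes: bytes, mime_type: str = "image/jpeg") -> dict:
    """Build one user message that pairs a text question with an inline image.

    Hypothetical helper: the image is embedded as a base64 data URL
    inside an "image_url" content part.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes; in practice you would read a real photo from disk.
    msg = image_message("Translate this menu into English.", b"placeholder image bytes")
    payload = {"model": "gpt-4o", "messages": [msg]}
    print(json.dumps(payload, indent=2))
```

Text and image parts travel together in one `content` list, which is what lets the model answer a question about the picture in a single turn.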

Speed and Cost Efficiency

GPT-4o is twice as fast as GPT-4 Turbo, so you get answers with less waiting. It is also 50% cheaper in the API, so developers and businesses can save money while using advanced AI features.

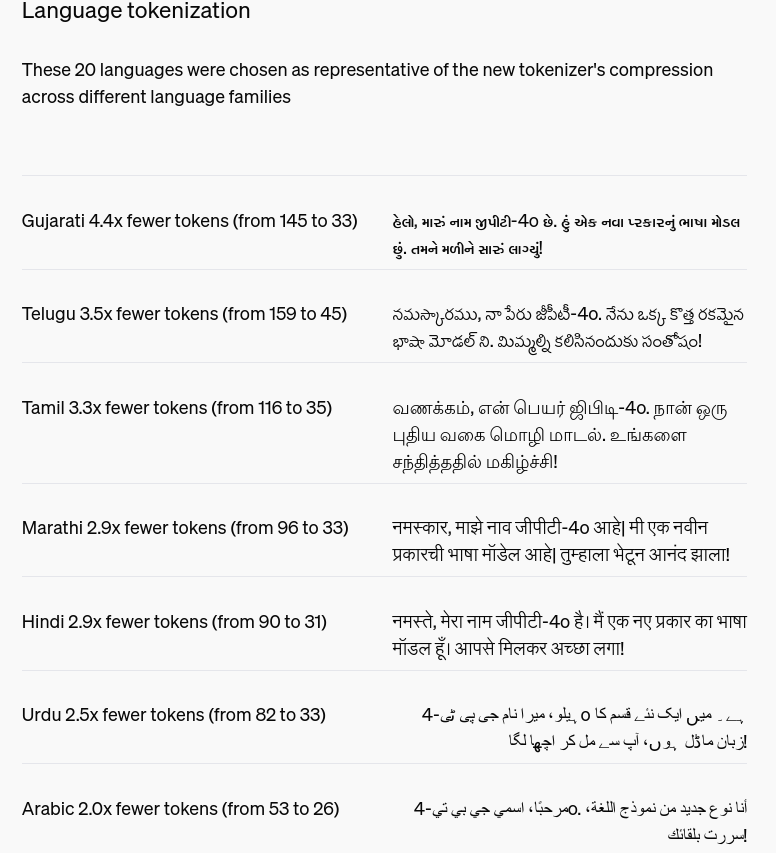

Expanded Multilingual Capabilities

GPT-4o understands and speaks many languages better than earlier models, so more people around the world can use it in their own language. For instance, it can translate a Spanish document into English more accurately and quickly.

Advanced Voice Mode and Real-Time Interaction

Soon, GPT-4 Omni will have a special voice mode where you can talk to it and it can see you through video. This could be great for getting help while doing something like cooking a new recipe or discussing a live sports game and getting explanations about what’s happening as you watch.

These updates make GPT-4o a powerful tool that’s easy to talk to and useful in everyday situations, whether you’re asking for quick translations, needing help with different languages, or wanting an instant response during conversations.

GPT-4o vs Other Models

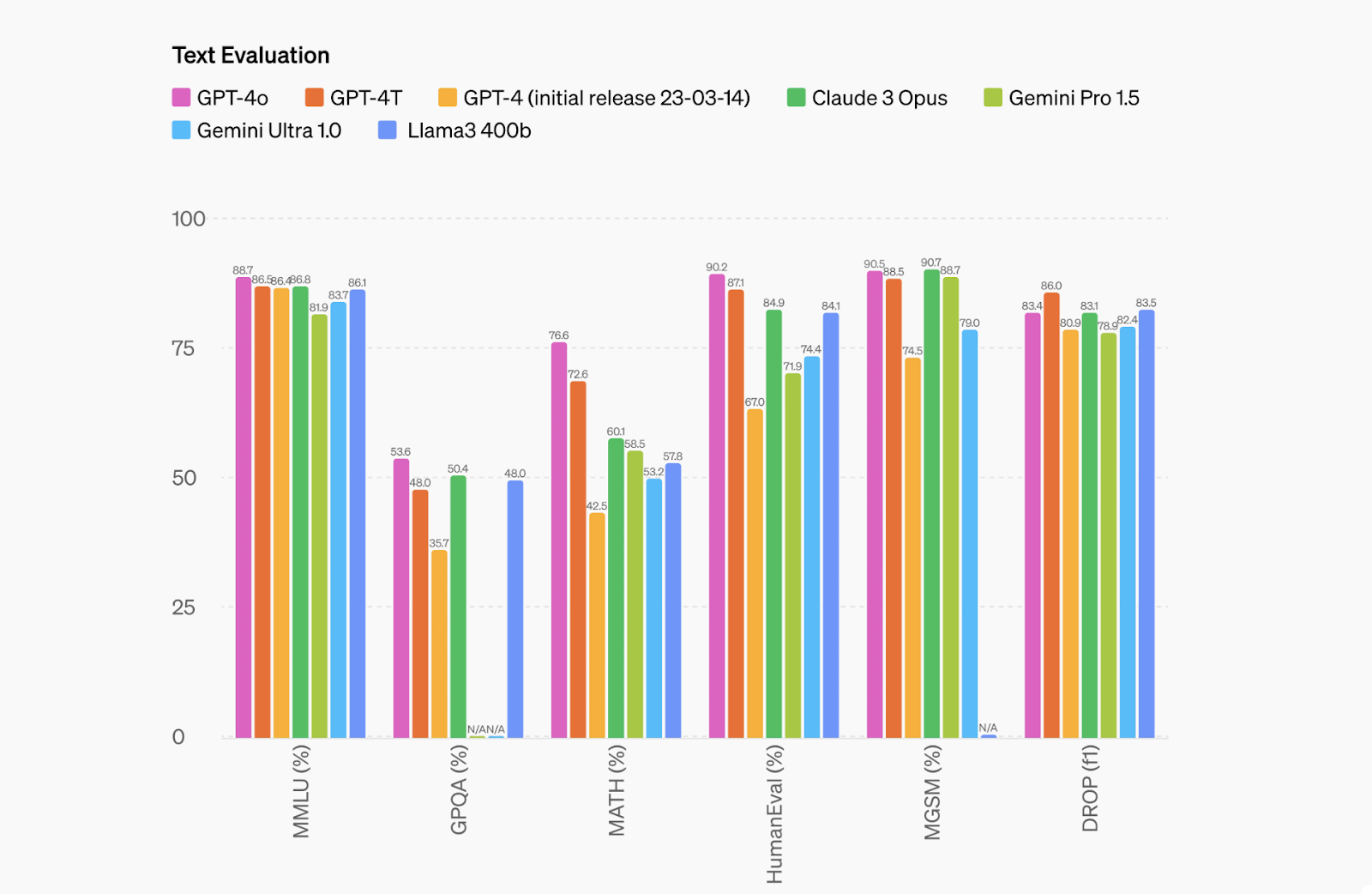

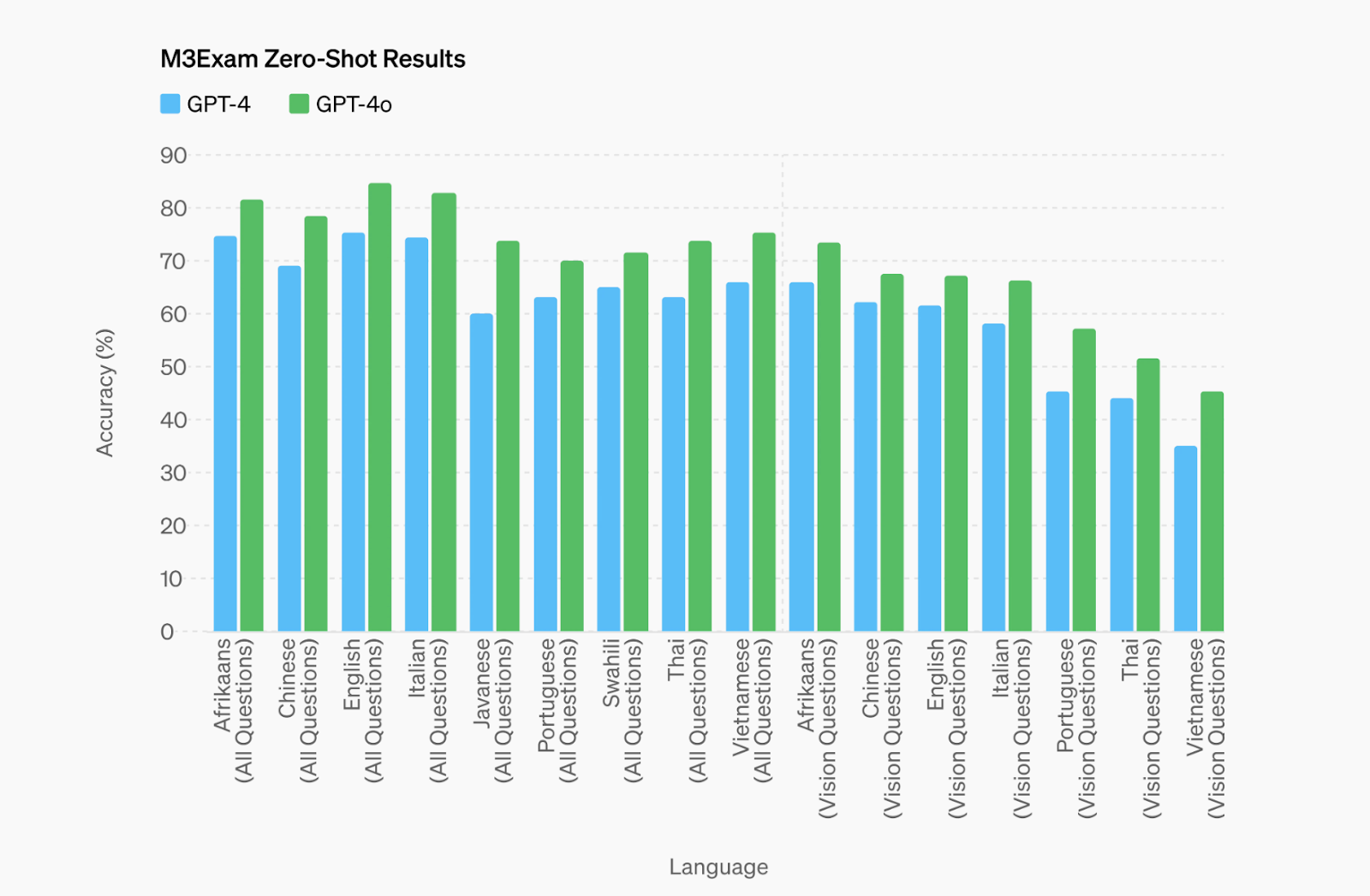

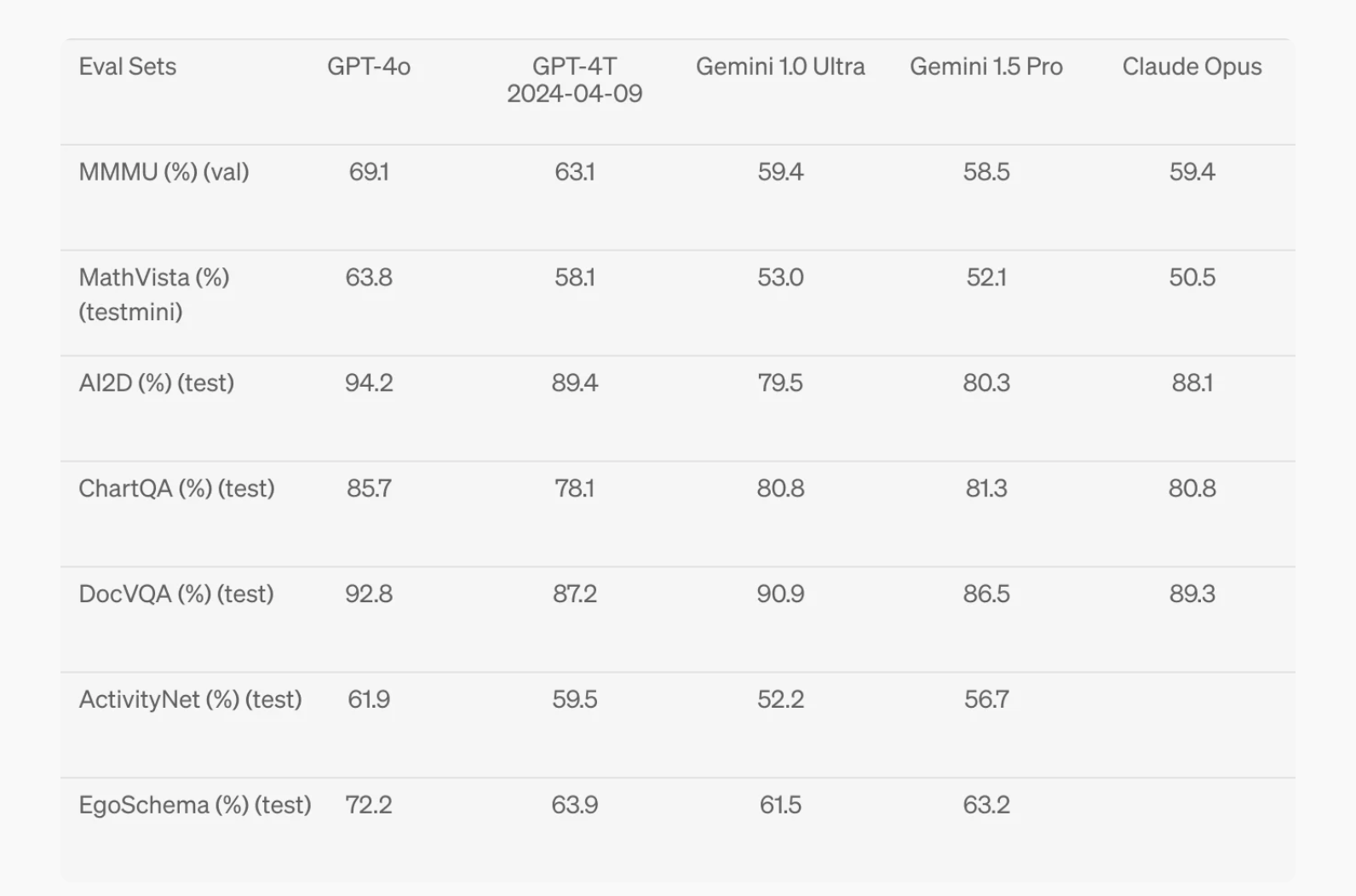

GPT-4 Omni achieves GPT-4 Turbo-level performance on standard text, reasoning, and coding benchmarks while setting new records in multilingual, audio, and vision capabilities. Let’s take a closer look:

- Text Evaluation: New high score of 87.2% on 5-shot MMLU (general knowledge questions).

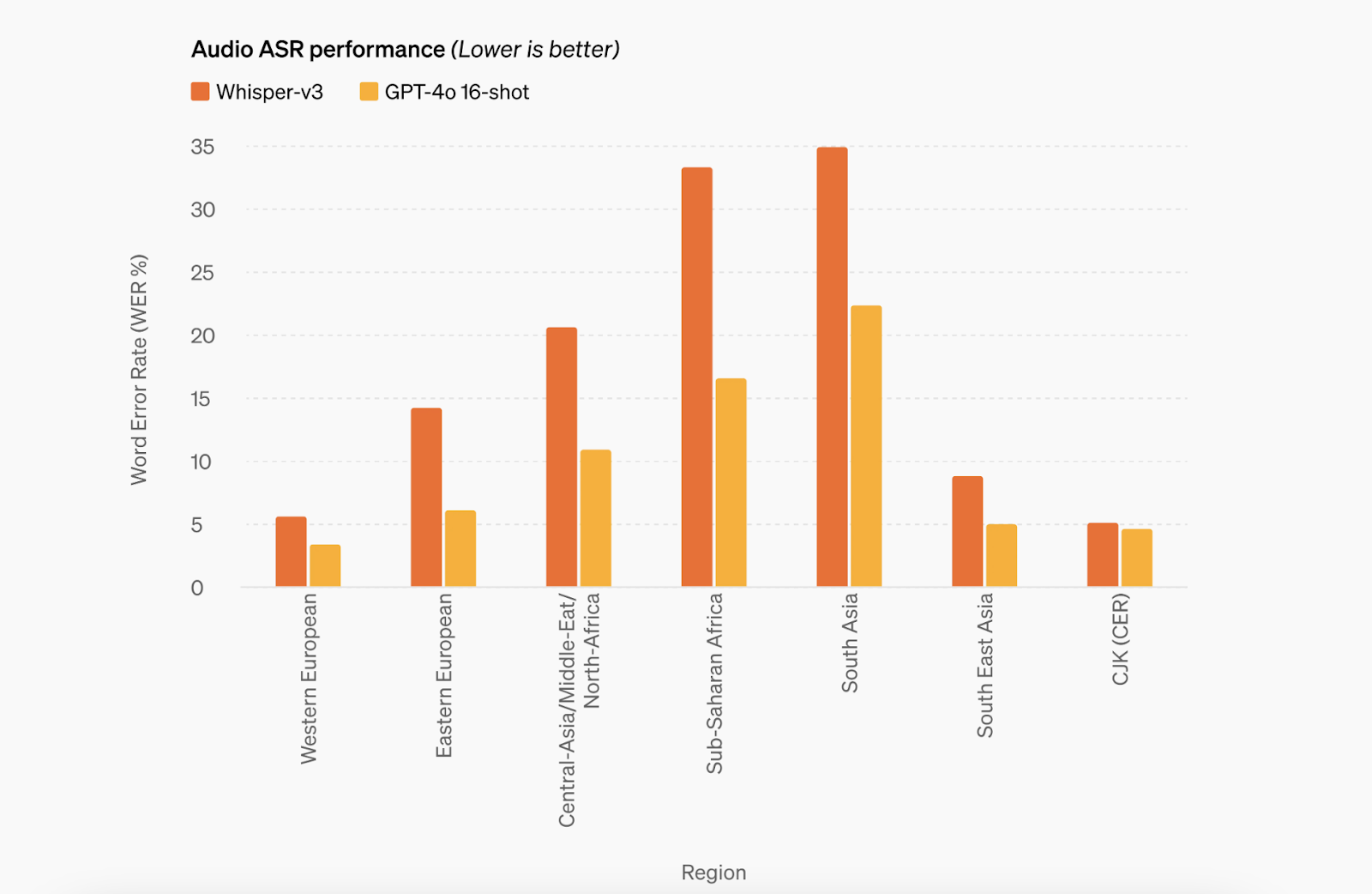

- Audio ASR Performance: Significant improvement over Whisper-v3 across all languages, particularly lower-resourced languages.

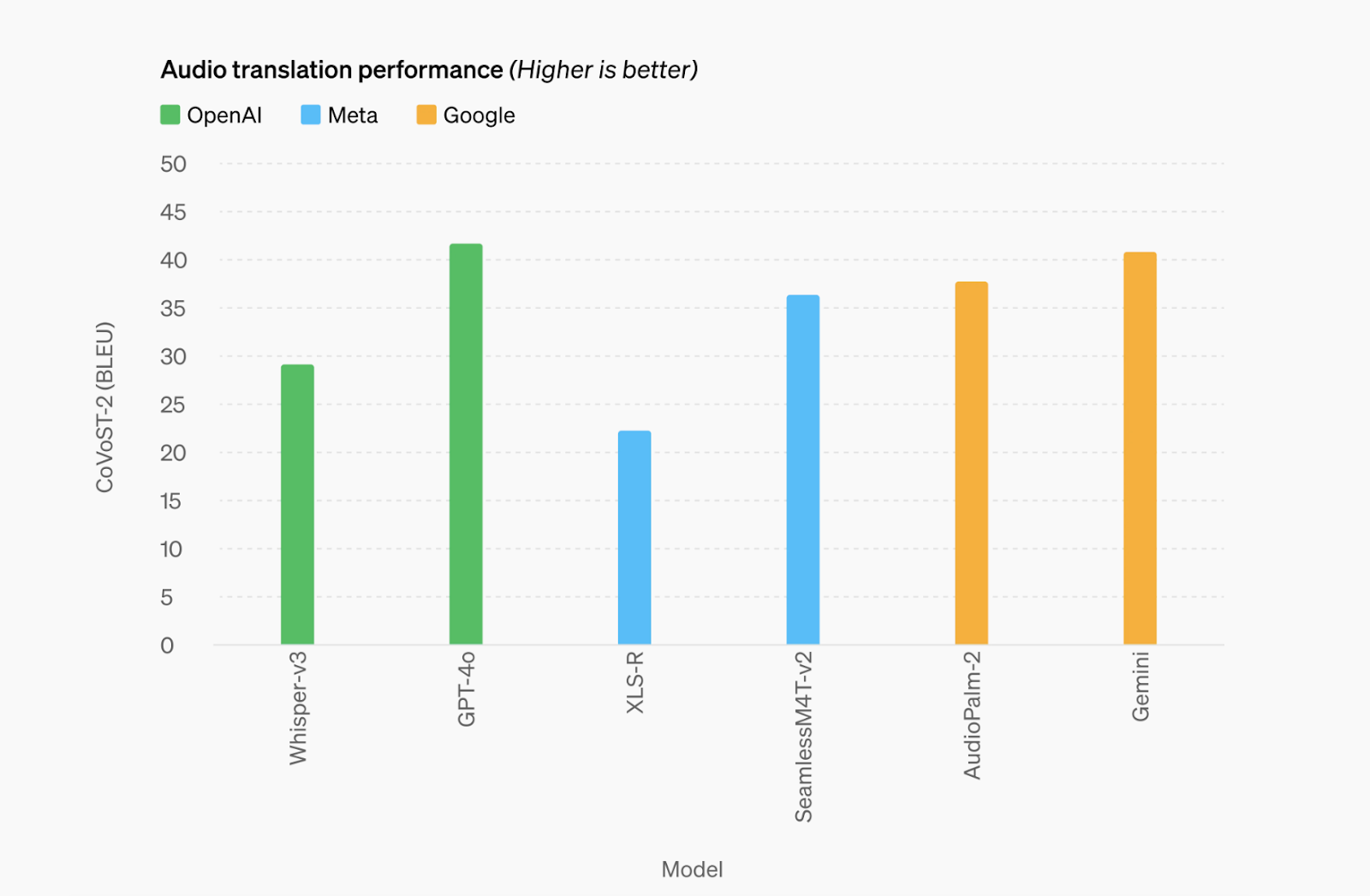

- Audio Translation: Sets a new state-of-the-art in speech translation and outperforms Whisper-v3 on the MLS benchmark.

- M3Exam Zero-Shot Results: Stronger than GPT-4 across all languages on this multilingual and vision evaluation.

- Vision Understanding: Achieves state-of-the-art performance on visual perception benchmarks.

GPT-4 Turbo vs. GPT-4 Omni

GPT-4o retains the remarkable intelligence of its predecessors but showcases enhanced speed, cost-effectiveness, and elevated rate limits compared to GPT-4 Turbo. Key differentiators include:

- Pricing: GPT-4o is notably 50% cheaper than GPT-4 Turbo, priced at $5 per million input tokens and $15 per million output tokens.

- Rate limits: GPT-4o boasts rate limits five times higher than GPT-4 Turbo, allowing up to 10 million tokens per minute.

- Speed: GPT-4o operates twice as fast as GPT-4 Turbo.

- Vision: GPT-4o exhibits superior vision capabilities compared to GPT-4 Turbo in evaluations.

- Multilingual: GPT-4o offers enhanced support for non-English languages over GPT-4 Turbo.
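The pricing and rate-limit figures above lend themselves to a quick back-of-the-envelope calculation. This Python sketch uses GPT-4o's launch prices from the list and assumes GPT-4 Turbo's launch list prices of $10 and $30 per million tokens (consistent with the 50% saving). It also assumes the top limit of 10 million tokens per minute; your actual limit depends on your usage tier. The workload numbers are hypothetical.

```python
# US dollars per 1 million tokens. GPT-4o figures come from the list above;
# GPT-4 Turbo figures are assumed launch list prices (2x GPT-4o).
PRICES_PER_MILLION = {
    "gpt-4o": {"input": 5.00, "output": 15.00},
    "gpt-4-turbo": {"input": 10.00, "output": 30.00},
}

# Assumed top-tier GPT-4o rate limit; lower usage tiers get less.
GPT4O_TOKENS_PER_MINUTE = 10_000_000


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of a workload, billing input and output tokens separately."""
    price = PRICES_PER_MILLION[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


def max_requests_per_minute(tokens_per_request: int,
                            tokens_per_minute: int = GPT4O_TOKENS_PER_MINUTE) -> int:
    """How many requests of a given size fit in one minute's token budget."""
    if tokens_per_request <= 0:
        raise ValueError("tokens_per_request must be positive")
    return tokens_per_minute // tokens_per_request


if __name__ == "__main__":
    # Hypothetical workload: 2M input tokens and 500K output tokens.
    for model in PRICES_PER_MILLION:
        print(f"{model}: ${estimate_cost(model, 2_000_000, 500_000):.2f}")
    # Hypothetical request size of 2,000 tokens (prompt plus completion).
    print(max_requests_per_minute(2_000))  # 5000
```

For that workload the arithmetic is 2 × $5 + 0.5 × $15 = $17.50 on GPT-4o versus 2 × $10 + 0.5 × $30 = $35.00 on GPT-4 Turbo, which is the 50% saving the list describes.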

GPT-4o currently has a context window of 128K tokens and a knowledge cut-off date of October 2023.
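A 128K context window means the prompt and the generated reply together must fit in about 128,000 tokens. The sketch below checks that shared budget. It takes token counts as given (in practice you would count them with a tokenizer such as OpenAI's `tiktoken` library), and the reply budget of 4,000 tokens in the example is a hypothetical value.

```python
# GPT-4o's context window: 128K tokens, i.e. 128,000.
CONTEXT_WINDOW = 128_000


def fits_in_context(prompt_tokens: int, max_output_tokens: int,
                    context_window: int = CONTEXT_WINDOW) -> bool:
    """True if the prompt plus the tokens reserved for the reply fit the window.

    Prompt tokens and generated tokens count against the same window.
    """
    if prompt_tokens < 0 or max_output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return prompt_tokens + max_output_tokens <= context_window


def max_prompt_tokens(max_output_tokens: int, context_window: int = CONTEXT_WINDOW) -> int:
    """Largest prompt that still leaves room for max_output_tokens of output."""
    return max(0, context_window - max_output_tokens)


if __name__ == "__main__":
    print(fits_in_context(120_000, 4_000))  # 124,000 <= 128,000 -> True
    print(fits_in_context(126_000, 4_000))  # 130,000 > 128,000 -> False
    print(max_prompt_tokens(4_000))         # 124000
```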

Crazy Use Cases of GPT-4 Omni

Here are some GPT-4o use cases demonstrated by the OpenAI team:

Interview Prep with GPT-4o

Rocky talks with GPT-4o about an upcoming interview at OpenAI for a software engineering role. Concerned about his appearance, he asks for its opinion. GPT-4o suggests his disheveled look could work in his favor and emphasizes the importance of enthusiasm during the interview. Despite some initial hesitation, Rocky decides to go with a bold outfit choice.


Harmonizing with two GPT-4os

In this demo, a person interacts with two GPT-4o voices: “ChatGPT,” with a deep, low, booming voice, and “O,” a French soprano with a high-pitched, excited voice. The person asks them to sing a song about San Francisco on May 10th, directing them to vary the speed, harmonize, and make it more dramatic. Finally, they thank ChatGPT and O for their performance.


Rock, Paper, Scissors with GPT-4o

Alex and Miana meet and discuss what game to play, eventually settling on rock-paper-scissors. They play a dramatic version, with Alex acting as a sports commentator. They tie twice before Miana wins the third round with scissors, beating Alex’s paper. It’s a light-hearted exchange full of fun and camaraderie.


Point and Learn Spanish with GPT-4o

This demo shows two people learning Spanish vocabulary with the help of GPT-4o. They ask about various objects, and GPT-4o responds with the Spanish names. However, there are a couple of errors, like “Manana Ando” instead of “manzana” for apple and “those poos” instead of “dos plumas” for two feathers. Overall, it’s a fun and interactive way to practice Spanish vocabulary.


Two GPT-4os Interacting and Singing

Two GPT-4o instances hold an interactive session in which one AI has a camera to see the world, while the other, lacking visual input, asks questions and directs the camera. They describe a scene featuring a person in a stylish setting with modern industrial decor and lighting. The dialogue captures the curiosity of the AI without vision about the surroundings, leading to a playful moment when another person enters the frame. Finally, the sighted AI is asked to sing about the experience, resulting in a whimsical song that captures the essence of the interaction and setting.


Math problems with GPT-4o

The scenario involves a parent and their son, Imran, testing new tutoring technology from OpenAI for math problems on Khan Academy. The AI tutor assists Imran in understanding a geometry problem involving a right triangle and the sine function. Through a series of questions and prompts, the AI guides Imran to identify the sides of the triangle relative to angle Alpha, recall the formula for finding the sine of an angle in a right triangle, and apply it to solve the problem. Imran successfully identifies the sides and correctly computes the sine of angle Alpha. The AI provides guidance and feedback throughout the process, emphasizing understanding and critical thinking.
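The rule the tutor steers Imran toward is the standard right-triangle definition of sine. The side lengths in the worked example (a 3-4-5 triangle) are hypothetical and are not the numbers from the demo:

```latex
\sin\alpha = \frac{\text{opposite}}{\text{hypotenuse}}
\qquad\text{e.g. } \text{opposite} = 3,\ \text{hypotenuse} = 5
\;\Rightarrow\; \sin\alpha = \frac{3}{5} = 0.6
```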


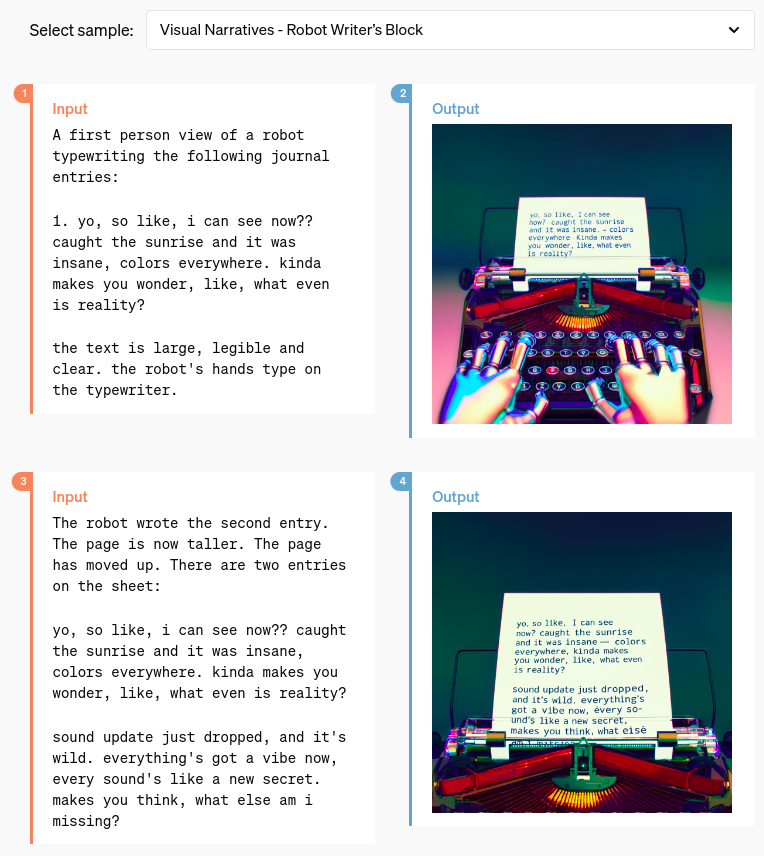

Moreover, you can explore the model’s capabilities, evaluations, language tokenization, and safety and limitations in OpenAI’s release announcement.

You can also select the sample explorations there to check GPT-4o’s capabilities.

GPT-4o prioritizes safety across modalities, using data filtering and post-training refinement techniques. It has been evaluated against safety criteria and scores no higher than Medium risk in cybersecurity, persuasion, or model autonomy. Extensive external testing and red teaming identified and addressed potential risks. Audio outputs will initially be limited to a selection of preset voices, with ongoing safety measures.


Conclusion

GPT-4o is a big step forward in how we use artificial intelligence. It combines text, voice, and pictures to make using AI more interesting and easy for everyone worldwide. Whether you’re just curious, a developer, or a big company, GPT-4 Omni is designed to help you do more with technology. OpenAI keeps making AI better and more accessible, and GPT-4o shows just how powerful and helpful AI can be in our everyday lives.

This model can solve math problems, supports many languages, helps with interview prep, can sing, and more! Do you think it will significantly cut the cost of education and training in the long run, making high-quality learning resources more accessible to people worldwide? Comment below!

Stay connected with us on Analytics Vidhya blogs to know about the latest updates in the world of AI.


- Source: https://www.analyticsvidhya.com/blog/2024/05/openai-flagship-model-gpt-omni/