Introduction

In the ever-evolving landscape of artificial intelligence, two key players have come together to break new ground: Generative AI and Reinforcement Learning. Together, these technologies have the potential to create self-improving AI systems, bringing us one step closer to machines that learn and adapt autonomously.

AI has made remarkable strides in recent years, from understanding human language to helping computers see and interpret the world around them. Generative AI models like GPT-3 and Reinforcement Learning algorithms such as Deep Q-Networks stand at the forefront of this progress. While each of these technologies has been transformative on its own, their convergence opens up new dimensions of AI capability and pushes the boundaries of what these systems can do.

Learning Objectives

- Gain an in-depth understanding of Reinforcement Learning, including its algorithms, reward structures, general framework, and state-action policies, and learn how agents make decisions.

- Investigate how Generative AI and Reinforcement Learning can be combined symbiotically to create more adaptive, intelligent systems, particularly in decision-making scenarios.

- Study and analyze various case studies demonstrating the efficacy and adaptability of integrating Generative AI with Reinforcement Learning in fields like healthcare, autonomous vehicles, and content creation.

- Familiarize yourself with Python libraries like TensorFlow, PyTorch, OpenAI’s Gym, and Google’s TF-Agents to gain practical coding experience in implementing these technologies.

This article was published as a part of the Data Science Blogathon.

Generative AI: Giving Machines Creativity

Generative AI models, like OpenAI’s GPT-3, are designed to generate content, whether it’s natural language, images, or even music. Language models such as GPT-3 work by predicting what comes next in a given context. They have been used for everything from automated content generation to chatbots that can mimic human conversation. The hallmark of Generative AI is its ability to create something novel from the patterns it learns.
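To make "predicting what comes next" concrete, here is a deliberately tiny sketch. It is not how GPT-3 works internally (GPT-3 is a large neural network over subword tokens); it is a word-level bigram counter trained on a made-up three-sentence corpus, which generates text by repeatedly choosing the most frequent next word.

```python
from collections import Counter, defaultdict

# A made-up toy corpus standing in for training data (illustrative assumption)
corpus = "the agent takes an action . the environment returns a reward . the agent learns ."
tokens = corpus.split()

# "Learn the patterns": count which word follows which (a bigram model)
next_counts = defaultdict(Counter)
for current, nxt in zip(tokens, tokens[1:]):
    next_counts[current][nxt] += 1

def generate(start, length):
    """Greedy generation: repeatedly append the most likely next word."""
    words = [start]
    for _ in range(length):
        followers = next_counts.get(words[-1])
        if not followers:  # no known continuation, stop early
            break
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

print(generate("the", 5))  # the agent takes an action .
```

Replacing the greedy choice with sampling in proportion to the counts would make the output vary between runs, which is closer to how generative models produce novel text.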

Reinforcement Learning: Teaching AI to Make Decisions

Reinforcement Learning (RL) is another groundbreaking field. It lets AI systems learn through trial and error, much as a human would. It has been used to teach AI to play complex games like Dota 2 and Go. RL agents receive rewards or penalties for their actions and use this feedback to improve over time. In a sense, RL gives AI a form of autonomy, allowing it to make decisions in dynamic environments.

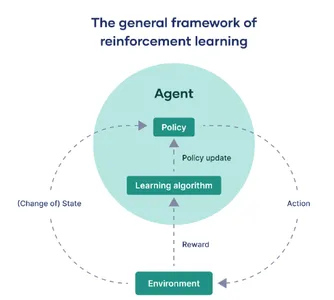

The Framework for Reinforcement Learning

In this section, we demystify the key components of the reinforcement learning framework:

The Acting Entity: The Agent

In the realm of Artificial Intelligence and machine learning, the term “agent” refers to the computational model tasked with interacting with a designated external environment. Its primary role is to make decisions and take actions to either accomplish a defined goal or accumulate maximum rewards over a sequence of steps.

The World Around: The Environment

The “environment” signifies the external context or system in which the agent operates. In essence, it comprises everything outside the agent that the agent interacts with but cannot directly control. This could range from a virtual game interface to a real-world setting, like a robot navigating through a maze. The environment is the ‘ground truth’ against which the agent’s performance is evaluated.

Navigating Transitions: State Changes

In reinforcement learning jargon, a “state,” denoted s, describes the situation the agent is in at a given moment while interacting with the environment. Transitions between states are pivotal: they shape the agent’s observations and heavily influence its subsequent decisions.

The Decision Rulebook: Policy

The term “policy” encapsulates the agent’s strategy for selecting actions corresponding to different states. It serves as a function mapping from the domain of states to a set of actions, defining the agent’s modus operandi in its quest to achieve its goals.
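A policy can be written as a function π that maps a state s either to a single action a (a deterministic policy) or to a probability distribution π(a | s) over actions (a stochastic policy). The sketch below expresses both as plain Python dictionaries for a hypothetical robot-in-a-maze example; the state and action names are illustrative assumptions, not part of any library.

```python
import random

# Deterministic policy: each (hypothetical) state maps to exactly one action
deterministic_policy = {
    "at_start": "forward",
    "in_corridor": "forward",
    "at_junction": "left",
}

# Stochastic policy: each state maps to a probability distribution over actions
stochastic_policy = {
    "at_start": {"forward": 1.0},
    "in_corridor": {"forward": 0.8, "left": 0.1, "right": 0.1},
    "at_junction": {"left": 0.5, "right": 0.5},
}

def act(policy, state, rng=random):
    """Select an action for `state` under either kind of policy."""
    choice = policy[state]
    if isinstance(choice, str):  # deterministic: the action itself
        return choice
    names, probs = zip(*choice.items())  # stochastic: sample by probability
    return rng.choices(names, weights=probs, k=1)[0]

print(act(deterministic_policy, "at_junction"))
print(act(stochastic_policy, "in_corridor"))
```

A policy update, in these terms, simply changes the entries of the mapping (or the parameters of a network that computes it).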

Refinement Over Time: Policy Updates

“Policy update” refers to the iterative process of tweaking the agent’s existing policy. This is a dynamic aspect of reinforcement learning, allowing the agent to optimize its behavior based on historical rewards or newly acquired experiences. It is facilitated through specialized algorithms that recalibrate the agent’s strategy.

The Engine of Adaptation: Learning Algorithms

Learning algorithms provide the mathematical framework that empowers the agent to refine its policy. Broadly, they fall into two categories: model-free methods, which learn directly from interactions with the environment, and model-based techniques, which use a model of the environment (given or learned) to simulate outcomes and plan.
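To illustrate the model-based side, the sketch below runs value iteration, one standard planning method chosen here for illustration, on a hypothetical five-cell corridor whose transition and reward model is assumed to be known in advance. Because the model is available, the agent can compute how valuable each position is without any trial and error; a model-free method would instead have to estimate these values from transitions it actually experiences.

```python
def value_iteration(n_states=5, gamma=0.9, tol=1e-9):
    """Model-based planning: compute state values from a known model."""
    actions = [-1, 1]      # move left or right
    goal = n_states - 1    # reaching the last cell ends the episode

    def model(s, a):
        """Assumed, known model of the corridor: returns (next_state, reward)."""
        s2 = min(max(s + a, 0), goal)
        return s2, (1.0 if s2 == goal else 0.0)

    V = [0.0] * n_states   # V[goal] stays 0 because the goal is terminal
    while True:
        delta = 0.0
        for s in range(goal):
            # Bellman optimality backup: best one-step reward plus discounted next value
            best = max(r + gamma * V[s2] for s2, r in (model(s, a) for a in actions))
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            return V

print([round(v, 3) for v in value_iteration()])  # [0.729, 0.81, 0.9, 1.0, 0.0]
```

Values shrink by a factor of γ = 0.9 for each extra step away from the goal, so a greedy policy over these values always moves right.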

The Measure of Success: Rewards

Lastly, “rewards” are quantifiable metrics, dispensed by the environment, that gauge the immediate efficacy of an action performed by the agent. The overarching aim of the agent is to maximize the sum of these rewards over a period, which effectively serves as its performance metric.
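In practice, "the sum of these rewards over a period" is often computed as a discounted return, G = r_1 + γ·r_2 + γ²·r_3 + ..., where the discount factor γ (between 0 and 1) makes distant rewards count less. Discounting is a common convention assumed in this sketch, not something the text above specifies; with γ = 1 it reduces to the plain sum.

```python
def discounted_return(rewards, gamma=0.99):
    """Return r_1 + gamma*r_2 + gamma**2*r_3 + ... for a finite list of rewards."""
    total = 0.0
    # Work backwards so each step is G_t = r_t + gamma * G_{t+1}
    for r in reversed(rewards):
        total = r + gamma * total
    return total

print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))  # 1.75 (= 1 + 0.5 + 0.25)
```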

In a nutshell, reinforcement learning can be distilled into a continuous interaction between the agent and its environment. The agent moves through states, makes decisions according to its policy, and receives rewards as feedback. Learning algorithms iteratively fine-tune this policy, steering the agent toward better behavior within the constraints of its environment.
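The whole cycle fits in a few dozen lines of plain Python. In this sketch the corridor environment is a hypothetical toy, and tabular Q-learning with an epsilon-greedy policy is just one model-free learning algorithm, picked for illustration: the agent observes a state, chooses an action with its policy, receives a reward from the environment, and updates its estimates.

```python
import random

class Corridor:
    """Hypothetical toy environment: cells 0..4; reaching cell 4 ends the episode with reward 1."""

    def __init__(self, length=5):
        self.length = length
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):
        """Apply action -1 (left) or +1 (right); return (next_state, reward, done)."""
        self.pos = min(max(self.pos + action, 0), self.length - 1)
        done = self.pos == self.length - 1
        return self.pos, (1.0 if done else 0.0), done


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    env = Corridor()
    actions = [-1, 1]
    Q = {(s, a): 0.0 for s in range(env.length) for a in actions}
    for _ in range(episodes):
        state, done = env.reset(), False
        while not done:
            # Policy: epsilon-greedy over current Q estimates, ties broken at random
            if rng.random() < epsilon:
                action = rng.choice(actions)
            else:
                best = max(Q[(state, a)] for a in actions)
                action = rng.choice([a for a in actions if Q[(state, a)] == best])
            # Environment: returns the next state and the reward
            next_state, reward, done = env.step(action)
            # Learning algorithm: Q-learning update (no future value after the goal)
            future = 0.0 if done else max(Q[(next_state, a)] for a in actions)
            Q[(state, action)] += alpha * (reward + gamma * future - Q[(state, action)])
            state = next_state
    return Q


Q = train()
greedy_policy = {s: max([-1, 1], key=lambda a: Q[(s, a)]) for s in range(4)}
print(greedy_policy)
```

After training, the greedy policy should move right (+1) from every non-goal cell, since that is the shortest path to the reward.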

The Synergy: Generative AI Meets Reinforcement Learning

The real magic happens when Generative AI meets Reinforcement Learning. AI researchers have been experimenting with combining these two domains to create systems that not only generate content but also learn from user feedback to improve their output.

- Initial Content Generation: Generative AI, like GPT-3, generates content based on a given input or context. This content could be anything from articles to art.

- User Feedback Loop: Once the content is generated and presented to the user, any feedback given becomes a valuable asset for training the AI system further.

- Reinforcement Learning (RL) Mechanism: Utilizing this user feedback, Reinforcement Learning algorithms step in to evaluate what parts of the content were appreciated and which parts need refinement.

- Adaptive Content Generation: Informed by this analysis, the Generative AI then adapts its internal models to better align with user preferences. It iteratively refines its output, incorporating lessons learned from each interaction.

- Fusion of Technologies: The combination of Generative AI and Reinforcement Learning creates a dynamic ecosystem where generated content serves as a playground for the RL agent. User feedback functions as a reward signal, directing the AI on how to improve.

This combination of Generative AI and Reinforcement Learning yields a highly adaptive system that can learn from real-world feedback, such as human feedback, producing outcomes that are more effective and better aligned with users' needs.

Code Snippet Synergy

Let’s understand the synergy between Generative AI and Reinforcement Learning:

```python
import torch
import torch.nn as nn
import torch.optim as optim

# Simulated Generative AI model (e.g., a text generator)
class GenerativeAI(nn.Module):
    def __init__(self):
        super().__init__()
        # Model layers
        self.fc = nn.Linear(10, 1)  # Example layer: predicts the mean of the generated value

    def forward(self, input):
        mean = self.fc(input)
        # Treat generation as sampling from a Gaussian so each output has a log-probability
        return torch.distributions.Normal(mean, 1.0)

# Simulated User Feedback
def user_feedback(content):
    return torch.rand(1)  # Mock user feedback in [0, 1)

# Reinforcement Learning Update (REINFORCE): scale the log-probability of the
# generated content by its reward, so well-rated content becomes more likely.
# (Backpropagating -log(reward) alone fails: the reward has no gradient path to the model.)
def rl_update(optimizer, dist, content, reward):
    loss = -(dist.log_prob(content) * reward).sum()
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

# Initialize model and optimizer
gen_model = GenerativeAI()
optimizer = optim.Adam(gen_model.parameters(), lr=0.001)

# Iterative improvement
for epoch in range(100):
    dist = gen_model(torch.randn(1, 10))  # Mock input
    content = dist.sample()  # Generate content, for this example, a number
    reward = user_feedback(content)
    rl_update(optimizer, dist, content, reward)
```

Code explanation

- Generative AI Model: It’s like a machine that tries to generate content, like a text generator. In this case, it’s designed to take some input and produce an output.

- User Feedback: Imagine users providing feedback on the content the AI generates. This feedback helps the AI learn what’s good or bad. In this code, we use random feedback as an example.

- Reinforcement Learning Update: After receiving feedback, the AI updates its parameters so that content that earned higher rewards becomes more likely.

- Iterative Improvement: The AI goes through many cycles (100 in this code) of generating content, receiving feedback, and learning from it. With real feedback, repeating this cycle gradually steers the model toward the content users prefer.

This code defines a basic Generative AI model and a feedback loop. The AI generates content, receives feedback, and updates itself over 100 iterations. Because the feedback here is random, the loop demonstrates the mechanics of the update rather than producing a genuinely better model.

In a real-world application, you would use a more sophisticated model and more nuanced user feedback. However, this code snippet captures the essence of how Generative AI and Reinforcement Learning can harmonize to build a system that not only generates content but also learns to improve it based on feedback.
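With random feedback there is nothing for the model to learn. To see the loop actually change a generator, the sketch below swaps in a hypothetical simulated user who prefers content close to 3.0, and reduces the "generator" to a single learnable mean of a Gaussian. The update is the REINFORCE policy-gradient rule with an average-reward baseline, written in plain Python so the gradient is explicit; it is an illustrative assumption, not the method of any particular production system.

```python
import math
import random

random.seed(0)

TARGET = 3.0  # hypothetical simulated user: content near 3.0 gets the best feedback

def simulated_feedback(content):
    """Reward in (0, 1]: the closer the content is to TARGET, the higher the reward."""
    return math.exp(-(content - TARGET) ** 2)

mean = 0.0    # the generator's only learnable parameter
sigma = 1.0   # fixed spread of the Gaussian the generator samples from
lr = 0.5      # learning rate for gradient ascent

for step in range(300):
    samples = [random.gauss(mean, sigma) for _ in range(32)]  # a batch of generated "content"
    rewards = [simulated_feedback(x) for x in samples]
    baseline = sum(rewards) / len(rewards)  # average reward reduces gradient variance
    # REINFORCE: d/d(mean) of log N(x; mean, sigma) is (x - mean) / sigma**2
    grad = sum((r - baseline) * (x - mean) / sigma ** 2
               for x, r in zip(samples, rewards)) / len(samples)
    mean += lr * grad  # gradient ascent on expected reward

print(f"learned mean: {mean:.2f}")
```

The learned mean should settle near 3.0. With feedback that ignores the content, as in the random mock, the expected gradient is zero and the parameter would only drift.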

Real-World Applications

The synergy of Generative AI and Reinforcement Learning opens up a wide range of possibilities. Let us take a look at some real-world applications:

Content Generation

Content created by AI can become increasingly personalized, aligning with the tastes and preferences of individual users.

Consider a scenario where an RL agent uses a language model to generate a personalized news feed; the example below uses the openly available GPT-2 as a stand-in for GPT-3. After reading each article, the user provides feedback. Here, let’s assume that feedback is simply ‘like’ or ‘dislike’, transformed into a numerical reward.

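```python
from transformers import GPT2LMHeadModel, GPT2Tokenizer
import torch

# Initialize GPT-2 model and tokenizer
tokenizer = GPT2Tokenizer.from_pretrained('gpt2')
model = GPT2LMHeadModel.from_pretrained('gpt2')

# RL update function (REINFORCE-style): weight the log-likelihood of the
# generated tokens by the feedback, so liked articles become more likely.
def update_model(output_ids, prompt_len, reward, optimizer):
    model.train()
    logits = model(output_ids).logits[:, :-1, :]  # position t predicts token t+1
    targets = output_ids[:, 1:]
    token_log_probs = torch.log_softmax(logits, dim=-1).gather(-1, targets.unsqueeze(-1)).squeeze(-1)
    # Only the generated tokens (not the prompt) are the agent's actions
    seq_log_prob = token_log_probs[:, prompt_len - 1:].sum()
    advantage = reward - 0.5  # simple fixed baseline: a dislike (0) pushes probability down
    loss = -advantage * seq_log_prob
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

# Initialize optimizer (a small learning rate avoids wrecking the pretrained weights)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-5)

# Example RL loop
input_text = "Generate news article about technology."
input_ids = tokenizer.encode(input_text, return_tensors='pt')
for epoch in range(10):
    model.eval()
    with torch.no_grad():
        output = model.generate(input_ids, do_sample=True, max_new_tokens=60,
                                pad_token_id=tokenizer.eos_token_id)
    article = tokenizer.decode(output[0], skip_special_tokens=True)
    print(f"Generated Article: {article}")
    # Get user feedback (1 for like, 0 for dislike)
    reward = float(input("Did you like the article? (1 for yes, 0 for no): "))
    update_model(output, input_ids.shape[1], reward, optimizer)
```

Art and Music

AI can generate art and music that resonates with human emotions, evolving its style based on audience feedback. An RL agent could optimize the parameters of a neural style transfer algorithm based on feedback to create art or music that better resonates with human emotions.

```python
import random

# Hypothetical helpers assumed to exist: style_transfer(content, style, style_weight),
# show_image(img), plus content_image and style_image.
# Each candidate style weight is an action; the agent learns which one users like.
style_weights = [1e3, 1e4, 1e5]
value = {w: 0.0 for w in style_weights}  # average reward per action
counts = {w: 0 for w in style_weights}

for epoch in range(10):
    # Epsilon-greedy: usually exploit the best-rated weight, sometimes explore
    w = random.choice(style_weights) if random.random() < 0.2 else max(value, key=value.get)
    new_art = style_transfer(content_image, style_image, style_weight=w)
    show_image(new_art)
    reward = float(input("Did you like the art? (1 for yes, 0 for no): "))
    counts[w] += 1
    value[w] += (reward - value[w]) / counts[w]  # incremental mean of rewards
```

Conversational AI

Chatbots and virtual assistants can engage in more natural and context-aware conversations, making them incredibly useful in customer service. Chatbots can employ reinforcement learning to optimize their conversational models based on the conversation history and user feedback.

```python
# Assuming a hypothetical function chatbot_response(text, model) exists that returns
# (reply_text, output_ids, prompt_len); model, optimizer and update_model are the
# ones defined in the news-feed example above.
for epoch in range(10):
    user_input = input("You: ")
    bot_response, output_ids, prompt_len = chatbot_response(user_input, model)
    print(f"Bot: {bot_response}")
    reward = float(input("Was the response helpful? (1 for yes, 0 for no): "))
    update_model(output_ids, prompt_len, reward, optimizer)
```

Autonomous Vehicles

AI systems in autonomous vehicles can learn from real-world driving experiences, enhancing safety and efficiency. An RL agent in an autonomous vehicle could adjust its path in real-time based on various rewards like fuel efficiency, time, or safety.

```python
import torch

# Assuming hypothetical helpers exist: get_current_state() returns a state tensor
# (e.g., traffic, fuel), drive_car(action) executes an action, and get_reward(state, action)
# scores it (e.g., fuel saved, time taken, safety). policy is a torch.nn.Module mapping
# a state to action logits, and optimizer was built over policy.parameters().
for epoch in range(10):
    state = get_current_state()
    dist = torch.distributions.Categorical(logits=policy(state))
    action = dist.sample()
    drive_car(action)
    reward = get_reward(state, action)
    # REINFORCE: make actions that earned high rewards more likely in this state
    loss = -dist.log_prob(action) * reward
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
```

These code snippets are illustrative and simplified. They show how Generative AI and RL can collaborate to improve the user experience across various domains: in each one, the agent iteratively improves its policy based on the rewards it receives.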

Case Studies

Healthcare Diagnosis and Treatment Optimization

- Problem: In healthcare, accurate and timely diagnosis is crucial. It’s often challenging for medical practitioners to keep up with vast amounts of medical literature and evolving best practices.

- Solution: Language models like BERT can extract insights from medical texts. An RL agent can optimize treatment plans based on historical patient data and emerging research.

- Case Study: IBM’s Watson for Oncology was designed to assist oncologists in making treatment decisions by analyzing a patient’s medical records against a vast body of medical literature.

Retail and Personalized Shopping

- Problem: In e-commerce, personalizing shopping experiences for customers is essential for increasing sales.

- Solution: Generative AI, like GPT-3, can generate product descriptions, reviews, and recommendations. An RL agent can optimize these recommendations based on user interactions and feedback.

- Case Study: Amazon utilizes Generative AI for generating product descriptions and uses RL to optimize product recommendations. This has led to a significant increase in sales and customer satisfaction.

Content Creation and Marketing

- Problem: Marketers need to create engaging content at scale. It’s challenging to know what will resonate with audiences.

- Solution: Generative AI, such as GPT-2, can generate blog posts, social media content, and advertising copy. RL can optimize content generation based on engagement metrics.

- Case Study: HubSpot, a marketing platform, uses Generative AI to assist in content creation. They employ RL to fine-tune content strategies based on user engagement, resulting in more effective marketing campaigns.

Video Game Development

- Problem: Creating non-player characters (NPCs) with realistic behaviors and game environments that adapt to player actions is complex and time-consuming.

- Solution: Generative AI can design game levels, characters, and dialog. RL agents can optimize NPC behavior based on player interactions.

- Case Study: In the game industry, studios like Ubisoft use Generative AI for world-building and RL for NPC AI. This approach has resulted in more dynamic and engaging gameplay experiences.

Financial Trading

- Problem: In the highly competitive world of financial trading, finding profitable strategies can be challenging.

- Solution: Generative AI can assist in data analysis and strategy generation. RL agents can learn and optimize trading strategies based on market data and user-defined goals.

- Case Study: Hedge funds like Renaissance Technologies leverage Generative AI and RL to discover profitable trading algorithms. This has led to substantial returns on investments.

These case studies demonstrate how the combination of Generative AI and Reinforcement Learning is transforming various industries by automating tasks, personalizing experiences, and optimizing decision-making processes.

Ethical Considerations

Fairness in AI

Ensuring fairness in AI systems is critical to prevent biases or discrimination. AI models must be trained on diverse and representative datasets. Detecting and mitigating bias in AI models is an ongoing challenge. This is particularly important in domains such as lending or hiring, where biased algorithms can have serious real-world consequences.

Accountability and Responsibility

As AI systems continue to advance, accountability and responsibility become central. Developers, organizations, and regulators must define clear lines of responsibility. Ethical guidelines and standards need to be established to hold individuals and organizations accountable for the decisions and actions of AI systems. In healthcare, for instance, accountability is paramount to ensure patient safety and trust in AI-assisted diagnosis.

Transparency and Explainability

The “black box” nature of some AI models is a concern. To ensure ethical and responsible AI, it’s vital that AI decision-making processes are transparent and understandable. Researchers and engineers should work on developing AI models that are explainable and provide insight into why a specific decision was made. This is crucial for areas like criminal justice, where decisions made by AI systems can significantly impact individuals’ lives.

Data Privacy and Consent

Respecting data privacy is a cornerstone of ethical AI. AI systems often rely on user data, and obtaining informed consent for data usage is paramount. Users should have control over their data, and there must be mechanisms in place to safeguard sensitive information. This issue is particularly important in AI-driven personalization systems, like recommendation engines and virtual assistants.

Harm Mitigation

AI systems should be designed to prevent the creation of harmful, misleading, or false information. This is particularly relevant in the realm of content generation. Algorithms should not generate content that promotes hate speech, misinformation, or harmful behavior. Stricter guidelines and monitoring are essential in platforms where user-generated content is prevalent.

Human Oversight and Ethical Expertise

Human oversight remains crucial. Even as AI becomes more autonomous, human experts in various fields should work in tandem with AI. They can make ethical judgments, fine-tune AI systems, and intervene when necessary. For example, in autonomous vehicles, a human safety driver must be ready to take control in complex or unforeseen situations.

These ethical considerations are at the forefront of AI development and deployment, ensuring that AI technologies benefit society while upholding principles of fairness, accountability, and transparency. Addressing these issues is pivotal for the responsible and ethical integration of AI into our lives.

Conclusion

We are witnessing an exciting era where Generative AI and Reinforcement Learning are beginning to coalesce. This convergence is carving a path toward self-improving AI systems, capable of both innovative creation and effective decision-making. However, with great power comes great responsibility. The rapid advancements in AI bring along ethical considerations that are crucial for its responsible deployment. As we embark on this journey of creating AI that not only comprehends but also learns and adapts, we open up limitless possibilities for innovation. Nonetheless, it is vital to move forward with ethical integrity, ensuring that the technology we create serves as a force for good, benefiting humanity as a whole.

Key Takeaways

- Generative AI and Reinforcement Learning (RL) are converging to create self-improving systems, with the former focused on content generation and the latter on decision-making through trial and error.

- In RL, key components include the agent, which makes decisions; the environment, which the agent interacts with; and rewards, which serve as performance metrics. Policies and learning algorithms enable the agent to improve over time.

- The union of Generative AI and RL allows for systems that generate content and adapt based on user feedback, thereby improving their output iteratively.

- A Python code snippet illustrates this synergy by combining a simulated Generative AI model for content generation with RL to optimize based on user feedback.

- Real-world applications are vast, including personalized content generation, art and music creation, conversational AI, and even autonomous vehicles.

- These combined technologies could revolutionize how AI interacts with and adapts to human needs and preferences, leading to more personalized and effective solutions.

Frequently Asked Questions

A. Combining Generative AI and Reinforcement Learning creates intelligent systems that not only generate new data but also optimize its effectiveness. This synergetic relationship broadens the scope and efficiency of AI applications, making them more versatile and adaptive.

A. Reinforcement Learning acts as the system’s decision-making core. By employing a feedback loop centered around rewards, it evaluates and adapts the generated content from the Generative AI module. This iterative process optimizes the data generation strategy over time.

A. Practical applications are broad-ranging. In healthcare, this technology can dynamically create and refine treatment plans using real-time patient data. Meanwhile, in the automotive sector, it could enable self-driving cars to adjust their routing in real-time in response to fluctuating road conditions.

A. Python remains the go-to language due to its comprehensive ecosystem. Libraries like TensorFlow and PyTorch are frequently used for Generative AI tasks, while OpenAI’s Gym and Google’s TF-Agents are typical choices for Reinforcement Learning implementations.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.

- Source: https://www.analyticsvidhya.com/blog/2023/10/generative-ai-and-reinforcement-learning/