Power is a ubiquitous concern, and it is impossible to optimize a system’s energy consumption without considering the system as a whole. Tremendous strides have been made in the optimization of a hardware implementation, but that is no longer enough. The complete system must be optimized.

There are far-reaching implications to this, some of which are driving the path toward domain-specific computing. Shift left plays a role, but more importantly, it means all parties that contribute to the total energy consumption for a defined task must work together to minimize it.

Energy is fast becoming a first-class consideration. “As energy efficiency becomes a critical concern across all computing domains, architects are often requested to consider the energy cost of algorithms for both hardware and software design,” says Guillaume Boillet, senior director of product management and strategic marketing for Arteris. “The focus is shifting from optimizing solely for computational efficiency (speed, throughput, latency) to also optimizing for energy efficiency (joules per operation). This requires considering factors such as the number of memory accesses, the parallelizability of the computation, and the utilization of specialized hardware accelerators that may offer more energy-efficient computation for certain tasks.”
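
To make the joules-per-operation metric concrete: the energy of a task is its power integrated over the task's duration $T$, and efficiency is that energy divided by the number of operations performed. The second line is a simplified first-order decomposition assumed here for illustration, not a model from Arteris. $N_{\text{ops}}$ and $N_{\text{mem}}$ count operations and memory accesses, $e_{\text{op}}$ and $e_{\text{mem}}$ are generic per-event energies, and $P_{\text{static}}$ stands for leakage.

```latex
\begin{aligned}
E_{\text{task}} &= \int_{0}^{T} P(t)\,dt, \qquad
\eta = \frac{E_{\text{task}}}{N_{\text{ops}}} \quad [\text{J/op}] \\
E_{\text{task}} &\approx N_{\text{ops}}\,e_{\text{op}} + N_{\text{mem}}\,e_{\text{mem}} + P_{\text{static}}\,T
\end{aligned}
```

An off-chip memory access typically costs far more energy than an arithmetic operation. As a result, a change that raises throughput can still raise energy per operation if it adds memory traffic.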

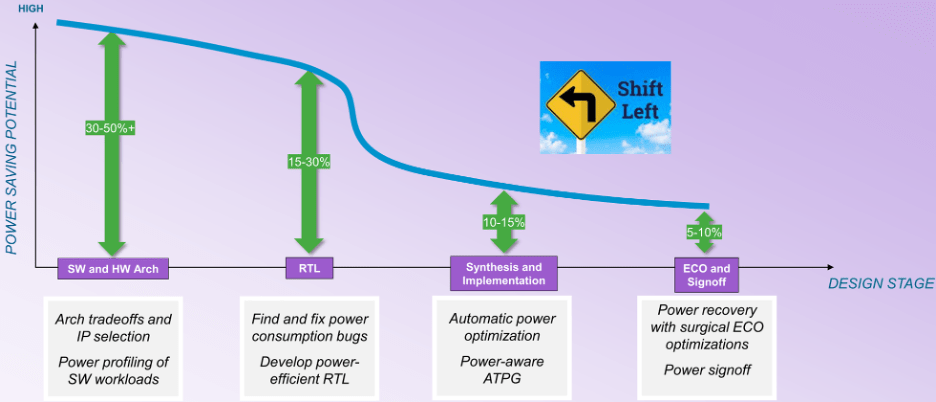

This moves the focus from hardware implementation to architectural analysis of both the hardware and software. “During the later stages of the design flow, the opportunities for optimization diminish,” says William Ruby, product management director within the EDA Group of Synopsys. “You may have more opportunities for automatic optimization, but you’re constrained to a smaller percentage improvement. When considering the curve for potential savings (see figure 1), it is not a smooth curve from architecture to sign-off. There’s an inflection point at synthesis. Before the design is mapped to an implementation, you have many more degrees of freedom, but after this inflection point, things drastically drop off.”

Fig.1: Opportunities for power saving during design stages. Source: Synopsys

The big barrier is that before the inflection point, power depends heavily on activity, and that makes automatic optimization a lot more difficult. “During RTL development, dynamic vector-driven checks can be used to uncover wastage and perform logic restructuring, clock gating, operator gating, and other techniques to reduce it,” says Qazi Ahmed, principal product manager at Siemens EDA. “It is also important to understand that power is use-case sensitive and has to be profiled with actual software-driven workloads to make sure all possible scenarios are covered. This is especially true in the context of the full SoC, where the power envelope could be completely different from what synthetic vector-driven analysis at the IP-level might show.”
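
The textbook first-order model of CMOS dynamic power shows why this is so. Here $\alpha$ is the switching-activity factor, $C_{L}$ the switched capacitance, $V_{DD}$ the supply voltage and $f_{\text{clk}}$ the clock frequency. Because $\alpha$ is set by the workload, the same RTL can draw very different power under different vectors. Clock gating and operator gating save power largely by driving the effective $\alpha$ toward zero for logic whose results are not needed.

```latex
P_{\text{dyn}} = \alpha \, C_{L} \, V_{DD}^{2} \, f_{\text{clk}}
```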

“More upfront planning, profiling, and optimization will be required,” says Tim Kogel, principal engineer for virtual prototyping at Synopsys.

Kogel pointed to various levels that need to be addressed:

- At the macro-architecture level, for big-ticket tradeoff analysis and selection of dedicated processing elements;

- At the micro-architecture level, to optimize the instruction set and execution units for the target application;

- At the algorithmic level, selecting and optimizing algorithms for computational efficiency and memory accesses, and

- At the software level, providing feedback to developers on how to optimize their software for power and energy.

“This will require power- and energy-aware tooling for virtual prototyping and software development to generate the data, from which the hardware and software implementation can be optimized,” he noted.

Software mapping

Deciding what should be in software is an early task. “Do I want to have a DSP core to offload the CPU?” asks Synopsys’ Ruby. “How does that impact my power consumption? Do I want to implement a hardware accelerator, or do I want to perform all these functions in software? The energy cost of software running on the CPU is not zero.”
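
Ruby's offload question can be framed as an energy comparison rather than a speed comparison. The sketch below uses a hypothetical `Engine` type and `pick_engine` helper, and every power, runtime and data-transfer figure in it is made up. It charges the accelerator for moving data to and from it, which is often what erodes the benefit of offloading.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Engine:
    """A processing engine that could run a given task (all figures hypothetical)."""
    name: str
    active_power_w: float           # average power while running the task
    runtime_s: float                # time to complete the task on this engine
    transfer_energy_j: float = 0.0  # energy to move inputs/outputs to and from it

    def task_energy_j(self) -> float:
        # Energy = power x time, plus any data-movement overhead.
        return self.active_power_w * self.runtime_s + self.transfer_energy_j


def pick_engine(engines):
    """Return the engine that completes the task with the least energy."""
    return min(engines, key=lambda e: e.task_energy_j())


cpu = Engine("cpu", active_power_w=1.2, runtime_s=0.010)
dsp = Engine("dsp", active_power_w=0.3, runtime_s=0.015, transfer_energy_j=0.002)
best = pick_engine([cpu, dsp])
print(f"{best.name}: {best.task_energy_j() * 1e3:.1f} mJ")
```

With these assumed numbers the DSP wins even though it is slower. Its lower active power more than offsets the transfer cost. The model ignores the CPU's idle power while the DSP runs, which a real analysis would include.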

As systems become increasingly defined by their software, that is where consideration for energy has to start. “Software is a key element when it comes to saving energy and improving performance,” says Vincent Risson, senior principal CPU architect for Arm. “Compute-intensive applications often benefit significantly from application optimization. This can be both static in terms of highly tuned libraries, or dynamic frameworks that allow compute to be targeted at the most optimal processing engine. For example, mobile devices have standardized on a CPU system architecture that provides application processors with different compute performance while conforming to a common ISA and configuration. This allows applications to be dynamically migrated to the processors that are optimal for efficiency. Mechanisms for introspection and versatility, provided by the combination of both software and heterogeneous hardware, will provide opportunities for improved efficiency in the future.”

There is often more than one class of processor on which software can run. “We can choose, based on the types of workloads that we are seeing, where a given application should run,” says Jeff Wilcox, fellow and design engineering group CTO for client SoC architectures at Intel. “If it exceeds the needs of a smaller core, bigger cores can be fired up. There is telemetry and workload characterization to try and figure out where things should be run to be the most power efficient. A lot of the workloads that we’re seeing now are different. Even if it’s symmetric agents that are working on the same workload, they have dependencies between each other. Increasingly a lot of workloads require asymmetric agents, where we’ve got GPUs, NPUs, IPUs available, and where these types of workloads are cooperating with the CPU. That’s a much harder thing to optimize. We’ve gotten to the point where we have the hooks to be able to understand the performance and power challenges, but we’re still building the tools to really understand how to fully digest and optimize it.”
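
A simple policy in the spirit of Wilcox's description is to consider only the core types that can meet a deadline and run the work on the one that uses the least energy. With typical numbers, that means the small core until it can no longer keep up. The `Core` type, the `choose_core` function and the throughput and power figures are all hypothetical. Real schedulers rely on telemetry and workload characterization rather than fixed constants.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Core:
    """A core type in a heterogeneous cluster (figures are hypothetical)."""
    name: str
    ops_per_s: float  # sustained throughput for this workload
    power_w: float    # active power while running it


def choose_core(cores, work_ops, deadline_s):
    """Return the lowest-energy core that finishes work_ops by deadline_s, else None."""
    best = None
    best_energy = float("inf")
    for core in cores:
        runtime_s = work_ops / core.ops_per_s
        if runtime_s > deadline_s:
            continue  # too slow: this core cannot meet the deadline
        energy_j = core.power_w * runtime_s
        if energy_j < best_energy:
            best, best_energy = core, energy_j
    return best


little = Core("little", ops_per_s=1e9, power_w=0.2)
big = Core("big", ops_per_s=4e9, power_w=1.5)
print(choose_core([little, big], work_ops=1e8, deadline_s=0.2).name)  # light job
print(choose_core([little, big], work_ops=5e8, deadline_s=0.2).name)  # heavy job
```

With these numbers the little core handles the light job (0.1 s at 0.2 W). Only the big core can finish the heavier job inside the 0.2 s window. The policy deliberately ignores the cooperating asymmetric agents (GPUs, NPUs, IPUs) that Wilcox calls much harder to optimize.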

The difficulty here is that the architecture of the workload may depend upon the architecture of the hardware. “There are many developments within the field of AI, and it’s not just the size of the model that is important,” says Renxin Xia, vice president of hardware at Untether AI. “It is equally important how the models are constructed, and if they are constructed in an energy efficient way. That is harder to answer because it is architecture-dependent. The energy costs of an algorithm running on a GPU might be very different than the energy cost of that algorithm running on an at-memory compute architecture.”

Focus on software

The general consensus is that none of this is possible without more hardware-software cooperation. “Hardware-software co-development is needed to get those step function improvements,” says Sailesh Kottapalli, senior fellow in Intel’s data center unit. “Just trying to do it transparently in hardware is reaching its limits. Look at what’s happening in AI. If hardware was the only element, we would not see the massive progression we’re seeing. A lot of that is algorithmic improvements. With software algorithms, if you reduce path length, you can achieve the same result with fewer instructions and less software work. Sometimes when you get clarity on that, we can figure out that for those algorithms there is a new optimal instruction set, a new micro-architecture, and then you can further optimize that in hardware.”

It requires a big change in software development flows. “In the past, architectures and software flows were optimized for productivity, meaning general-purpose processors programmed with high-level languages, using the fastest and cheapest software tools,” says Synopsys’ Kogel. “The general direction was to provide as much flexibility and productivity as possible, and optimize only as much as absolutely necessary. This needs to be turned around to providing only as much flexibility as required, and otherwise use dedicated implementations.”

For many software functions, memory access is the biggest consumer of power. “A software function can be implemented in different ways, and that results in different instruction streams with different power and energy profiles,” says Synopsys’ Ruby. “You need to weight or assign heavier costs to the memory access instructions. You need to be careful how you model things. Even though it’s just the CPU, you need to model the costs of energy in the system context.”
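
Ruby's point about weighting memory accesses can be illustrated with a toy instruction-level energy model. The per-class costs in `ENERGY_PJ` are hypothetical placeholders, chosen only so that loads and stores dominate. The two instruction traces stand in for two implementations of the same function. A real model would be calibrated against the target CPU and its memory system.

```python
from collections import Counter

# Hypothetical per-instruction-class energy costs, in picojoules.
# Loads and stores are weighted far more heavily than arithmetic to reflect
# the cost of moving data through the memory hierarchy.
ENERGY_PJ = {"alu": 1.0, "mul": 3.0, "load": 20.0, "store": 25.0}


def estimate_energy_pj(trace):
    """Estimate the energy of an instruction trace given as a list of class names."""
    counts = Counter(trace)
    unknown = set(counts) - set(ENERGY_PJ)
    if unknown:
        raise ValueError(f"no energy cost for: {sorted(unknown)}")
    return sum(ENERGY_PJ[cls] * n for cls, n in counts.items())


# Two hypothetical implementations of the same loop body, repeated 100 times:
# A recomputes an intermediate value with two multiplies,
# B reloads a previously stored copy of it from memory instead.
impl_a = ["load", "mul", "mul", "alu", "store"] * 100
impl_b = ["load", "load", "alu", "store"] * 100

for name, trace in (("A", impl_a), ("B", impl_b)):
    print(f"{name}: {len(trace)} instructions, {estimate_energy_pj(trace):.0f} pJ")
```

Under these assumed costs, implementation A executes more instructions (500 versus 400) yet consumes less energy (5,200 pJ versus 6,600 pJ), because it replaces a memory load with arithmetic. Counting instructions alone would have ranked the two the other way round.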

In the future, result accuracy also may be a factor that can help in optimization. “Substantial power savings can be obtained by optimizing software to better use available hardware resources,” says Arteris’ Boillet. “This includes compiler optimizations, code refactoring to reduce computational complexity, and algorithms specifically designed to be energy efficient. The latter can be achieved with approximate computing for applications that can tolerate some level of imprecision, like multimedia processing, machine learning, and sensor data analysis.”
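
Loop perforation is one well-known approximate-computing technique. It skips a fixed fraction of loop iterations and accepts a small error in the result. The sketch below applies it to averaging a synthetic, slowly varying sensor trace. The function names and the data are illustrative, and whether the error is tolerable depends entirely on the application.

```python
def mean_exact(samples):
    """Reference result: visit every sample."""
    return sum(samples) / len(samples)


def mean_perforated(samples, stride):
    """Approximate mean that visits only every `stride`-th sample.

    Work (loads and adds) drops to roughly 1/stride of the exact loop.
    """
    if stride < 1:
        raise ValueError("stride must be >= 1")
    total = 0.0
    count = 0
    for i in range(0, len(samples), stride):
        total += samples[i]
        count += 1
    return total / count


# Synthetic sensor trace (hypothetical): a slow linear drift upward from 20.0.
trace = [20.0 + 0.01 * i for i in range(1000)]
exact = mean_exact(trace)
approx = mean_perforated(trace, stride=4)
print(f"exact={exact:.4f} approx={approx:.4f} error={abs(exact - approx):.4f}")
```

With a stride of 4 the perforated loop touches a quarter of the samples, and on this smooth synthetic trace the mean shifts by only about 0.015. On noisy or bursty data the same stride could be far less forgiving.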

Analysis

It all starts with analysis. “We can create a virtual model of the system,” says Ruby. “Then we can define use cases, which in this context is really a sequence of operating modes of the design. That is not software yet. You have the system described as a collection of models, both performance models, as well as power models. And it will give you a power profile based on these models and the use case that you have defined. The next alternative is a similar type of virtual system description. Now, you run an actual software workload against that. If you go even deeper, if you want more visibility, finer-grained detail, you can take the RTL description of the design, maybe it’s not final yet, maybe it’s still early, but as long as it’s mostly wiggling, you can put it on an emulator and run the real work. Once you do that, the emulator will generate an activity database. Emulation-oriented power analysis capabilities exist that can take a large amount of data, hundreds of millions of clock cycles of workload data and generate a power profile. There’s a spectrum of things that can be done.”
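
The first rung of that spectrum can be sketched in a few lines: a use case defined as a sequence of operating modes, evaluated against per-block power models. The block names, modes and wattages in `POWER_MODEL_W` are hypothetical, as are the `Phase` type and the `power_profile` function. The point is only that a mode sequence plus a power model already yields a power-versus-time profile and a total energy, before any software exists.

```python
from dataclasses import dataclass

# Hypothetical per-mode power numbers (watts) for three blocks of a virtual system.
POWER_MODEL_W = {
    "cpu": {"off": 0.0, "idle": 0.05, "active": 1.0},
    "dsp": {"off": 0.0, "idle": 0.02, "active": 0.4},
    "ddr": {"self_refresh": 0.01, "active": 0.6},
}


@dataclass
class Phase:
    name: str
    duration_s: float
    modes: dict[str, str]  # block -> operating mode; unlisted blocks count as 0 W


def power_profile(use_case):
    """Return [(phase name, start time s, total power W)] and total energy in J."""
    profile = []
    t = 0.0
    energy_j = 0.0
    for phase in use_case:
        power_w = sum(POWER_MODEL_W[block][mode] for block, mode in phase.modes.items())
        profile.append((phase.name, t, power_w))
        energy_j += power_w * phase.duration_s
        t += phase.duration_s
    return profile, energy_j


use_case = [
    Phase("standby", 1.0, {"cpu": "idle", "dsp": "off", "ddr": "self_refresh"}),
    Phase("audio_decode", 0.5, {"cpu": "idle", "dsp": "active", "ddr": "active"}),
    Phase("ui_burst", 0.1, {"cpu": "active", "dsp": "idle", "ddr": "active"}),
]
profile, energy = power_profile(use_case)
for name, start, p in profile:
    print(f"{start:5.2f} s  {name:<13} {p:.3f} W")
print(f"total energy: {energy:.3f} J")
```

For this assumed three-phase use case, the profile steps from 0.06 W to 1.05 W to 1.62 W, for about 0.747 J in total. The later rungs Ruby describes replace the fixed per-mode numbers with activity from real software running on a virtual prototype or an emulator.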

In some cases, that may not be a long enough time span. “Most of our thermal analysis is based on closed loop analysis, built on silicon data, not on pre-silicon simulation because of the trace lengths required and the amount of time required for the analysis,” says Intel’s Kottapalli. “There is no way we can simulate for that long, to have a realistic thermal profile established. We use profile data from silicon, using different kinds of workloads and traces, and then we do analysis on what solutions we need to build.”

It is easier when the timeframes are shorter. “Fundamental architectural decisions need to be considered with some sort of power view in mind,” says Ruby. “You need a higher level, more abstract model of all parts of your system including all of the processing cores, and the memory subsystem, because how that is organized is really, really important. How much memory is really needed? These are fundamental architectural decisions. You need to have some power data associated with these components. How much power is the CPU consuming under this particular workload or that particular workload? What about the DSP core, the hardware, the memory, the network on the chip – how much are each of those consuming when doing each operation? Those are required to make fundamental architectural decisions.”

There are many new power-related tools required. “While EDA tools exist for dealing with high-speed, high-power density transients, there are many other challenges,” says Intel’s Wilcox. “Some of the other challenges are looking at longer time constant dynamics, or how to manage things across the SoC. I haven’t seen as much in the EDA space that’s helping with that. We’re doing more homegrown tools to try and build those capabilities.”

While tools have been developed for the hardware side of these architectural tradeoffs, few tools exist today to help on the software side. “We need our software engineers to produce correct code as quickly as possible,” says Ruby. “What I believe is really needed is some sort of a companion technology for the software developer. Just like we have RTL power analysis tools for the hardware, software development systems need some sort of power profiler that will tell them how much power and energy this code is consuming. Since we’re now living in the age of AI, it would be cool to have AI technology analyze the code. You get an estimation for power consumption and some AI technology may say, if you restructure your code this way, you can save a lot of power.”

Conclusion

The hardware world is hitting walls related to power and energy. Thermal limits and concerns are growing within that community, and without taking them into account, hardware functionality cannot grow. But these concerns have not yet been elevated to the system level. Until all the parties that contribute to energy consumption sit down in the same room and design the system to be energy efficient, we are not going to see true solutions to the problem.

There is a second side to this, as well. All of the people who produce the tools those people use also have to get into the same room and develop flows that enable everyone to be successful. While there has been some progress between the EDA and systems worlds to solve some of the thermal challenges, there is less progress at the architectural level, and almost no progress between the hardware and software worlds. Virtual prototypes that concentrate on functionality are not enough. They need to be extended to system power and energy, and that cannot be done without the compiler developers getting involved. There is an opportunity within domain-specific computing, because these people are taking a new direction in hardware as a result of these problems, and it may be important enough to them to make progress in the adjacent fields. But all of this still appears to be a long way off.

Related Reading

The Rising Price Of Power In Chips

More data requires faster processing, which leads to a whole bunch of problems — not all of which are obvious or even solvable.


Source: https://semiengineering.com/optimizing-energy-at-the-system-level/