Introduction

In recent years, Generative AI has captured the market, and as a result, we now have a variety of models for different applications. The evolution of Gen AI began with the Transformer architecture, and this approach has since been adopted in other fields. For example, the Vision Transformer (ViT) applies it to computer vision, and text-to-image models such as Stable Diffusion rely on a Transformer-based text encoder. When you explore these models further, you will find two types of offerings: paid services and open-source models that are free to use. Users who want extra features can use paid services such as OpenAI, while open-source models are available through Hugging Face.

You can browse these services and download or call the model that fits your task. Note that paid services typically charge per token, depending on the provider. Similarly, AWS offers AWS Bedrock, which provides access to LLMs and other foundation models through an API. Toward the end of this blog post, we will discuss pricing for the service.

Learning Objectives

- Understanding Generative AI with Stable Diffusion, LLaMA 2, and Claude Models.

- Exploring the features and capabilities of AWS Bedrock’s Stable Diffusion, LLaMA 2, and Claude models.

- Exploring AWS Bedrock and its pricing.

- Learning how to leverage these models for various tasks, such as image generation, text synthesis, and code generation.

This article was published as a part of the Data Science Blogathon.

What is Generative AI?

Generative AI is a subset of artificial intelligence (AI) that creates new content, such as images, text, or code, in response to user requests. These models are trained on large amounts of data, which lets them produce content or respond to requests accurately and quickly. Generative AI has many applications in different domains, such as creative arts, content generation, data augmentation, and problem-solving.

You can refer to some of my blogs created with LLM models, such as chatbot (Gemini Pro) and Automated Fine-Tuning of LLaMA 2 Models on Gradient AI Cloud. I also used the BLOOM model, available on Hugging Face, to develop a chatbot.

Key Features of GenAI

- Content Creation: Generative models create new text, images, or code from the queries that users provide as input.

- Fine-Tuning: We can fine-tune a pre-trained model, that is, continue training it on task-specific data, to improve its performance on a particular use case.

- Data-driven Learning: Generative AI models are trained on large datasets, allowing them to learn patterns and trends in the data and generate accurate and meaningful outputs.

- Efficiency: Generative AI models produce results quickly, saving time and resources compared to manual creation methods.

- Versatility: Generative AI has applications across many domains, including creative arts, content generation, data augmentation, and problem-solving.

What is AWS Bedrock?

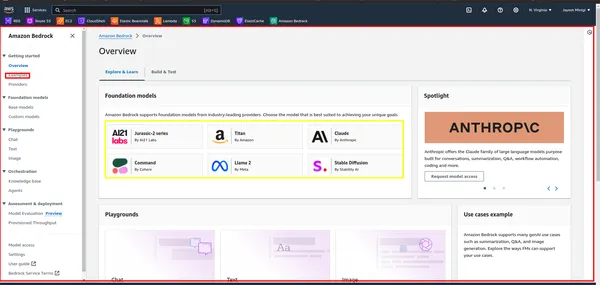

AWS Bedrock is a managed Generative AI service from Amazon Web Services (AWS). It gives access to a variety of foundation models, including large language models (LLMs) and image models, each suited to specific tasks in different domains. Data scientists can call these text generation and image models from their own code, for example from a notebook or an editor such as VSCode. The LLMs can be used for NLP tasks such as text generation, summarization, translation, and more.

Key Features of AWS Bedrock

- Access to Pre-trained Models: AWS Bedrock offers a lot of pre-trained LLM models that users can easily utilize without the need to create or train models from scratch.

- Fine-tuning: Users can fine-tune pre-trained models using their own datasets to adapt them to specific use cases and domains.

- Scalability: AWS Bedrock is built on AWS infrastructure, providing scalability to handle large datasets and compute-intensive AI workloads.

- Comprehensive API: Bedrock provides a comprehensive API through which we can easily communicate with the model.

How to Build AWS Bedrock?

Setting up AWS Bedrock is simple yet powerful. This framework, based on Amazon Web Services (AWS), provides a reliable foundation for your applications. Let’s walk through the straightforward steps to get started.

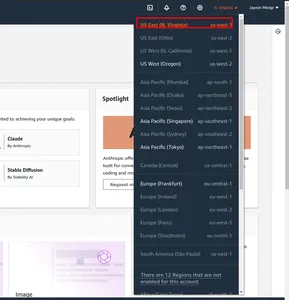

Step 1: First, navigate to the AWS Management Console and change the region. In the screenshot, the region us-east-1 is marked with a red box.

Step 2: Next, search for “Bedrock” in the AWS Management Console and click on it. Then, click on the “Get Started” button. This will take you to the Bedrock dashboard, where you can access the user interface.

Step 3: Within the dashboard, you’ll notice a yellow rectangle containing various foundation models such as LLaMA 2, Claude, etc. Click on the red rectangle to view examples and demonstrations of these models.

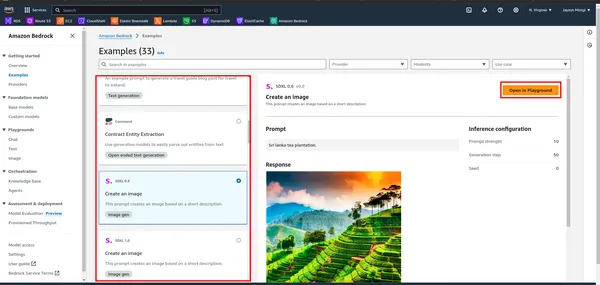

Step 4: Upon clicking the example, you’ll be directed to a page where you’ll find a red rectangle. Click on any one of these options for playground purposes.

What is Stable Diffusion?

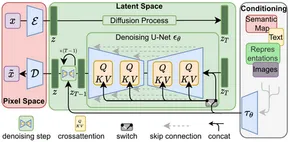

Stable Diffusion is a GenAI model that generates images from text input. Users provide text prompts, and Stable Diffusion produces corresponding images, as demonstrated in the practical part. It was launched in 2022 and uses diffusion technology and a latent space to create high-quality images.

The Transformer architecture brought significant progress in natural language processing (NLP), and in computer vision, models like the Vision Transformer (ViT) became prevalent. Stable Diffusion takes a different route for its image backbone: it uses U-Net, an encoder-decoder architecture, as the noise predictor. This architectural choice contributes to its effectiveness in generating high-quality images.

During training, Stable Diffusion progressively adds Gaussian noise to an image until only random noise remains, a process known as forward diffusion. A noise predictor then learns to reverse this process step by step. At generation time, the model starts from random noise and removes the predicted noise, guided by the text prompt, until an image emerges.
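The forward diffusion step described above can be illustrated in a few lines. The following is a minimal sketch using only the standard library. It assumes a linear noise schedule, and the function names and the `beta_start` / `beta_end` values are illustrative choices rather than Stable Diffusion's actual configuration. A short list of numbers stands in for the image.

```python
import math
import random


def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Return a linearly spaced noise schedule (an assumed, common choice)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def signal_fraction(betas, t):
    """Cumulative product of (1 - beta) up to and including step t."""
    alpha_bar = 1.0
    for beta in betas[: t + 1]:
        alpha_bar *= 1.0 - beta
    return alpha_bar


def add_noise(x0, betas, t, rng):
    """Sample the noisy version of x0 at step t in one shot."""
    alpha_bar = signal_fraction(betas, t)
    keep = math.sqrt(alpha_bar)          # how much of the original survives
    noise_scale = math.sqrt(1.0 - alpha_bar)  # how much Gaussian noise is mixed in
    return [keep * value + noise_scale * rng.gauss(0.0, 1.0) for value in x0]


betas = linear_betas(1000)
rng = random.Random(0)
pixels = [0.5, -0.25, 1.0, 0.0]  # toy stand-in for image values

slightly_noisy = add_noise(pixels, betas, 10, rng)
mostly_noise = add_noise(pixels, betas, 999, rng)
print(signal_fraction(betas, 10), signal_fraction(betas, 999))
```

Early steps keep almost all of the original signal, while the last step keeps almost none, which is why the reverse process can start from pure noise.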

Overall, Stable Diffusion represents a notable advancement in generative AI, offering efficient and high-quality image generation capabilities.

Key Features of Stable Diffusion

- Image Generation: Stable Diffusion creates images from text prompts provided by the user.

- Versatility: The model can be applied in many fields, and it can be used to create images, GIFs, videos, and animations.

- Efficiency: Stable Diffusion models utilize latent space, requiring less processing power compared to other image generation models.

- Fine-Tuning Capabilities: Users can fine-tune Stable Diffusion to meet their specific needs. By adjusting parameters such as denoising steps and noise levels, users can customize the output according to their preferences.

Some of the Images that are created by using the stable diffusion model

How to Build Stable Diffusion?

To build Stable Diffusion, you’ll need to follow several steps, including setting up your development environment, accessing the model, and invoking it with the appropriate parameters.

Step 1. Environment Preparation

- Virtual Environment Creation: Create a virtual environment using conda

conda create -p ./venv python=3.10 -y

- Virtual Environment Activation: Activate the virtual environment

conda activate ./venv

Step 2. Installing Requirements Packages

!pip install boto3

!pip install awscli

Step 3: Setting up the AWS CLI

- First, you need to create a user in IAM and grant them the necessary permissions, such as administrative access.

- After that, follow the commands below to set up the AWS CLI so that you can easily access the model.

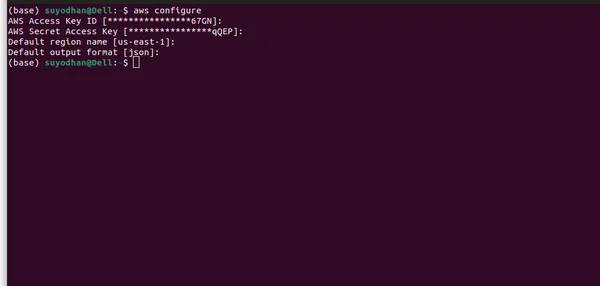

- Configure AWS Credentials: Once installed, you need to configure your AWS credentials. Open a terminal or command prompt and run the following command:

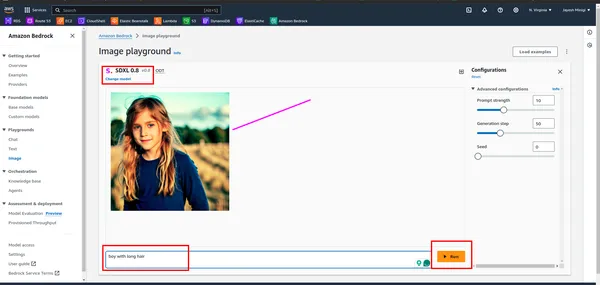

aws configure

- After running the above command, you will see prompts similar to this.

- Please ensure that you provide all the necessary information and select the correct region, as the LLM model may not be available in all regions. I specified a region where the LLM model is available on AWS Bedrock.

Step 4: Importing the necessary libraries

- Import the required packages.

import boto3
import json
import base64
import os

- Boto3 is a Python library that provides an easy-to-use interface for interacting with Amazon Web Services (AWS) resources programmatically.

Step 5: Create an AWS Bedrock Client

bedrock = boto3.client(service_name="bedrock-runtime")

Step 6: Define Payload Parameters

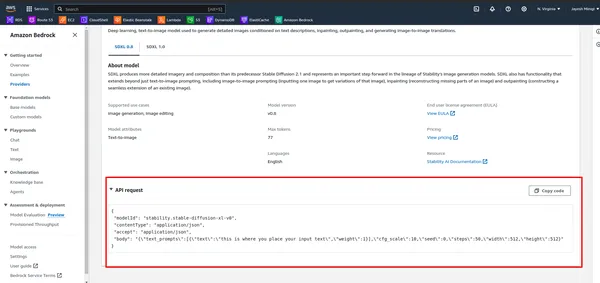

- First, observe the API in AWS Bedrock.

- Run the below cell.

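# DEFINE THE USER QUERY
# The prompt is split across two adjacent string literals, which Python joins into one string.
USER_QUERY = (
    "provide me an 4k hd image of a beach, also use a blue sky rainy season and "
    "cinematic display"
)

# Request parameters for the Stable Diffusion model
payload_params = {
    "text_prompts": [{"text": USER_QUERY, "weight": 1}],
    "cfg_scale": 10,
    "seed": 0,
    "steps": 50,
    "width": 512,
    "height": 512
}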
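If you plan to send several prompts, it can help to wrap the payload in a small function. The sketch below is a hypothetical helper (`build_sd_payload` and its argument names are mine, not part of boto3 or Bedrock). It also assumes that the request format accepts an extra prompt with a negative weight to steer the image away from unwanted content, so check the model's API page in the Bedrock console before relying on that.

```python
import json


def build_sd_payload(prompt, negative_prompt=None, cfg_scale=10, seed=0,
                     steps=50, width=512, height=512):
    """Build the request body dictionary used in this tutorial."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    text_prompts = [{"text": prompt, "weight": 1}]
    if negative_prompt:
        # Assumption: a negative weight marks content the model should avoid.
        text_prompts.append({"text": negative_prompt, "weight": -1})
    return {
        "text_prompts": text_prompts,
        "cfg_scale": cfg_scale,
        "seed": seed,
        "steps": steps,
        "width": width,
        "height": height,
    }


payload_example = build_sd_payload("a beach under a blue sky", negative_prompt="blurry")
body_example = json.dumps(payload_example)
print(body_example)
```

The defaults mirror the values used in this step, so calling the function with only a prompt reproduces the same payload.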

Step 7: Define the Payload Object

model_id = "stability.stable-diffusion-xl-v0"

response = bedrock.invoke_model(
    body=json.dumps(payload_params),
    modelId=model_id,
    accept="application/json",
    contentType="application/json",
)

Step 8: Send a Request to the AWS Bedrock API and Get the Response Body

response_body = json.loads(response.get("body").read())
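Steps 7 and 8 repeat for every model in this post, so they can be folded into one function. This is a minimal sketch: `invoke_json` and `FakeBedrockClient` are hypothetical names introduced for illustration. The fake client only exists so the helper can be tried without AWS credentials; in real use you would pass the `bedrock` client created in Step 5.

```python
import io
import json


def invoke_json(client, model_id, payload):
    """Send a JSON payload with invoke_model and return the parsed JSON body."""
    response = client.invoke_model(
        body=json.dumps(payload),
        modelId=model_id,
        accept="application/json",
        contentType="application/json",
    )
    # The body is a stream, so it has to be read before it can be parsed.
    return json.loads(response["body"].read())


class FakeBedrockClient:
    """Stand-in used only to try the helper without AWS credentials."""

    def invoke_model(self, body, modelId, accept, contentType):
        echoed = {"model": modelId, "received": json.loads(body)}
        return {"body": io.BytesIO(json.dumps(echoed).encode("utf-8"))}


result = invoke_json(FakeBedrockClient(), "example-model-id", {"prompt": "hi"})
print(result)
```

Passing the client in as an argument keeps the function easy to test and lets the same code serve both the image and the text model.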

Step 9: Extract Image Data from the Response

artifact = response_body.get("artifacts")[0]

image_encoded = artifact.get("base64").encode("utf-8")

image_bytes = base64.b64decode(image_encoded)
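The extraction above assumes the request always succeeds. A slightly more defensive version is sketched below. `extract_image_bytes` is a hypothetical helper, and the `finishReason` field with the value "SUCCESS" is my assumption about the response format, so compare it with the response you actually receive.

```python
import base64


def extract_image_bytes(response_body):
    """Return decoded image bytes from a parsed response body."""
    artifacts = response_body.get("artifacts") or []
    if not artifacts:
        raise ValueError("response contains no artifacts")
    artifact = artifacts[0]
    # Assumption: each artifact reports a finishReason such as "SUCCESS".
    reason = artifact.get("finishReason", "SUCCESS")
    if reason != "SUCCESS":
        raise RuntimeError(f"image generation did not succeed: {reason}")
    return base64.b64decode(artifact["base64"])


# A made-up response body, used only to exercise the helper.
fake_body = {
    "artifacts": [
        {
            "base64": base64.b64encode(b"not-a-real-png").decode("ascii"),
            "finishReason": "SUCCESS",
        }
    ]
}
print(extract_image_bytes(fake_body))
```

Failing early with a clear message is easier to debug than an index error or a corrupt image file.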

Step 10: Save the Image to a File

output_dir = "output"

os.makedirs(output_dir, exist_ok=True)

file_name = f"{output_dir}/generated-img.png"

with open(file_name, "wb") as f:
    f.write(image_bytes)

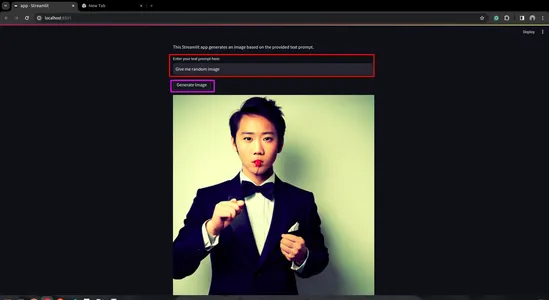

Step 11: Create a Streamlit app

- First, install Streamlit. Open the terminal and paste the following command.

pip install streamlit

- Create a Python Script for the Streamlit App

import streamlit as st
import boto3
import json
import base64
import os


def generate_image(prompt_text):
    """Call Stable Diffusion on AWS Bedrock and save the result as a PNG."""
    prompt_template = [{"text": prompt_text, "weight": 1}]
    bedrock = boto3.client(service_name="bedrock-runtime")
    payload = {
        "text_prompts": prompt_template,
        "cfg_scale": 10,
        "seed": 0,
        "steps": 50,
        "width": 512,
        "height": 512
    }
    body = json.dumps(payload)
    model_id = "stability.stable-diffusion-xl-v0"
    response = bedrock.invoke_model(
        body=body,
        modelId=model_id,
        accept="application/json",
        contentType="application/json",
    )
    response_body = json.loads(response.get("body").read())
    artifact = response_body.get("artifacts")[0]
    image_encoded = artifact.get("base64").encode("utf-8")
    image_bytes = base64.b64decode(image_encoded)

    # Save image to a file in the output directory.
    output_dir = "output"
    os.makedirs(output_dir, exist_ok=True)
    file_name = f"{output_dir}/generated-img.png"
    with open(file_name, "wb") as f:
        f.write(image_bytes)
    return file_name


def main():
    st.title("Generated Image")
    st.write("This Streamlit app generates an image based on the provided text prompt.")

    # Text input field for user prompt
    prompt_text = st.text_input("Enter your text prompt here:")

    # Create the button once; a second st.button with the same label and no key
    # raises a duplicate-widget error.
    if st.button("Generate Image"):
        if prompt_text:
            image_file = generate_image(prompt_text)
            st.image(image_file, caption="Generated Image", use_column_width=True)
        else:
            st.error("Please enter a text prompt.")


if __name__ == "__main__":
    main()

- Run the Streamlit App

streamlit run app.py

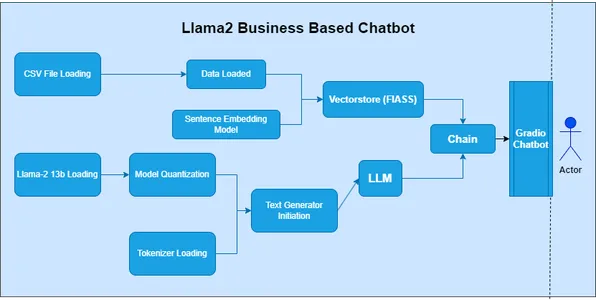

What is LLaMA 2?

LLaMA 2 (Large Language Model Meta AI) belongs to the category of Large Language Models (LLMs). Meta (formerly Facebook) developed this model for a broad spectrum of natural language processing (NLP) applications. The original LLaMA model was the starting phase of the series; LLaMA 2 builds on it with more training data and a longer context window.

Key Features of LLaMA 2

- Versatility: LLaMA 2 is a powerful model capable of handling diverse tasks with high accuracy and efficiency.

- Contextual Understanding: Understanding language involves several levels, including phonemes, morphemes, lexemes, syntax, and context. LLaMA 2 captures contextual nuances well.

- Transfer Learning: LLaMA 2 is a robust model that benefits from extensive training on a large dataset. Transfer learning lets it adapt quickly to specific tasks.

- Open-Source: In Data Science, a key aspect is the community. Open-source models make it possible for researchers, developers, and communities to explore, adapt, and integrate them into their projects.

Use Cases

- LLaMA 2 can handle text-generation tasks such as story writing and content creation.

- LLaMA 2 can answer questions without task-specific examples (zero-shot learning), similar to ChatGPT, and it provides relevant responses.

- Many language translation APIs on the market require a subscription. Because LLaMA 2 is openly available, it can be used for translation without a separate translation service.

- LLaMA 2 is easy to use and an excellent choice for developing chatbots.

How to Build LLaMA 2

To build LLaMA 2, you’ll need to follow several steps, including setting up your development environment, accessing the model, and invoking it with the appropriate parameters.

Step 1: Import Libraries

- In the first cell of the notebook, import the necessary libraries:

import boto3

import json

Step 2: Define Prompt and AWS Bedrock Client

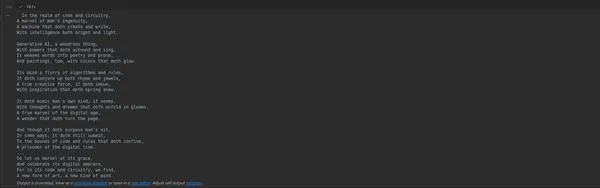

- In the next cell, define the prompt for generating the poem and create a client for accessing the AWS Bedrock API:

prompt_data = """

Act as a Shakespeare and write a poem on Generative AI

"""

bedrock = boto3.client(service_name="bedrock-runtime")

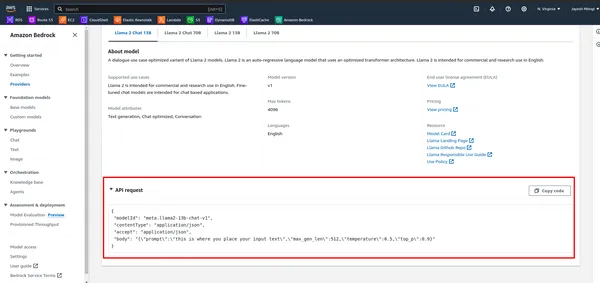

Step 3: Define Payload and Invoke Model

- First, observe the API in AWS Bedrock.

- Define the payload with the prompt and other parameters, then invoke the model using the AWS Bedrock client:

payload = {
    "prompt": "[INST]" + prompt_data + "[/INST]",
    "max_gen_len": 512,
    "temperature": 0.5,
    "top_p": 0.9
}

body = json.dumps(payload)
model_id = "meta.llama2-70b-chat-v1"

response = bedrock.invoke_model(
    body=body,
    modelId=model_id,
    accept="application/json",
    contentType="application/json"
)

# Parse the JSON body and print the generated text
response_body = json.loads(response.get("body").read())
response_text = response_body['generation']
print(response_text)
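The payload above wraps the prompt in `[INST]` tags, which is how LLaMA 2 chat models mark an instruction. The chat format also allows an optional system message inside `<<SYS>>` tags. The helper below is a minimal sketch of that convention; `format_llama2_prompt` is a hypothetical name, and the exact whitespace is my assumption, so compare it with the example shown for the model in the Bedrock console.

```python
def format_llama2_prompt(user_message, system_prompt=None):
    """Wrap a user message (and optional system prompt) in LLaMA 2 chat tags."""
    user_message = user_message.strip()
    if system_prompt:
        return (
            f"[INST] <<SYS>>\n{system_prompt.strip()}\n<</SYS>>\n\n"
            f"{user_message} [/INST]"
        )
    return f"[INST] {user_message} [/INST]"


example_prompt = format_llama2_prompt(
    "Write a poem on Generative AI",
    system_prompt="Act as Shakespeare",
)
print(example_prompt)
```

The returned string can be used as the value of "prompt" in the payload, keeping the persona separate from the actual request.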

Step 4: Run the Notebook

- Execute the cells in the notebook one by one by pressing Shift + Enter. The output of the last cell will display the generated poem.

Step 5: Create a Streamlit app

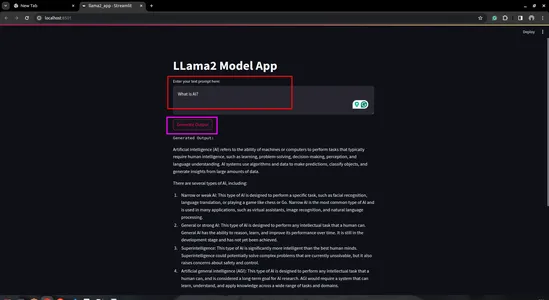

- Create a Python Script: Create a new Python script (e.g., llama2_app.py) and open it in your preferred code editor.

import streamlit as st
import boto3
import json

# Define AWS Bedrock client
bedrock = boto3.client(service_name="bedrock-runtime")

# Streamlit app layout
st.title('LLama2 Model App')

# Text input for user prompt
user_prompt = st.text_area('Enter your text prompt here:', '')

# Button to trigger model invocation
if st.button('Generate Output'):
    payload = {
        # Wrap the prompt in [INST] tags, as in the notebook example
        "prompt": "[INST]" + user_prompt + "[/INST]",
        "max_gen_len": 512,
        "temperature": 0.5,
        "top_p": 0.9
    }
    body = json.dumps(payload)
    model_id = "meta.llama2-70b-chat-v1"
    response = bedrock.invoke_model(
        body=body,
        modelId=model_id,
        accept="application/json",
        contentType="application/json"
    )
    response_body = json.loads(response.get("body").read())
    generation = response_body['generation']
    st.text('Generated Output:')
    st.write(generation)

- Run the Streamlit App:

- Save your Python script and run it using the Streamlit command in your terminal:

streamlit run llama2_app.py

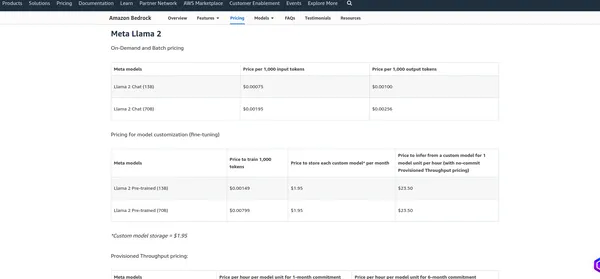

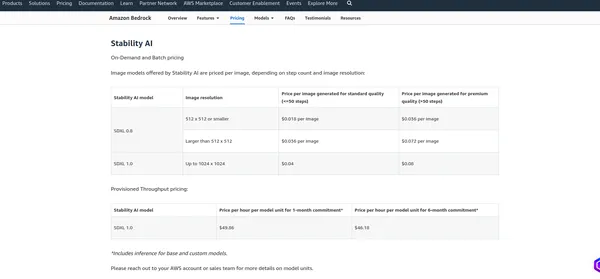

Pricing Of AWS Bedrock

The pricing of AWS Bedrock depends on various factors and the services you use, such as model hosting, inference requests, data storage, and data transfer. AWS typically charges based on usage, meaning you only pay for what you use. AWS may change its pricing structure, so I recommend checking the official pricing page for the most accurate and current charges for each model provider.

Meta LLaMA 2
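To get a feel for usage-based pricing, you can estimate a bill with simple arithmetic. The sketch below assumes a text model billed per 1,000 input and output tokens. The function name and both prices are hypothetical placeholders, not real AWS rates, so substitute the numbers from the official pricing page.

```python
def estimate_text_cost(input_tokens, output_tokens, price_in_per_1k, price_out_per_1k):
    """Estimate cost in USD for a token-billed model."""
    input_cost = (input_tokens / 1000) * price_in_per_1k
    output_cost = (output_tokens / 1000) * price_out_per_1k
    return input_cost + output_cost


# Hypothetical prices in USD per 1,000 tokens (placeholders, not AWS rates).
EXAMPLE_PRICE_IN = 0.002
EXAMPLE_PRICE_OUT = 0.003

# Example workload: 2 million input tokens and 500,000 output tokens per month.
monthly_cost = estimate_text_cost(2_000_000, 500_000, EXAMPLE_PRICE_IN, EXAMPLE_PRICE_OUT)
print(f"Estimated monthly cost: ${monthly_cost:.2f}")
```

With these placeholder prices, the input side contributes 2,000 x 0.002 and the output side 500 x 0.003, so output tokens can matter even when there are far fewer of them.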

Stability AI

Conclusion

This blog delved into the realm of generative AI, focusing specifically on two powerful models: Stable Diffusion and LLaMA 2. We also explored AWS Bedrock as a platform for accessing these models through APIs. Using these APIs, we demonstrated how to write code to interact with the models. Additionally, we utilized the AWS Bedrock playground to practice and assess the capabilities of the models.

At the outset, we highlighted the importance of selecting the correct region within AWS Bedrock, as these models may not be available in all regions. Moving forward, we provided a practical exploration of each model, starting with the creation of Jupyter notebooks and then transitioning to the development of Streamlit applications.

Finally, we discussed AWS Bedrock’s pricing structure, underscoring the necessity of understanding the associated costs and referring to the official pricing page for accurate information.

Key Takeaways

- Stable Diffusion and LLaMA 2 on AWS Bedrock offer easy access to powerful generative AI capabilities.

- AWS Bedrock provides a simple interface and comprehensive documentation for seamless integration.

- These models have different key features and use cases across various domains.

- Remember to choose the right region for access to desired models on AWS Bedrock.

- Practical implementation of generative AI models like Stable Diffusion and LLaMA 2 is efficient on AWS Bedrock.

Frequently Asked Questions

A. Generative AI is a subset of artificial intelligence focused on creating new content, such as images, text, or code, rather than just analyzing existing data.

A. Stable Diffusion is a generative AI model that produces photorealistic images from text and image prompts using diffusion technology and latent space.

A. AWS Bedrock provides APIs for managing, training, and deploying models, allowing users to access large language models like LLaMA 2 for various applications.

A. You can access LLM models on AWS Bedrock using the provided APIs, such as invoking the model with specific parameters and receiving the generated output.

A. Stable Diffusion can generate high-quality images from text prompts, operates efficiently using latent space, and is accessible to a wide range of users.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.

- Source: https://www.analyticsvidhya.com/blog/2024/02/building-end-to-end-generative-ai-models-with-aws-bedrock/