Introduction

The power of LLMs has become the new buzz in the AI community. Early adopters have swarmed to generative AI solutions like GPT-3.5, GPT-4, and Bard for a range of use cases, including question answering, creative writing, and critical analysis. Since these models are trained on tasks like next-token prediction over a large variety of corpora, they are expected to be great at text generation.

The robust transformer-based neural networks also allow these models to adapt to language-based machine learning tasks like classification, translation, prediction, and entity recognition. Hence, it has become easy for data scientists to leverage generative AI platforms for more practical and industrial language-based ML use cases by giving appropriate instructions. In this article, we aim to show how simple it is to use generative LLMs for prevalent language-based ML tasks using prompting, and to critically analyze the benefits and limitations of zero-shot and few-shot prompting.

Learning Objectives

- Learn about zero-shot and few-shot prompting.

- Analyze their performance on an example machine learning task.

- Evaluate few-shot prompting against more sophisticated techniques like fine-tuning.

- Understand the pros and cons of prompting techniques.

This article was published as a part of the Data Science Blogathon.

What is Prompting?

Let us start by defining LLMs. A large language model, or LLM, is a deep learning system built with multiple layers of transformers and feed-forward neural networks that together contain hundreds of millions to billions of parameters. LLMs are trained on massive datasets from different sources and are built to understand and generate text. Example applications include language translation, text summarization, question answering, and content generation. There are different types of LLMs: encoder-only (BERT), encoder-decoder (BART, T5), and decoder-only (PaLM, GPT, etc.). LLMs with a decoder component are called generative LLMs; this is the case for most modern LLMs.

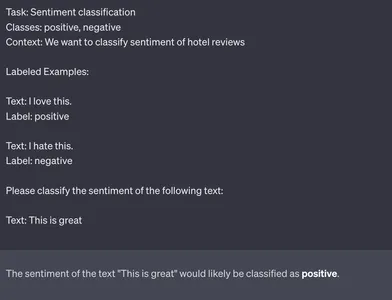

If you tell a generative LLM to do a task, it will generate the corresponding text. But how do we tell a generative LLM to do a particular task? It is easy: we give it a written instruction. LLMs are designed to respond to end users based on these instructions, also known as prompts. If you have interacted with an LLM like ChatGPT, you have used prompts. Prompting is about packaging our intent in a natural-language query that will cause the model to return the desired response (example: Figure 1, source: ChatGPT).

There are two major types of prompting techniques that we will be looking at in the following sections: zero-shot and few-shot. We will look at their details along with some basic examples.

Zero-shot Prompting

Zero-shot prompting is a specific case of zero-shot learning unique to generative LLMs. In zero-shot, we provide no labeled data to the model and expect it to work on a completely new problem. For example, we can use ChatGPT on a new task simply by providing appropriate instructions. LLMs can adapt to unseen problems because they have learned from content drawn from many sources. Let us take a look at a few examples.

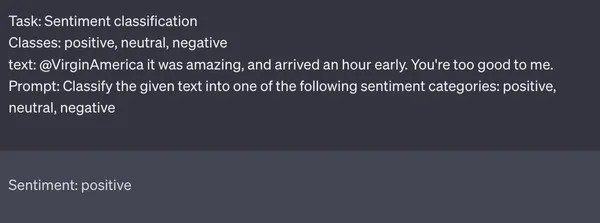

Here is an example query for the classification of text into positive, neutral, and negative sentiment classes.

Tweet Examples

The tweet examples are from the Twitter US Airline Sentiment Dataset. The dataset consists of feedback tweets to different airlines, labeled positive, neutral, or negative. In Figure 2 (source: ChatGPT), we provide the task name (Sentiment Classification), the classes (positive, neutral, and negative), the text, and the prompt to classify. The airline feedback in Figure 2 is positive and appreciates the flying experience with the airline. ChatGPT correctly classified the sentiment of the review as positive, showing its ability to generalize to a new task.
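The four prompt ingredients described above (task name, classes, text, and instruction) can be assembled programmatically. The following is a minimal sketch, not the exact prompt used in the figures: the function name `build_zero_shot_prompt`, the field labels, and the sample tweet are all illustrative assumptions.

```python
def build_zero_shot_prompt(text, classes, task="Sentiment Classification"):
    """Assemble a zero-shot prompt from task name, classes, text, and instruction."""
    lines = [
        f"Task: {task}",
        f"Classes: {', '.join(classes)}",
        f"Text: {text}",
        "Prompt: Classify the given text into one of the classes above.",
    ]
    return "\n".join(lines)


# Made-up example tweet, used only to show the prompt layout
prompt = build_zero_shot_prompt(
    "Thanks for the smooth flight and the friendly crew!",
    ["positive", "neutral", "negative"],
)
print(prompt)
```

Note that no labeled examples appear anywhere in the prompt; that absence is what makes it zero-shot.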

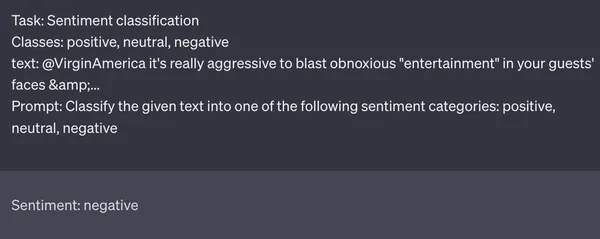

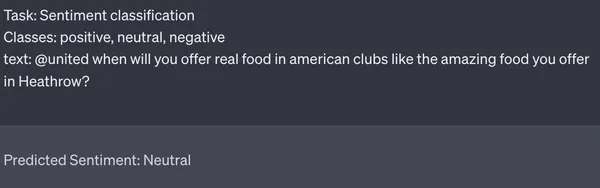

Figure 3 above shows ChatGPT with zero-shot prompting on another example, this time with negative sentiment. ChatGPT again correctly predicts the sentiment of the tweet. While we have shown two examples where the model successfully classifies the review text, there are several borderline cases where even state-of-the-art LLMs fail. For example, consider Figure 4: the user is complaining about the airline's food quality, yet ChatGPT incorrectly identifies the sentiment as neutral.

In the table below, we compare zero-shot prompting with the performance of a fine-tuned BERT model (Source) on the Twitter US Airline Sentiment dataset, using accuracy, F1 score, precision, and recall. Zero-shot performance was evaluated on a randomly selected subset of the airline sentiment dataset. Zero-shot scores lower than fine-tuned BERT on every metric but still performs decently, showing how powerful prompting can be. The performance numbers have been rounded to the nearest integer.

| Model | Accuracy | F1 Score | Precision | Recall |
| --- | --- | --- | --- | --- |
| Fine-tuned BERT | 84% | 79% | 80% | 79% |
| ChatGPT (Zero-shot) [Source] | 73% | 72% | 74% | 76% |
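For readers who want to reproduce this kind of comparison, the sketch below computes the four metrics from lists of true and predicted labels. It assumes macro averaging over classes, which the article does not specify, and the tiny label lists are invented purely for illustration.

```python
from collections import Counter


def macro_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall, and F1 (averaging is an assumption)."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gets a false positive
            fn[truth] += 1  # true class gets a false negative

    precisions, recalls, f1s = [], [], []
    for label in labels:
        predicted = tp[label] + fp[label]
        actual = tp[label] + fn[label]
        prec = tp[label] / predicted if predicted else 0.0
        rec = tp[label] / actual if actual else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)

    n = len(labels)
    return {
        "accuracy": sum(tp.values()) / len(y_true),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Invented labels, only to exercise the function
y_true = ["positive", "negative", "neutral", "negative"]
y_pred = ["positive", "neutral", "neutral", "negative"]
metrics = macro_metrics(y_true, y_pred)
print({name: round(value, 3) for name, value in metrics.items()})
```

In practice a library routine such as scikit-learn's classification metrics would usually be preferable; the hand-rolled version is shown only to make the definitions explicit.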

Few-shot Prompting

Unlike zero-shot, few-shot prompting involves providing a few labeled examples in the prompt. This differs from traditional few-shot learning, which entails fine-tuning the LLM with a few samples for a novel problem. This approach lessens the reliance on large labeled datasets by allowing models to swiftly adapt and produce precise predictions for new classes with a small number of labeled samples. This method is beneficial when gathering a sizable amount of labeled data for new classes takes time and effort. Here is an example (Figure 5) of few-shot:

Few Shot vs Zero Shot

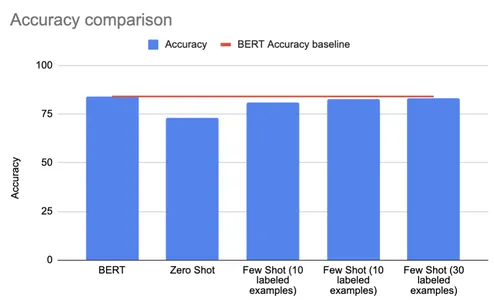

How much does few-shot improve performance? While both few-shot and zero-shot have shown good performance on anecdotal examples, few-shot has higher overall performance. As the table below shows, we can improve accuracy on the task at hand by providing a few high-quality examples, including borderline and critical cases, when prompting generative AI models. Performance improves as the number of few-shot examples in the prompt grows. Few-shot performance was evaluated on a randomly selected subset of the airline sentiment dataset for each case, and the performance numbers have been rounded.

| Model | Accuracy | F1 Score | Precision | Recall |
| --- | --- | --- | --- | --- |
| Fine-tuned BERT | 84% | 79% | 80% | 79% |
| ChatGPT (Few-shot 10 examples) [Source] | 80.8% | 76% | 74% | 79% |
| ChatGPT (Few-shot 20 examples) [Source] | 82.8% | 79% | 77% | 81% |
| ChatGPT (Few-shot 30 examples) [Source] | 83% | 79% | 77% | 81% |

Based on the evaluation metrics in the table above, few-shot beats zero-shot by a notable margin of 10 percentage points on accuracy and 7 percentage points on F1 score, and performs on par with the fine-tuned BERT model. Another key observation is that the improvements stagnate after 20 examples. The example we have covered in our analysis is a particular use case of ChatGPT on the Twitter US Airline Sentiment Dataset. Let us look at another example to understand whether our observations hold across more tasks and generative AI models.
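The margins quoted above follow directly from the two tables. As a quick worked check, the snippet below recomputes them from the reported scores; the variable names are arbitrary, and the numbers are copied from the tables rather than re-measured.

```python
# Scores (in %) copied from the zero-shot and few-shot tables in this article
zero_shot = {"accuracy": 73, "f1": 72}
few_shot_30 = {"accuracy": 83, "f1": 79}
fine_tuned_bert = {"accuracy": 84, "f1": 79}

# Gain of few-shot (30 examples) over zero-shot, in percentage points
gain_over_zero_shot = {m: few_shot_30[m] - zero_shot[m] for m in zero_shot}

# Remaining gap between fine-tuned BERT and few-shot, in percentage points
gap_to_bert = {m: fine_tuned_bert[m] - few_shot_30[m] for m in zero_shot}

# Marginal accuracy gain for each additional batch of ten examples
accuracy_by_examples = {10: 80.8, 20: 82.8, 30: 83.0}
counts = sorted(accuracy_by_examples)
marginal_gain = {
    f"{a}->{b}": round(accuracy_by_examples[b] - accuracy_by_examples[a], 1)
    for a, b in zip(counts, counts[1:])
}

print(gain_over_zero_shot)  # {'accuracy': 10, 'f1': 7}
print(gap_to_bert)          # {'accuracy': 1, 'f1': 0}
print(marginal_gain)        # {'10->20': 2.0, '20->30': 0.2}
```

The last line makes the stagnation concrete: going from 10 to 20 examples adds 2.0 points of accuracy, while going from 20 to 30 adds only 0.2.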

Language Models: Few Shot Learners

Below (Figure 6) is an example from the studies described in the paper “Language Models are Few-Shot Learners”, comparing the performance of GPT-3 in the few-shot, one-shot, and zero-shot settings. The performance is measured on the LAMBADA benchmark (target word prediction). The uniqueness of LAMBADA lies in its focus on evaluating a model’s ability to handle long-range dependencies in text, which are situations where a considerable distance separates a piece of information from its relevant context. Few-shot beats zero-shot by a notable margin of about 10 percentage points on accuracy (86.4% versus 76.2% in the paper's reported results).

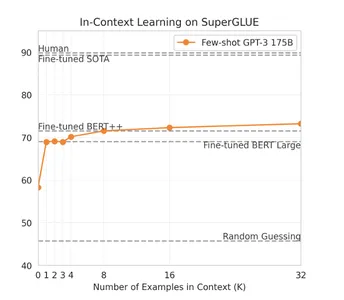

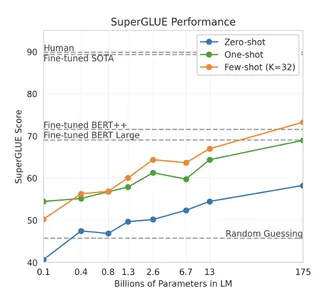

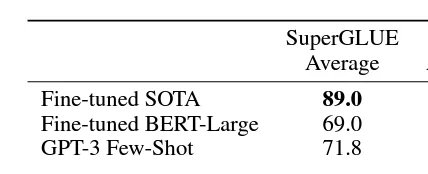

In another example covered in the above-mentioned paper, the performance of GPT-3 with different numbers of examples in the prompt is compared against a fine-tuned BERT model on the SuperGLUE benchmark. SuperGLUE is considered a key benchmark for evaluating performance on language understanding ML tasks. The graph (Figure 7) shows that the first eight examples have the most impact. As we add more examples for few-shot prompting, we hit a wall where we need to increase the number of examples exponentially to see a notable improvement. This replicates what we observed in our sentiment classification example.

Zero-shot should be considered only in scenarios where labeled data is missing. If we get a few labeled examples, we can achieve great performance wins using few-shot compared to zero-shot. A lingering question is how well these techniques perform when compared against more sophisticated techniques like fine-tuning. There have been several well-developed LLM fine-tuning techniques recently, and their usage cost has also been greatly reduced. Why should one not just fine-tune their models? In the upcoming sections, we will look deeper into comparing the prompting techniques against fine-tuned models.

Few-shot Prompting vs Fine-Tuning

The main benefit of few-shot prompting with generative LLMs is how simple it is to implement: collect a few labeled examples, prepare the prompt, run inference, and we are done. Even with several modern innovations, fine-tuning is bulky to implement and needs a lot of training time and resources. For a few particular instances, we can use the different generative LLM UIs to get the results. For inference on a larger dataset, the code would be something as simple as:

```python
import os

import openai  # legacy SDK interface (openai<1.0), as in the original example

openai.api_key = os.getenv("OPENAI_API_KEY")

# Placeholder data for illustration only; replace with your own datasets
labeled_dataset = [
    {"text": "The room was spotless and the staff were lovely.", "label": "positive"},
    {"text": "Dirty sheets and a rude receptionist.", "label": "negative"},
]
unlabeled_dataset = ["Great location, but the walls were paper thin."]

messages = []

# Build the few-shot message: task, classes, context, then labeled examples
few_shot_message = "Task: Sentiment Classification\n"
few_shot_message += "Classes: positive, negative\n"
few_shot_message += "Context: We want to classify sentiment of hotel reviews\n"
few_shot_message += "Labeled Examples:\n"
for labeled_data in labeled_dataset:
    few_shot_message += "Text: " + labeled_data["text"] + "\n"
    few_shot_message += "Label: " + labeled_data["label"] + "\n"

# Call the OpenAI API for ChatGPT, providing the few-shot examples
messages.append({"role": "user", "content": few_shot_message})
chat = openai.ChatCompletion.create(model="gpt-3.5-turbo", messages=messages)

for data in unlabeled_dataset:
    # Add the text to classify, followed by the instruction
    message = "Text: " + data + ", "
    message += "Prompt: Classify the given text into one of the sentiment categories."
    messages.append({"role": "user", "content": message})

    # Call the OpenAI API for ChatGPT for classification
    chat = openai.ChatCompletion.create(model="gpt-3.5-turbo", messages=messages)
    reply = chat.choices[0].message.content
    print(f"ChatGPT: {reply}")
    messages.append({"role": "assistant", "content": reply})
```
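The replies printed by the loop above are free-form text, so computing metrics on them requires mapping each reply to a class label first. The helper below is one possible sketch of that step; `parse_label` is a hypothetical name, not part of any library, and the keyword matching is a deliberately simple assumption that ignores negation such as "not positive".

```python
import re


def parse_label(reply, classes=("positive", "negative"), default="unknown"):
    """Map a free-form model reply to exactly one class label, else a default."""
    pattern = r"\b(" + "|".join(re.escape(c) for c in classes) + r")\b"
    mentioned = set(re.findall(pattern, reply.lower()))
    # Accept the reply only when it names exactly one class unambiguously
    if len(mentioned) == 1:
        return mentioned.pop()
    return default


# Invented replies, only to show the behavior
print(parse_label("Sentiment: Positive."))
print(parse_label("It could be positive or negative."))
print(parse_label("I am not sure."))
```

Replies that fall back to the default can be counted as errors or sent for manual review, depending on how strict the evaluation needs to be.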

Another key benefit of few-shot over fine-tuning is the amount of data required. In the Twitter US Airline Sentiment classification task, BERT fine-tuning was done with over 10,000 examples, whereas few-shot prompting needed only 20 to 50 examples to get similar performance. However, do these performance wins generalize to other language-based ML tasks? The sentiment classification example we have covered is a very specific use case, and few-shot prompting will not match a fine-tuned model for every use case. Still, it shows similar or better capability across a wide variety of language tasks. To show the power of few-shot prompting, we compare its performance with SOTA and fine-tuned language models like BERT on standardized language understanding, translation, and QA benchmarks in the sections below. (Source: Language Models are Few-Shot Learners)

Language Understanding

To compare the performance of few-shot and fine-tuning on language understanding tasks, we will look at the SuperGLUE benchmark. SuperGLUE is a language understanding benchmark consisting of classification, text similarity, and natural language inference tasks. The fine-tuned models used for comparison are fine-tuned BERT Large and fine-tuned BERT++, and the generative LLM is GPT-3. The charts (Figure 8 and Figure 9) show that, with a sufficiently large generative LLM, about 32 few-shot examples are enough to beat both fine-tuned BERT++ and fine-tuned BERT Large. The accuracy gain over BERT Large is about 2.8 pp, showcasing the power of few-shot prompting on generative LLMs.

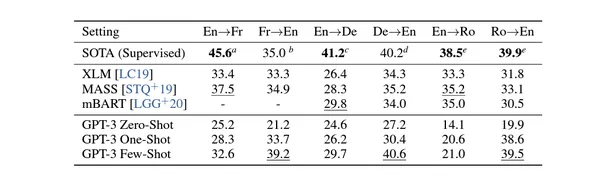

Translation

In the next task, we will compare the performance of few-shot and fine-tuning on translation tasks. We will look at BLEU (Bilingual Evaluation Understudy), a standard metric for translation quality. BLEU computes a score between 0 and 1, where a higher score indicates better translation quality. The main idea behind BLEU is to compare the generated translation against one or more reference translations and measure the extent to which the generated translation contains the same n-grams as the references. The models used for comparison are XLM, MASS, and mBART, and the generative LLM is GPT-3.
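To make the n-gram idea concrete, here is a simplified sentence-level sketch of BLEU. It is not the implementation behind the reported numbers: it assumes a single reference, whitespace tokenization, n-grams up to length 2, and no smoothing, whereas standard BLEU is computed at corpus level with n-grams up to length 4. The function names are illustrative.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count every contiguous n-gram in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def simple_bleu(candidate, reference, max_n=2):
    """Simplified BLEU: clipped n-gram precision, geometric mean, brevity penalty."""
    cand, ref = candidate.split(), reference.split()
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngram_counts(cand, n)
        ref_counts = ngram_counts(ref, n)
        # Clip each candidate n-gram count by its count in the reference
        overlap = sum(min(count, ref_counts[gram]) for gram, count in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # no smoothing in this sketch
        log_precisions.append(math.log(overlap / total))
    # Penalize candidates that are shorter than the reference
    brevity = 1.0 if len(cand) >= len(ref) else math.exp(1 - len(ref) / len(cand))
    return brevity * math.exp(sum(log_precisions) / max_n)


reference = "the cat sat on the mat"
print(simple_bleu("the cat sat on the mat", reference))  # identical text scores 1.0
print(simple_bleu("the cat sat", reference))             # correct but too short
print(simple_bleu("a dog ran", reference))               # no overlap scores 0.0
```

The second call shows why the brevity penalty exists: every n-gram in "the cat sat" appears in the reference, so precision alone would be perfect, and only the length penalty lowers the score.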

As the table in the figure (Figure 10) below shows, few-shot prompting with Generative LLMs with a few examples is enough to beat XLM, MASS, multilingual BART, and even the SOTA for different translation tasks. Few-shot GPT-3 outperforms previous unsupervised Neural Machine Translation work by 5 BLEU when translating into English, reflecting its strength as an English translation language model. However, it is important to note that the model performed poorly on certain translation tasks, like English to Romanian, highlighting its gaps and the need to evaluate the performance case by case.

Question-Answering

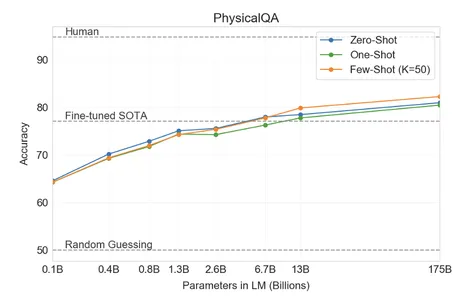

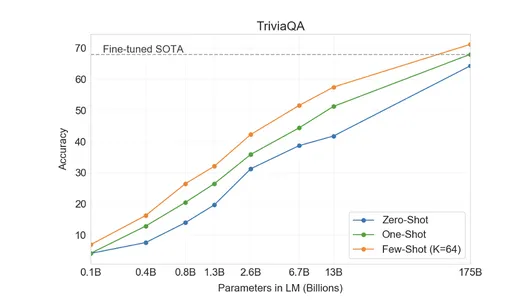

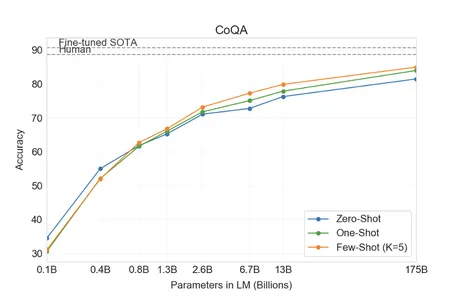

In the final task, we will compare the performance of few-shot and fine-tuning on question-answering tasks. The task name is self-explanatory. We will look at three key benchmarks for QA tasks: PIQA (Physical Interaction: Question Answering), TriviaQA (factual knowledge questions), and CoQA (Conversational Question Answering). The comparison is made against the SOTA for fine-tuned models, and the generative LLM is GPT-3. As the charts (Figure 11, Figure 12, and Figure 13) show, few-shot prompting on generative LLMs with a few examples is enough to beat the fine-tuned SOTA for PIQA and TriviaQA. The model fell short of the fine-tuned SOTA for CoQA but had a fairly similar accuracy.

Limitations of Prompting

The numerous examples and case studies in the sections above show how few-shot can be the go-to solution over fine-tuning for several language-based ML tasks. In most cases, few-shot techniques achieved results better than or close to those of fine-tuned language models. However, it is essential to note that in most niche use cases, domain-specific pre-training would greatly outperform fine-tuning [Source] and, consequently, prompting techniques. This limitation cannot be solved at the prompt design level and would need substantial strides in generalized LLM development.

Another fundamental limitation is hallucination in generative LLMs. Generalist LLMs are prone to hallucinations, in part because they are often geared heavily toward creative writing. This is another reason domain-specific LLMs are more precise and perform better on their field-specific benchmarks.

Lastly, using generalized LLMs like Chat GPT and GPT-4 will have higher privacy risks than fine-tuned or domain-specific models, for which we can build our model instance. This is a concern, especially for use cases depending on proprietary or sensitive user data.

Conclusion

Prompting techniques have become a bridge between LLMs and practical language-based ML tasks. Zero-shot, requiring no prior labeled data, showcases the potential of these models to generalize and adapt to new problems. However, it fails to match the performance of fine-tuning. Numerous examples and benchmark comparisons show that few-shot prompting offers a compelling alternative to fine-tuning across a range of tasks. By presenting a few labeled examples within prompts, these techniques enable models to adapt swiftly to new classes with minimal labeled data. Moreover, the performance data listed in the sections above suggests that moving existing solutions to few-shot prompting with generative LLMs is a worthwhile investment. Running experiments with the approaches mentioned in this article will improve the chances of achieving your targets using prompting techniques.

Key Takeaways

- Prompting Techniques Enable Practical Use: Prompting techniques are a powerful bridge between generative LLMs and practical language-based machine learning tasks. Zero-shot prompting allows models to generalize without labeled data, while few-shot leverages several examples to adapt quickly. These techniques simplify deployment, offering a pathway for effective utilization.

- Few-shot performs better than zero-shot: Few-shot offers better performance by providing the LLM with targeted guidance through labeled examples. It allows the model to utilize its pre-trained knowledge while benefiting from minimal task-specific examples, resulting in more accurate and relevant responses for the given task.

- Few-Shot Prompting Competes with Fine-Tuning: Few-shot is a promising alternative to fine-tuning. Few-shot achieves similar or better performance across classification, language understanding, translation, and question-answering tasks by providing labeled examples within prompts. It especially excels in scenarios where labeled data is scarce.

- Limitations and Considerations: While generative LLMs and prompting techniques have several benefits, domain-specific pre-training is still the way for specialized tasks. Also, privacy risks associated with generalized LLMs underscore the need to handle sensitive data carefully.

Frequently Asked Questions

A: Generative LLMs are advanced AI systems like GPT-3.5, GPT-4, and BARD designed to understand and generate human-like text. They are employed in AI applications, like creative writing, question answering, and critical analysis.

A: Zero-shot involves using LLMs for new tasks without prior labeled data. Few-shot employs a few labeled examples in prompts to quickly adapt models to new tasks. These techniques simplify deploying LLMs for real-world language-based machine learning tasks.

A: While zero-shot and few-shot are potent techniques, few-shot offers better performance by providing the LLM with targeted guidance through labeled examples. It allows the model to utilize its pre-trained knowledge while benefiting from minimal task-specific examples, resulting in more accurate and relevant responses for the given task.

A: Few-shot has shown great performance gains, often surpassing or closely matching fine-tuned models across different tasks. With just a few labeled examples, few-shot can deliver similar results while being simpler to implement.

A: While powerful, generative LLMs may struggle with domain-specific tasks that need deep contextual understanding. Additionally, privacy concerns arise when using generalized LLMs, especially for sensitive data, making careful handling essential.

References

- Tom B. Brown et al., "Language Models are Few-Shot Learners," in Proceedings of the 34th International Conference on Neural Information Processing Systems (NIPS’20), 2020.

- https://www.kaggle.com/datasets/crowdflower/twitter-airline-sentiment

- https://www.kaggle.com/code/sdfsghdhdgresa/sentiment-analysis-using-bert-distillation

- https://github.com/Deepanjank/OpenAI/blob/main/open_ai_sentiment_few_shot.py

- https://www.analyticsvidhya.com/blog/2023/08/domain-specific-llms/

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.

- Source: https://www.analyticsvidhya.com/blog/2023/09/power-of-llms-zero-shot-and-few-shot-prompting/