The increasing demands of data-intensive applications necessitate more efficient storage and memory utilization. The rapid evolution of AI workloads, particularly with Generative AI (GenAI), demands infrastructure that can adapt to diverse computational needs. AI models vary widely in resource requirements, necessitating different optimization strategies for real-time and batch inference. Open infrastructure platforms, leveraging modular design and open standards, provide the flexibility to support various AI applications. Addressing scalability and performance bottlenecks, and sustaining continuous innovation, is crucial for building systems that can handle the growing complexity and demands of AI workloads effectively.

Alphawave Semi recently sponsored a webinar on the topic of driving data frontiers using PCIe and CXL technologies, with Dave Kulansky, Director of Product Marketing, hosting the live session. Dave’s talk covered the motivation, the traditional and optical architectural configurations, the various components of the solution, the challenges of implementing optical interfaces, and the collaboration needed to drive innovation.

Why PCIe and CXL for Driving Data Frontiers?

The evolution of PCIe (Peripheral Component Interconnect Express) and the advent of CXL (Compute Express Link) are pivotal in pushing the boundaries of data handling and processing in modern computing infrastructures. Designed to support high data transfer rates, PCIe and CXL enable rapid data movement essential for applications like AI/ML training, big data analytics, and real-time processing. PCIe’s scalable architecture and CXL’s enhanced functionality for coherent memory sharing and accelerator integration offer flexible system designs.

Both technologies prioritize power efficiency and robustness, with advanced error correction ensuring data integrity. CXL further enhances performance by reducing latency, enabling efficient memory utilization, and supporting virtualization and composable infrastructure, making these technologies indispensable for data-intensive and latency-sensitive applications.

Additionally, disaggregated infrastructure models, enabled by CXL’s low latency and high bandwidth, decouple compute, storage, and memory resources, allowing for modular scalability and optimized resource management. These advancements lead to enhanced performance and flexibility in data centers, high-performance computing, and cloud computing environments, ensuring efficient handling of dynamic workloads and large datasets while reducing operational costs.

Linear Pluggable Optics (LPO): A Key Advancement in High-Speed Networking

Linear Pluggable Optics (LPO) are crucial for meeting the escalating demands for higher bandwidth and efficiency in data centers and high-speed networking environments. LPO modules support data rates from 100 Gbps to 400 Gbps and beyond, leveraging PAM4 modulation, which carries two bits per symbol and so doubles the data rate of NRZ signaling at the same symbol rate, while maintaining power efficiency. Their pluggable form factor allows for easy integration and scalability, enabling network operators to upgrade systems with minimal disruption. LPO modules are compatible with a wide range of existing network hardware, easing adoption. Their reliability and low latency make them well suited to data centers, telecommunications, cloud computing, and emerging technologies like AI and machine learning, providing a robust solution for evolving data transmission needs.
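
The PAM4 arithmetic can be illustrated with a short calculation. This is a minimal sketch, not something shown in the webinar: the function name `bit_rate_gbps` and the 50 GBaud symbol rate are assumptions chosen for illustration, and the figure is a raw line rate that ignores coding and protocol overhead.

```python
import math


def bit_rate_gbps(symbol_rate_gbaud: float, levels: int) -> float:
    """Raw line rate = symbol rate x bits per symbol (log2 of the level count)."""
    bits_per_symbol = math.log2(levels)
    return symbol_rate_gbaud * bits_per_symbol


# Illustrative symbol rate only (an assumption, not a figure from the webinar).
baud = 50.0

nrz = bit_rate_gbps(baud, levels=2)   # NRZ: 2 amplitude levels, 1 bit per symbol
pam4 = bit_rate_gbps(baud, levels=4)  # PAM4: 4 amplitude levels, 2 bits per symbol

print(f"NRZ:  {nrz:.0f} Gbps")
print(f"PAM4: {pam4:.0f} Gbps")
```

The doubling comes at a cost: four levels share the same amplitude swing, so the spacing between adjacent levels shrinks, which is one reason signal integrity features so prominently in the challenges discussed later.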

Energy-Efficient Accelerator Card

Energy-efficient solutions are key, particularly for data center networking, AI/ML workloads, and disaggregated infrastructure. An energy-efficient accelerator card, utilizing advanced electrical and optical interfaces, can significantly reduce power consumption while maintaining high performance. This card can integrate low-latency PCIe switches, potentially with optical interfaces, to enhance connectivity, support memory expansion, and optimize bandwidth.

The accelerator card approach offers scalability, resource efficiency, and accelerated processing, benefiting data centers and AI/ML applications by reducing energy costs and improving performance. Its hybrid electrical-optical design balances short-distance and long-distance data transfers, ensuring adaptability across various deployment scenarios. Smart power management and efficient thermal solutions further enhance its energy efficiency, making it a vital component for sustainable, scalable, and flexible computing environments.

Implementation Challenges

Implementing optical interfaces poses significant challenges, including the lack of standardization, nonlinearities of optical components, complexity in system-level analysis, and signal integrity optimization. Key issues include the absence of standardized optical interfaces from bodies like OIF and PCI-SIG, the nonlinear behavior of optical transmitters, the lack of comprehensive analysis tools for optical channels, and the need for optimized host setups and Continuous-Time Linear Equalization (CTLE) for signal integrity. An additional complication is that the original specifications did not anticipate current use cases, so they must be adapted carefully.

Addressing these challenges requires collaborative efforts to establish standards, advanced modeling and simulation tools, innovative signal processing techniques, and interdisciplinary collaboration.

Testing Approach

Transmitter and Dispersion Eye Closure Quaternary (TDECQ) and Bit Error Rate (BER) are key metrics for assessing optical communication links. An iterative testing approach can help refine optical interface implementations, ultimately leading to more efficient, reliable computing systems with enhanced connectivity. For example, first focus on minimizing TDECQ by adjusting transmitter (TX) settings such as laser bias, modulation current, pre-emphasis, de-emphasis, and pulse shaping, while maintaining optimal operating temperatures. Continuous monitoring and a feedback loop ensure these settings remain optimal.

Next, focus on reducing BER by optimizing receiver (RX) settings, including Clock and Data Recovery (CDR), Continuous-Time Linear Equalization (CTLE), Decision Feedback Equalization (DFE), Automatic Gain Control (AGC), and FIR filters.
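
The two-stage flow described above (TX settings tuned against TDECQ first, then RX settings tuned against BER) can be sketched as follows. This is a rough illustration under stated assumptions, not the procedure presented in the webinar: `measure_tdecq_db` and `measure_ber` are hypothetical stand-ins for instrument readings, their formulas are synthetic, the setting names and value ranges are invented, and an exhaustive grid sweep is swapped in for whatever search a real bench setup would use.

```python
from itertools import product


def measure_tdecq_db(bias_ma, pre_emphasis_db):
    """Hypothetical stand-in for a scope reading (synthetic bowl-shaped model)."""
    return 1.0 + 0.02 * (bias_ma - 40) ** 2 + 0.5 * (pre_emphasis_db - 2) ** 2


def measure_ber(ctle_db, dfe_taps):
    """Hypothetical stand-in for a BER tester reading (synthetic model)."""
    return 1e-12 * 10 ** (0.3 * abs(ctle_db - 6) + 0.5 * abs(dfe_taps - 3))


def best_setting(measure, grid):
    """Sweep every combination in the grid; return (lowest metric, settings)."""
    return min((measure(*settings), settings) for settings in product(*grid))


# Stage 1: tune TX settings to minimize TDECQ (values are illustrative).
tx_grid = ([30, 35, 40, 45, 50], [0, 1, 2, 3, 4])  # laser bias (mA), pre-emphasis (dB)
tdecq, tx = best_setting(measure_tdecq_db, tx_grid)

# Stage 2: with TX fixed, tune RX settings to minimize BER (values are illustrative).
rx_grid = ([2, 4, 6, 8, 10], [1, 2, 3, 4, 5])  # CTLE peaking (dB), DFE tap count
ber, rx = best_setting(measure_ber, rx_grid)

print(f"TX settings {tx} give TDECQ {tdecq:.2f} dB")
print(f"RX settings {rx} give BER {ber:.1e}")
```

In this toy model the two stages are independent. On real hardware the RX measurement depends on the TX settings chosen in the first stage, and both drift with temperature, which is why the text calls for continuous monitoring and a feedback loop rather than a one-time sweep.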

Summary

Success hinges on ecosystem support, involving collaboration among stakeholders, standards bodies, and industry players to drive innovation.

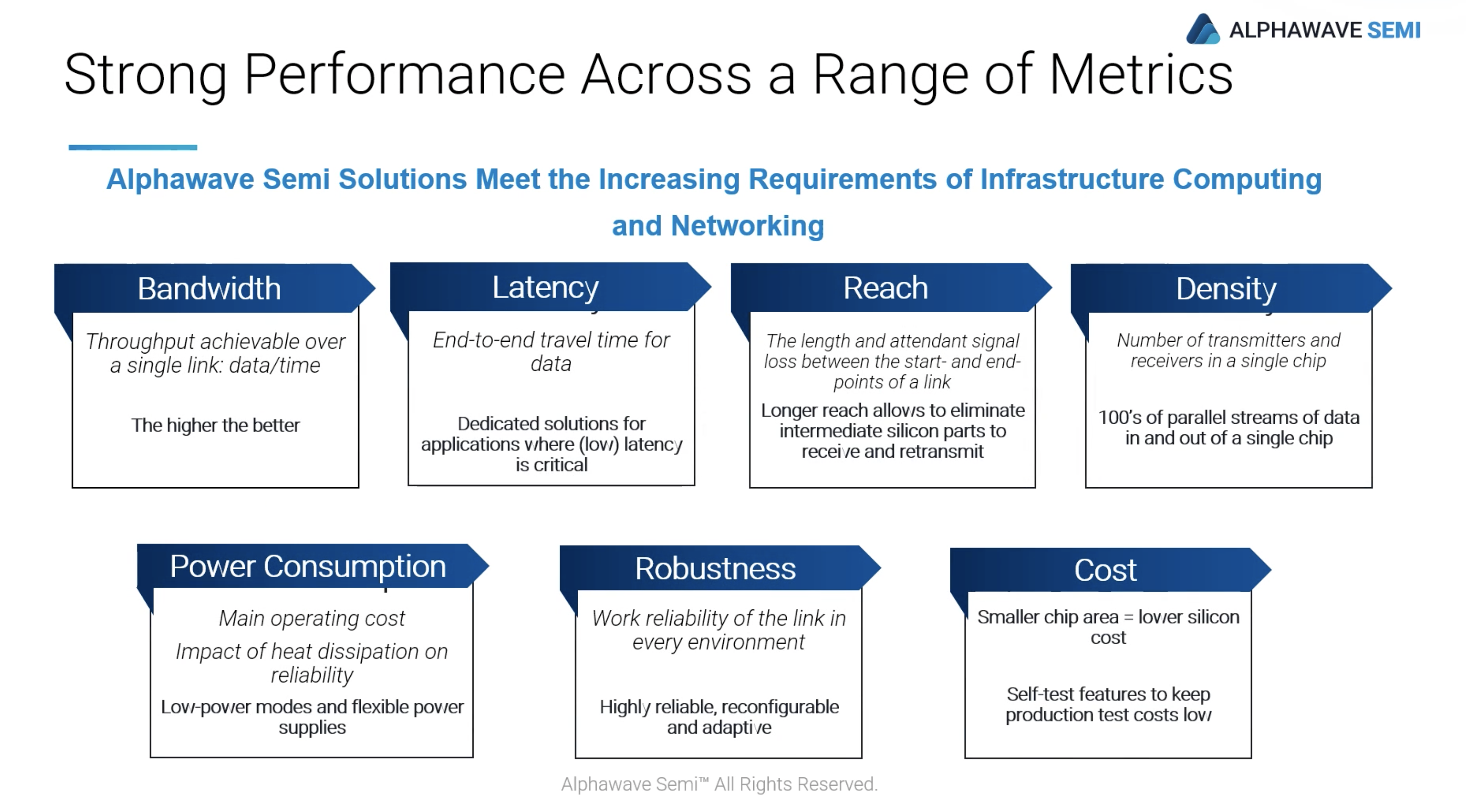

Alphawave Semi collaborates with a broad range of industry bodies and players, and its solutions deliver strong performance on various metrics.

Direct drive optical interconnects at 64 Gbps appear feasible, offering a straightforward high-speed data transmission solution without retiming. However, scaling to 128 Gbps may introduce signal integrity and timing challenges, potentially requiring retiming to ensure reliability. Navigating these challenges underscores the importance of coordinated efforts to balance practicality and performance as data rates rise.

Learn more about Alphawave Semi’s PCIe-CXL subsystems.

The webinar is available on demand.

- Source: https://semiwiki.com/artificial-intelligence/345986-driving-data-frontiers-high-performance-pcie-and-cxl-in-modern-infrastructures/