Hundreds of thousands of organizations build data integration pipelines to extract and transform data. They establish data quality rules to ensure the extracted data is of high quality for accurate business decisions. These rules assess the data based on fixed criteria reflecting current business states. However, when the business environment changes, data properties shift, rendering these fixed criteria outdated and causing poor data quality.

For example, a data engineer at a retail company established a rule that validates daily sales must exceed a 1-million-dollar threshold. After a few months, daily sales surpassed 2 million dollars, rendering the threshold obsolete. The data engineer couldn’t update the rules to reflect the latest thresholds due to lack of notification and the effort required to manually analyze and update the rule. Later in the month, business users noticed a 25% drop in their sales. After hours of investigation, the data engineers discovered that an extract, transform, and load (ETL) pipeline responsible for extracting data from some stores had failed without generating errors. The rule with outdated thresholds continued to operate successfully without detecting this issue. The ordering system that used the sales data placed incorrect orders, causing low inventory for future weeks. What if the data engineer had the ability to set up dynamic thresholds that automatically adjusted as business properties changed?

We are excited to talk about how to use dynamic rules, a new capability of AWS Glue Data Quality. You can now define dynamic rules and not worry about regularly updating static rules to adapt to varying data trends. This feature enables you to author dynamic rules that compare the current metrics produced by your rules with their historical values. These historical comparisons are enabled by using the last(k) operator in expressions. For example, instead of writing a static rule like RowCount > 1000, which might become obsolete as data volume grows over time, you can replace it with a dynamic rule like RowCount > min(last(3)). This dynamic rule will succeed when the number of rows in the current run is greater than the minimum row count from the most recent three runs for the same dataset.

This is part 7 of a seven-part series of posts that explain how AWS Glue Data Quality works.

Previous posts explain how to author static data quality rules. In this post, we show how to create an AWS Glue job that measures and monitors the data quality of a data pipeline using dynamic rules. We also show how to take action based on the data quality results.

Solution overview

Let’s consider an example data quality pipeline where a data engineer ingests data from a raw zone and loads it into a curated zone in a data lake. The data engineer is tasked with not only extracting, transforming, and loading data, but also identifying anomalies compared against data quality statistics from historical runs.

In this post, you’ll learn how to author dynamic rules in your AWS Glue job in order to take appropriate actions based on the outcome.

The data used in this post is sourced from NYC yellow taxi trip data. The yellow taxi trip records include fields capturing pickup and dropoff dates and times, pickup and dropoff locations, trip distances, itemized fares, rate types, payment types, and driver-reported passenger counts. The following screenshot shows an example of the data.

Set up resources with AWS CloudFormation

This post includes an AWS CloudFormation template for a quick setup. You can review and customize it to suit your needs.

The CloudFormation template generates the following resources:

- An Amazon Simple Storage Service (Amazon S3) bucket (gluedataqualitydynamicrules-*)

- An AWS Lambda function that creates the following folder structure within the S3 bucket:

  - raw-src/

  - landing/nytaxi/

  - processed/nytaxi/

  - dqresults/nytaxi/

- AWS Identity and Access Management (IAM) users, roles, and policies. The IAM role GlueDataQuality-* has AWS Glue run permission as well as read and write permission on the S3 bucket.

To create your resources, complete the following steps:

- Sign in to the AWS CloudFormation console in the us-east-1 Region.

- Choose Launch Stack:

- Select I acknowledge that AWS CloudFormation might create IAM resources.

- Choose Create stack and wait for the stack creation step to complete.

Upload sample data

- Download the dataset to your local machine.

- Unzip the file and extract the Parquet files into a local folder.

- Upload the Parquet files under the prefix raw-src/ in the Amazon S3 bucket (gluedataqualitydynamicrules-*).

Implement the solution

To start configuring your solution, complete the following steps:

- On the AWS Glue Studio console, choose ETL Jobs in the navigation pane and choose Visual ETL.

- Navigate to the Job details tab to configure the job.

- For Name, enter GlueDataQualityDynamicRules.

- For IAM Role, choose the role starting with GlueDataQuality-*.

- For Job bookmark, choose Enable.

This allows you to run this job incrementally. To learn more about job bookmarks, refer to Tracking processed data using job bookmarks.

- Leave all the other settings as their default values.

- Choose Save.

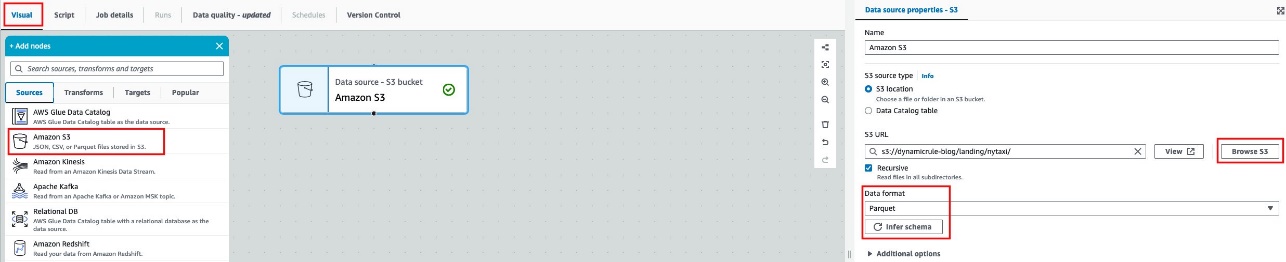

- After the job is saved, navigate to the Visual tab and on the Sources menu, choose Amazon S3.

- In the Data source properties – S3 pane, for S3 source type, select S3 location.

- Choose Browse S3 and navigate to the prefix /landing/nytaxi/ in the S3 bucket starting with gluedataqualitydynamicrules-*.

- For Data format, choose Parquet and choose Infer schema.

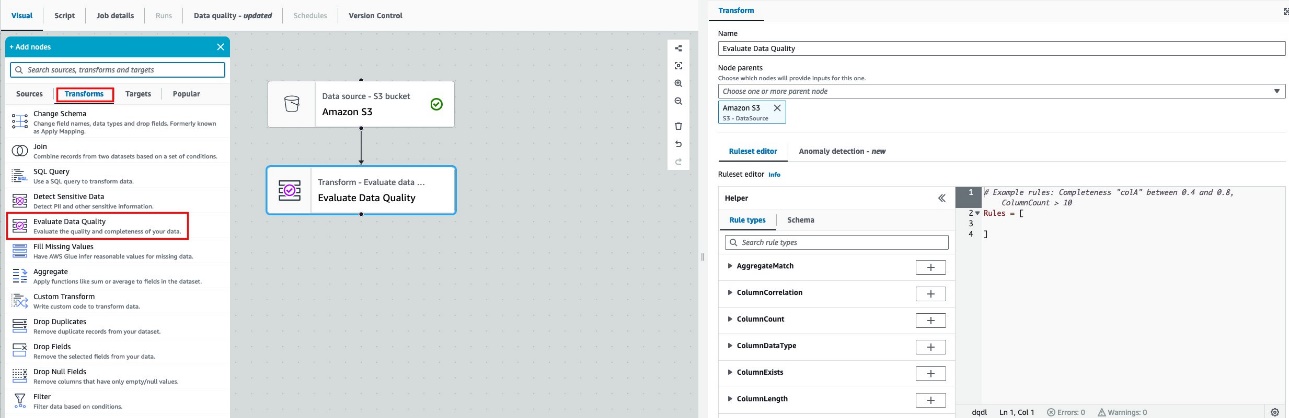

- On the Transforms menu, choose Evaluate Data Quality.

You now implement validation logic in your process to identify potential data quality problems originating from the source data.

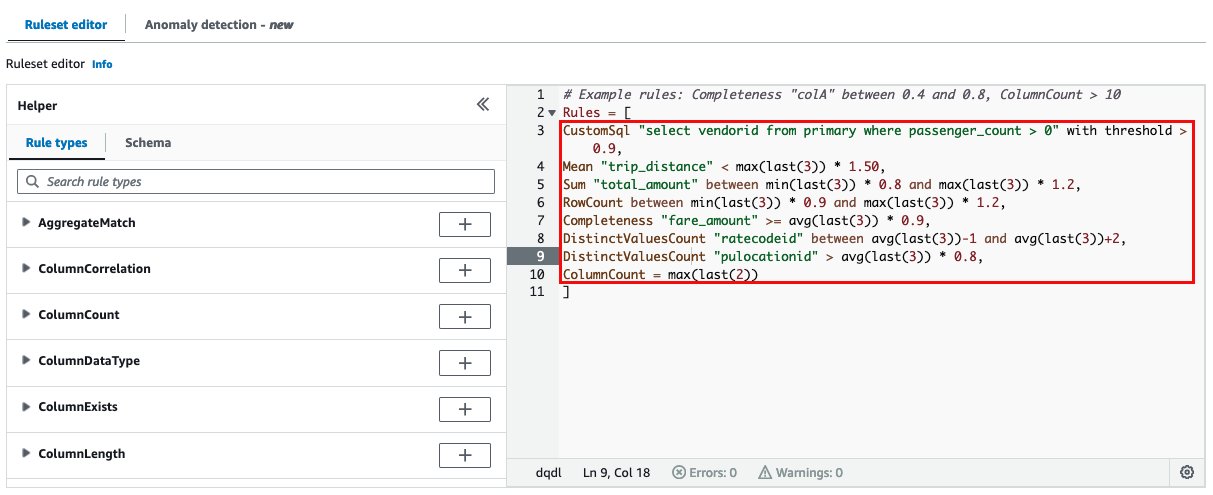

- To accomplish this, specify the following DQDL rules on the Ruleset editor tab:

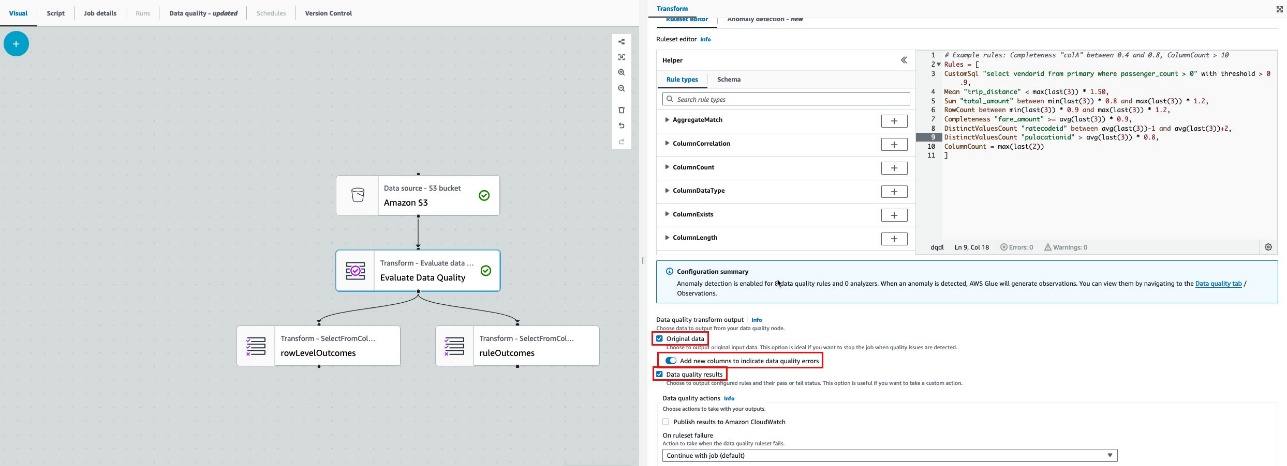

- Select Original data to output the original input data from the source and add a new node below the Evaluate Data Quality node.

- Choose Add new columns to indicate data quality errors to add four new columns to the output schema.

- Select Data quality results to capture the status of each rule configured and add a new node below the Evaluate Data Quality node.

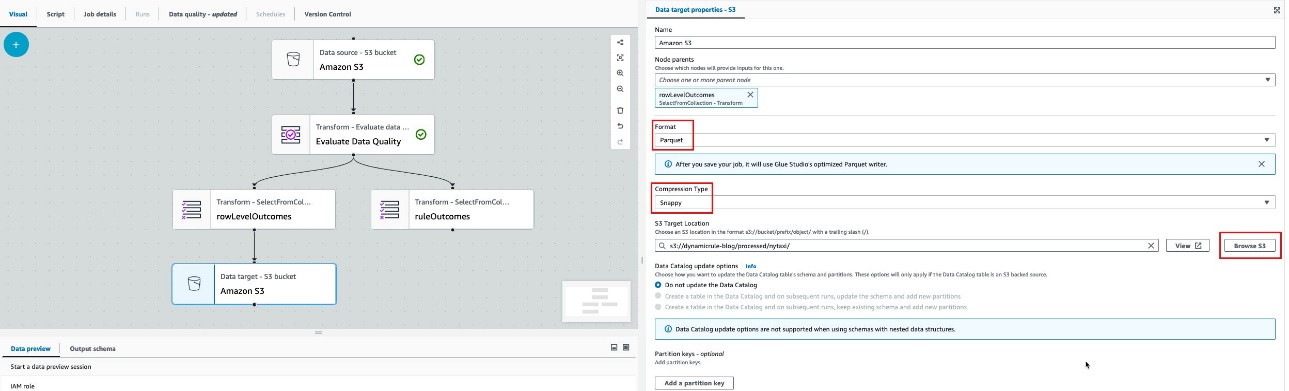

- With the rowLevelOutcomes node selected, choose Amazon S3 on the Targets menu.

- Configure the S3 target location to /processed/nytaxi/ under the bucket name starting with gluedataqualitydynamicrules-* and set the output format to Parquet and compression type to Snappy.

- With the ruleOutcomes node selected, choose Amazon S3 on the Targets menu.

- Configure the S3 target location to /dqresults/ under the bucket name starting with gluedataqualitydynamicrules-*.

- Set the output format to Parquet and compression type to Snappy.

- Choose Save.

Up to this point, you have set up an AWS Glue job, specified dynamic rules for the pipeline, and configured the target location for both the original source data and AWS Glue Data Quality results to be written on Amazon S3. Next, let’s examine dynamic rules and how they function, and provide an explanation of each rule we used in our job.

Dynamic rules

You can now author dynamic rules to compare current metrics produced by your rules with their historical values. These historical comparisons are enabled by using the last() operator in expressions. For example, the rule RowCount > max(last(1)) will succeed when the number of rows in the current run is greater than the most recent prior row count for the same dataset. last() takes an optional natural number argument describing how many prior metrics to consider; last(k) where k >= 1 will reference the last k metrics. The rule has the following conditions:

- If no data points are available, last(k) will return the default value 0.0.

- If fewer than k metrics are available, last(k) will return all prior metrics.

For example, if the values from previous runs are (5, 3, 2, 1, 4), taken as listed from most recent to oldest, max(last(3)) will return 5.
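The two conditions and the example above can be sketched in a few lines of Python. This is only an illustrative model of the semantics described here, not the AWS Glue implementation. The helper name `last` mirrors the DQDL operator, and the ordering of the history (most recent first) is an assumption chosen so that the example returns 5.

```python
def last(history, k=1):
    """Return up to k of the most recent prior metric values.

    Assumption: `history` is ordered most recent first.
    """
    if k < 1:
        raise ValueError("k must be a natural number (k >= 1)")
    recent = list(history[:k])
    # No prior data points: fall back to the default value 0.0.
    return recent if recent else [0.0]


previous_runs = [5, 3, 2, 1, 4]  # most recent first (assumption)

print(max(last(previous_runs, 3)))  # max of the three most recent values: 5, 3, 2
print(last([7, 9], 3))              # fewer than k metrics: all prior metrics are used
print(max(last([], 3)))             # no history yet: the default value 0.0
```

Returning a one-element list for the empty case keeps `min`, `max`, and an average well defined on the first run, which matches the default of 0.0 described above.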

AWS Glue supports over 15 types of dynamic rules, providing a robust set of data quality validation capabilities. For more information, refer to Dynamic rules. This section demonstrates several rule types to showcase the functionality and enable you to apply these features in your own use cases.

CustomSQL

The CustomSQL rule provides the capability to run a custom SQL statement against a dataset and check the return value against a given expression.

The following example rule uses a SQL statement in which you select a column and apply a condition to it to get row-level results. A threshold condition expression defines how many records must pass for the entire rule to pass. In this example, more than 90% of records should contain passenger_count greater than 0 for the rule to pass:

Note: CustomSql also supports dynamic rules. The following is an example of how to use it in your job:

CustomSql "select count(*) from primary" between min(last(3)) * 0.9 and max(last(3)) * 1.2
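To make the bounds in a rule of this shape concrete, the following sketch computes `min(last(3)) * 0.9` and `max(last(3)) * 1.2` for a made-up history and checks a current value against them. The function name `dynamic_between`, the row counts, and the strict (exclusive) comparison are assumptions for illustration only.

```python
def dynamic_between(current, history, low_factor, high_factor, k=3):
    """Evaluate: current between min(last(k)) * low_factor and max(last(k)) * high_factor.

    Assumptions: `history` is ordered most recent first, an empty history
    falls back to the default 0.0, and the range is treated as exclusive.
    """
    recent = list(history[:k]) or [0.0]
    lower = min(recent) * low_factor
    upper = max(recent) * high_factor
    return lower < current < upper, lower, upper


# Hypothetical row counts from the three previous runs.
row_counts = [1050, 1100, 1000]

passed, lower, upper = dynamic_between(1200, row_counts, 0.9, 1.2)
print(passed, lower, upper)

# A sharp drop falls below the lower bound and fails the check.
print(dynamic_between(500, row_counts, 0.9, 1.2)[0])
```

Because both bounds are derived from recent runs, the accepted range moves with the data instead of staying pinned to a value chosen when the rule was written.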

Mean

The Mean rule checks whether the mean (average) of all the values in a column matches a given expression.

The following example rule checks that the mean of trip_distance is less than 1.5 times the maximum mean of the trip_distance column over the last three runs:

Sum

The Sum rule checks the sum of all the values in a column against a given expression.

The following example rule checks that the sum of total_amount is between 80% of the minimum of the last three runs and 120% of the maximum of the last three runs:

RowCount

The RowCount rule checks the row count of a dataset against a given expression. In the expression, you can specify the number of rows or a range of rows using operators like > and <.

The following example rule checks if the row count is between 90% of the minimum of the last three runs and 120% of the maximum of the last three runs (excluding the current run). This rule applies to the entire dataset.

Completeness

The Completeness rule checks the percentage of complete (non-null) values in a column against a given expression.

The following example rule checks if the completeness of the fare_amount column is greater than or equal to 90% of the average of the last three runs:

DistinctValuesCount

The DistinctValuesCount rule checks the number of distinct values in a column against a given expression.

The following example rules check two conditions:

- Whether the distinct count for the ratecodeid column is between the average of the last three runs minus 1 and the average of the last three runs plus 2

- Whether the distinct count for the pulocationid column is greater than 80% of the average of the last three runs

ColumnCount

The ColumnCount rule checks the column count of the primary dataset against a given expression. In the expression, you can specify the number of columns or a range of columns using operators like > and <.

The following example rule checks if the column count is equal to the maximum of the last two runs:
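Pulling the rule descriptions in this section together, the ruleset could look roughly like the DQDL text held in the Python string below. This is a reconstruction from the prose, not a copy of the job's ruleset: the exact spelling of each rule is an assumption, and the SELECT statement in the CustomSql rule in particular is a guess at one way to express "more than 90% of records have passenger_count greater than 0".

```python
# Hedged reconstruction of the ruleset from the prose descriptions (assumption).
RULESET = """
Rules = [
    CustomSql "select passenger_count from primary where passenger_count > 0" with threshold > 0.9,
    Mean "trip_distance" < max(last(3)) * 1.5,
    Sum "total_amount" between min(last(3)) * 0.8 and max(last(3)) * 1.2,
    RowCount between min(last(3)) * 0.9 and max(last(3)) * 1.2,
    Completeness "fare_amount" >= avg(last(3)) * 0.9,
    DistinctValuesCount "ratecodeid" between avg(last(3)) - 1 and avg(last(3)) + 2,
    DistinctValuesCount "pulocationid" > avg(last(3)) * 0.8,
    ColumnCount = max(last(2))
]
"""

# Split the ruleset into individual rules for a quick review.
rules = [
    line.strip().rstrip(",")
    for line in RULESET.strip().splitlines()[1:-1]
]

for rule in rules:
    kind = "dynamic" if "last(" in rule else "static"
    print(f"{kind:8} {rule}")
```

Only the first rule uses a fixed threshold; the other seven reference `last(k)`, so their thresholds are recomputed from earlier runs each time the job executes.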

Run the job

Now that the job setup is complete, we are prepared to run it. As previously indicated, dynamic rules are determined using the last(k) operator, with k set to 3 in the configured job. This implies that data quality rules will be evaluated using metrics from the previous three runs. To assess these rules accurately, the job must be run a minimum of k+1 times, requiring a total of four runs to thoroughly evaluate dynamic rules. In this example, we simulate an ETL job with data quality rules, starting with an initial run followed by three incremental runs.

First job (initial)

Complete the following steps for the initial run:

- Navigate to the source data files made available under the prefix /raw-src/ in the S3 bucket starting with gluedataqualitydynamicrules-*.

- To simulate the initial run, copy the day one file 20220101.parquet under /raw-src/ to the /landing/nytaxi/ folder in the same S3 bucket.

- On the AWS Glue Studio console, choose ETL Jobs in the navigation pane.

- Choose GlueDataQualityDynamicRules under Your jobs to open it.

- Choose Run to run the job.

You can view the job run details on the Runs tab. It will take a few minutes for the job to complete.

- After the job completes successfully, navigate to the Data quality - updated tab.
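If you prefer to script the file copy from the second step instead of using the console, a sketch along the following lines could work. It assumes boto3 is installed and credentials are configured. The helper names `copy_args` and `copy_day_file` are hypothetical, and the bucket name must be replaced with the full name of your gluedataqualitydynamicrules-* bucket.

```python
def copy_args(bucket, file_name, src_prefix="raw-src/", dst_prefix="landing/nytaxi/"):
    """Build the arguments for an S3 server-side copy within one bucket."""
    return {
        "Bucket": bucket,                    # destination bucket
        "Key": dst_prefix + file_name,       # destination key
        "CopySource": {"Bucket": bucket, "Key": src_prefix + file_name},
    }


def copy_day_file(bucket, file_name):
    """Copy one day's Parquet file from raw-src/ to landing/nytaxi/."""
    import boto3  # imported here so the helper above works without boto3

    s3 = boto3.client("s3")
    s3.copy_object(**copy_args(bucket, file_name))


# Example call (hypothetical bucket name, not run here):
# copy_day_file("gluedataqualitydynamicrules-example", "20220101.parquet")
```

The same call with 20220102.parquet, 20220103.parquet, and 20220104.parquet would stage the files for the incremental runs.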

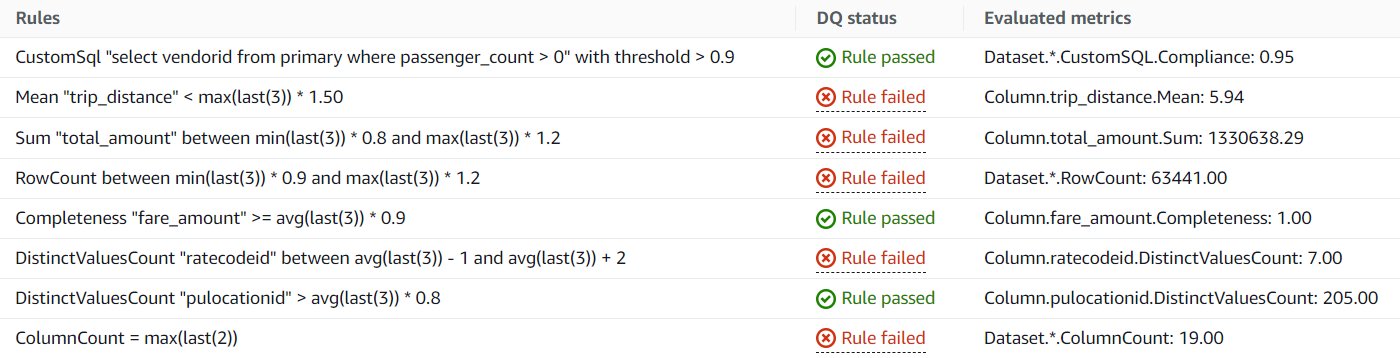

You can observe the Data Quality rules, rule status, and evaluated metrics for each rule that you set in the job. The following screenshot shows the results.

The rule details are as follows:

- CustomSql – The rule passes the data quality check because 95% of records have a passenger_count greater than 0, which exceeds the set threshold of 90%.

- Mean – The rule fails because there are no previous runs. With no data points available, last(3) returns the default value of 0.0, and the overall mean of 5.94 is greater than 0.

- Sum – The rule fails for the same reason as the mean rule, with last(3) resulting in a default value of 0.0.

- RowCount – The rule fails for the same reason as the mean rule, with last(3) resulting in a default value of 0.0.

- Completeness – The rule passes because 100% of records are complete, meaning there are no null values for the fare_amount column.

- DistinctValuesCount “ratecodeid” – The rule fails for the same reason as the mean rule, with last(3) resulting in a default value of 0.0.

- DistinctValuesCount “pulocationid” – The rule passes because the distinct count of 205 for the pulocationid column is higher than the set threshold, which is 0.00 because avg(last(3)) * 0.8 results in 0.

- ColumnCount – The rule fails for the same reason as the mean rule, with last(3) resulting in a default value of 0.0.
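The first-run outcomes follow directly from the 0.0 default. The sketch below replays four of the checks with the values reported above and an empty history. The helper functions are illustrative stand-ins for the DQDL operators, and the column count of 19 is taken from the later runs and assumed to be the same here.

```python
def last(history, k):
    """Up to k most recent prior metrics, or the default 0.0 when there are none."""
    recent = list(history[:k])
    return recent if recent else [0.0]


def avg(values):
    return sum(values) / len(values)


history = []  # first run: no prior metrics exist for any rule

checks = {
    "Mean trip_distance < max(last(3)) * 1.5": 5.94 < max(last(history, 3)) * 1.5,
    "Completeness fare_amount >= avg(last(3)) * 0.9": 1.0 >= avg(last(history, 3)) * 0.9,
    "DistinctValuesCount pulocationid > avg(last(3)) * 0.8": 205 > avg(last(history, 3)) * 0.8,
    "ColumnCount = max(last(2))": 19 == max(last(history, 2)),
}

for rule, passed in checks.items():
    print("PASS" if passed else "FAIL", rule)
```

Rules that require the current value to be at or above a threshold of 0 pass, while rules that require it to be below or equal to 0 fail, which is why the first run is best treated as a baseline rather than a real evaluation.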

Second job (first incremental)

Now that you have successfully completed the initial run and observed the data quality results, you are ready for the first incremental run to process the file from day two. Complete the following steps:

- Navigate to the source data files made available under the prefix /raw-src/ in the S3 bucket starting with gluedataqualitydynamicrules-*.

- To simulate the first incremental run, copy the day two file 20220102.parquet under /raw-src/ to the /landing/nytaxi/ folder in the same S3 bucket.

- On the AWS Glue Studio console, repeat Steps 4–7 from the first (initial) run to run the job and validate the data quality results.

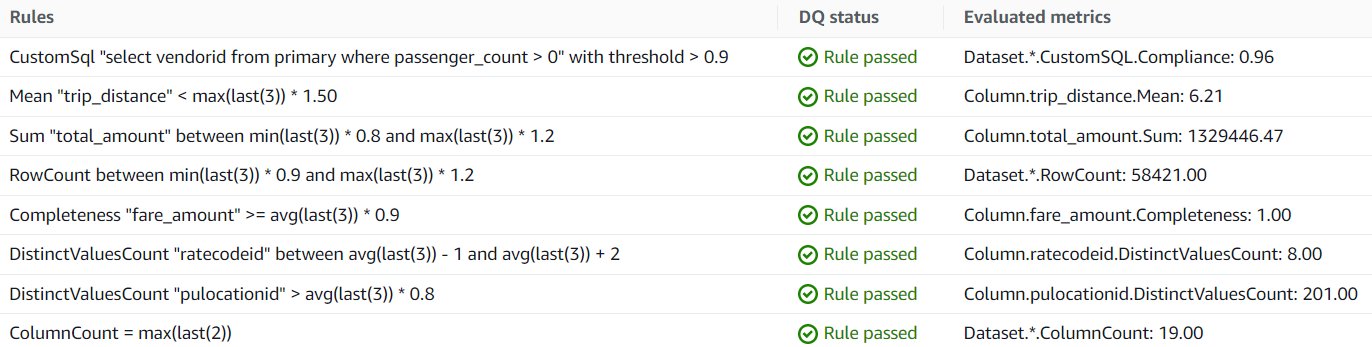

The following screenshot shows the data quality results.

On the second run, all rules passed because each rule’s threshold has been met:

- CustomSql – The rule passed because 96% of records have a passenger_count greater than 0, exceeding the set threshold of 90%.

- Mean – The rule passed because the mean of 6.21 is less than 9.315 (6.21 * 1.5, meaning the mean from max(last(3)) is 6.21, multiplied by 1.5).

- Sum – The rule passed because the sum of the total amount, 1,329,446.47, is between 80% of the minimum of the last three runs, 1,063,557.176 (1,329,446.47 * 0.8), and 120% of the maximum of the last three runs, 1,595,335.764 (1,329,446.47 * 1.2).

- RowCount – The rule passed because the row count of 58,421 is between 90% of the minimum of the last three runs, 52,578.9 (58,421 * 0.9), and 120% of the maximum of the last three runs, 70,105.2 (58,421 * 1.2).

- Completeness – The rule passed because 100% of the records have non-null values for the fare_amount column, exceeding the set threshold of the average of the last three runs times 90%.

- DistinctValuesCount “ratecodeid” – The rule passed because the distinct count of 8 for the ratecodeid column is between the set threshold of 6, which is the average of the last three runs minus 1 ((7)/1 = 7 – 1), and 9, which is the average of the last three runs plus 2 ((7)/1 = 7 + 2).

- DistinctValuesCount “pulocationid” – The rule passed because the distinct count of 201 for the pulocationid column is greater than 80% of the average of the last three runs, 160.8 (201 * 0.8).

- ColumnCount – The rule passed because the number of columns, 19, is equal to the maximum of the last two runs.

Third job (second incremental)

After the successful completion of the first incremental run, you are ready for the second incremental run to process the file from day three. Complete the following steps:

- Navigate to the source data files under the prefix /raw-src/ in the S3 bucket starting with gluedataqualitydynamicrules-*.

- To simulate the second incremental run, copy the day three file 20220103.parquet under /raw-src/ to the /landing/nytaxi/ folder in the same S3 bucket.

- On the AWS Glue Studio console, repeat Steps 4–7 from the first (initial) job to run the job and validate data quality results.

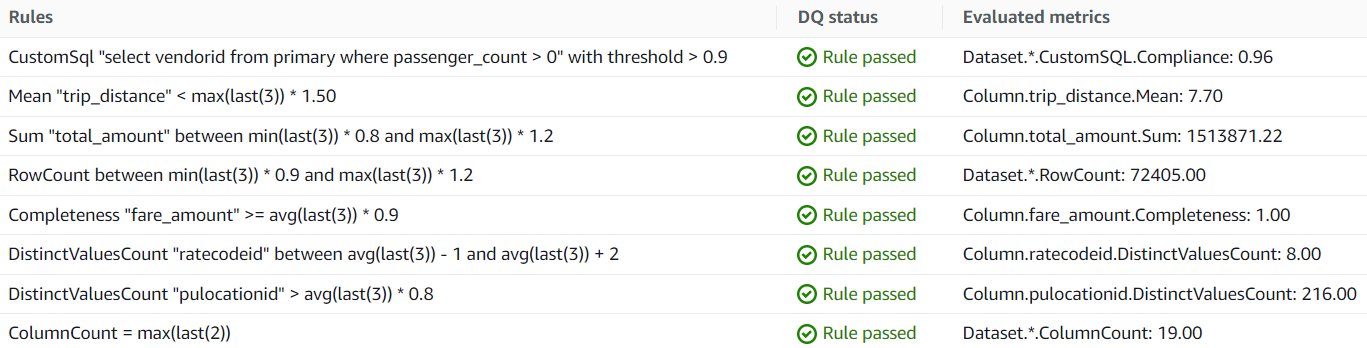

The following screenshot shows the data quality results.

Similar to the second run, the data file from the source didn’t contain any data quality issues. As a result, all of the defined data validation rules were within the set thresholds and passed successfully.

Fourth job (third incremental)

Now that you have successfully completed the first three runs and observed the data quality results, you are ready for the final incremental run for this exercise, to process the file from day four. Complete the following steps:

- Navigate to the source data files under the prefix /raw-src/ in the S3 bucket starting with gluedataqualitydynamicrules-*.

- To simulate the third incremental run, copy the day four file 20220104.parquet under /raw-src/ to the /landing/nytaxi/ folder in the same S3 bucket.

- On the AWS Glue Studio console, repeat Steps 4–7 from the first (initial) job to run the job and validate the data quality results.

The following screenshot shows the data quality results.

In this run, there are some data quality issues from the source that were caught by the AWS Glue job, causing the rules to fail. Let’s examine each failed rule to understand the specific data quality issues that were detected:

- CustomSql – The rule failed because only 80% of the records have a passenger_count greater than 0, which is lower than the set threshold of 90%.

- Mean – The rule failed because the mean of trip_distance is 71.74, which is greater than 1.5 times the maximum of the last three runs, 11.565 (7.70 * 1.5).

- Sum – The rule passed because the sum of total_amount is 1,165,023.73, which is between 80% of the minimum of the last three runs, 1,063,557.176 (1,329,446.47 * 0.8), and 120% of the maximum of the last three runs, 1,816,645.464 (1,513,871.22 * 1.2).

- RowCount – The rule failed because the row count of 44,999 is not between 90% of the minimum of the last three runs, 52,578.9 (58,421 * 0.9), and 120% of the maximum of the last three runs, 88,334.1 (72,405 * 1.2).

- Completeness – The rule failed because only 82% of the records have non-null values for the fare_amount column, which is lower than the set threshold of the average of the last three runs times 90%.

- DistinctValuesCount “ratecodeid” – The rule failed because the distinct count of 6 for the ratecodeid column is not between the set threshold of 6.66, which is the average of the last three runs minus 1 ((8+8+7)/3 = 7.66 – 1), and 9.66, which is the average of the last three runs plus 2 ((8+8+7)/3 = 7.66 + 2).

- DistinctValuesCount “pulocationid” – The rule passed because the distinct count of 205 for the pulocationid column is greater than 80% of the average of the last three runs, 165.86 ((216+201+205)/3 = 207.33 * 0.8).

- ColumnCount – The rule passed because the number of columns, 19, is equal to the maximum of the last two runs.

To summarize the outcome of the fourth run: the rules for Sum and DistinctValuesCount for pulocationid, as well as the ColumnCount rule, passed successfully. However, the rules for CustomSql, Mean, RowCount, Completeness, and DistinctValuesCount for ratecodeid failed to meet the criteria.
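The arithmetic behind the two DistinctValuesCount results can be checked with a short script. It uses the distinct counts quoted above for the three previous runs. The variable names are illustrative, and the exclusive comparison for the range is an assumption.

```python
def avg(values):
    return sum(values) / len(values)


# Distinct counts from the three previous runs, as quoted in the results above.
ratecodeid_history = [8, 8, 7]
pulocationid_history = [216, 201, 205]

# DistinctValuesCount "ratecodeid": between avg(last(3)) - 1 and avg(last(3)) + 2
lower = avg(ratecodeid_history) - 1
upper = avg(ratecodeid_history) + 2
ratecodeid_passed = lower < 6 < upper  # current distinct count is 6

# DistinctValuesCount "pulocationid": greater than avg(last(3)) * 0.8
threshold = avg(pulocationid_history) * 0.8
pulocationid_passed = 205 > threshold  # current distinct count is 205

print(f"ratecodeid: 6 in ({lower:.2f}, {upper:.2f}) -> {ratecodeid_passed}")
print(f"pulocationid: 205 > {threshold:.2f} -> {pulocationid_passed}")
```

The unrounded bounds are about 6.67 and 9.67 for ratecodeid and about 165.87 for pulocationid; the figures in the results above are the same values cut to two decimals, and the pass or fail outcome is unchanged.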

Upon examining the Data Quality evaluation results, further investigation is necessary to identify the root cause of these data quality issues. For instance, in the case of the failed RowCount rule, it’s imperative to ascertain why there was a decrease in record count. This investigation should delve into whether the drop aligns with actual business trends or if it stems from issues within the source system, data ingestion process, or other factors. Appropriate actions must be taken to rectify these data quality issues or update the rules to accommodate natural business trends.

You can expand this solution by implementing and configuring alerts and notifications to promptly address any data quality issues that arise. For more details, refer to Set up alerts and orchestrate data quality rules with AWS Glue Data Quality (Part 4 in this series).

Clean up

To clean up your resources, complete the following steps:

- Delete the AWS Glue job.

- Delete the CloudFormation stack.

Conclusion

AWS Glue Data Quality offers a straightforward way to measure and monitor the data quality of your ETL pipeline. In this post, you learned about authoring a Data Quality job with dynamic rules, and how these rules eliminate the need to update static rules with ever-evolving source data in order to keep the rules current. Data Quality dynamic rules enable the detection of potential data quality issues early in the data ingestion process, before downstream propagation into data lakes, warehouses, and analytical engines. By catching errors upfront, organizations can ingest cleaner data and take advantage of advanced data quality capabilities. The rules provide a robust framework to identify anomalies, validate integrity, and provide accuracy as data enters the analytics pipeline. Overall, AWS Glue dynamic rules empower organizations to take control of data quality at scale and build trust in analytical outputs.

About the Authors

Prasad Nadig is an Analytics Specialist Solutions Architect at AWS. He helps customers architect optimal data and analytical platforms, leveraging the scalability and agility of the cloud. He is passionate about understanding emerging challenges and guiding customers to build modern solutions. Outside of work, Prasad indulges his creative curiosity through photography, while also staying up to date on the latest technology innovations and trends.

Mahammadali Saheb is a Data Architect at AWS Professional Services, specializing in Data Analytics. He is passionate about helping customers drive business outcomes through data analytics solutions on the AWS Cloud.

Tyler McDaniel is a software development engineer on the AWS Glue team with diverse technical interests including high-performance computing and optimization, distributed systems, and machine learning operations. He has eight years of experience in software and research roles.

Rahul Sharma is a Senior Software Development Engineer at AWS Glue. He focuses on building distributed systems to support features in AWS Glue. He has a passion for helping customers build data management solutions on the AWS Cloud. In his spare time, he enjoys playing the piano and gardening.

Edward Cho is a Software Development Engineer at AWS Glue. He has contributed to the AWS Glue Data Quality feature as well as the underlying open-source project Deequ.

- Source: https://aws.amazon.com/blogs/big-data/get-started-with-aws-glue-data-quality-dynamic-rules-for-etl-pipelines/