In recent years, natural language processing and conversational AI have gained significant attention as technologies that are transforming the way we interact with machines and each other. These fields involve the use of machine learning and artificial intelligence to enable machines to understand, interpret, and generate human language.

Over the centuries, humans have developed many forms of communication, from the earliest hieroglyphs and pictograms to the complex and nuanced language systems of today. Technology has taken language communication further still, with chatbots and other artificial intelligence (AI) systems capable of understanding and responding to natural language. We have come a long way from the earliest forms of language to today’s sophisticated language technology, and there is still considerable room for progress.

Google, one of the world’s leading technology companies, has been at the forefront of research and development in these areas, and its latest advancements show considerable potential for making NLP and conversational AI systems more efficient and effective.

Advancing natural language processing and conversational AI: Google’s take

In November 2022, Google publicly announced its 1,000 Languages Initiative, a significant pledge to build a machine learning (ML) model that supports the world’s one thousand most widely spoken languages, promoting inclusion and accessibility for billions of people worldwide. However, some of these languages are spoken by fewer than twenty million people, which raises a fundamental challenge: how to support languages with few speakers or limited data.

Google Universal Speech Model (USM)

Google provided further details about the Universal Speech Model (USM) in a blog post, describing it as a significant first step towards the goal of supporting 1,000 languages. USM is a family of state-of-the-art speech models with 2 billion parameters, trained on 12 million hours of speech and 28 billion sentences of text spanning more than 300 languages.

USM is used on YouTube, for example for closed captions. Its automatic speech recognition (ASR) is not limited to widely spoken languages such as English and Mandarin; it can also recognize under-resourced languages such as Amharic, Cebuano, Assamese, and Azerbaijani.

Google demonstrates that pre-training the model’s encoder on a massive, unlabeled multilingual dataset and then fine-tuning it on a smaller labeled dataset enables the model to recognize under-represented languages. The training process can also adapt effectively to new languages and data.

Current ASR comes with many challenges

To reach this ambitious goal, two significant challenges in ASR need to be addressed.

The first challenge is that conventional supervised learning approaches do not scale. A primary obstacle to expanding speech technologies to many languages is obtaining enough data to train high-quality models. With traditional approaches, audio data must be labeled by hand, which is time-consuming and expensive.

Alternatively, audio can be collected from sources that already have transcriptions, but these are hard to find for under-represented languages. Self-supervised learning, by contrast, can use audio-only data, which is far more readily available across a wide range of languages. This makes self-supervision a better approach for scaling to hundreds of languages.

The second challenge is that models must improve in a computationally efficient way as language coverage and quality expand. This requires a flexible, efficient, and generalizable learning algorithm: one that can use large amounts of data from diverse sources, allow model updates without complete retraining, and generalize to new languages and use cases.

Self-supervised learning with fine-tuning

The Universal Speech Model (USM) uses the standard encoder-decoder architecture, where the decoder can be CTC, RNN-T, or LAS. The encoder is a Conformer (convolution-augmented transformer), whose key component is the Conformer block, made up of attention, feed-forward, and convolutional modules. The encoder takes the log-mel spectrogram of the speech signal as input and applies convolutional sub-sampling, followed by a series of Conformer blocks and a projection layer that produce the final embeddings.

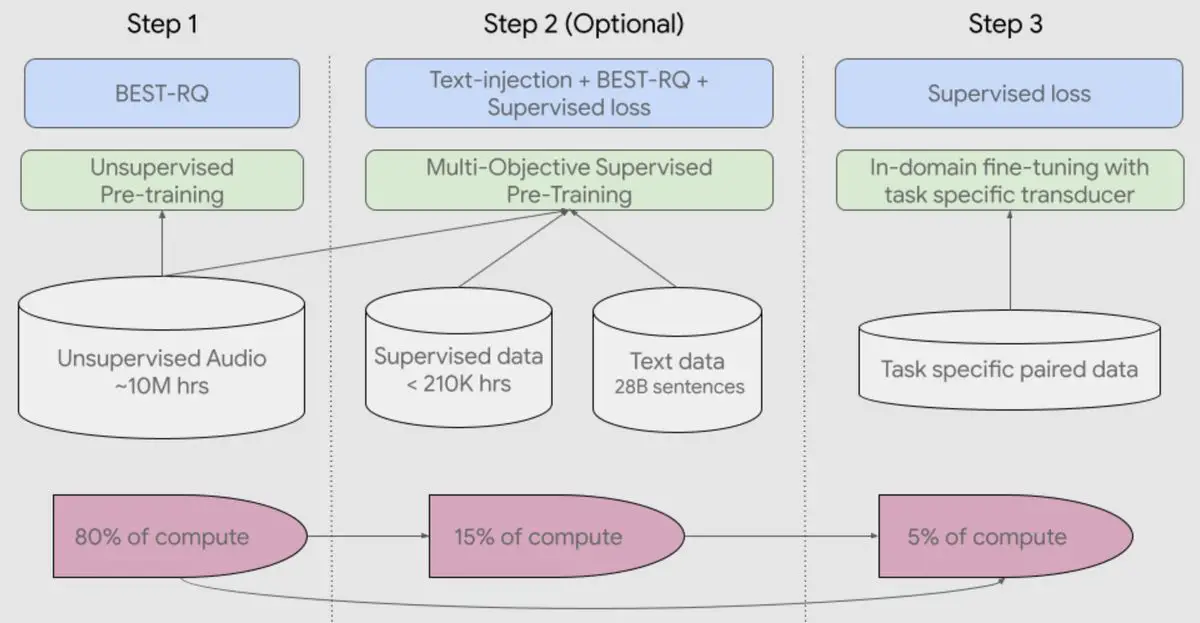
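A minimal NumPy sketch of one Conformer block’s forward pass may help make the encoder description concrete. It follows the block layout from the original Conformer paper (half-step feed-forward module, self-attention, convolution module, second half-step feed-forward module, final layer norm) and is not Google’s USM code. The model width, kernel size, and random weights are arbitrary assumptions, and single-head attention without relative positional encoding, plus layer norm in place of batch norm, are simplifications made here for brevity. The input stands in for frames that have already gone through convolutional sub-sampling.

```python
import numpy as np

rng = np.random.default_rng(0)

def layer_norm(x, eps=1e-5):
    # Normalize each frame (row) to zero mean and unit variance (no learned scale/bias).
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def swish(x):
    return x * sigmoid(x)

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def init_params(d, kernel=15, ff_mult=4):
    # Random weights stand in for trained parameters (illustration only).
    def w(*shape):
        return rng.normal(0.0, 1.0 / np.sqrt(shape[0]), shape)
    return {
        "ff1_in": w(d, ff_mult * d), "ff1_out": w(ff_mult * d, d),
        "wq": w(d, d), "wk": w(d, d), "wv": w(d, d), "wo": w(d, d),
        "pw1": w(d, 2 * d), "dw": w(kernel, d), "pw2": w(d, d),
        "ff2_in": w(d, ff_mult * d), "ff2_out": w(ff_mult * d, d),
    }

def feed_forward(x, w_in, w_out):
    # Pre-norm feed-forward module with Swish activation.
    return swish(layer_norm(x) @ w_in) @ w_out

def self_attention(x, p):
    # Single-head scaled dot-product attention over all frames (simplification).
    h = layer_norm(x)
    q, k, v = h @ p["wq"], h @ p["wk"], h @ p["wv"]
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]))
    return (weights @ v) @ p["wo"]

def conv_module(x, p):
    d = x.shape[-1]
    h = layer_norm(x) @ p["pw1"]                 # pointwise conv: d -> 2d channels
    h = h[:, :d] * sigmoid(h[:, d:])             # gated linear unit: 2d -> d
    kernel = p["dw"].shape[0]
    padded = np.pad(h, ((kernel // 2, kernel // 2), (0, 0)))  # zero-pad along time
    # Depthwise conv: each channel is filtered over time with its own kernel.
    h = np.stack([(padded[t:t + kernel] * p["dw"]).sum(axis=0) for t in range(len(h))])
    h = swish(layer_norm(h))                     # layer norm replaces batch norm here
    return h @ p["pw2"]                          # pointwise conv: d -> d

def conformer_block(x, p):
    # Macaron-style layout: half-step FFN, attention, conv, half-step FFN, norm.
    x = x + 0.5 * feed_forward(x, p["ff1_in"], p["ff1_out"])
    x = x + self_attention(x, p)
    x = x + conv_module(x, p)
    x = x + 0.5 * feed_forward(x, p["ff2_in"], p["ff2_out"])
    return layer_norm(x)

# Example: 50 sub-sampled frames with an (arbitrary) model width of 144.
frames = rng.normal(size=(50, 144))
out = conformer_block(frames, init_params(144))
print(out.shape)  # (50, 144)
```

Every module is wrapped in a residual connection, so the block maps a sequence of frames to a sequence of the same shape, which is what allows an encoder to stack many such blocks before the final projection layer.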

The USM training process begins with self-supervised learning on speech audio covering hundreds of languages. In the second, optional step, the model’s quality and language coverage can be improved through additional pre-training with text data; whether this step is included depends on whether text data is available, and USM performs best when it is. The final step of the training pipeline is fine-tuning on downstream tasks such as automatic speech recognition (ASR) or automatic speech translation, using a small amount of supervised data.

- In the first step, USM uses the BEST-RQ method, which has previously demonstrated state-of-the-art results on multilingual tasks and has proven efficient when processing very large amounts of unsupervised audio data.

- In the second (optional) step, the USM employs multi-objective supervised pre-training to integrate knowledge from supplementary text data. The model incorporates an extra encoder module to accept the text as input, along with additional layers to combine the outputs of the speech and text encoders. The model is trained jointly on unlabeled speech, labeled speech, and text data.

- In the final stage of the USM training pipeline, the model is fine-tuned on the downstream tasks.
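BEST-RQ (BERT-based Speech pre-Training with Random-projection Quantizer) turns unlabeled audio into a BERT-style masked-prediction task: a frozen random projection and a frozen random codebook map stacked speech frames to discrete labels, and the encoder learns to predict those labels for masked regions of the input. The NumPy sketch below shows only target generation and masking, not the encoder or the loss. The frame stacking, codebook size, mask probability, span length, and noise level are illustrative assumptions, not values confirmed by Google’s post.

```python
import numpy as np

rng = np.random.default_rng(0)

FEAT_DIM = 80         # log-mel bins per frame (assumed)
STACK = 4             # input frames stacked per target label (assumed)
CODE_DIM = 16         # codebook vector size (assumed)
CODEBOOK_SIZE = 8192  # number of discrete labels (assumed)

# Both the projection and the codebook are random and are never trained.
projection = rng.normal(0, 1 / np.sqrt(FEAT_DIM * STACK), (FEAT_DIM * STACK, CODE_DIM))
codebook = rng.normal(size=(CODEBOOK_SIZE, CODE_DIM))
codebook /= np.linalg.norm(codebook, axis=1, keepdims=True)

def stack_frames(feats):
    # (T, F) -> (T // STACK, F * STACK), dropping leftover frames at the end.
    t = (feats.shape[0] // STACK) * STACK
    return feats[:t].reshape(-1, FEAT_DIM * STACK)

def quantize(feats):
    # Project, L2-normalize, and take the nearest codebook entry as the label.
    z = stack_frames(feats) @ projection
    z /= np.linalg.norm(z, axis=1, keepdims=True)
    # For unit vectors, the nearest entry by distance has the largest dot product.
    return np.argmax(z @ codebook.T, axis=1)

def mask_frames(feats, prob=0.01, span=40, noise_std=0.1):
    # Choose span starts at random and overwrite each span with small Gaussian noise.
    masked = feats.copy()
    is_masked = np.zeros(feats.shape[0], dtype=bool)
    for s in np.flatnonzero(rng.random(feats.shape[0]) < prob):
        is_masked[s:s + span] = True
    masked[is_masked] = rng.normal(0, noise_std, (is_masked.sum(), feats.shape[1]))
    return masked, is_masked

feats = rng.normal(size=(1000, FEAT_DIM))  # stand-in for 10 s of log-mel features
labels = quantize(feats)                   # one target label per 4 input frames
masked, is_masked = mask_frames(feats)
print(labels.shape)  # (250,)
```

During pre-training, the encoder would receive `masked` and be trained with a cross-entropy loss to predict `labels` for the masked regions. Because the projection and codebook are never updated, the targets for a given input never change and cost only a matrix multiplication to compute.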

The following diagram illustrates the overall training pipeline:

Data regarding the encoder

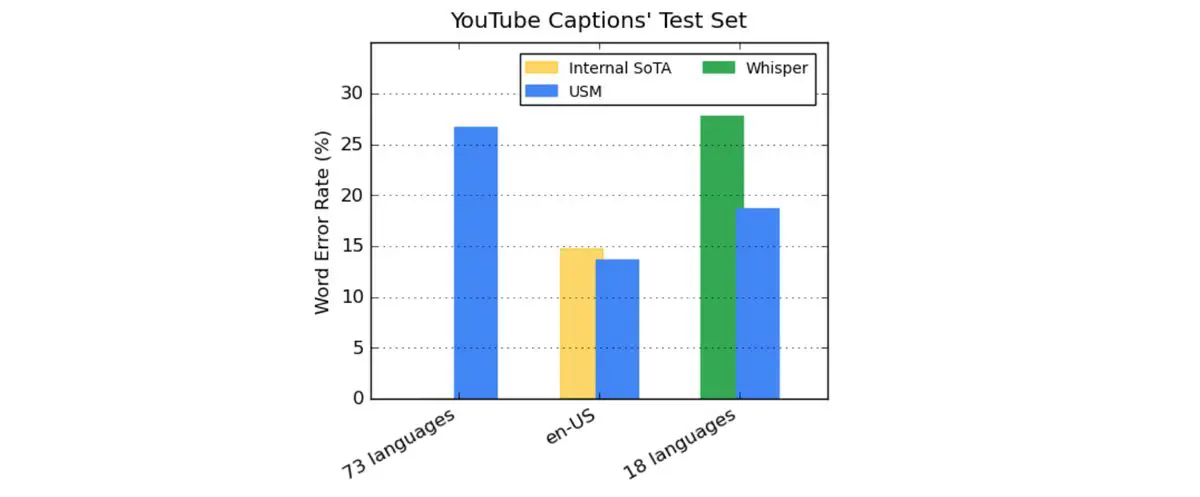

Google’s blog post also shares insights into the performance of USM’s encoder, which covers more than 300 languages through pre-training. The post demonstrates the effectiveness of the pre-trained encoder by fine-tuning it on YouTube Captions’ multilingual speech data.

The supervised YouTube data covers 73 languages, with an average of fewer than three thousand hours of data per language. Despite this limited supervised data, USM achieves an average word error rate (WER) below 30% across the 73 languages, a milestone Google says it had never reached before.
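Word error rate counts the word substitutions (S), deletions (D), and insertions (I) needed to turn the recognizer’s output into the reference transcript, divided by the number of words in the reference (N): WER = (S + D + I) / N. A minimal Python sketch based on word-level edit distance follows; it assumes a non-empty reference and skips the text normalization (casing, punctuation) that real evaluations usually apply first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.split()
    hyp = hypothesis.split()
    # dist[i][j] = minimum edits to turn ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            sub = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(dist[i - 1][j] + 1,        # deletion
                             dist[i][j - 1] + 1,        # insertion
                             dist[i - 1][j - 1] + sub)  # substitution or match
    return dist[len(ref)][len(hyp)] / len(ref)

# One substitution (sat -> sit) and one deletion ("the") over 6 reference words.
print(round(word_error_rate("the cat sat on the mat", "the cat sit on mat"), 3))  # 0.333
```

Because insertions are counted too, WER can exceed 100% when a system outputs many spurious words.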

Compared with Google’s current internal state-of-the-art model, USM achieves a 6% relative reduction in WER for en-US. USM was also compared with the recently released large model Whisper (large-v2), which was trained on more than 400,000 hours of labeled data. The comparison used only the 18 languages that Whisper can decode with a WER below 40%. Across these 18 languages, USM’s WER is on average 32.7% lower than Whisper’s in relative terms.

USM and Whisper were also compared on publicly available datasets, where USM achieved a lower WER on CORAAL (African American Vernacular English), SpeechStew (en-US), and FLEURS (102 languages), both with and without training on in-domain data. The FLEURS comparison uses the 62 languages that overlap with those supported by Whisper. On this subset, USM without in-domain data has a 65.8% relative lower WER than Whisper, and USM with in-domain data has a 67.8% relative lower WER.
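The comparisons above are relative reductions: the difference in WER divided by the baseline’s WER, not a difference in percentage points. A 32.7% relative reduction therefore means USM’s WER is roughly 0.673 times Whisper’s on those languages. The small Python helper below makes the distinction explicit; the WER values in the example are hypothetical, not results from Google’s post.

```python
def relative_wer_reduction(baseline_wer: float, new_wer: float) -> float:
    """Fraction by which new_wer is lower than baseline_wer (0.327 means 32.7%)."""
    return (baseline_wer - new_wer) / baseline_wer

# Hypothetical WERs for illustration only.
baseline, new = 0.20, 0.13
print(f"absolute: {(baseline - new) * 100:.1f} points")         # absolute: 7.0 points
print(f"relative: {relative_wer_reduction(baseline, new):.1%}")  # relative: 35.0%
```

The same 7-point absolute gain would be a much smaller relative reduction against a weaker baseline (for example, 7 points off a 70% WER is only a 10% relative reduction), which is why relative figures are easier to compare across languages with very different error rates.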

About automatic speech translation (AST)

For speech translation, USM is fine-tuned on the CoVoST dataset. By incorporating text through the second stage of the training pipeline, the model achieves state-of-the-art quality despite limited supervised data. To assess the breadth of the model’s performance, the languages in CoVoST are grouped into high-, medium-, and low-resource segments, and the BLEU score (higher is better) is calculated for each segment.

According to Google’s results, USM outperforms Whisper in all three segments.
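BLEU compares a system’s translations with reference translations: it takes the geometric mean of clipped n-gram precisions (usually n = 1 to 4) and multiplies it by a brevity penalty for output that is shorter than the references. The minimal Python sketch below computes unsmoothed corpus-level BLEU on a 0 to 1 scale, with one reference per sentence and plain whitespace tokenization. Published scores rely on standardized tokenization and implementations, so numbers from this sketch are not comparable to the CoVoST results.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def corpus_bleu(hypotheses, references, max_n=4):
    """Corpus-level BLEU with one reference per segment and no smoothing."""
    matches = [0] * max_n
    totals = [0] * max_n
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        h, r = hyp.split(), ref.split()
        hyp_len += len(h)
        ref_len += len(r)
        for n in range(1, max_n + 1):
            h_counts, r_counts = ngrams(h, n), ngrams(r, n)
            # Clip each n-gram's count by how often it appears in the reference.
            matches[n - 1] += sum(min(c, r_counts[g]) for g, c in h_counts.items())
            totals[n - 1] += sum(h_counts.values())
    if min(matches) == 0:
        return 0.0  # some n-gram order has no matches, so unsmoothed BLEU is zero
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty: output shorter than the references is penalized.
    bp = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return bp * math.exp(log_precision)

print(corpus_bleu(["the cat is on the mat"], ["the cat is on the mat"]))  # 1.0
```

Scores are commonly reported multiplied by 100. Computing BLEU separately over the high-, medium-, and low-resource language groups, as described above, simply means calling the same function on each group’s sentences.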

Google aims for the next 1,000 languages

The development of USM is a critical effort toward Google’s mission to organize the world’s information and make it universally accessible. Google believes USM’s base model architecture and training pipeline form a foundation on which it can build to extend speech modeling to the next 1,000 languages.

Central concept: Natural language processing and conversational AI

To understand how Google uses the Universal Speech Model, it helps to have a basic understanding of natural language processing and conversational AI.

Natural language processing applies artificial intelligence to understand and respond to human language. It aims to enable machines to analyze, interpret, and generate human language in a way that comes as close as possible to natural human communication.

Conversational AI, on the other hand, is a subset of natural language processing that focuses on developing computer systems capable of communicating with humans in a natural and intuitive manner.

What is natural language processing (NLP)?

Natural language processing is a field of study in artificial intelligence (AI) and computer science that focuses on the interactions between humans and computers using natural language. It involves the development of algorithms and techniques to enable machines to understand, interpret, and generate human language, allowing computers to interact with humans in a way that is more intuitive and efficient.

History of NLP

The history of NLP dates back to the 1950s, with the development of early computational linguistics and information retrieval. Over the years, NLP has evolved significantly, with the emergence of machine learning and deep learning techniques, leading to more advanced applications of NLP.

Applications of NLP

NLP has numerous applications in various industries, including healthcare, finance, education, customer service, and marketing. Some of the most common applications of NLP include:

- Sentiment analysis

- Text classification

- Named entity recognition

- Machine translation

- Speech recognition

- Summarization

Understanding NLP chatbots

One of the most popular applications of NLP is in the development of conversational agents, also known as chatbots. These chatbots use NLP to understand and respond to user inputs in natural language, enabling them to mimic human-like interactions. Chatbots are being used in a variety of industries, from customer service to healthcare, to provide instant support and reduce operational costs. NLP-powered chatbots are becoming more sophisticated and are expected to play a significant role in the future of communication and customer service.

What is conversational AI?

Conversational AI is a subset of natural language processing (NLP) that focuses on developing computer systems capable of communicating with humans in a natural and intuitive manner. It involves the development of algorithms and techniques to enable machines to understand, interpret, and generate human language, allowing computers to interact with humans in a conversational manner.

Types of conversational AI

There are several types of conversational AI systems, including:

- Rule-based systems: These systems rely on pre-defined rules and scripts to provide responses to user inputs.

- Machine learning-based systems: These systems use machine learning algorithms to analyze and learn from user inputs and provide more personalized and accurate responses over time.

- Hybrid systems: These systems combine rule-based and machine learning-based approaches to provide the best of both worlds.
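The three system types can be contrasted in a small Python sketch of a hybrid bot: hand-written rules answer the requests they recognize, and everything else falls through to a learned intent classifier. The rules, intents, training phrases, and replies are hypothetical, and the classifier is a plain multinomial naive Bayes model chosen for brevity; production systems typically rely on much larger models and datasets.

```python
import math
import re
from collections import Counter, defaultdict

# Rule-based layer: patterns mapped to scripted replies (hypothetical examples).
RULES = [
    (re.compile(r"\b(hi|hello|hey)\b", re.I), "Hello! How can I help you today?"),
    (re.compile(r"\bopening hours\b", re.I), "We are open 9:00-17:00, Monday to Friday."),
]

# Machine-learning layer: a few labeled utterances per intent (hypothetical).
TRAINING_DATA = [
    ("i want to check my balance", "balance"),
    ("how much money is in my account", "balance"),
    ("i lost my card", "lost_card"),
    ("my card was stolen", "lost_card"),
]
RESPONSES = {
    "balance": "I can help with that. Which account would you like to check?",
    "lost_card": "I'm sorry to hear that. Let's block your card right away.",
}

def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())

class NaiveBayesIntent:
    def __init__(self, examples):
        self.word_counts = defaultdict(Counter)
        self.intent_counts = Counter()
        for text, intent in examples:
            self.intent_counts[intent] += 1
            self.word_counts[intent].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}

    def predict(self, text):
        total = sum(self.intent_counts.values())
        best, best_score = None, -math.inf
        for intent, count in self.intent_counts.items():
            words = self.word_counts[intent]
            denom = sum(words.values()) + len(self.vocab)
            # Log prior plus Laplace-smoothed log likelihood of each known token.
            score = math.log(count / total)
            score += sum(math.log((words[w] + 1) / denom)
                         for w in tokenize(text) if w in self.vocab)
            if score > best_score:
                best, best_score = intent, score
        return best

model = NaiveBayesIntent(TRAINING_DATA)

def reply(message):
    for pattern, answer in RULES:  # rules take priority when they match
        if pattern.search(message):
            return answer
    return RESPONSES[model.predict(message)]  # otherwise fall back to the classifier

print(reply("Hello there"))
print(reply("Someone stole my card"))
```

The rule layer gives predictable answers for a handful of known requests, while the classifier handles phrasings the rules never anticipated (neither training example contains "stole", yet the bot still routes the message to the lost-card intent). Adding labeled examples improves the classifier without touching the rules.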

Applications of conversational AI

Conversational AI has numerous applications in various industries, including healthcare, finance, education, customer service, and marketing. Some of the most common applications of conversational AI include:

- Customer service chatbots

- Virtual assistants

- Voice assistants

- Language translation

- Sales and marketing chatbots

Advantages of conversational AI

Conversational AI offers several advantages, including:

- Improved customer experience: Conversational AI systems provide instant and personalized responses, improving the overall customer experience.

- Cost savings: Conversational AI systems can automate repetitive tasks and reduce the need for human customer service representatives, leading to cost savings.

- Scalability: Conversational AI systems can handle a large volume of requests simultaneously, making them highly scalable.

Understanding conversational AI chatbots

Conversational AI chatbots are computer programs that simulate conversation with human users in natural language. They use conversational AI techniques to understand and respond to user inputs, providing instant support and personalized recommendations.

Examples of NLP and conversational AI working together

Natural language processing and conversational AI are being used together in various industries to improve customer service, automate tasks, and provide personalized recommendations. Some examples of NLP and conversational AI working together include:

- Amazon Alexa: The virtual assistant uses NLP to understand and interpret user requests and conversational AI to respond in a natural and intuitive manner.

- Google Duplex: A conversational AI system that uses NLP to understand and interpret user requests and generate human-like responses.

- IBM Watson Assistant: A virtual assistant that uses NLP to understand and interpret user requests and conversational AI to provide personalized responses.

- PayPal: The company uses an NLP-powered chatbot that uses conversational AI to assist customers with account management and transaction-related queries.

These examples illustrate how natural language processing and conversational AI can work together to create powerful and intuitive chatbots and virtual assistants that provide instant support and enhance the user experience.

Importance of NLP in conversational AI

Natural language processing is critical to the development of conversational AI, as it enables machines to understand, interpret, and generate human language. NLP techniques, such as sentiment analysis, entity recognition, and language translation, provide the foundation for conversational AI by allowing machines to comprehend user inputs and generate appropriate responses. Without NLP, conversational AI systems would not be able to understand the nuances of human language, making it difficult to provide accurate and personalized responses.

Role of conversational AI in NLP

Conversational AI plays a crucial role in NLP by enabling machines to interact with humans in a conversational and intuitive manner. By building conversational interfaces such as chatbots and virtual assistants on top of NLP systems, organizations can provide more personalized and engaging experiences for their customers. Conversational AI can also automate tasks and reduce the need for human intervention, improving the efficiency and scalability of NLP systems.

In addition, conversational AI can help to improve the quality and accuracy of NLP systems by providing a feedback loop for machine learning algorithms. By analyzing user interactions with chatbots and virtual assistants, NLP systems can identify areas for improvement and refine their algorithms to provide more accurate and personalized responses over time.

The integration of NLP and conversational AI is critical to developing intelligent, intuitive systems that can understand, interpret, and generate human language. By combining these technologies, organizations can create powerful chatbots and virtual assistants that provide instant support and enhance the user experience.

Conversational AI and NLP chatbot examples

These tools utilize natural language processing and conversational AI technologies for different purposes:

Future of natural language processing and conversational AI

As technology continues to evolve, the future of natural language processing and conversational AI is full of potential advancements and new possibilities. Some potential future advancements in natural language processing and conversational AI include:

- Improved accuracy and personalization: As machine learning algorithms become more sophisticated, NLP and conversational AI systems will become more accurate and better able to provide personalized responses to users.

- Multilingual support: NLP and conversational systems will continue to improve their support for multiple languages, allowing them to communicate with users around the world.

- Emotion recognition: NLP and conversational systems may incorporate emotion recognition capabilities, enabling them to detect and respond to user emotions.

- Natural language generation: Natural language processing and conversational AI systems may evolve to generate natural language responses rather than relying on pre-programmed responses.

Impact on various industries

The impact of NLP and conversational AI on various industries is already significant, and this trend is expected to continue in the future. Some industries that are likely to be affected by NLP and conversational AI include:

- Healthcare: Natural language processing and conversational AI can be used to provide medical advice, connect patients with doctors and specialists, and assist with remote patient monitoring.

- Customer service: NLP and conversational AI can be used to automate customer service and provide instant support to customers.

- Finance: Natural language processing and conversational AI can be used to automate tasks, such as fraud detection and customer service, and provide personalized financial advice to customers.

- Education: NLP and conversational AI can be used to enhance learning experiences by providing personalized support and feedback to students.

Future trends and predictions

Some future trends and predictions for natural language processing and conversational AI include:

- More human-like interactions: As NLP and conversational AI systems become more sophisticated, they will become better able to understand and respond to natural language inputs in a way that feels more human-like.

- Increased adoption of chatbots: Chatbots will become more prevalent across industries as they become more advanced and better able to provide personalized and accurate responses.

- Integration with other technologies: Natural language processing and conversational AI will increasingly be integrated with other technologies, such as virtual and augmented reality, to create more immersive and engaging user experiences.

Final words

Natural language processing and conversational AI have been evolving rapidly, and their applications are becoming more common in everyday life. Google’s Universal Speech Model (USM) shows the potential of these advances to make a significant impact across industries by giving users a more inclusive and intuitive experience. USM has been trained on a vast amount of speech and text data covering more than 300 languages and can recognize under-resourced languages for which little data is available. The model has demonstrated state-of-the-art performance on a range of speech recognition and translation datasets, with significant reductions in word error rate compared with other models.

In addition, the integration of NLP and conversational AI has become increasingly prevalent, with chatbots and virtual assistants being used in various industries, including healthcare, finance, and education. The ability to understand and generate human language has allowed these systems to provide personalized and accurate responses to users, improving efficiency and scalability.

Looking ahead, natural language processing and conversational AI are expected to continue advancing, with potential improvements in accuracy, personalization, and emotion recognition. Furthermore, as these technologies become more integrated with other emerging technologies, such as virtual and augmented reality, the possibilities for immersive and engaging user experiences will continue to grow.

- Source: https://dataconomy.com/2023/03/natural-language-processing-conversational-ai/