Data engineering plays a pivotal role in the vast data ecosystem by collecting, transforming, and delivering data essential for analytics, reporting, and machine learning. Aspiring data engineers often seek real-world projects to gain hands-on experience and showcase their expertise. This article presents the top 20 data engineering project ideas with their source code. Whether you’re a beginner, an intermediate-level engineer, or an advanced practitioner, these projects offer an excellent opportunity to sharpen your data engineering skills.

Table of contents

Data Engineering Projects for Beginners

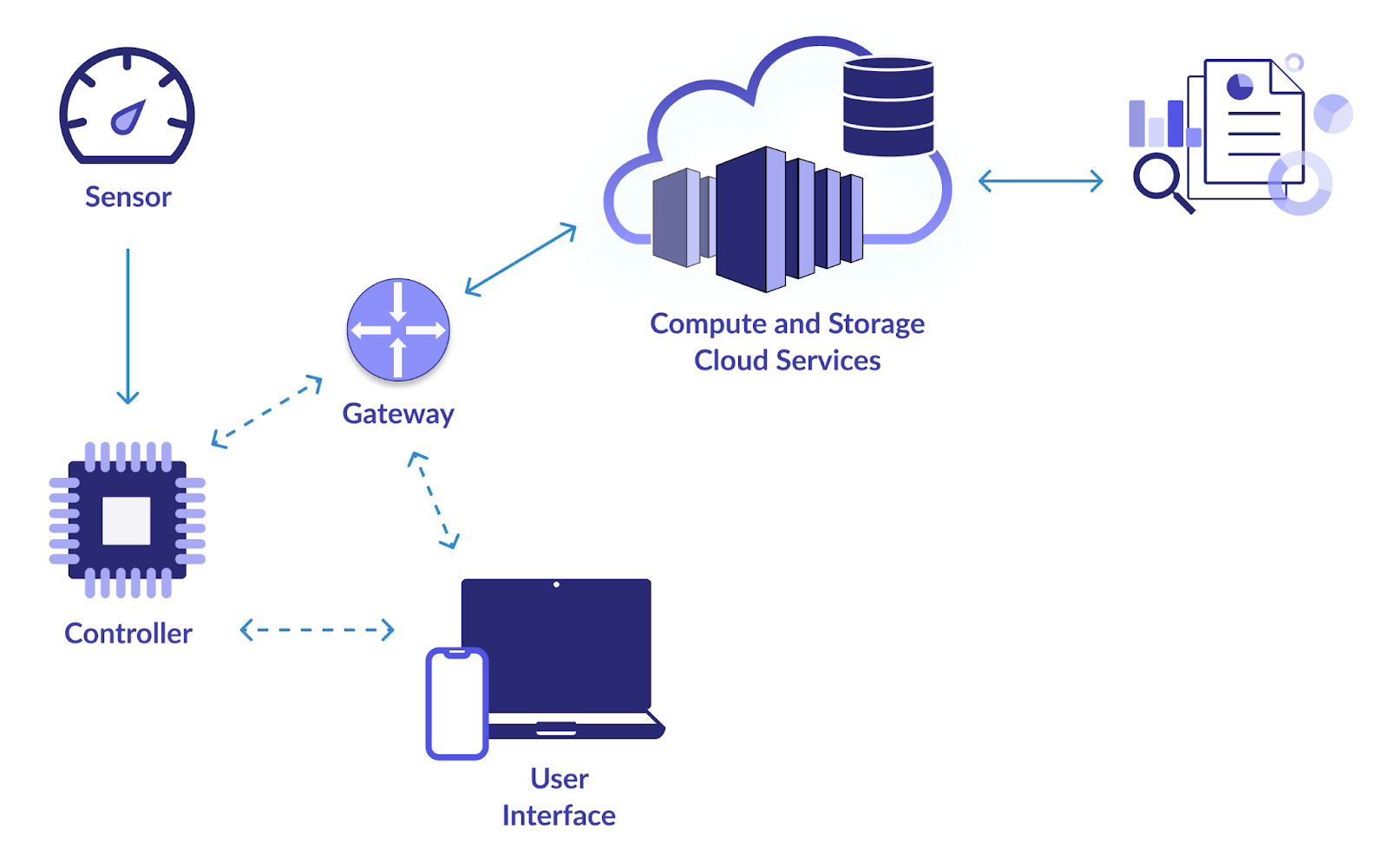

1. Smart IoT Infrastructure

Objective

The major goal of this project is to establish a trustworthy data pipeline for collecting and analysing data from IoT (Internet of Things) devices. Webcams, temperature sensors, motion detectors, and other IoT devices all generate a lot of data. You want to design a system to effectively consume, store, process, and analyze this data. By doing this, real-time monitoring and decision-making based on the learnings from the IoT data are made possible.

How to Solve?

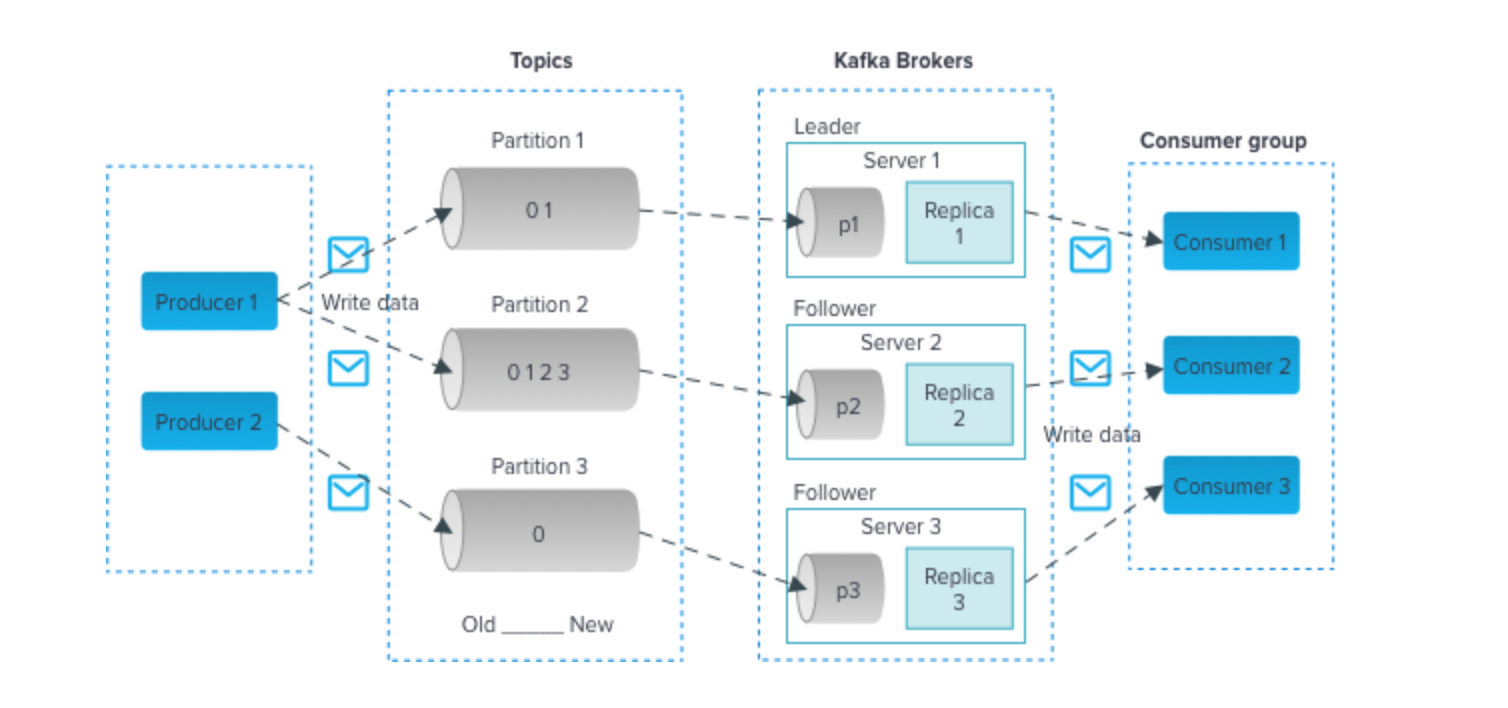

- Utilize technologies like Apache Kafka or MQTT for efficient data ingestion from IoT devices. These technologies support high-throughput data streams.

- Employ scalable databases like Apache Cassandra or MongoDB to store the incoming IoT data. These NoSQL databases can handle the volume and variety of IoT data.

- Implement real-time data processing using Apache Spark Streaming or Apache Flink. These frameworks allow you to analyze and transform data as it arrives, making it suitable for real-time monitoring.

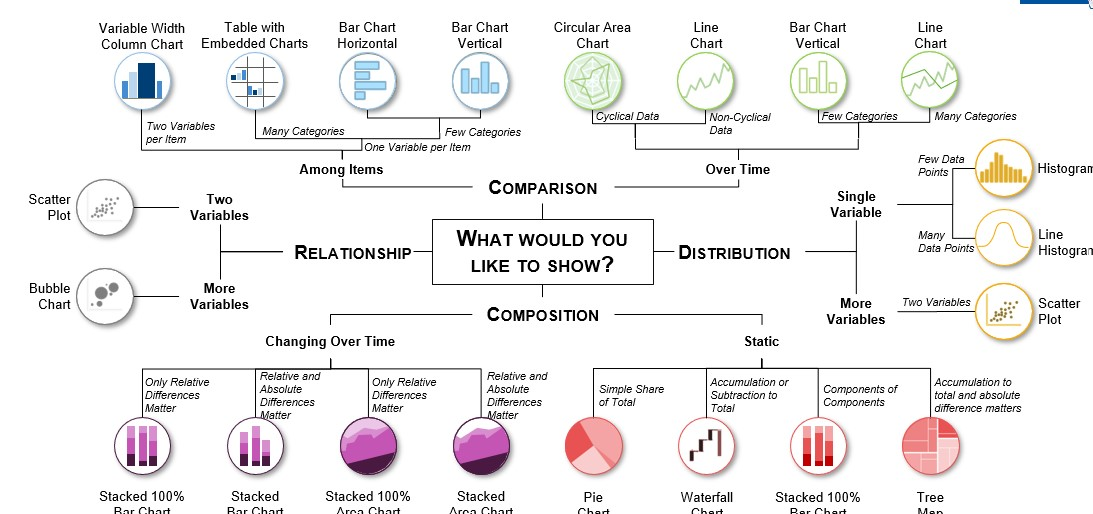

- Use visualization tools like Grafana or Kibana to create dashboards that provide insights into the IoT data. Real-time visualizations can help stakeholders make informed decisions.

Click here to check the source code

2. Aviation Data Analysis

Objective

To collect, process, and analyze aviation data from numerous sources, including the Federal Aviation Administration (FAA), airlines, and airports, this project attempts to develop a data pipeline. Aviation data includes flights, airports, weather, and passenger demographics. Your goal is to extract meaningful insights from this data to improve flight scheduling, enhance safety measures, and optimize various aspects of the aviation industry.

How to Solve?

- Apache Nifi or AWS Kinesis can be used for data ingestion from diverse sources.

- Store the processed data in data warehouses like Amazon Redshift or Google BigQuery for efficient querying and analysis.

- Employ Python with libraries like Pandas and Matplotlib to analyze in-depth aviation data. This can involve identifying patterns in flight delays, optimizing routes, and evaluating passenger trends.

- Tools like Tableau or Power BI can be used to create informative visualizations that help stakeholders make data-driven decisions in the aviation sector.

Click here to view the Source Code

3. Shipping and Distribution Demand Forecasting

Objective

In this project, your objective is to create a robust ETL (Extract, Transform, Load) pipeline that processes shipping and distribution data. By using historical data, you will build a demand forecasting system that predicts future product demand in the context of shipping and distribution. This is crucial for optimizing inventory management, reducing operational costs, and ensuring timely deliveries.

How to Solve?

- Apache NiFi or Talend can be used to build the ETL pipeline, which will extract data from various sources, transform it, and load it into a suitable data storage solution.

- Utilize tools like Python or Apache Spark for data transformation tasks. You may need to clean, aggregate, and preprocess data to make it suitable for forecasting models.

- Implement forecasting models such as ARIMA (AutoRegressive Integrated Moving Average) or Prophet to predict demand accurately.

- Store the cleaned and transformed data in databases like PostgreSQL or MySQL.

Click here to view the source code for this data engineering project,

4. Event Data Analysis

Objective

Make a data pipeline that collects information from various events, including conferences, sporting events, concerts, and social gatherings. Real-time data processing, sentiment analysis of social media posts on these events, and the creation of visualizations to show trends and insights in real-time are all part of the project.

How to Solve?

- Depending on the event data sources, you might use the Twitter API for collecting tweets, web scraping for event-related websites or other data ingestion methods.

- Employ Natural Language Processing (NLP) techniques in Python to perform sentiment analysis on social media posts. Tools like NLTK or spaCy can be valuable.

- Use streaming technologies like Apache Kafka or Apache Flink for real-time data processing and analysis.

- Create interactive dashboards and visualizations using frameworks like Dash or Plotly to present event-related insights in a user-friendly format.

Click here to check the source code.

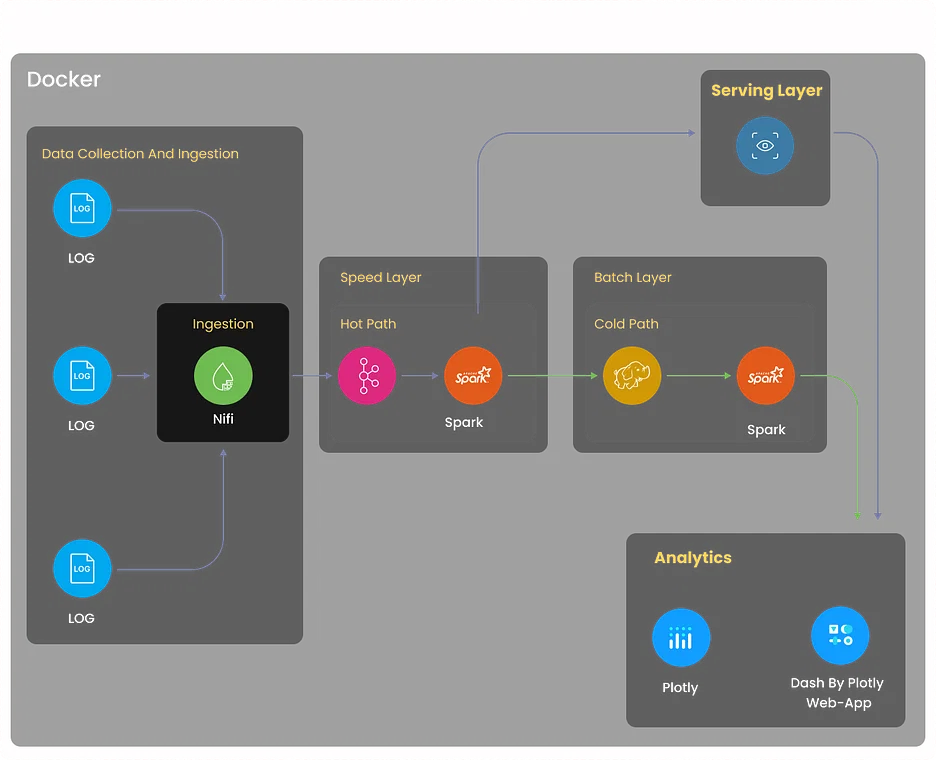

5. Log Analytics Project

Objective

Build a comprehensive log analytics system that collects logs from various sources, including servers, applications, and network devices. The system should centralize log data, detect anomalies, facilitate troubleshooting, and optimize system performance through log-based insights.

How to Solve?

- Implement log collection using tools like Logstash or Fluentd. These tools can aggregate logs from diverse sources and normalize them for further processing.

- Utilize Elasticsearch, a powerful distributed search and analytics engine, to efficiently store and index log data.

- Employ Kibana to create dashboards and visualizations that allow users to monitor log data in real time.

- Set up alerting mechanisms using Elasticsearch Watcher or Grafana Alerts to notify relevant stakeholders when specific log patterns or anomalies are detected.

Click here to explore this data engineering project

6. Movielens Data Analysis for Recommendations

Objective

- Design and develop a recommendation engine using the Movielens dataset.

- Create a robust ETL pipeline to preprocess and clean the data.

- Implement collaborative filtering algorithms to provide personalized movie recommendations to users.

How to Solve?

- Leverage Apache Spark or AWS Glue to build an ETL pipeline that extracts movie and user data, transforms it into a suitable format, and loads it into a data storage solution.

- Implement collaborative filtering techniques, such as user-based or item-based collaborative filtering, using libraries like Scikit-learn or TensorFlow.

- Store the cleaned and transformed data in data storage solutions such as Amazon S3 or Hadoop HDFS.

- Develop a web-based application (e.g., using Flask or Django) where users can input their preferences, and the recommendation engine provides personalized movie recommendations.

Click here to explore this data engineering project.

7. Retail Analytics Project

Objective

Create a retail analytics platform that ingests data from various sources, including point-of-sale systems, inventory databases, and customer interactions. Analyze sales trends, optimize inventory management, and generate personalized product recommendations for customers.

How to Solve?

- Implement ETL processes using tools like Apache Beam or AWS Data Pipeline to extract, transform, and load data from retail sources.

- Utilize machine learning algorithms such as XGBoost or Random Forest for sales prediction and inventory optimization.

- Store and manage data in data warehousing solutions like Snowflake or Azure Synapse Analytics for efficient querying.

- Create interactive dashboards using tools like Tableau or Looker to present retail analytics insights in a visually appealing and understandable format.

Click here to explore the source code.

Data Engineering Projects on GitHub

8. Real-time Data Analytics

Objective

Contribute to an open-source project focused on real-time data analytics. This project provides an opportunity to improve the project’s data processing speed, scalability, and real-time visualization capabilities. You may be tasked with enhancing the performance of data streaming components, optimizing resource usage, or adding new features to support real-time analytics use cases.

How to Solve?

The solving method will depend on the project you contribute to, but it often involves technologies like Apache Flink, Spark Streaming, or Apache Storm.

Click here to explore the source code for this data engineering project.

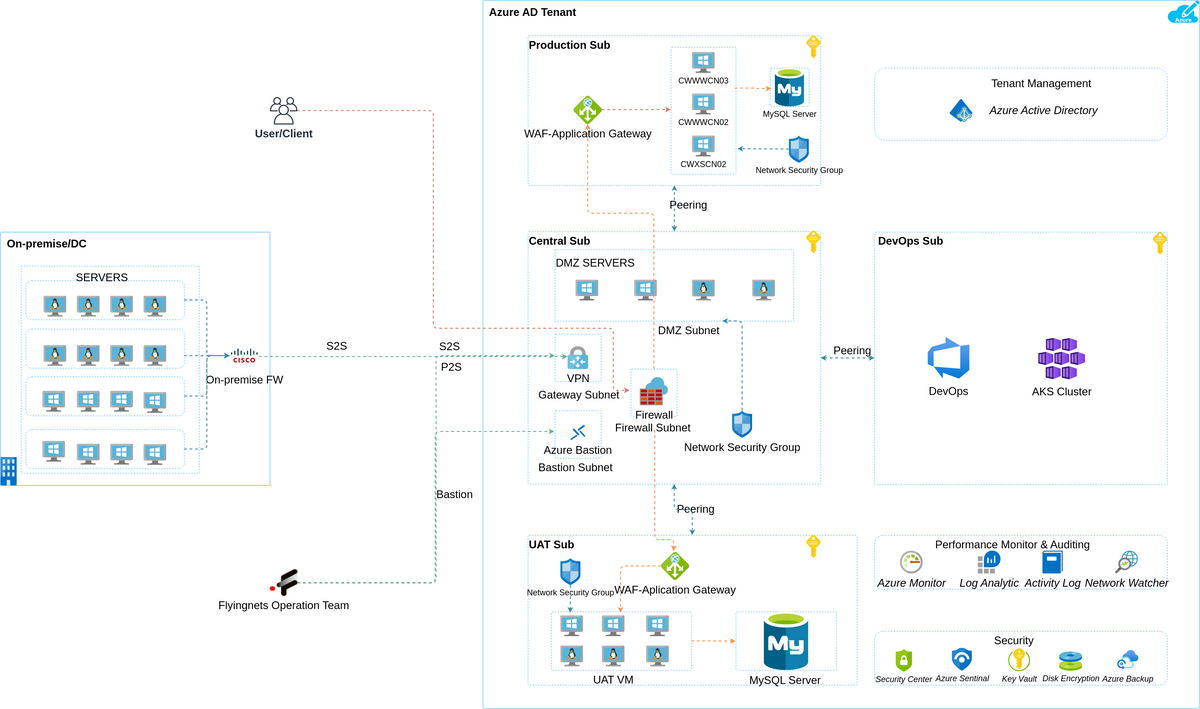

9. Real-time Data Analytics with Azure Stream Services

Objective

Explore Azure Stream Analytics by contributing to or creating a real-time data processing project on Azure. This may involve integrating Azure services like Azure Functions and Power BI to gain insights and visualize real-time data. You can focus on enhancing the real-time analytics capabilities and making the project more user-friendly.

How to Solve?

- Clearly outline the project’s objectives and requirements, including data sources and desired insights.

- Create an Azure Stream Analytics environment, configure inputs/outputs, and integrate Azure Functions and Power BI.

- Ingest real-time data, apply necessary transformations using SQL-like queries.

- Implement custom logic for real-time data processing using Azure Functions.

- Set up Power BI for real-time data visualization and ensure a user-friendly experience.

Click here to explore the source code for this data engineering project.

10. Real-time Financial Market Data Pipeline with Finnhub API and Kafka

Objective

Build a data pipeline that collects and processes real-time financial market data using the Finnhub API and Apache Kafka. This project involves analyzing stock prices, performing sentiment analysis on news data, and visualizing real-time market trends. Contributions can include optimizing data ingestion, enhancing data analysis, or improving the visualization components.

How to Solve?

- Clearly outline the project’s goals, which include collecting and processing real-time financial market data and performing stock analysis and sentiment analysis.

- Create a data pipeline using Apache Kafka and the Finnhub API to collect and process real-time market data.

- Analyze stock prices and perform sentiment analysis on news data within the pipeline.

- Visualize real-time market trends, and consider optimizations for data ingestion and analysis.

- Explore opportunities to optimize data processing, improve analysis, and enhance the visualization components throughout the project.

Click here to explore the source code for this project.

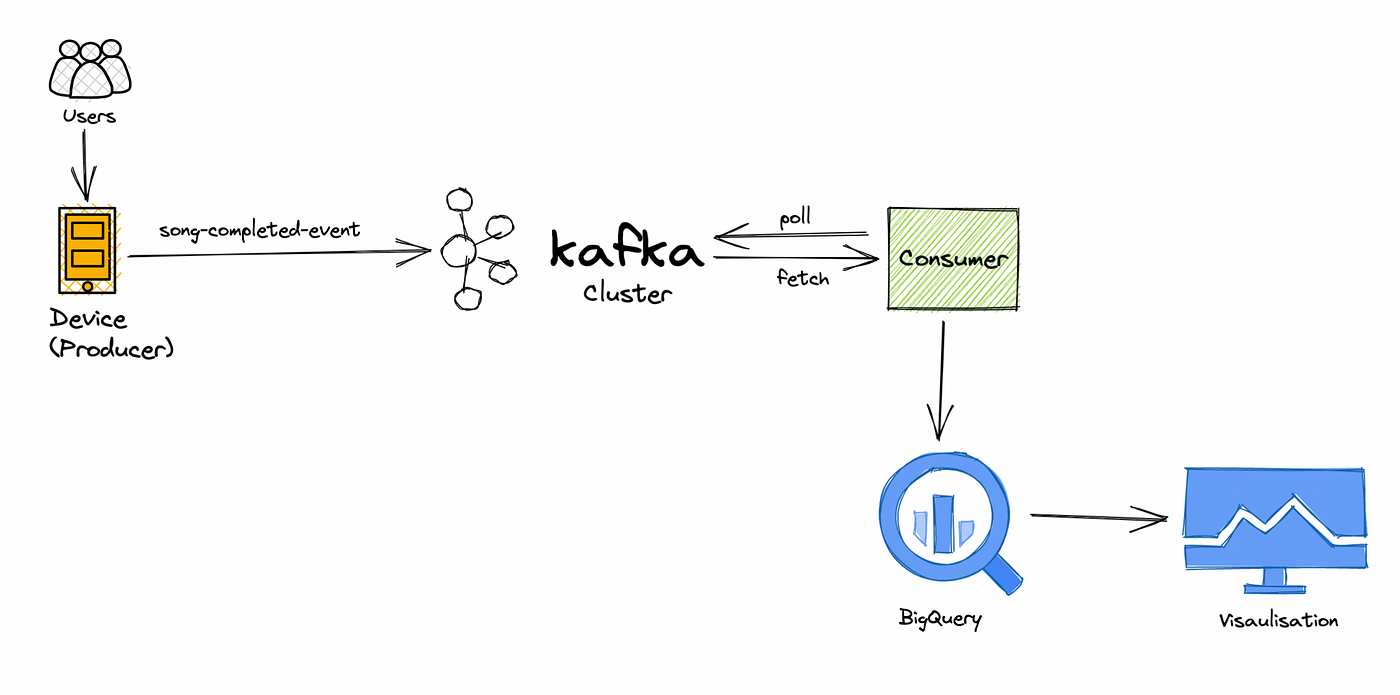

11. Real-time Music Application Data Processing Pipeline

Objective

Collaborate on a real-time music streaming data project focused on processing and analyzing user behavior data in real time. You’ll explore user preferences, track popularity, and enhance the music recommendation system. Contributions may include improving data processing efficiency, implementing advanced recommendation algorithms, or developing real-time dashboards.

How to Solve?

- Clearly define project goals, focusing on real-time user behavior analysis and music recommendation enhancement.

- Collaborate on real-time data processing to explore user preferences, track popularity, and refine the recommendation system.

- Identify and implement efficiency improvements within the data processing pipeline.

- Develop and integrate advanced recommendation algorithms to enhance the system.

- Create real-time dashboards for monitoring and visualizing user behavior data, and consider ongoing enhancements.

Click here to explore the source code.

Advanced-Data Engineering Projects for Resume

12. Website Monitoring

Objective

Develop a comprehensive website monitoring system that tracks performance, uptime, and user experience. This project involves utilizing tools like Selenium for web scraping to collect data from websites and creating alerting mechanisms for real-time notifications when performance issues are detected.

How to Solve?

- Define project objectives, which include building a website monitoring system for tracking performance and uptime, as well as enhancing user experience.

- Utilize Selenium for web scraping to collect data from target websites.

- Implement real-time alerting mechanisms to notify when performance issues or downtime are detected.

- Create a comprehensive system to track website performance, uptime, and user experience.

- Plan for ongoing maintenance and optimization of the monitoring system to ensure its effectiveness over time.

Click here to explore the source code of this data engineering project.

13. Bitcoin Mining

Objective

Dive into the cryptocurrency world by creating a Bitcoin mining data pipeline. Analyze transaction patterns, explore the blockchain network, and gain insights into the Bitcoin ecosystem. This project will require data collection from blockchain APIs, analysis, and visualization.

How to Solve?

- Define the project’s objectives, focusing on creating a Bitcoin mining data pipeline for transaction analysis and blockchain exploration.

- Implement data collection mechanisms from blockchain APIs for mining-related data.

- Dive into blockchain analysis to explore transaction patterns and gain insights into the Bitcoin ecosystem.

- Develop data visualization components to represent Bitcoin network insights effectively.

- Create a comprehensive data pipeline that encompasses data collection, analysis, and visualization for a holistic view of Bitcoin mining activities.

Click here to explore the source code for this data engineering project.

14. GCP Project to Explore Cloud Functions

Objective

Explore Google Cloud Platform (GCP) by designing and implementing a data engineering project that leverages GCP services like Cloud Functions, BigQuery, and Dataflow. This project can include data processing, transformation, and visualization tasks, focusing on optimizing resource usage and improving data engineering workflows.

How to Solve?

- Clearly define the project’s scope, emphasizing the use of GCP services for data engineering, including Cloud Functions, BigQuery, and Dataflow.

- Design and implement the integration of GCP services, ensuring efficient utilization of Cloud Functions, BigQuery, and Dataflow.

- Execute data processing and transformation tasks as part of the project, aligning with the overarching goals.

- Focus on optimizing resource usage within the GCP environment to enhance efficiency.

- Seek opportunities to improve data engineering workflows throughout the project’s lifecycle, aiming for streamlined and effective processes.

Click here to explore the source code for this project.

15. Visualizing Reddit Data

Objective

Collect and analyze data from Reddit, one of the most popular social media platforms. Create interactive visualizations and gain insights into user behavior, trending topics, and sentiment analysis on the platform. This project will require web scraping, data analysis, and creative data visualization techniques.

How to Solve?

- Define the project’s objectives, emphasizing data collection and analysis from Reddit to gain insights into user behavior, trending topics, and sentiment analysis.

- Implement web scraping techniques to gather data from Reddit’s platform.

- Dive into data analysis to explore user behavior, identify trending topics, and perform sentiment analysis.

- Create interactive visualizations to effectively convey insights drawn from the Reddit data.

- Employ innovative data visualization techniques to enhance the presentation of findings throughout the project.

Click here to explore the source code for this project.

Azure Data Engineering Projects

16. Yelp Data Analysis

Objective

In this project, your goal is to comprehensively analyze Yelp data. You will build a data pipeline to extract, transform, and load Yelp data into a suitable storage solution. The analysis can involve:

- Identifying popular businesses.

- Analyzing user review sentiment.

- Providing insights to local businesses for improving their services.

How to Solve?

- Use web scraping techniques or the Yelp API to extract data.

- Clean and preprocess data using Python or Azure Data Factory.

- Store data in Azure Blob Storage or Azure SQL Data Warehouse.

- Perform data analysis using Python libraries like Pandas and Matplotlib.

Click here to explore the source code for this project.

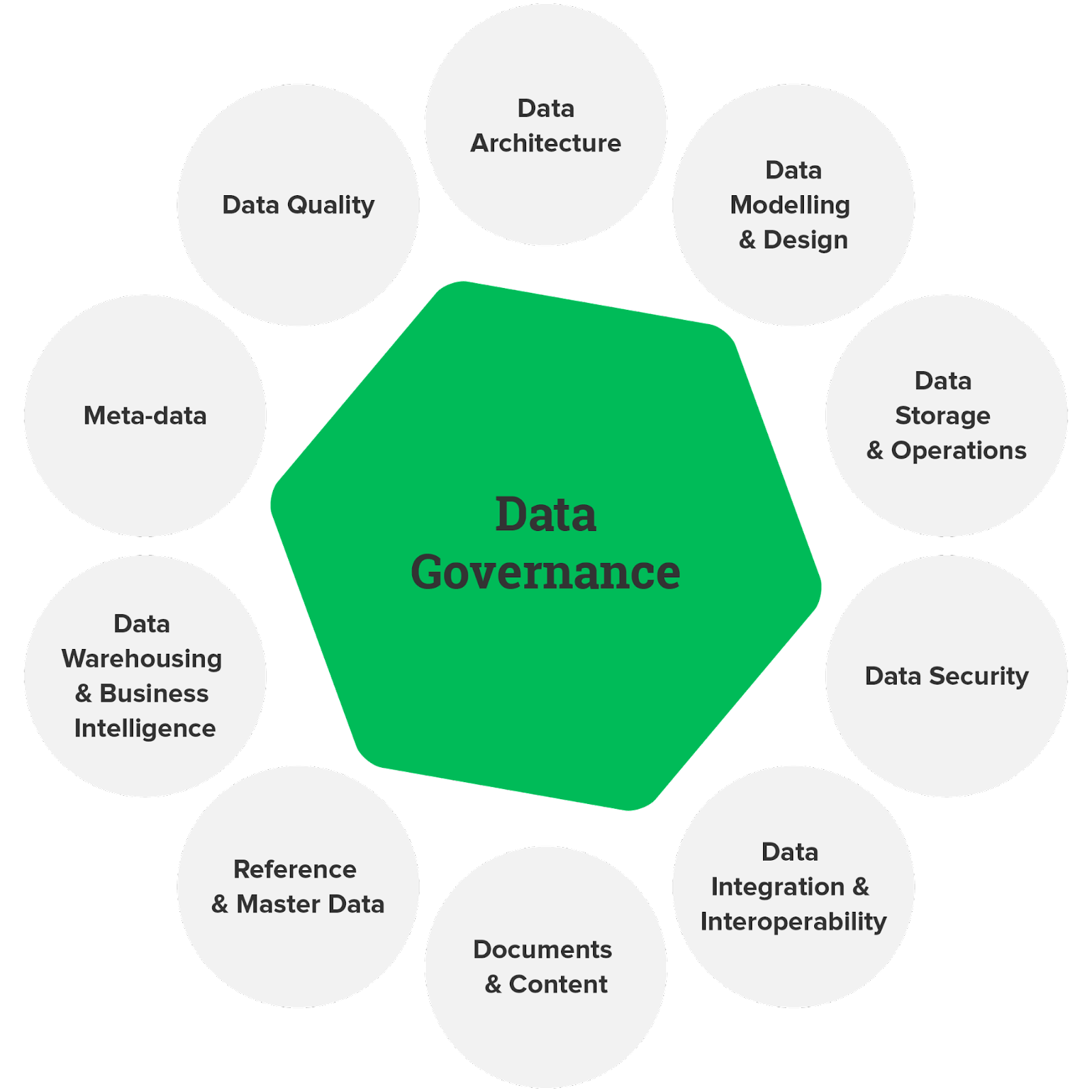

17. Data Governance

Objective

Data governance is critical for ensuring data quality, compliance, and security. In this project, you will design and implement a data governance framework using Azure services. This may involve defining data policies, creating data catalogs, and setting up data access controls to ensure data is used responsibly and in accordance with regulations.

How to Solve?

- Utilize Azure Purview to create a catalog that documents and classifies data assets.

- Implement data policies using Azure Policy and Azure Blueprints.

- Set up role-based access control (RBAC) and Azure Active Directory integration to manage data access.

Click here to explore the source code for this data engineering project.

18. Real-time Data Ingestion

Objective

Design a real-time data ingestion pipeline on Azure using services like Azure Data Factory, Azure Stream Analytics, and Azure Event Hubs. The goal is to ingest data from various sources and process it in real time, providing immediate insights for decision-making.

How to Solve?

- Use Azure Event Hubs for data ingestion.

- Implement real-time data processing with Azure Stream Analytics.

- Store processed data in Azure Data Lake Storage or Azure SQL Database.

- Visualize real-time insights using Power BI or Azure Dashboards.

lick here to explore the source code for this project.

AWS Data Engineering Project Ideas

19. ETL Pipeline

Objective

Build an end-to-end ETL (Extract, Transform, Load) pipeline on AWS. The pipeline should extract data from various sources, perform transformations, and load the processed data into a data warehouse or lake. This project is ideal for understanding the core principles of data engineering.

How to Solve?

- Use AWS Glue or AWS Data Pipeline for data extraction.

- Implement transformations using Apache Spark on Amazon EMR or AWS Glue.

- Store processed data in Amazon S3 or Amazon Redshift.

- Set up automation using AWS Step Functions or AWS Lambda for orchestration.

Click here to explore the source code for this project.

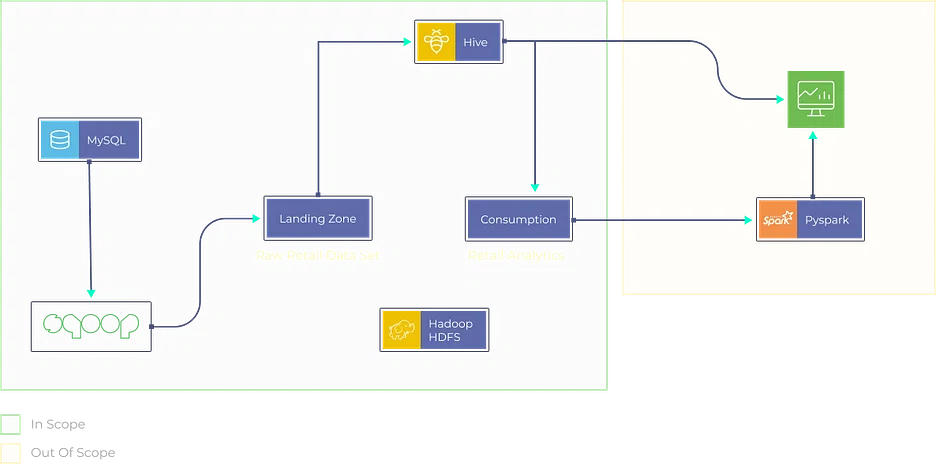

20. ETL and ELT Operations

Objective

Explore ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) data integration approaches on AWS. Compare their strengths and weaknesses in different scenarios. This project will provide insights into when to use each approach based on specific data engineering requirements.

How to Solve?

- Implement ETL processes using AWS Glue for data transformation and loading. Employ AWS Data Pipeline or AWS DMS (Database Migration Service) for ELT operations.

- Store data in Amazon S3, Amazon Redshift, or Amazon Aurora, depending on the approach.

- Automate data workflows using AWS Step Functions or AWS Lambda functions.

Click here to explore the source code for this project.

Conclusion

Data engineering projects offer an incredible opportunity to dive into the world of data, harness its power, and drive meaningful insights. Whether you’re building pipelines for real-time streaming data or crafting solutions to process vast datasets, these projects sharpen your skills and open doors to exciting career prospects.

But don’t stop here; if you’re eager to take your data engineering journey to the next level, consider enrolling in our BlackBelt Plus program. With BB+, you’ll gain access to expert guidance, hands-on experience, and a supportive community, propelling your data engineering skills to new heights. Enroll Now!

Frequently Asked Questions

A. Data engineering involves designing, constructing, and maintaining data pipelines. Example: Creating a pipeline to collect, clean, and store customer data for analysis.

A. Best practices in data engineering include robust data quality checks, efficient ETL processes, documentation, and scalability for future data growth.

A. Data engineers work on tasks like data pipeline development, ensuring data accuracy, collaborating with data scientists, and troubleshooting data-related issues.

A. To showcase data engineering projects on a resume, highlight key projects, mention technologies used, and quantify the impact on data processing or analytics outcomes.

Related

- SEO Powered Content & PR Distribution. Get Amplified Today.

- PlatoData.Network Vertical Generative Ai. Empower Yourself. Access Here.

- PlatoAiStream. Web3 Intelligence. Knowledge Amplified. Access Here.

- PlatoESG. Carbon, CleanTech, Energy, Environment, Solar, Waste Management. Access Here.

- PlatoHealth. Biotech and Clinical Trials Intelligence. Access Here.

- Source: https://www.analyticsvidhya.com/blog/2023/09/data-engineering-project/