Commissioned: Generative AI runs on data, and many organizations have found GenAI is most valuable when they combine it with their unique and proprietary data. But therein lies a conundrum. How can an organization tap into their data treasure trove without putting their business at undue risk?

Many organizations have addressed these concerns with specific guidance on when and how to use generative AI with their own proprietary data. Other organizations have outright banned its use over concerns of IP leakage or exposing sensitive data.

But what if I told you there was an easy way forward already sitting behind your firewall either in your datacenter or on a workstation? And the great news is it doesn’t require months-long procurement cycles or a substantial deployment for a minimum viable product. Not convinced? Let me show you how.

Step 1: Repurpose existing hardware for trial

Depending on what you’re doing with generative AI, workloads can be run on all manner of hardware in a pilot phase. How? There are effectively four stages of data science with these models. The first and second, inferencing and retrieval-augmented generation (RAG), can be done on relatively modest hardware configurations, while the last two, fine-tuning/retraining and new model creation, require extensive infrastructure to see results. Furthermore, models come in various sizes, and not everything has to be a “large language model”. Consequently, we’re seeing a lot of organizations finding success with domain-specific and enterprise-specific “small language models” that are targeted at very narrow use cases. This means you can repurpose a server, find a workstation a model can be deployed on, or, if you’re very adventurous, even download Llama 2 onto your laptop and play around with it. It’s really not that difficult to support this level of experimentation.
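To get a feel for what "relatively modest hardware" means for inferencing, here is a rough back-of-the-envelope sketch. It assumes the common rule of thumb that the memory needed just to hold a model's weights is roughly the parameter count times the bytes per parameter. The function name and the precision table are illustrative, and the estimate ignores activations, caches, and runtime overhead, so treat the numbers as a lower bound rather than a sizing guide.

```python
# Weights-only memory estimate: parameter count x bytes per parameter.
# This is a lower bound; real usage adds activations, caches, and overhead.

BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Llama 2 was released in 7B, 13B, and 70B parameter sizes.
    for name, params in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9)]:
        row = ", ".join(
            f"{p}: {weight_memory_gb(params, p):.1f} GB" for p in BYTES_PER_PARAM
        )
        print(f"{name} -> {row}")
```

By this estimate, a 7B-parameter model at 16-bit precision needs about 14 GB for its weights, and a 4-bit quantized copy needs about 3.5 GB, which is why a workstation or a well-equipped laptop can be enough for a first experiment.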

Step 2: Hit open source

Perhaps nowhere is the open-source community more at the bleeding edge of what is possible than in GenAI. We’re seeing relatively small models rivaling some of the biggest commercial deployments on earth in their aptitude and applicability. The only thing stopping you from getting started is the download speed. There are a whole host of open-source projects at your disposal, so pick a distro and get going. Once downloaded and installed, you’ve effectively activated the first phase of GenAI: inferencing. Theoretically your experimentation could stop here, but what if with just a little more work you could unlock some real magic?

Step 3: Identify your use cases

You might be tempted to skip this step, but I don’t recommend it. Identify a pocket of use cases you want to solve for. The next step is data collection, and you need to ensure you’re grabbing the right data to deliver the right results via the open-source pre-trained LLM you’re augmenting with your data. Figure out who your pilot users will be and ask them what’s important to them, for example a current project they would like assistance with and what existing data they have that would be helpful to pilot with.

Step 4: Activate Retrieval-Augmented Generation (RAG)

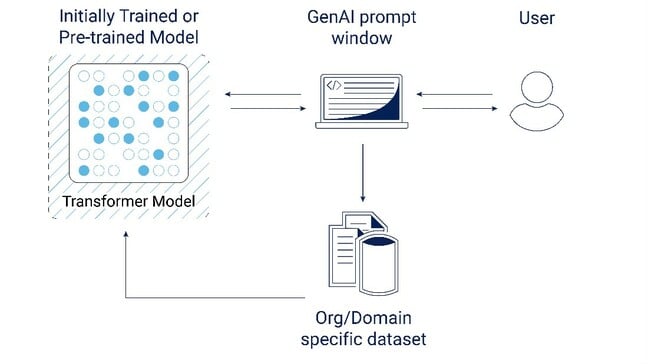

You might think adding data to a model sounds extremely hard – it’s the sort of thing we usually think requires data scientists. But guess what: any organization with a developer can activate retrieval-augmented generation (RAG). In fact, for many use cases this may be all you will ever need to do to add data to a generative AI model. How does it work? Effectively, RAG takes unstructured data like your documents, images, and videos, then encodes and indexes it so the model can retrieve the relevant pieces when answering a question. We piloted this ourselves using open-source technologies like LangChain to create vector databases which enable the GenAI model to analyze data in less than an hour. The result was a fully functioning chatbot, which proved out this concept in record time.

Source: Dell Technologies
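To make the encode, index, and retrieve idea concrete, here is a minimal sketch in plain Python. It is not LangChain's API and not the pilot described above: the `embed` function is a toy word-count stand-in for a real embedding model, `TinyVectorStore` is a hypothetical in-memory substitute for a vector database, and the sample documents are invented for illustration. The final step, sending the assembled prompt to a locally hosted model, is left out.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real pilot would use a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class TinyVectorStore:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        # Encode and index: store each chunk alongside its vector.
        self._items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        # Retrieve: rank stored chunks by similarity to the query.
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(question: str, store: TinyVectorStore, k: int = 2) -> str:
    """Augment: place the retrieved chunks in front of the user's question."""
    context = "\n".join(f"- {chunk}" for chunk in store.search(question, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\n"
    )


# Invented sample documents, for illustration only.
DOCS = [
    "The expense policy requires receipts for purchases over 50 dollars.",
    "VPN access is requested through the internal service desk portal.",
    "Quarterly planning documents are stored on the shared engineering drive.",
]

store = TinyVectorStore()
for doc in DOCS:
    store.add(doc)

prompt = build_prompt("How do I request VPN access?", store, k=1)
print(prompt)
```

The model itself is never retrained here. Your data stays in the index, and only the few retrieved chunks are placed into the prompt at question time, which is why this step can be handled by a developer rather than a data science team.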

In Closing

The unique needs and capabilities of GenAI make for a unique PoC experience, and one that can be rapidly piloted to deliver immediate value and prove its worth to the organization. Piloting this in your own environment offers many advantages in terms of security and cost efficiencies you cannot replicate in the public cloud.

Public cloud is great for many things, but you’re going to pay by the drip for a PoC, and it’s very easy to burn through a budget with users who are inexperienced at prompt engineering. Public cloud also doesn’t offer the same safeguards for sensitive and proprietary data. This can actually slow internal users down, because every time they use a generative AI tool they have to think through whether the data they’re inputting is “safe” to use with that particular system. Counterintuitively, this is one of the few times the datacenter offers unusually high agility and a lower upfront cost than its public cloud counterpart.

So go forth, take an afternoon and get your own PoC under way, and once you’re ready for the next phase we’re more than happy to help.

Here’s where you can learn more about Dell Generative AI Solutions.

Brought to you by Dell Technologies.


- Source: https://go.theregister.com/feed/www.theregister.com/2023/10/09/the_generative_ai_easy_button/