Amazon Redshift ML empowers data analysts and database developers to integrate the capabilities of machine learning and artificial intelligence into their data warehouse. Amazon Redshift ML helps to simplify the creation, training, and application of machine learning models through familiar SQL commands.

You can further enhance Amazon Redshift’s inferencing capabilities by bringing your own models (BYOM). There are two types of BYOM: remote BYOM for remote inferences, and local BYOM for local inferences. With local BYOM, you import a model trained in Amazon SageMaker, including Amazon SageMaker Autopilot models, into Amazon Redshift and run inference inside the database. With remote BYOM, you invoke custom ML models that are deployed remotely in SageMaker. This lets you use custom SageMaker models for churn prediction, XGBoost, linear regression, multi-class classification, and now LLMs.

Amazon SageMaker JumpStart is a SageMaker feature that helps you deploy pretrained, publicly available large language models (LLMs) for a wide range of problem types, to help you get started with machine learning. You can access pretrained models and use them as-is, or incrementally train and fine-tune them with your own data.

Previously, Amazon Redshift ML supported only BYOMs that accepted text or CSV as the data input and output format. It now also supports the SUPER data type for both input and output. With this support, you can use LLMs in Amazon SageMaker JumpStart, which offers numerous proprietary and publicly available foundation models from various model providers.

LLMs have diverse use cases. Amazon Redshift ML supports the LLMs available in SageMaker, including models for sentiment analysis. In sentiment analysis, the model analyzes product feedback and other strings of text and determines their sentiment. This capability is particularly valuable for understanding product reviews and customer feedback.

Overview of solution

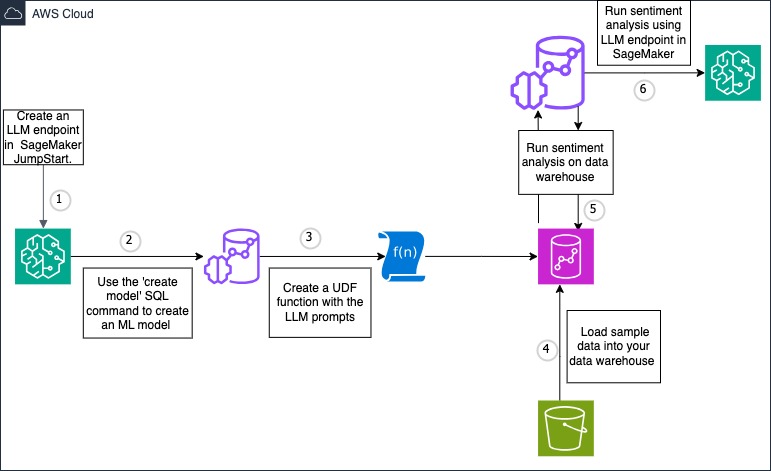

In this post, we use Amazon Redshift ML for sentiment analysis on reviews stored in an Amazon Redshift table. The model takes the reviews as an input and returns a sentiment classification as the output. We use an out of the box LLM in SageMaker Jumpstart. Below picture shows the solution overview.

Walkthrough

Follow the steps below to perform sentiment analysis using Amazon Redshift’s integration with SageMaker JumpStart to invoke LLMs:

- Deploy an LLM using foundation models in SageMaker JumpStart and create an endpoint

- Using Amazon Redshift ML, create a model referencing the SageMaker JumpStart LLM endpoint

- Load a sample reviews dataset into your Amazon Redshift data warehouse

- Create a user-defined function (UDF) that engineers the prompt for sentiment analysis

- Make a remote inference to the LLM to generate sentiment analysis for the input dataset

- Analyze the output

Prerequisites

For this walkthrough, you should have the following prerequisites:

- An AWS account

- An Amazon Redshift Serverless preview workgroup or an Amazon Redshift provisioned preview cluster. Refer to the documentation on creating a preview workgroup or creating a preview cluster for steps.

- For the preview, your Amazon Redshift data warehouse should be on the preview_2023 track in one of these Regions: US East (N. Virginia), US West (Oregon), Europe (Ireland), US East (Ohio), Asia Pacific (Tokyo), or Europe (Stockholm).

Solution Steps

Follow these solution steps.

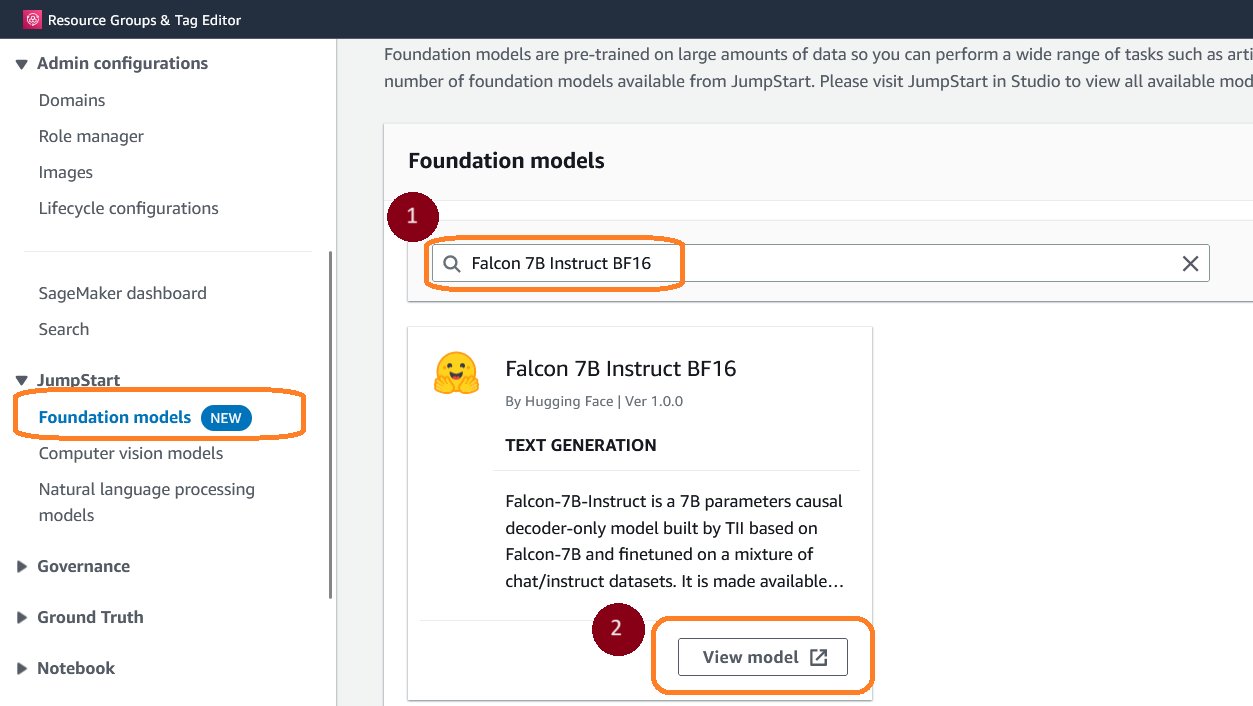

1. Deploy an LLM using foundation models in SageMaker JumpStart and create an endpoint

- Navigate to Foundation Models in Amazon SageMaker JumpStart

- Search for the foundation model by typing Falcon 7B Instruct BF16 in the search box

- Choose View Model

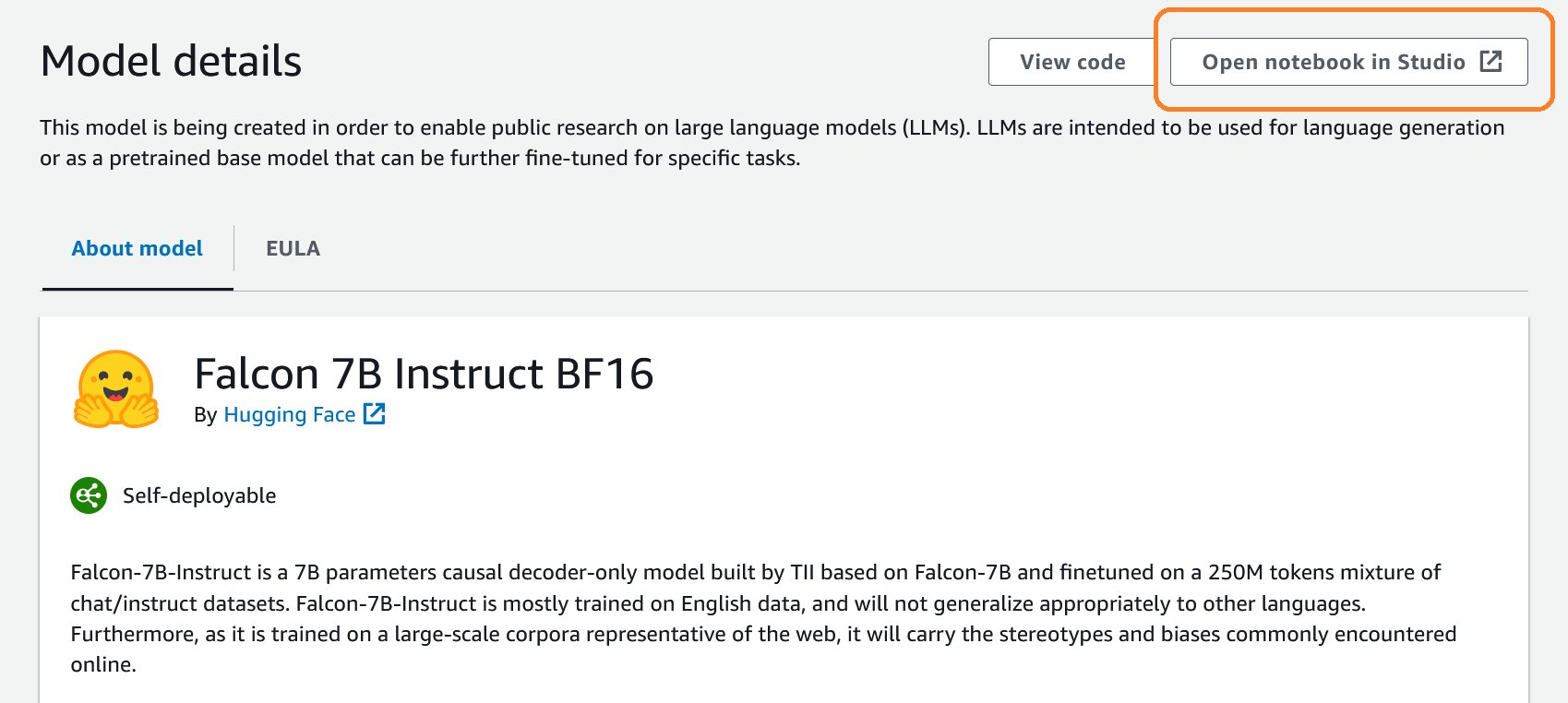

- On the Model Details page, choose Open notebook in Studio

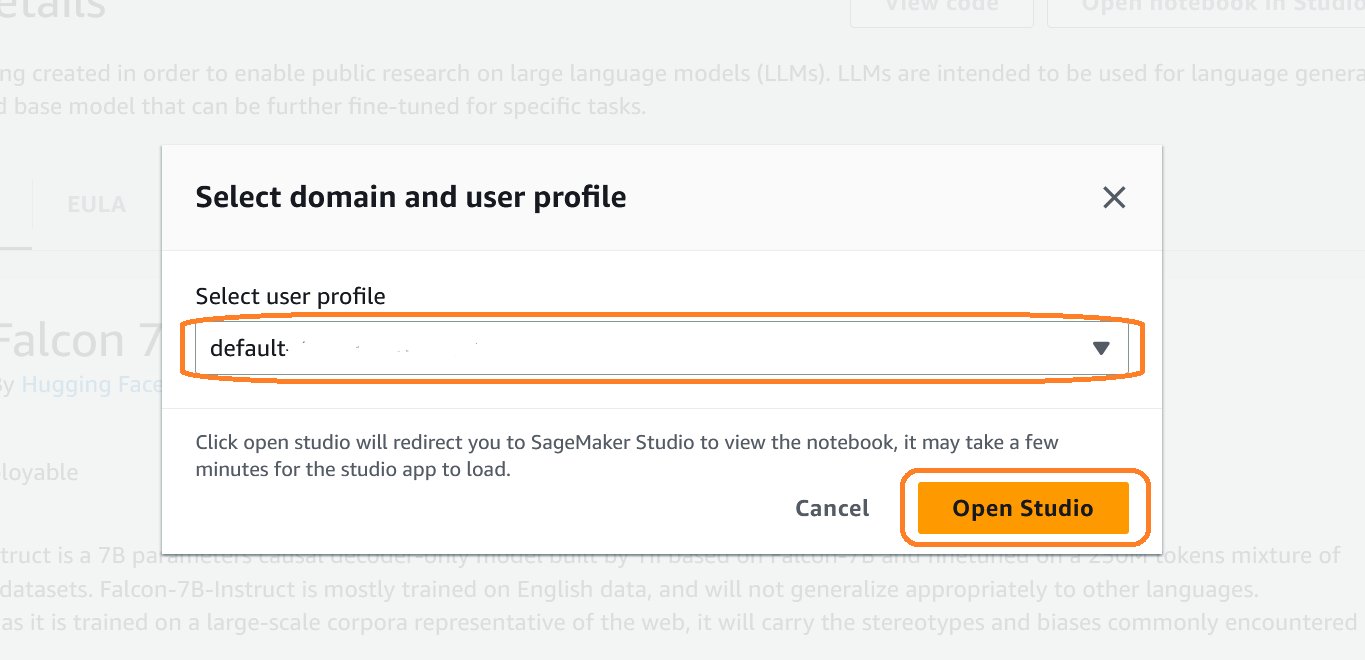

- When the Select domain and user profile dialog box opens, choose the profile you want from the drop-down menu and choose Open Studio

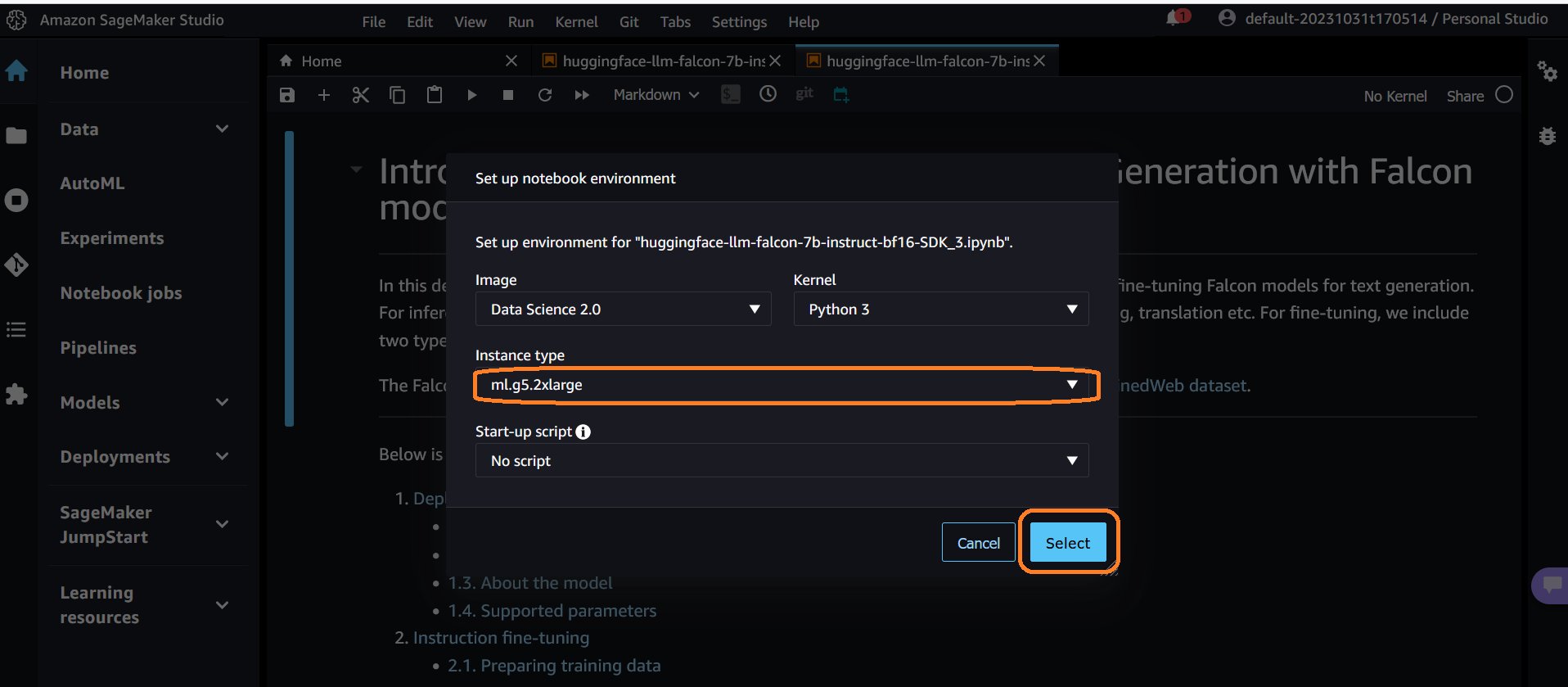

- When the notebook opens, the Set up notebook environment prompt appears. Choose ml.g5.2xlarge or any other instance type recommended in the notebook, and then choose Select

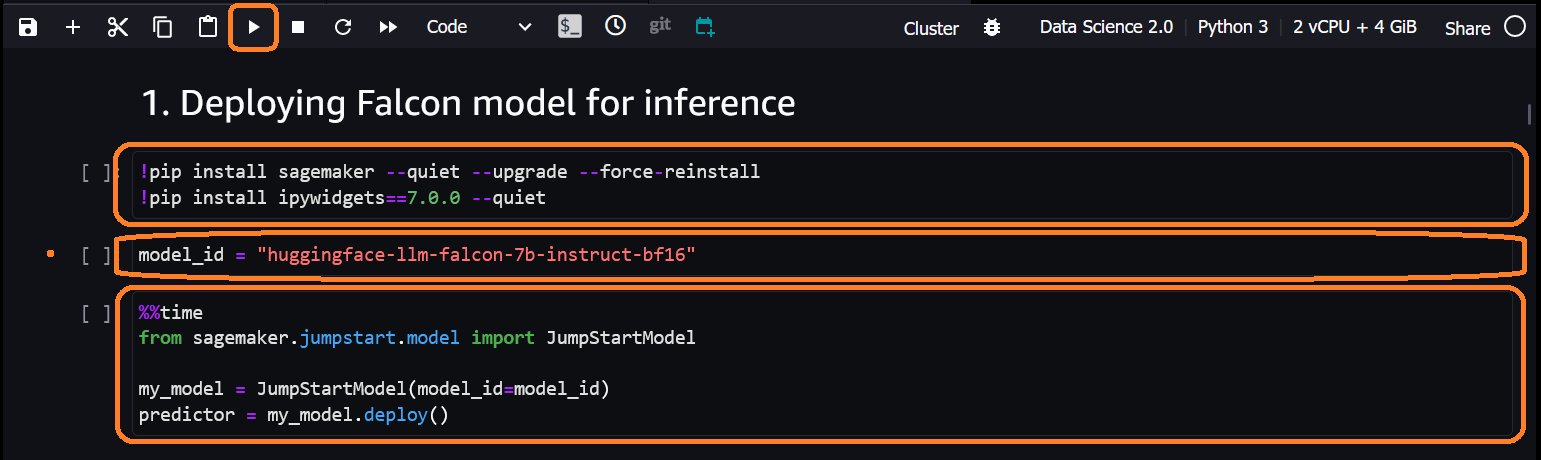

- Scroll to the Deploying Falcon model for inference section of the notebook and run the three cells in that section

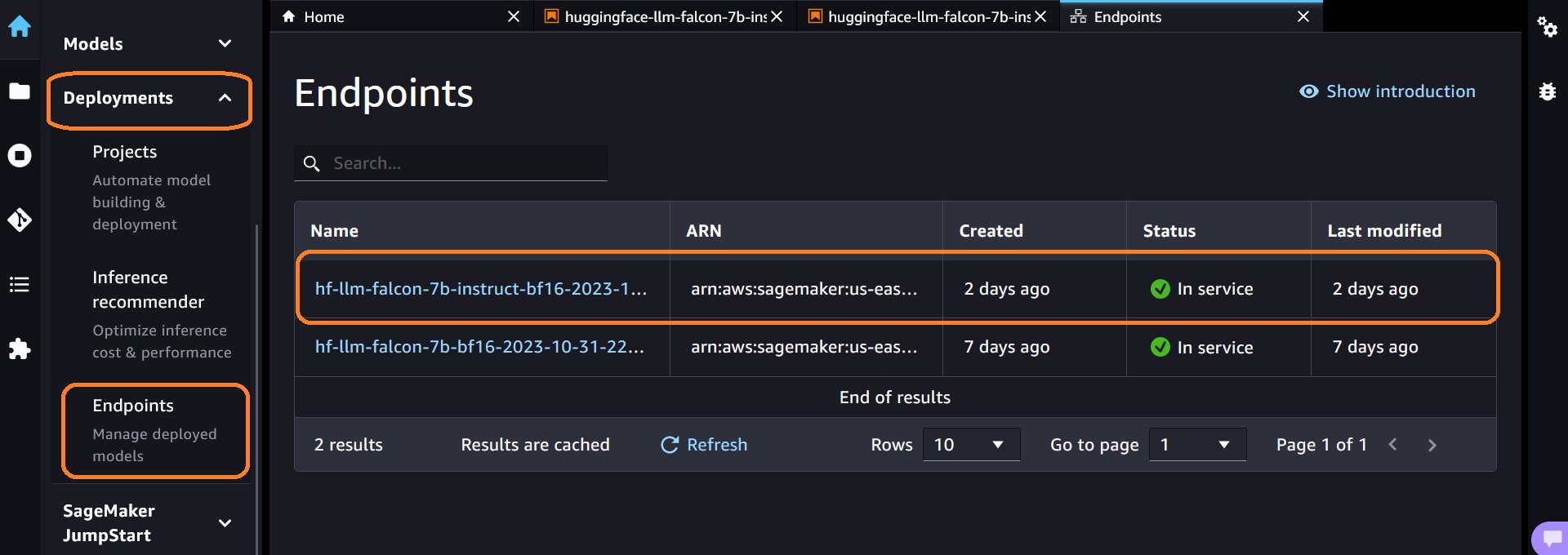

- After the third cell finishes running, expand the Deployments section in the left pane and choose Endpoints to see the endpoint that was created. Make a note of the endpoint name; you use it in the next steps

- Select Finish.
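Before referencing the endpoint from Amazon Redshift, it can help to confirm that it responds. The following is a minimal sketch, not part of the original walkthrough. It assumes the endpoint accepts a JSON body with `inputs` and `parameters` keys and replies with JSON; confirm the exact request format in the model notebook. The function name `invoke_llm` is hypothetical, and the client is passed in so the function can be exercised without AWS access.

```python
import json


def invoke_llm(client, endpoint_name, prompt, max_new_tokens=50):
    """Send one prompt to a SageMaker endpoint and return the decoded JSON reply.

    `client` is expected to behave like boto3.client("sagemaker-runtime").
    The {"inputs": ..., "parameters": ...} body shape is an assumption about
    the deployed model's request format; confirm it in the model notebook.
    """
    body = json.dumps({
        "inputs": prompt,
        "parameters": {"max_new_tokens": max_new_tokens},
    })
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=body,
    )
    # The response body is a stream-like object; read it and decode the JSON.
    return json.loads(response["Body"].read())


# Example call (requires AWS credentials and a live endpoint):
#   import boto3
#   runtime = boto3.client("sagemaker-runtime")
#   print(invoke_llm(runtime, "<endpointname>", "Say hello."))
```

If the call returns a JSON document, the endpoint is ready to be referenced from Amazon Redshift ML.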

2. Using Amazon Redshift ML, create a model referencing the SageMaker JumpStart LLM endpoint

Create a model using the Amazon Redshift ML bring your own model (BYOM) capability. After the model is created, you can use its output function to make remote inferences to the LLM. To create a model in Amazon Redshift for the LLM endpoint created previously, follow these steps.

- Log in to your Amazon Redshift endpoint. You can use Query Editor V2 to log in

- Import this notebook into Query Editor V2. It has all the SQL statements used in this post

- Ensure you have the below IAM policy added to your IAM role. Replace <endpointname> with the SageMaker JumpStart endpoint name captured earlier

- Create a model in Amazon Redshift using the CREATE MODEL statement given below. Replace <endpointname> with the endpoint name captured earlier. The input and output data types for the model need to be SUPER
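The policy and the statement referred to in these steps are not reproduced in this text. As a hedged sketch, the Python below renders an identity-based policy that allows `sagemaker:InvokeEndpoint` on a single endpoint, together with a remote BYOM `CREATE MODEL` statement that uses SUPER for input and output. The model and function name `falcon_7b_instruct_llm_model` is a hypothetical placeholder, and `IAM_ROLE default` assumes a default IAM role is attached to the data warehouse; adjust both to your environment.

```python
import json


def build_invoke_policy(region, account_id, endpoint_name):
    """Return an IAM policy document allowing InvokeEndpoint on one endpoint."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "sagemaker:InvokeEndpoint",
                "Resource": (
                    f"arn:aws:sagemaker:{region}:{account_id}:"
                    f"endpoint/{endpoint_name}"
                ),
            }
        ],
    }


def create_model_sql(endpoint_name, model_name="falcon_7b_instruct_llm_model"):
    """Render a remote BYOM CREATE MODEL statement with SUPER input and output.

    The model/function name is a hypothetical placeholder.
    """
    return (
        f"CREATE MODEL {model_name}\n"
        f"FUNCTION {model_name}(super)\n"
        "RETURNS super\n"
        f"SAGEMAKER '{endpoint_name}'\n"
        "IAM_ROLE default;"
    )


# Placeholders are left unfilled on purpose; substitute your own values.
print(json.dumps(build_invoke_policy("<region>", "<account-id>", "<endpointname>"), indent=2))
print(create_model_sql("<endpointname>"))
```

Scoping the `Resource` to one endpoint ARN keeps the role from invoking other endpoints in the account.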

3. Load sample reviews data set into your Amazon Redshift data warehouse

In this post, we use a fictitious sample reviews dataset for the walkthrough.

- Log in to Amazon Redshift using Query Editor V2

- Create the sample_reviews table using the below SQL statement. This table stores the sample reviews dataset

- Download the sample file, upload it to your S3 bucket, and load the data into the sample_reviews table using the below COPY command

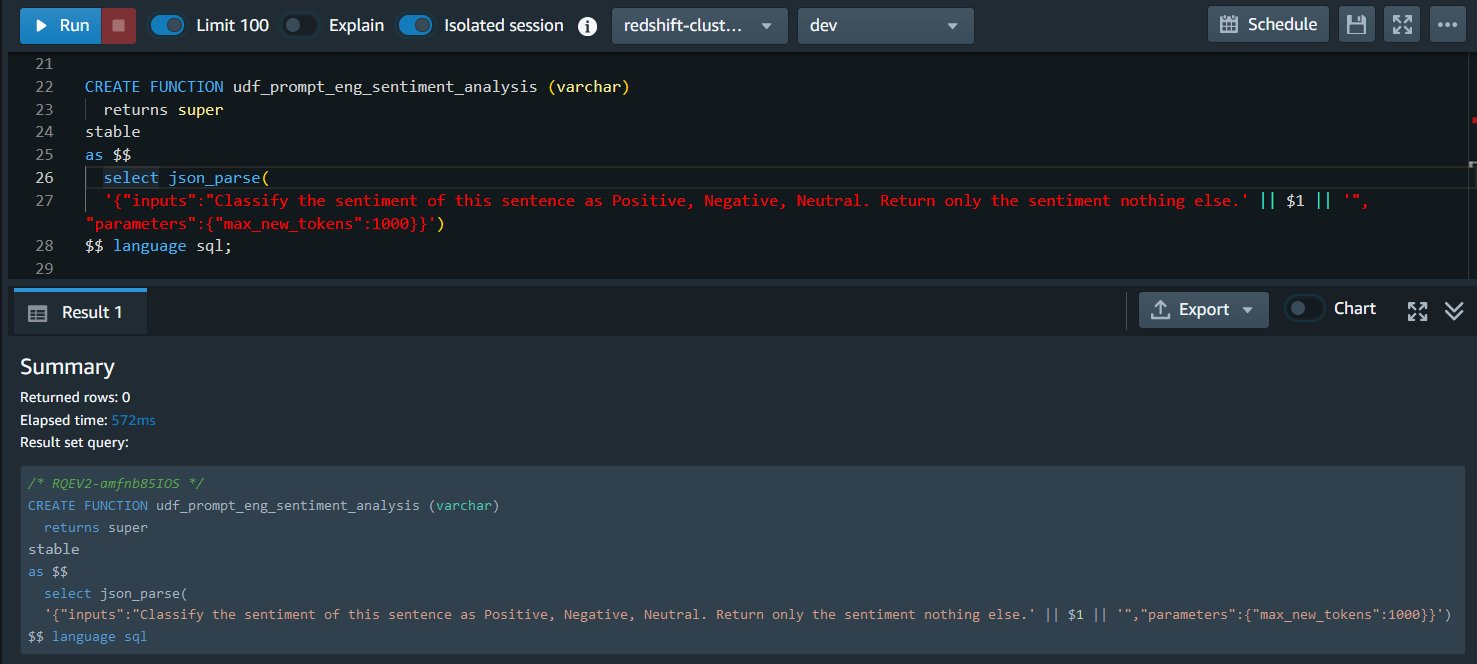

4. Create a UDF that engineers the prompt for sentiment analysis

The input to the LLM consists of two main parts – the prompt and the parameters.

The prompt is the guidance or set of instructions you want to give to the LLM. The prompt should be clear enough to give the LLM proper context and direction. Generative AI systems rely heavily on the prompt to determine how to generate a response. If the prompt does not provide enough context and guidance, it can lead to unhelpful responses. Prompt engineering helps avoid these pitfalls.

Finding the right words and structure for a prompt is challenging and often requires trial and error. Prompt engineering lets you experiment to find prompts that reliably produce the desired output, and helps shape the input to make the best use of the capabilities of the generative AI model. Well-constructed prompts allow generative AI to provide more nuanced, high-quality, and helpful responses tailored to the specific needs of the user.

The parameters allow configuring and fine-tuning the model’s output. This includes settings like maximum length, randomness levels, stopping criteria, and more. Parameters give control over the properties and style of the generated text and are model specific.

The UDF below takes varchar data in your data warehouse and parses it into SUPER (JSON format) for the LLM. This lets you keep your data as varchar in your data warehouse, without converting it to SUPER yourself, and still use LLMs in Amazon Redshift ML. It also makes prompt engineering easy: if you want to try a different prompt, you can just replace the UDF.

The UDF given below has both the prompt and a parameter.

- Prompt: “Classify the sentiment of this sentence as Positive, Negative, Neutral. Return only the sentiment nothing else” – This instructs the model to classify the review into 3 sentiment categories.

- Parameter: “max_new_tokens”:1000 – This allows the model to return up to 1000 tokens.
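The prompt and parameter above can be sketched outside the database to show the JSON document that the model receives. This is an illustration, not the UDF itself: the `inputs` and `parameters` key names are an assumption about the request format of the deployed model, `build_payload` is a hypothetical helper name, and the sample review is made up.

```python
import json

# Prompt text taken from the walkthrough.
PROMPT = (
    "Classify the sentiment of this sentence as Positive, Negative, Neutral. "
    "Return only the sentiment nothing else."
)


def build_payload(review, max_new_tokens=1000):
    """Combine the fixed prompt with one review into the request document.

    The "inputs"/"parameters" keys are assumed; check the model documentation.
    """
    return {
        "inputs": f"{PROMPT} {review}",
        "parameters": {"max_new_tokens": max_new_tokens},
    }


# Hypothetical review text, for illustration only.
payload = build_payload('The "deluxe" blender broke after two days.')
print(json.dumps(payload))
```

Building the document with a JSON library rather than string concatenation means quotes inside a review are escaped correctly. A prompt UDF that concatenates strings would need to handle such characters itself.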

5. Make a remote inference to the LLM model to generate sentiment analysis for input dataset

The output of this step is stored in a newly created table called sentiment_analysis_for_reviews. Run the below SQL statement to create a table with the output from the LLM.
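The statement itself is not reproduced in this text. One plausible shape, sketched here under assumptions, is a CREATE TABLE AS statement that applies the prompt UDF to each review and passes the result to the model function. The column name `review`, the UDF name `udf_prompt_eng_sentiment_analysis`, and the function name `falcon_7b_instruct_llm_model` are hypothetical; only the two table names come from the walkthrough.

```python
def inference_sql(
    source_table="sample_reviews",
    review_column="review",  # hypothetical column name
    prompt_udf="udf_prompt_eng_sentiment_analysis",  # hypothetical UDF name
    model_function="falcon_7b_instruct_llm_model",  # hypothetical model function
    target_table="sentiment_analysis_for_reviews",
):
    """Render a CREATE TABLE AS statement that stores the LLM output per review."""
    return (
        f"CREATE TABLE {target_table} AS\n"
        f"SELECT {review_column},\n"
        f"       {model_function}({prompt_udf}({review_column})) AS sentiment\n"
        f"FROM {source_table};"
    )


print(inference_sql())
```

The rendered statement can be pasted into Query Editor V2 after the names are replaced with the ones used in your data warehouse.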

6. Analyze the output

The output of the LLM is of data type SUPER. For the Falcon model, the output is available in the attribute named generated_text. Each LLM has its own output payload format. Refer to the documentation for the LLM you would like to use for its output format.

Run the below query to extract the sentiment from the output of the LLM. For each review, you can see its sentiment.

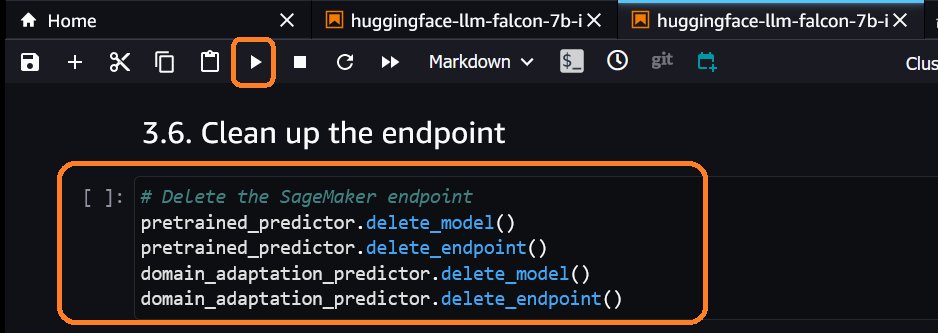
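If the output is exported from Amazon Redshift for further processing, the same extraction can be sketched in Python. This assumes each response is either a JSON object with a `generated_text` attribute or a one-element list wrapping such an object; the exact envelope depends on the model. The sample responses are hypothetical.

```python
import json
from collections import Counter


def extract_sentiment(llm_output):
    """Pull the generated_text value out of one model response.

    Accepts a JSON string or an already-parsed object, and handles both a
    single object and a one-element list of objects (an assumption about
    the response envelope).
    """
    doc = json.loads(llm_output) if isinstance(llm_output, str) else llm_output
    if isinstance(doc, list):
        doc = doc[0]
    # Models often pad the answer with whitespace or a trailing newline.
    return doc["generated_text"].strip()


# Hypothetical responses, for illustration only.
outputs = [
    '[{"generated_text": " Positive"}]',
    '[{"generated_text": "Negative\\n"}]',
    {"generated_text": "Positive"},
]
sentiments = [extract_sentiment(o) for o in outputs]
counts = Counter(sentiments)
print(counts)
```

Stripping whitespace before counting keeps values such as " Positive" and "Positive" in the same bucket.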

Cleaning up

To avoid incurring future charges, delete the resources.

- Delete the LLM endpoint in SageMaker JumpStart

- Drop the sample_reviews table and the model in Amazon Redshift using the below query

- If you have created an Amazon Redshift endpoint, delete the endpoint as well

Conclusion

In this post, we showed you how to perform sentiment analysis on data stored in Amazon Redshift using Falcon, a large language model (LLM) in SageMaker JumpStart, and Amazon Redshift ML. Falcon is used as an example; you can use other LLMs with Amazon Redshift ML as well. Sentiment analysis is just one of the many use cases that are possible with LLM support in Amazon Redshift ML. Others include data enrichment, content summarization, and knowledge graph development. LLM support broadens the analytical capabilities of Amazon Redshift ML as it continues to help data analysts and developers incorporate machine learning into their data warehouse workflows through familiar SQL commands. The addition of the SUPER data type allows LLMs from SageMaker JumpStart to be integrated for remote BYOM inferences.

About the Authors

Blessing Bamiduro is part of the Amazon Redshift Product Management team. She works with customers to help explore the use of Amazon Redshift ML in their data warehouse. In her spare time, Blessing loves travels and adventures.

Anusha Challa is a Senior Analytics Specialist Solutions Architect focused on Amazon Redshift. She has helped many customers build large-scale data warehouse solutions in the cloud and on premises. She is passionate about data analytics and data science.

- Source: https://aws.amazon.com/blogs/big-data/large-language-models-for-sentiment-analysis-with-amazon-redshift-ml-preview/