Introduction

Kubernetes plays a vital role in managing containerized applications, especially in today’s dynamic cloud-native environments. With the rise of microservices and containers, Kubernetes simplifies deployment and scaling. Effective monitoring of Kubernetes is crucial for keeping containerized systems healthy, performant, and reliable.

In this complex and ever-changing environment, where applications are spread across multiple containers on various nodes, monitoring becomes essential for maintaining efficiency. Without proper monitoring, issues are hard to detect early and respond to promptly, which can lead to downtime, degraded performance, and wasted resources.

Monitoring is also essential for Kubernetes itself to run smoothly. This article first explains why monitoring matters in a microservices architecture, then explores several Kubernetes monitoring best practices in greater detail.

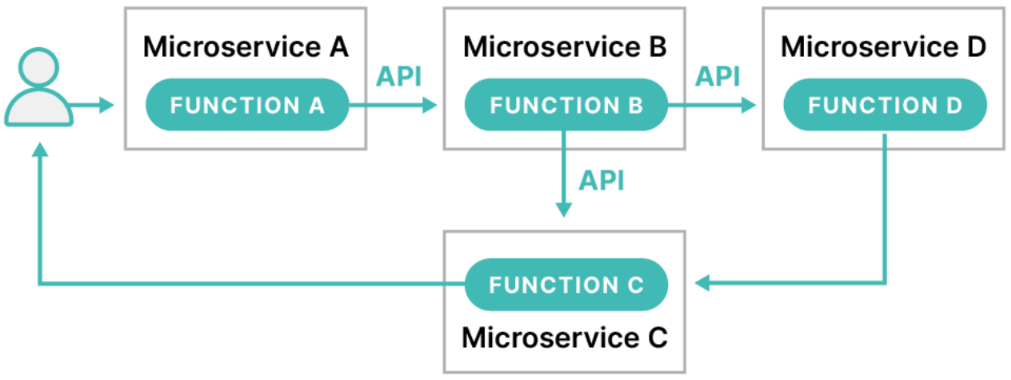

Why is Monitoring Needed in Microservices Architecture?

Monitoring is critically important in Kubernetes environments because container orchestration is dynamic and complex. It informs decisions about scaling, resource allocation, and troubleshooting. Let’s discuss each of these points below:

Performance Optimization

Kubernetes can scale applications automatically based on demand, creating new pods (each running one or more containers) as load increases and terminating them as load falls. This elasticity helps applications keep up with changing traffic without manual intervention.

Because resource allocation changes constantly, a reliable monitoring setup is needed to track utilization and confirm that the system performs as expected. Autoscaling itself depends on metrics: the Horizontal Pod Autoscaler decides how many replicas to run based on observed values such as CPU utilization. Monitoring keeps organizations informed about these changes so they can optimize resource usage and troubleshoot issues as they arise.
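To make the link between metrics and scaling concrete, here is a minimal Python sketch of the core rule documented for the Horizontal Pod Autoscaler: desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default). The function name, the min/max bounds, and the sample CPU figures are illustrative assumptions; the real controller also applies stabilization windows and handles missing or not-yet-ready pods, which this sketch omits.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    tolerance: float = 0.1,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Sketch of the HPA scaling rule (hypothetical helper, not a Kubernetes API).

    current_metric and target_metric are, for example, average CPU utilization
    expressed as a percentage of the pods' CPU requests.
    """
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("current_replicas and target_metric must be positive")
    ratio = current_metric / target_metric
    # Close enough to the target: leave the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds, as minReplicas/maxReplicas do on an HPA.
    return max(min_replicas, min(max_replicas, desired))


# Load rose: 4 pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60))  # 6
# Load fell: 4 pods averaging 20% CPU against the same target.
print(desired_replicas(4, 20, 60))  # 2
```

The second call shows the scale-down half of autoscaling: when load drops, the same formula yields fewer replicas, and the surplus pods are terminated.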

Resource Allocation and Optimization

In a Kubernetes cluster, many applications with different resource requirements share the same nodes. This can lead to resource-allocation problems: for example, one application that consumes resources excessively can starve the others running alongside it.

Monitoring helps optimize resource allocation by detecting, early on, when resources inside the cluster are overused or underused. That visibility lets you make sure each pod has the resources it needs, preventing bottlenecks and improving overall efficiency.
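One simple way to spot both extremes is to compare each pod's observed usage with what it requested. The sketch below assumes usage figures have already been collected (for example from metrics-server via `kubectl top pods`, or from Prometheus); the pod names, the numbers, and the 30% / 90% thresholds are hypothetical and would need tuning for real workloads.

```python
from dataclasses import dataclass


@dataclass
class PodUsage:
    name: str
    cpu_request_m: int  # CPU request in millicores (what the scheduler reserved)
    cpu_usage_m: int    # observed CPU usage in millicores, e.g. averaged over 5 minutes


def classify(pod: PodUsage, low: float = 0.3, high: float = 0.9) -> str:
    """Label a pod by its usage/request ratio. Thresholds are illustrative assumptions."""
    if pod.cpu_request_m <= 0:
        return "no-request"  # nothing reserved, so usage cannot be judged against a request
    ratio = pod.cpu_usage_m / pod.cpu_request_m
    if ratio < low:
        return "over-provisioned"   # most of the reserved CPU sits idle
    if ratio > high:
        return "under-provisioned"  # running at or beyond what it asked for
    return "ok"


pods = [
    PodUsage("api", cpu_request_m=500, cpu_usage_m=480),
    PodUsage("worker", cpu_request_m=1000, cpu_usage_m=120),
    PodUsage("cache", cpu_request_m=250, cpu_usage_m=150),
    PodUsage("batch", cpu_request_m=0, cpu_usage_m=700),
]
for pod in pods:
    print(pod.name, classify(pod))
```

Pods flagged `no-request` are worth reviewing as well: without a request the scheduler reserves no CPU for them, which makes contention with neighboring pods more likely.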

Performance and Efficient Troubleshooting

Managing the performance and health of individual applications in a constantly changing environment can be quite challenging. When many microservices are spread across different nodes, identifying the root cause of an issue can be extremely difficult. Monitoring provides real-time insight into the status of pods, nodes, and the cluster as a whole.

By keeping a close eye on these components, you can maintain the overall health and performance of your system. Monitoring lets you track response times, error rates, and throughput, so you can identify problems quickly, troubleshoot them before they escalate, and reduce downtime for users.
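As an illustration of those three signals, the sketch below computes throughput, error rate, and 95th-percentile latency for a single time window. It assumes the request records are already available, counts only HTTP 5xx responses as errors, and uses the nearest-rank percentile method; these are choices made for the example, and the request data is hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class RequestRecord:
    latency_ms: float
    status_code: int


def percentile(values: List[float], p: float) -> float:
    """Nearest-rank percentile, p in (0, 100]."""
    if not values:
        raise ValueError("percentile of an empty list")
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(records: List[RequestRecord], window_s: float) -> Dict[str, Optional[float]]:
    """Throughput, error rate, and p95 latency for the requests seen in one window."""
    if not records:
        return {"throughput_rps": 0.0, "error_rate": 0.0, "p95_latency_ms": None}
    errors = sum(1 for r in records if r.status_code >= 500)  # server-side errors only
    return {
        "throughput_rps": len(records) / window_s,
        "error_rate": errors / len(records),
        "p95_latency_ms": percentile([r.latency_ms for r in records], 95),
    }


# Ten hypothetical requests observed over a 5-second window, two of them failing.
window = [RequestRecord(latency_ms=10.0 * i, status_code=200) for i in range(1, 9)]
window += [RequestRecord(90.0, 503), RequestRecord(100.0, 500)]
print(summarize(window, window_s=5.0))
# {'throughput_rps': 2.0, 'error_rate': 0.2, 'p95_latency_ms': 100.0}
```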

Best Practices for Kubernetes Monitoring

When implementing monitoring in Kubernetes, following a few established best practices helps you collect and interpret metrics, traces, and logs more efficiently. Let’s explore them in more detail below:

Collect Comprehensive Metrics

There is a wide range of metrics you can track to measure different aspects of a Kubernetes system, and it is highly advisable to collect broadly to gain a comprehensive understanding of it. These metrics should cover resource utilization (CPU, memory, and disk), network traffic, pod and node health, and application-specific metrics.

Together, this data gives you a complete picture of the Kubernetes environment. For example, CPU usage per node or per pod shows whether resources are being underutilized or overutilized, which is crucial for right-sizing workloads.
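Application-specific metrics are commonly exposed for scraping in the Prometheus text exposition format, which is plain text served over HTTP. Client libraries such as `prometheus_client` generate it for you; the standard-library sketch below only shows what the output looks like. The metric name `orders_processed_total`, its `status` label, and the counts are hypothetical, and serving the text from an HTTP endpoint is left out.

```python
from typing import Dict, List, Tuple, Union

Sample = Tuple[Dict[str, str], Union[int, float]]


def escape_label_value(value: str) -> str:
    # The text format requires escaping backslash, double quote, and newline in label values.
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_metric(name: str, help_text: str, metric_type: str, samples: List[Sample]) -> str:
    """Render one metric family: HELP and TYPE lines followed by one line per sample."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} {metric_type}"]
    for labels, value in samples:
        if labels:
            pairs = ",".join(f'{k}="{escape_label_value(v)}"' for k, v in sorted(labels.items()))
            lines.append(f"{name}{{{pairs}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


text = render_metric(
    "orders_processed_total",
    "Orders processed by the checkout service.",
    "counter",
    [({"status": "ok"}, 120), ({"status": "failed"}, 3)],
)
print(text, end="")
```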

Implement Distributed Tracing

Traditional logging on its own is not enough for microservices, which Kubernetes is built to run, because a single request often passes through many services in different pods. It is therefore advisable to use distributed tracing to understand how requests move between microservices.

A trace records when a request entered each microservice and how long it was processed there. This gives a more thorough picture of application performance and helps pinpoint where latency is introduced. Following a request's path through the trace also makes it easier to troubleshoot microservice configuration issues.
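To show the mechanics, here is a minimal Python sketch of trace-context propagation using the W3C Trace Context `traceparent` header (`version-traceid-parentid-flags`). Each service continues the caller's trace ID, records its own span with start and end times, and passes its own span ID downstream as the new parent. The `Span` class and `start_span` helper are hypothetical; in practice an OpenTelemetry SDK typically handles propagation, header validation, and exporting spans to a tracing backend.

```python
import secrets
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    service: str
    trace_id: str             # 32 hex chars, shared by every span in one request
    span_id: str              # 16 hex chars, unique to this unit of work
    parent_id: Optional[str]  # span_id of the caller, None for the root span
    start: float = field(default_factory=time.time)  # wall-clock seconds
    end: Optional[float] = None

    def finish(self) -> None:
        self.end = time.time()

    def duration_ms(self) -> float:
        if self.end is None:
            raise RuntimeError("span not finished")
        return (self.end - self.start) * 1000

    def traceparent(self) -> str:
        # Header for outgoing calls: version 00, this span as parent, flags 01 = sampled.
        return f"00-{self.trace_id}-{self.span_id}-01"


def start_span(service: str, incoming: Optional[str] = None) -> Span:
    """Continue the trace from an incoming traceparent header, or start a new trace."""
    if incoming:
        # No validation here; a header with the wrong number of fields raises ValueError.
        _version, trace_id, parent_id, _flags = incoming.split("-")
        return Span(service, trace_id, secrets.token_hex(8), parent_id)
    return Span(service, secrets.token_hex(16), secrets.token_hex(8), None)


# The frontend receives a request and calls the checkout service.
root = start_span("frontend")
child = start_span("checkout", incoming=root.traceparent())
child.finish()
root.finish()
print(child.trace_id == root.trace_id, child.parent_id == root.span_id)  # True True
```

Because every span carries the shared trace ID and its parent's span ID, a tracing backend can rebuild the request's path and show which service added the most latency.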

Set Proper Resource Quota and Limits

When no resource requests or limits are set, containers can consume as much CPU and memory as their node has available. This flexibility can cause problems, such as some nodes becoming overloaded while others remain underutilized.

Setting requests and limits on each container, and resource quotas on each namespace, is crucial to avoid resource contention and ensure a fair distribution of resources. Monitor usage against these limits closely and enforce them strictly to prevent performance degradation and unexpected outages.
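Requests and limits are set per container under `resources`, while a ResourceQuota caps the totals for a namespace using keys such as `requests.cpu` and `limits.memory`. The Python sketch below mimics that quota arithmetic so you can see how the numbers add up before deploying. It supports only a subset of Kubernetes quantity suffixes (`m`, `k`/`M`/`G`/`T`, `Ki`/`Mi`/`Gi`/`Ti`), treats each pod as a single container that declares all four values, and uses hypothetical quota figures; the API server performs the authoritative check at admission time.

```python
from fractions import Fraction
from typing import Dict, List

# Subset of Kubernetes quantity suffixes (binary Ki..Ti, decimal k..T, milli m).
SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
    "m": Fraction(1, 1000),
}


def parse_quantity(q: str) -> Fraction:
    """Parse strings like "500m", "2", or "256Mi" into an exact number."""
    # Try two-character suffixes first so "Mi" is not mistaken for "M".
    for suffix in sorted(SUFFIXES, key=len, reverse=True):
        if q.endswith(suffix):
            return Fraction(q[: -len(suffix)]) * SUFFIXES[suffix]
    return Fraction(q)


Pod = Dict[str, Dict[str, str]]  # {"requests": {...}, "limits": {...}}, one container per pod


def check_quota(pods: List[Pod], hard: Dict[str, str]) -> List[str]:
    """Return the quota keys (e.g. "limits.memory") that the pods would exceed."""
    exceeded = []
    for key, limit in hard.items():
        kind, resource = key.split(".")  # "requests.cpu" -> ("requests", "cpu")
        used = sum((parse_quantity(p[kind][resource]) for p in pods), Fraction(0))
        if used > parse_quantity(limit):
            exceeded.append(key)
    return exceeded


# Hypothetical namespace quota and per-pod resources.
quota = {"requests.cpu": "2", "requests.memory": "4Gi", "limits.cpu": "4", "limits.memory": "8Gi"}
pod = {"requests": {"cpu": "500m", "memory": "1Gi"}, "limits": {"cpu": "1", "memory": "2Gi"}}

print(check_quota([pod] * 4, quota))  # []
print(check_quota([pod] * 5, quota))  # ['requests.cpu', 'requests.memory', 'limits.cpu', 'limits.memory']
```

Using `Fraction` instead of floats keeps values like `500m` exact, so four 0.5-core requests compare as exactly equal to a 2-core quota.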

Conclusion

Robust monitoring practices are highly recommended in Kubernetes environments. Monitoring not only facilitates the troubleshooting of microservices but also ensures proper resource allocation and optimization, thereby increasing the overall efficiency of the Kubernetes architecture.

By adhering to these best practices, including the incorporation of distributed tracing and comprehensive metrics, organizations can proactively address challenges associated with the dynamic and distributed nature of containerized systems. This approach not only enhances the resilience of the environment but also empowers teams to make informed decisions, optimize performance, and uphold the reliability of applications in the ever-evolving landscape of Kubernetes orchestration.
