Google Gemma AI has arrived, offering developers powerful yet compact language models that are openly available for innovation and customization. Built from the same research and technology behind Google’s Gemini models, Google Gemma AI continues the company’s commitment to releasing openly available AI models.

Let’s delve into Google Gemma AI, its variants, and the potential it offers.

What is Google Gemma AI?

Google Gemma AI is a suite of lightweight language models released with open weights. They are designed to be highly efficient, and Google reports that they outperform some significantly larger models on key benchmarks. Here’s the core proposition of Google Gemma AI:

- Accessibility: Google Gemma AI models can function within various environments, including laptops, workstations, and cloud platforms like Google Cloud

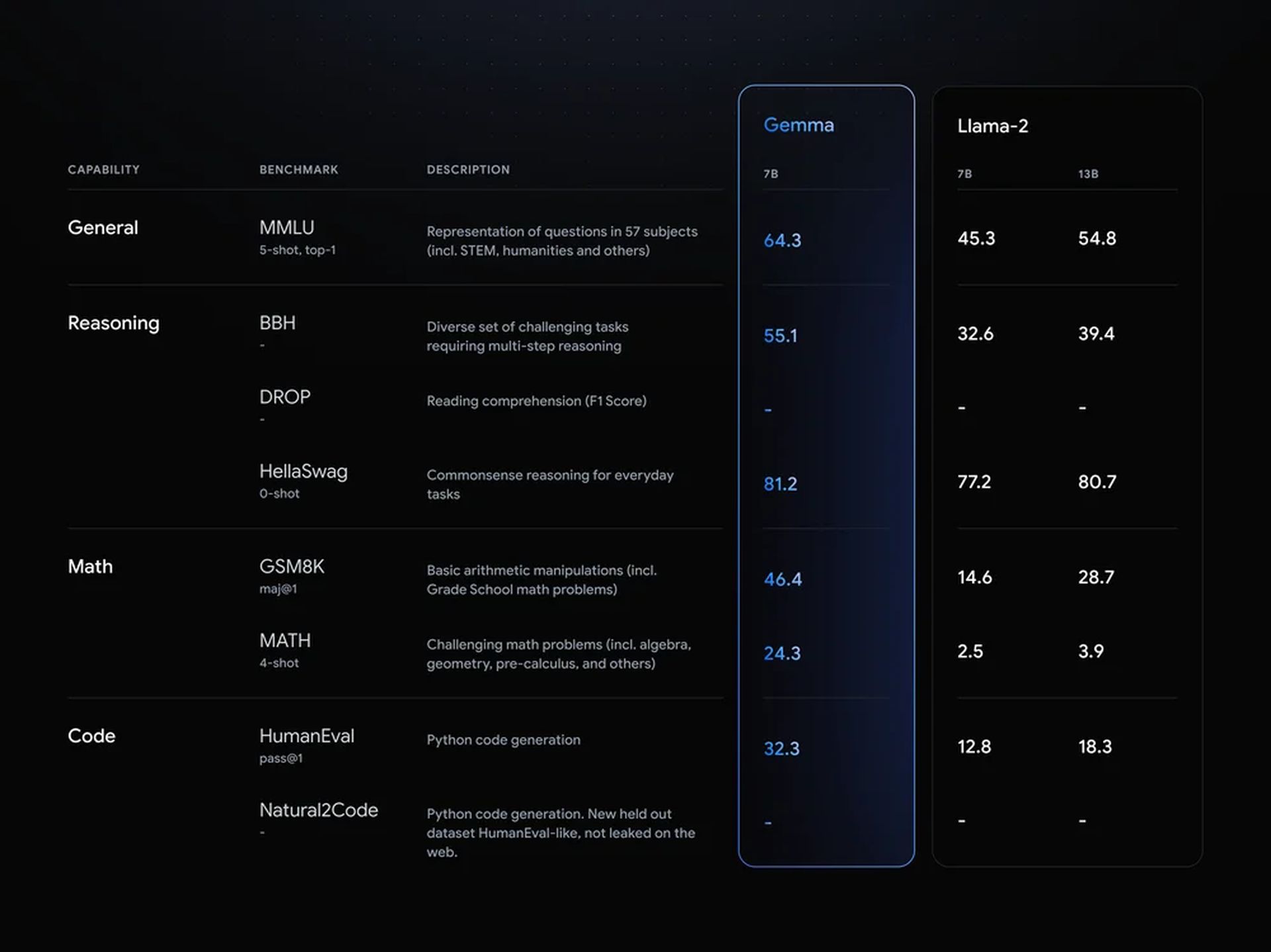

- Performance: These models demonstrate impressive capabilities across diverse natural language processing tasks

- Responsible AI: Google backs Gemma with its Responsible Generative AI Toolkit, promoting safe, ethical applications

Gemma comes in two primary sizes:

- Gemma 2B: This model offers 2 billion parameters, ensuring a lightweight footprint

- Gemma 7B: The larger variant has 7 billion parameters for more complex tasks
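To put the “lightweight footprint” in numbers, here is a short Python sketch that estimates how much memory the weights alone occupy at different numeric precisions. It assumes the nominal 2 billion and 7 billion parameter counts (the released checkpoints are somewhat larger once embeddings are counted) and ignores activations and the attention cache, so treat the results as lower bounds rather than hardware requirements.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumptions: nominal parameter counts (2B and 7B); real checkpoints are
# somewhat larger, and activations plus the attention cache need extra memory.

BYTES_PER_PARAM = {
    "float32": 4.0,
    "bfloat16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, dtype: str = "bfloat16") -> float:
    """Return the approximate weight size in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for name, params in [("Gemma 2B", 2e9), ("Gemma 7B", 7e9)]:
        sizes = ", ".join(
            f"{dtype}: {weight_memory_gb(params, dtype):.1f} GB"
            for dtype in BYTES_PER_PARAM
        )
        print(f"{name} -> {sizes}")
```

At bfloat16 this gives about 4 GB for Gemma 2B and 14 GB for Gemma 7B, which is one reason the 2B model is the more practical choice for laptops and edge hardware.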

Both Gemma 2B and Gemma 7B are available as:

- Pre-trained variants: Ideal for general language understanding

- Instruction-tuned variants: Optimized for following instructions and task completion

Gemma 2B: powering AI solutions on the go and beyond

Don’t let Gemma 2B’s compact size deceive you. This lightweight language model packs impressive capabilities that unlock novel AI applications across diverse fields.

Let’s explore some key areas where Gemma 2B shines.

Mobile and edge devices

Because Gemma 2B is small enough to run on local hardware, AI-powered features need not depend on a cloud connection. Imagine on-device language translation for travelers, intelligent assistants for smart home devices, or offline text summarization tools for students with limited internet access. Gemma 2B could make these scenarios possible by delivering capable AI within the constraints of resource-limited hardware.

Chatbots and conversational AI

Gemma 2B, particularly in its instruction-tuned variant, can hold realistic, contextually aware conversations. This could improve customer service chatbots, providing more nuanced and helpful interactions that go beyond simple FAQ responses. Gemma 2B could also power virtual companions for the elderly, engaging them in conversation to combat loneliness and provide mental stimulation.
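Instruction-tuned Gemma checkpoints expect each conversation turn to be wrapped in special markers. The sketch below formats a multi-turn history in the `<start_of_turn>`/`<end_of_turn>` layout shown on the Gemma model cards, using the `user` and `model` role names from that format. The `format_gemma_chat` helper and its `max_turns` cut-off (a crude way to keep long conversations inside the context window) are hypothetical; when working through a library tokenizer, its built-in chat template is the safer choice.

```python
# Format a conversation in the turn layout shown on the Gemma
# instruction-tuned model cards. Assumptions: only "user" and "model"
# roles exist, and max_turns is a hypothetical way to trim old history.

from typing import List, Tuple

Turn = Tuple[str, str]  # (role, text)


def format_gemma_chat(history: List[Turn], max_turns: int = 6) -> str:
    """Return a prompt that asks the model to write the next reply."""
    if max_turns < 1:
        raise ValueError("max_turns must be at least 1")
    parts = ["<bos>"]
    for role, text in history[-max_turns:]:  # keep only the newest turns
        if role not in ("user", "model"):
            raise ValueError(f"unsupported role: {role!r}")
        parts.append(f"<start_of_turn>{role}\n{text}<end_of_turn>\n")
    # Leave a model turn open so generation continues as the assistant.
    parts.append("<start_of_turn>model\n")
    return "".join(parts)


history = [
    ("user", "Hi, can you help me track my order?"),
    ("model", "Of course. What is your order number?"),
    ("user", "It is 12345."),
]
print(format_gemma_chat(history))
```

The returned string always ends with an open `<start_of_turn>model` line, which cues the model to write the assistant’s next reply while earlier turns supply the context.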

Summarization tasks

The ability to condense information into essential summaries is invaluable across many domains. Gemma 2B can generate concise abstracts of research papers, summarize news articles for quick consumption, or turn meeting transcripts into notes with the key takeaways highlighted. This saves time for students, researchers, and professionals alike.
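Documents longer than the model’s context window have to be split before summarization. One common approach is to summarize each chunk separately and then summarize the partial summaries; the sketch below covers the splitting step. The 8,192-token context length matches the first Gemma release, but the 0.75 words-per-token ratio is a rough heuristic for English text, not Gemma’s tokenizer, and `chunk_words` and `summary_prompt` are hypothetical helpers.

```python
# Split a long document into word-based chunks so each can be summarized
# separately. Assumptions: ~0.75 words per token is a rough heuristic, and
# half of an 8,192-token context is reserved for the chunk itself.

from typing import List


def chunk_words(text: str, max_words: int, overlap: int = 0) -> List[str]:
    """Split text into chunks of at most max_words words, with optional overlap."""
    if max_words <= 0 or not 0 <= overlap < max_words:
        raise ValueError("need max_words > 0 and 0 <= overlap < max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):  # last chunk reached the end
            break
    return chunks


def summary_prompt(chunk: str) -> str:
    """Hypothetical instruction wording for summarizing one chunk."""
    return f"Summarize the following text in three bullet points:\n\n{chunk}"


# Half of an 8,192-token context, converted to words at ~0.75 words/token.
MAX_WORDS_PER_CHUNK = int(8192 * 0.5 * 0.75)  # 3072 words

document = "word " * 10000  # stand-in for a long report
chunks = chunk_words(document, MAX_WORDS_PER_CHUNK, overlap=100)
prompts = [summary_prompt(c) for c in chunks]
print(len(prompts))  # 4
```

The small overlap keeps sentences that straddle a boundary visible in both neighbouring chunks; the per-chunk summaries can then be joined and summarized once more.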

These are just a few examples of the exciting possibilities that Gemma 2B presents. Its lightweight nature, coupled with impressive performance, opens doors to AI innovations in places where larger, more computationally demanding models simply wouldn’t be feasible.

Gemma 7B unlocks next-level AI applications

Where Gemma 2B excels in efficiency, Gemma 7B offers the power to tackle more complex and demanding AI scenarios. Its larger parameter count opens the door to the following applications.

Complex text generation

Gemma 7B has the capacity to generate more sophisticated and elaborate text. This ranges from creative storytelling, where it can help craft compelling narratives and characters, to technical report writing, where it can synthesize data-driven insights and present them in clear, structured formats. Imagine AI tools that assist authors or automatically generate detailed reports from raw datasets.

In-depth question answering

Gemma 7B’s greater capacity makes it better suited than Gemma 2B to complex question-answering systems. Think beyond simple search engine queries: Gemma 7B could help researchers extract precise insights from scientific literature, assist legal professionals in finding relevant case law, or provide customers with detailed product comparisons based on nuanced criteria.

Translation

High-quality language translation requires understanding context and nuance and accurately transferring meaning between languages. Gemma 7B’s larger capacity makes it better suited to these demands than the 2B model, although Gemma was trained primarily on English-language data, so translation quality should be evaluated for each language pair. Capable translation tools could transform how we communicate and access information across linguistic barriers.

The capabilities outlined above illustrate how Gemma 7B pushes the boundaries of language model applications. Its increased size and power open doors to scenarios where a streamlined, lightweight approach might not suffice, empowering new levels of creativity and insight extraction.

Getting started with Google Gemma models

Here’s a detailed breakdown of how you can get started with Google Gemma AI models, covering essential resources and approaches:

1. Choose Your Framework & Environment

- Flexibility is key: Google Gemma AI supports popular deep learning frameworks, including JAX, PyTorch, and TensorFlow through native Keras 3.0. You can build on your existing workflows and skill sets with minimal friction

- Cloud or local:

  - Google Cloud: Vertex AI and Google Kubernetes Engine (GKE) provide powerful cloud options, including one-click deployment, scalable resources, and optimization for Google’s TPUs

  - Workstations and laptops: Download Gemma models and run them directly on local machines with suitable hardware

- Quick start tools: Colab and Kaggle notebooks offer code examples and easy experimentation within browser-based environments

2. Select Your Model Variant

- Gemma 2B: The lightweight option when memory is limited and efficiency is the priority

- Gemma 7B: The larger model shines in complex text generation, nuanced question-answering, and demanding translation tasks

- Pre-trained vs instruction-tuned:

  - Pre-trained models provide general language understanding

  - Instruction-tuned models are optimized for following prompts and completing tasks
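To make the choice concrete, the sketch below maps each size and variant to the identifier you would pass to a loading function. The Hugging Face Hub repository IDs (such as `google/gemma-2b-it`) and KerasNLP preset names (such as `gemma_instruct_2b_en`) are, as far as we know, those published with the initial Gemma release; confirm them on the current model pages. `pick_variant` itself is a hypothetical helper.

```python
# Identifiers for the four Gemma variants. Assumption: these are the
# Hugging Face Hub repo IDs and KerasNLP preset names from the initial
# Gemma release; verify them on the current model pages.

VARIANTS = {
    "hf": {
        ("2b", False): "google/gemma-2b",
        ("2b", True): "google/gemma-2b-it",
        ("7b", False): "google/gemma-7b",
        ("7b", True): "google/gemma-7b-it",
    },
    "keras": {
        ("2b", False): "gemma_2b_en",
        ("2b", True): "gemma_instruct_2b_en",
        ("7b", False): "gemma_7b_en",
        ("7b", True): "gemma_instruct_7b_en",
    },
}


def pick_variant(size: str, instruction_tuned: bool, framework: str = "hf") -> str:
    """Hypothetical helper: return the identifier for a Gemma size and variant."""
    if framework not in VARIANTS:
        raise ValueError(f"unknown framework: {framework!r}")
    key = (size.lower(), instruction_tuned)
    if key not in VARIANTS[framework]:
        raise ValueError(f"unknown Gemma size: {size!r}")
    return VARIANTS[framework][key]


# Memory is tight and the app follows user instructions -> small, tuned model.
print(pick_variant("2b", instruction_tuned=True))  # google/gemma-2b-it
```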

3. Explore and Experiment

- Google AI Gemma Website: Find quickstart guides, code samples, and detailed documentation.

- Integrations:

  - Hugging Face Transformers: Leverage popular libraries and pre-built pipelines

  - NVIDIA NeMo and TensorRT-LLM: Optimize performance on NVIDIA GPUs

- Kaggle: Access Gemma models, community discussions, and examples

- Colab: Run ready-made notebooks to learn the basics
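For the Hugging Face Transformers route, a minimal sketch might look like the following. It assumes `transformers`, `torch`, and `accelerate` are installed, that you have accepted the Gemma license on the Hugging Face Hub and authenticated, and that the machine has enough memory for the chosen model. The `generate` wrapper is a hypothetical helper; `AutoTokenizer`, `AutoModelForCausalLM`, `apply_chat_template`, and `model.generate` are standard Transformers APIs.

```python
# Minimal sketch: one chat turn with an instruction-tuned Gemma model via
# Hugging Face Transformers. Assumptions: transformers, torch, and accelerate
# are installed, and the Gemma license has been accepted on the Hub.

DEFAULT_MODEL_ID = "google/gemma-2b-it"


def generate(prompt: str, model_id: str = DEFAULT_MODEL_ID,
             max_new_tokens: int = 128) -> str:
    """Send one user message to the model and return only the new text."""
    # Imported lazily so this module loads even without the libraries.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, torch_dtype=torch.bfloat16, device_map="auto"
    )
    messages = [{"role": "user", "content": prompt}]
    # The tokenizer's chat template inserts Gemma's turn markers.
    input_ids = tokenizer.apply_chat_template(
        messages, add_generation_prompt=True, return_tensors="pt"
    ).to(model.device)
    output = model.generate(input_ids, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens and decode only the generated continuation.
    new_tokens = output[0][input_ids.shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `print(generate("Explain in two sentences what a language model is."))` downloads the weights on first use and prints only the model’s reply. Loading the model inside the function keeps the example short; a real application would load it once and reuse it across requests.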

Join the Gemma Community

Google Gemma AI marks a significant step forward for the open-source AI community. With compact models, a focus on responsibility, and a broad toolkit, Gemma empowers developers and researchers to create innovative and ethical AI solutions.

To get started with Gemma, visit the Google Gemma AI page for resources, tutorials, and more. Stay connected for upcoming Gemma-focused events, new model variants, and opportunities to shape the future of this exciting technology!

Featured image credit: Google.


- Source: https://dataconomy.com/2024/02/22/what-is-google-gemma-ai-gemma-2b-and-gemma-7b/