Get to know Mixtral 8x7B by Mistral AI. It is a large language model that handles tasks such as language translation and code generation, and it can work with long inputs. Developers around the world are excited about its potential to streamline their projects and improve efficiency. With its open license and strong benchmark results, Mixtral 8x7B is quickly becoming a go-to model for AI development.

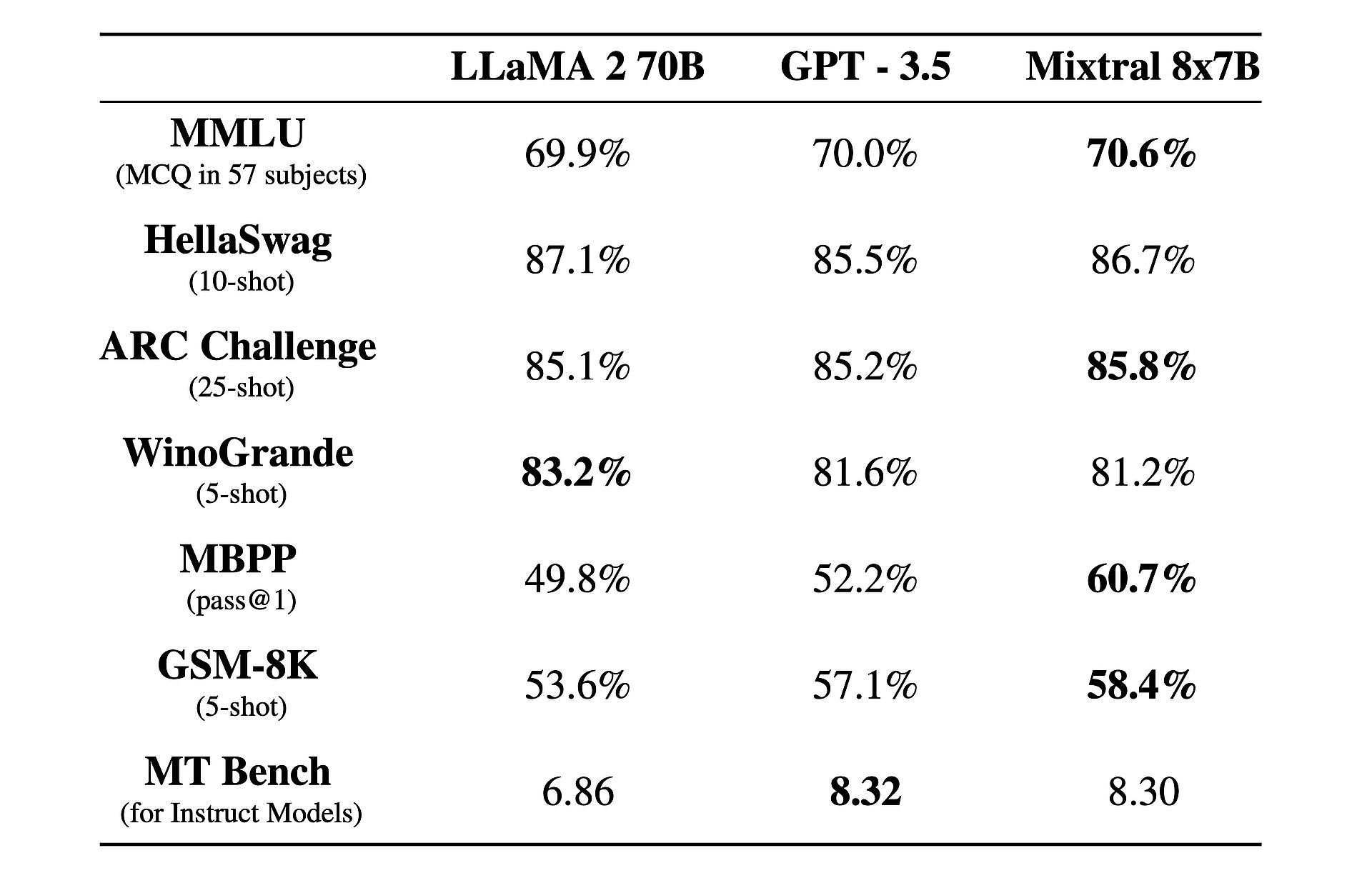

There is also an interesting fact about it: on many benchmarks, it outperforms both GPT-3.5 and Llama 2.

What is Mixtral 8x7B?

Mixtral 8x7B is an advanced artificial intelligence model developed by Mistral AI. It uses an architecture called sparse mixture-of-experts (SMoE), in which only part of the network runs for each token, so it can process large amounts of data efficiently. Despite its complexity, Mixtral is designed to be easy to use and adaptable to tasks like language translation and code generation. It compares well with other open models on both inference speed and benchmark accuracy, making it a valuable tool for developers. Plus, it’s available under the Apache 2.0 license, allowing anyone to use and modify it freely.

Want to learn more? At its core, Mixtral 8x7B is a decoder-only model in which each feedforward block chooses from eight distinct groups of parameters, referred to as “experts.” At every layer, a router network selects two of these experts for each token and combines their outputs, which improves efficiency and performance while keeping computational overhead low.
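To make the routing idea concrete, here is a minimal sketch of a sparse mixture-of-experts layer in plain Python. It is a toy illustration, not Mistral's implementation: the tiny dimension, the random weights, the single-layer ReLU experts, and the names `moe_layer` and `make_expert` are all assumptions made for the example. The choice of two experts per token follows Mistral's published description of the model.

```python
import math
import random

NUM_EXPERTS = 8  # eight experts per feedforward block, as described in the article
TOP_K = 2        # two experts per token, per Mistral's published description


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


def make_expert(dim, rng):
    """Toy expert: one linear layer plus ReLU (hypothetical stand-in for a real FFN)."""
    weights = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]
    return lambda x: [max(0.0, y) for y in matvec(weights, x)]


def moe_layer(token, router_weights, experts, top_k=TOP_K):
    """Route one token vector to its top_k experts and mix their outputs."""
    logits = matvec(router_weights, token)  # one router score per expert
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    gates = softmax([logits[i] for i in chosen])  # normalize over the chosen experts only
    output = [0.0] * len(token)
    for gate, idx in zip(gates, chosen):
        expert_out = experts[idx](token)  # only the chosen experts are evaluated
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen, gates


rng = random.Random(0)
DIM = 4  # toy hidden size, far smaller than a real model
experts = [make_expert(DIM, rng) for _ in range(NUM_EXPERTS)]
router_weights = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
token = [0.5, -1.0, 0.25, 2.0]

output, chosen, gates = moe_layer(token, router_weights, experts)
print("experts used:", chosen)
print("gate weights:", [round(g, 3) for g in gates])
```

The point of the sketch is that six of the eight experts are never evaluated for this token, which is where the compute savings come from.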

One of Mixtral’s key strengths lies in its adaptability and scalability. It can handle contexts of up to 32,000 tokens and supports multiple languages, including English, French, Italian, German, and Spanish, which lets developers tackle a wide range of tasks with ease and precision.

What truly sets Mixtral apart is its performance-to-cost ratio. Mixtral has 46.7 billion parameters in total but uses only a fraction of them, about 12.9 billion, for each token, resulting in faster inference and lower computational cost than a dense model of the same total size.
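The gap between total and per-token parameters can be checked with some back-of-the-envelope arithmetic. The sketch below assumes the hyperparameters from Mixtral's published model configuration (hidden size 4096, 32 layers, expert hidden size 14336, 32 query heads and 8 key/value heads of size 128, a 32,000-token vocabulary, and two active experts per token). None of these values appear in this article, so treat them as assumptions.

```python
# Assumed hyperparameters from Mixtral's published configuration (not from this article).
DIM = 4096           # model hidden size
N_LAYERS = 32        # transformer layers
FFN_HIDDEN = 14336   # hidden size inside each expert
N_HEADS = 32         # query heads
N_KV_HEADS = 8       # key/value heads (grouped-query attention)
HEAD_DIM = 128       # size of each attention head
VOCAB = 32000        # vocabulary size
N_EXPERTS = 8        # experts per layer
ACTIVE_EXPERTS = 2   # experts used per token


def layer_params(experts_counted):
    """Parameters in one layer when `experts_counted` experts are included."""
    q_and_out = 2 * DIM * (N_HEADS * HEAD_DIM)     # query and output projections
    k_and_v = 2 * DIM * (N_KV_HEADS * HEAD_DIM)    # key and value projections
    expert = 3 * DIM * FFN_HIDDEN                  # three weight matrices per expert
    router = DIM * N_EXPERTS                       # router scores every expert
    norms = 2 * DIM                                # two normalization layers
    return q_and_out + k_and_v + experts_counted * expert + router + norms


def model_params(experts_counted):
    """Whole-model count: layers plus input embedding, output head, final norm."""
    shared = 2 * VOCAB * DIM + DIM
    return N_LAYERS * layer_params(experts_counted) + shared


total = model_params(N_EXPERTS)
active = model_params(ACTIVE_EXPERTS)

print(f"total parameters:  {total / 1e9:.1f}B")
print(f"active per token:  {active / 1e9:.1f}B")
print(f"fraction active:   {active / total:.1%}")
```

Under these assumptions the totals round to 46.7 billion and 12.9 billion, so roughly a quarter of the weights take part in processing any single token. Note that all 46.7 billion parameters still have to be held in memory; the saving is in compute, not storage.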

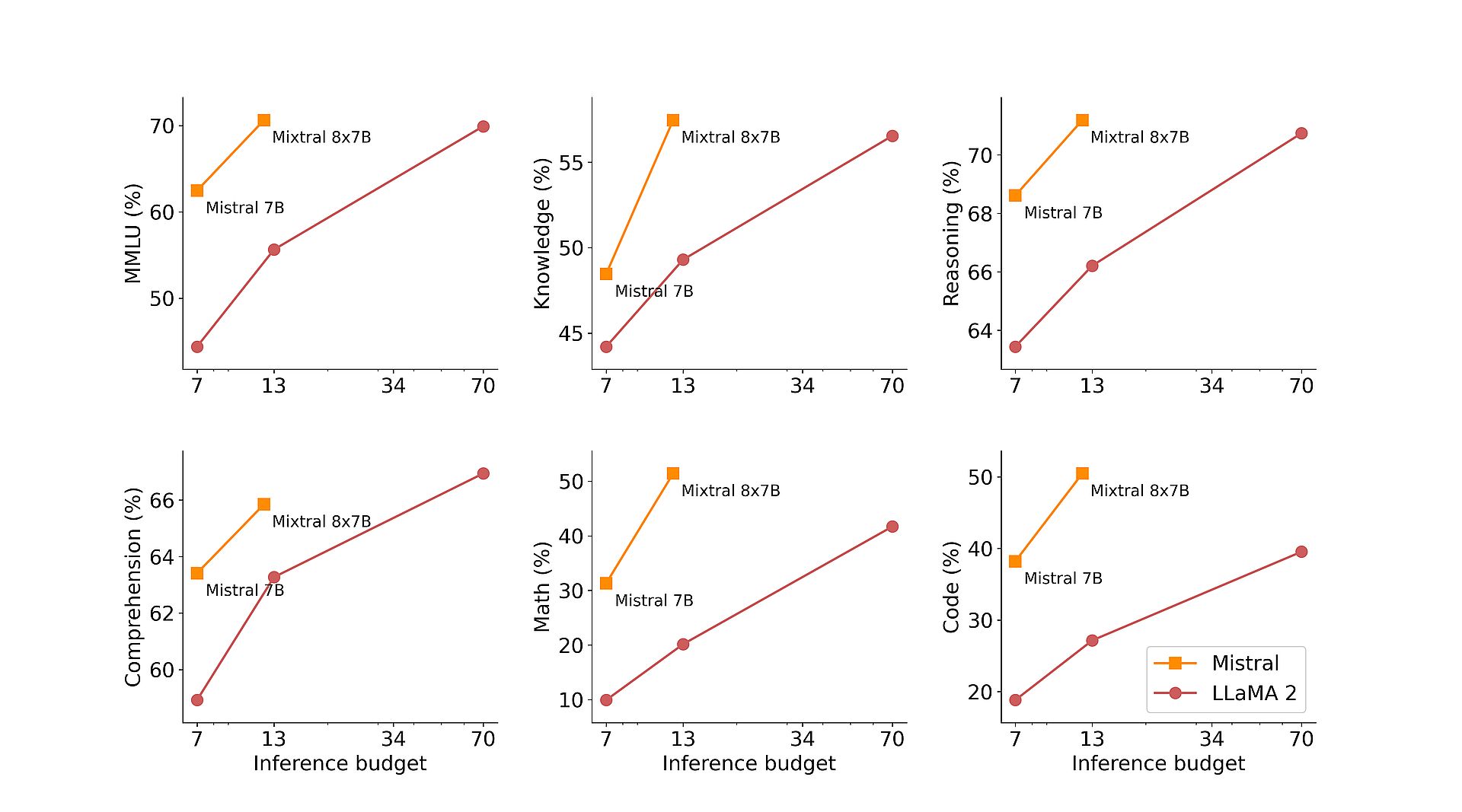

Moreover, Mixtral’s pre-training on extensive data extracted from the open web supports robustness and versatility in real-world applications. Whether it’s code generation, language translation, or sentiment analysis, Mixtral delivers strong results across various benchmarks, surpassing Llama 2 and even outperforming GPT-3.5 in many instances.

To further enhance its capabilities, Mistral AI has introduced Mixtral 8x7B Instruct, a variant optimized for instruction-following tasks through supervised fine-tuning and direct preference optimization (DPO). With a score of 8.30 on MT-Bench, Mixtral 8x7B Instruct ranks among the leading open-source models.

Beyond the model itself, Mistral AI is committed to broadening access to Mixtral and has contributed to the vLLM project so that the model can be integrated and deployed with open-source tools. This lets developers run Mixtral across a wide range of applications and platforms.

How to use Mixtral 8x7B

Mixtral 8x7B is accessible through Mistral’s endpoint mistral-small, which is in the beta testing phase. If you’re interested in gaining early access to all of Mistral’s generative and embedding endpoints, you can register now. By registering, you’ll be among the first to experience the full capabilities of Mixtral 8x7B and explore its innovative solutions.
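Once you have access, a request might look like the following minimal sketch, which uses only the Python standard library. The URL, the payload shape, and the response layout are assumptions based on Mistral's public chat completions API and may change while the endpoint is in beta. The `MISTRAL_API_KEY` environment variable and the helper names `build_chat_request` and `ask` are hypothetical choices for this example.

```python
import json
import os
import urllib.request

# Assumed endpoint URL for Mistral's chat completions API.
API_URL = "https://api.mistral.ai/v1/chat/completions"


def build_chat_request(prompt, api_key, model="mistral-small"):
    """Build (but do not send) a chat request for the given prompt."""
    payload = {
        "model": model,  # the endpoint name given in the article
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(prompt):
    """Send the prompt and return the reply text (requires network access and a key)."""
    api_key = os.environ["MISTRAL_API_KEY"]  # hypothetical variable name
    request = build_chat_request(prompt, api_key)
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    # Assumed response layout: first choice holds the assistant message.
    return body["choices"][0]["message"]["content"]
```

With a valid key set in the environment, calling `ask("Translate 'good morning' into French.")` would send a single user message and return the model's reply as a string.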

- Source: https://dataconomy.com/2024/02/21/mistral-ai-mixtral-8x7b/