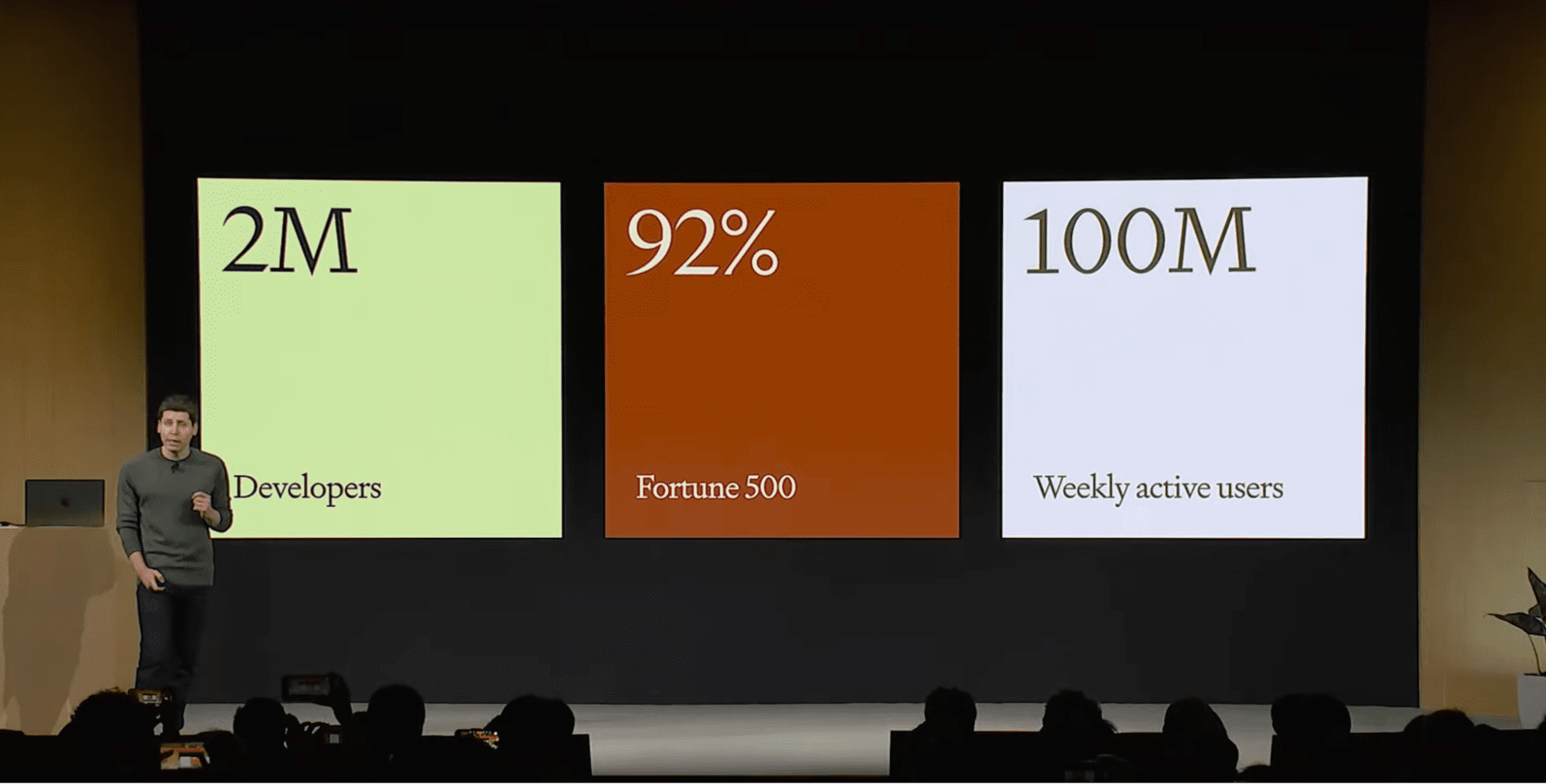

Sam Altman, OpenAI’s CEO, presents product usage numbers at the OpenAI Developer Day in November 2023. OpenAI considers three customer segments: developers, businesses, and general users. Link: https://www.youtube.com/watch?v=U9mJuUkhUzk&t=120s

At the OpenAI Developer Day in November 2023, Sam Altman, OpenAI’s CEO, showed a slide on product usage across three customer segments: developers, businesses, and general users.

In this article, we’re going to focus on the developer segment. We’ll cover what a generative AI developer does, what tools you need to master for this job, and how to get started.

While a few companies are dedicated to making generative AI products, most generative AI developers are based in other companies where this hasn’t been the traditional focus.

The reason for this is that generative AI has uses that apply to a wide range of businesses. Four common uses of generative AI apply to most businesses.

Chatbots


While chatbots have been mainstream for more than a decade, the majority of them have been awful. The most common first interaction with a chatbot is asking whether you can speak to a human.

Advances in generative AI, particularly large language models and vector databases, mean this is no longer the case. Now that chatbots can be pleasant for customers to use, every company is busy (or at least should be busy) scrambling to upgrade them.

The article Impact of generative AI on chatbots from MIT Technology Review has a good overview of how the world of chatbots is changing.

Semantic search

Search is used in a wide variety of places, from documents to shopping websites to the internet itself. Traditionally, search engines rely heavily on keyword matching, which means the engine has to be explicitly programmed to recognize synonyms.

For example, consider the case of trying to search through a marketing report to find the part on customer segmentation. You press CMD+F, type “segmentation”, and cycle through hits until you find something. Unfortunately, you miss the cases where the author of the doc wrote “classification” instead of “segmentation”.

Semantic search (searching on meaning) solves this synonym problem by automatically finding text with similar meanings. The idea is that you use an embedding model—a deep learning model that converts text to a numeric vector according to its meaning—and then finding related text is just simple linear algebra. Even better, many embedding models allow other data types like images, audio, and video as inputs, letting you provide different input data types or output data types for your search.
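As a minimal sketch of the "simple linear algebra" step, the snippet below ranks phrases by cosine similarity to a query phrase. The three-dimensional vectors are hand-picked for illustration and are not the output of a real embedding model, which would typically return vectors with hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings: made-up numbers, not produced by a real model.
embeddings = {
    "customer segmentation": [0.9, 0.1, 0.2],
    "customer classification": [0.8, 0.2, 0.3],
    "quarterly revenue": [0.1, 0.9, 0.1],
}

query = "customer segmentation"

# Rank every other phrase by how closely its vector points in the query's direction.
ranked = sorted(
    (text for text in embeddings if text != query),
    key=lambda text: cosine_similarity(embeddings[query], embeddings[text]),
    reverse=True,
)
print(ranked)
```

With these made-up vectors, "customer classification" ranks above "quarterly revenue", which is the behavior you would hope for from a real embedding model on the marketing report example.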

As with chatbots, many companies are trying to improve their website search capabilities by making use of semantic search.

This tutorial on Semantic Search from Zilliz, the maker of the Milvus vector database, provides a good description of the use cases.

Personalized content


Generative AI makes content creation cheaper. This makes it possible to create tailored content for different groups of users. Some common examples are changing the marketing copy or product descriptions depending on what you know about the user. You can also provide localizations to make content more relevant for different countries or demographics.

This article on How to achieve hyper-personalization using generative AI platforms from Salesforce Chief Digital Evangelist Vala Afshar covers the benefits and challenges of using generative AI to personalize content.

Natural language interfaces to software

As software gets more complicated and fully featured, the user interface gets bloated with menus, buttons, and tools that users can’t find or figure out how to use. Natural language interfaces, where users explain what they want in a sentence, can dramatically improve the usability of software. “Natural language interface” can refer to either spoken or typed ways of controlling software. The key is that you can use standard human-understandable sentences.

Business intelligence platforms are among the early adopters, with natural language interfaces helping business analysts write less data manipulation code. The potential applications are much broader, however: almost every feature-rich piece of software could benefit from a natural language interface.

This Forbes article on Embracing AI And Natural Language Interfaces from Gaurav Tewari, founder and Managing Partner of Omega Venture Partners, has an easy-to-read description of why natural language interfaces can help software usability.

Firstly, you need a generative AI model! For working with text, this means a large language model. GPT-4 is the current gold standard for performance, but there are many open-source alternatives like Llama 2, Falcon, and Mistral.

Secondly, you need a vector database. Pinecone is the most popular commercial vector database, and there are some open-source alternatives like Milvus, Weaviate, and Chroma.

In terms of programming language, the community seems to have settled around Python and JavaScript. JavaScript is important for web applications, and Python is suitable for everything else.

On top of these, it is helpful to use a generative AI application framework. The two main contenders are LangChain and LlamaIndex. LangChain is a broader framework that allows you to develop a wide range of generative AI applications, and LlamaIndex is more tightly focused on developing semantic search applications.

If you are making a search application, use LlamaIndex; otherwise, use LangChain.

It’s worth noting that the landscape is changing very fast, and many new AI startups are appearing every week, along with new tools. If you want to develop an application, expect to change parts of the software stack more frequently than you would with other applications.

In particular, new models are appearing regularly, and the best performer for your use case is likely to change. One common workflow is to start with hosted APIs (for example, the OpenAI API for the LLM and the Pinecone API for the vector database), since they are quick to develop with. As your user base grows, the cost of API calls can become burdensome, so at this point, you may want to switch to open-source tools (the Hugging Face ecosystem is a good choice here).

As with any new project, start simple! It’s best to learn one tool at a time and later figure out how to combine them.

The first step is to set up accounts for any tools you want to use. You’ll need developer accounts and API keys to make use of the platforms.

A Beginner’s Guide to The OpenAI API: Hands-On Tutorial and Best Practices contains step-by-step instructions on setting up an OpenAI developer account and creating an API key.

Likewise, Mastering Vector Databases with Pinecone Tutorial: A Comprehensive Guide contains the details for setting up Pinecone.

What is Hugging Face? The AI Community’s Open-Source Oasis explains how to get started with Hugging Face.

Learning LLMs

To get started using LLMs like GPT programmatically, the simplest thing is to learn how to call the API to send a prompt and receive a message.
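A minimal sketch of a single prompt-and-response exchange is shown below. It assumes the `openai` Python package (version 1.0 or later) is installed and that an API key is available in the `OPENAI_API_KEY` environment variable. The helper names `build_messages` and `ask`, the system message, and the `"gpt-4"` model name are illustrative choices, not something prescribed by the API.

```python
def build_messages(prompt, system="You are a helpful assistant."):
    """Build the list of role/content messages that chat-style APIs expect."""
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": prompt},
    ]

def ask(prompt, model="gpt-4"):
    """Send one prompt and return the reply text.

    Needs network access, the openai package, and an API key.
    """
    # Imported here so build_messages can be used without the package installed.
    from openai import OpenAI

    client = OpenAI()  # reads the OPENAI_API_KEY environment variable
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(prompt),
    )
    return response.choices[0].message.content

# Building the request payload needs no network access.
messages = build_messages("Summarize semantic search in one sentence.")
print(messages)
```

Calling `ask("Summarize semantic search in one sentence.")` would send the request and return the model's reply as a string.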

While many tasks can be achieved using a single exchange back and forth with the LLM, use cases like chatbots require a long conversation. OpenAI recently announced a “threads” feature as part of their Assistants API, which you can learn about in the OpenAI Assistants API Tutorial.

This isn’t supported by every LLM, so you may also need to learn how to manually manage the state of the conversation. For example, you need to decide which of the previous messages in the conversation are still relevant to the current conversation.
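One simple way to manage conversation state yourself is to keep the system message plus only the most recent messages. The sketch below shows that heuristic. The `trim_history` function and its `max_messages` parameter are hypothetical names, and other strategies (such as summarizing older turns) may suit your use case better.

```python
def trim_history(messages, max_messages=6):
    """Keep any system messages plus the most recent non-system messages."""
    system = [m for m in messages if m["role"] == "system"]
    others = [m for m in messages if m["role"] != "system"]
    # Guard against max_messages == 0, because others[-0:] would return everything.
    recent = others[-max_messages:] if max_messages > 0 else []
    return system + recent

# Simulate a conversation with five question/answer pairs.
history = [{"role": "system", "content": "You answer questions about our product."}]
for i in range(5):
    history.append({"role": "user", "content": f"question {i}"})
    history.append({"role": "assistant", "content": f"answer {i}"})

trimmed = trim_history(history, max_messages=4)
print([m["content"] for m in trimmed])
```

The trimmed list is what you would send as the `messages` for the next request, so the model sees its instructions and the latest turns without the full backlog.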

Beyond this, there’s no need to stop at text. You can try working with other media; for example, transcribing audio (speech to text) or generating images from text.

Learning vector databases

The simplest use case of vector databases is semantic search. Here, you use an embedding model (see Introduction to Text Embeddings with the OpenAI API) that converts the text (or other input) into a numeric vector that represents its meaning.

You then insert your embedded data (the numeric vectors) into the vector database. Searching just means embedding a search query with the same model and asking which entries in the database are closest to it.

For example, you could take some FAQs on one of your company’s products, embed them, and upload them into a vector database. Then, you ask a question about the product, and the database returns the closest matches, along with the original text stored next to each matching vector.
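The embed, insert, and search flow can be sketched with a toy in-memory store. Everything here is a stand-in: `ToyVectorStore` is not a real vector database client, and `toy_embed` simply counts a few keywords, so unlike a real embedding model it does not capture synonyms. The FAQ entries are invented for illustration.

```python
import math
import re

def cosine(a, b):
    """Cosine similarity, returning 0.0 when either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

class ToyVectorStore:
    """In-memory stand-in for a vector database (illustration only)."""

    def __init__(self, embed):
        self.embed = embed   # function mapping text to a list of floats
        self.entries = []    # (vector, original text) pairs

    def add(self, text):
        # Store the original text next to its vector so it can be returned later.
        self.entries.append((self.embed(text), text))

    def search(self, query, top_k=1):
        q = self.embed(query)
        scored = sorted(self.entries, key=lambda e: cosine(q, e[0]), reverse=True)
        return [text for _, text in scored[:top_k]]

VOCAB = ["refunds", "password", "shipping", "reset", "days"]

def toy_embed(text):
    """Hypothetical embedding: keyword counts. A real model encodes meaning."""
    words = re.findall(r"[a-z]+", text.lower())
    return [float(words.count(term)) for term in VOCAB]

store = ToyVectorStore(toy_embed)
store.add("To reset your password, click 'Forgot password' on the login page.")
store.add("Refunds are issued within 14 days of a return.")
store.add("Standard shipping takes 3 to 5 days.")

print(store.search("How do I reset my password?"))
```

With a hosted vector database, `add` and `search` would be replaced by that service's own insert and query calls, and `toy_embed` by a call to an embedding model.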

Combining LLMs and vector databases

You may find that directly returning the text entry from the vector database isn’t enough. Often, you want the text to be processed in a way that answers the query more naturally.

The solution to this is a technique known as retrieval augmented generation (RAG). This means that after you retrieve your text from the vector database, you write a prompt for an LLM, then include the retrieved text in your prompt (you augment the prompt with the retrieved text). Then, you ask the LLM to write a human-readable answer.

In the example of answering user questions from FAQs, you’d write a prompt with placeholders, like the following.

"""

Please answer the user's question about {product}.

---

The user's question is: {query}

---

The answer can be found in the following text: {retrieved_faq}

"""
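Filling in a template like this one can be sketched with Python's built-in `str.format`. The product name, question, and FAQ text below are invented for illustration, and `build_rag_prompt` is a hypothetical helper name.

```python
RAG_TEMPLATE = """Please answer the user's question about {product}.
---
The user's question is: {query}
---
The answer can be found in the following text: {retrieved_faq}
"""

def build_rag_prompt(product, query, retrieved_faq):
    """Augment the prompt with text retrieved from the vector database."""
    return RAG_TEMPLATE.format(
        product=product,
        query=query,
        retrieved_faq=retrieved_faq,
    )

prompt = build_rag_prompt(
    product="Acme Notes",  # hypothetical product name
    query="How do I reset my password?",
    retrieved_faq="To reset your password, click 'Forgot password' on the login page.",
)
print(prompt)
```

The resulting string is what you would send to the LLM as the user message, so the model answers from the retrieved text rather than from memory alone.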

The final step is to combine your RAG skills with the ability to manage message threads to hold a longer conversation. Voila! You have a chatbot!

DataCamp has a series of nine code-alongs to teach you to become a generative AI developer. You need basic Python skills to get started, but all the AI concepts are taught from scratch.

The series is taught by top instructors from Microsoft, Pinecone, Imperial College London, and Fidelity (and me!).

You’ll learn about all the topics covered in this article, with six code-alongs focused on the commercial stack of the OpenAI API, the Pinecone API, and LangChain. The other three tutorials are focused on Hugging Face models.

By the end of the series, you’ll be able to create a chatbot and build NLP and computer vision applications.

Richie Cotton is a Data Evangelist at DataCamp. He is the host of the DataFramed podcast, he’s written 2 books on R programming, and created 10 DataCamp courses on data science that have been taken by over 700k learners.


- Source: https://www.kdnuggets.com/4-steps-to-become-a-generative-ai-developer?utm_source=rss&utm_medium=rss&utm_campaign=4-steps-to-become-a-generative-ai-developer