Image by Author | Midjourney & Canva

Discussions on the ethical and responsible development of AI have gained significant traction in recent years, and rightly so. They aim to address myriad risks, including bias, misinformation, and unfairness.

While some of these challenges are not entirely new, the surge in demand for AI applications has certainly amplified them. Data privacy, a persistent issue, has gained increased importance with the emergence of Generative AI.

This statement from Halsey Burgund, a fellow in the MIT Open Documentary Lab, highlights the intensity of the situation: “One should think of everything one puts out on the internet freely as potential training data for somebody to do something with.”

Changing times call for changing measures. So, let’s understand the repercussions and learn how to handle the risks stemming from data privacy.

Time to Raise the Guards

Every company that handles user data, whether by collecting and storing it, manipulating it, or processing it to build models, must take care of varied data aspects, such as:

- Where is data coming from and where is it going?

- How is it manipulated?

- Who is using it and how?

In short, it is crucial to note how and with whom data is exchanged.

Every user who shares their data and consents to its use must be mindful of what information they are comfortable sharing. For example, users who want personalized recommendations need to be comfortable sharing the data that powers them.

GDPR is the Gold Standard!!!

Managing data becomes high stakes when it concerns PII, i.e., Personally Identifiable Information. As per the US Department of Labor, it largely includes information that directly identifies an individual, such as name, address, any identifying number or code, telephone number, email address, etc. A more nuanced definition and guidance on PII is available here.

To safeguard individuals’ data, the European Union enacted the General Data Protection Regulation (GDPR), setting strict accountability standards for companies that store and collect data on EU citizens.

Development Is Faster Than Regulation

It is empirically evident that technological innovations and breakthroughs develop far faster than the authorities can foresee the associated concerns and regulate them in time.

So, what would one do till regulation catches up with the fast-paced developments? Let’s find out.

Self-regulation

One way to address this gap is to build internal governance measures, much like corporate governance and data governance. It is equivalent to taking ownership of your models to the best of your knowledge, combined with known industry standards and best practices.

Such measures of self-regulation are a very strong indicator of high standards of integrity and customer-centricity, which can become a differentiator in this highly competitive world. Organizations adopting the charter of self-regulation can wear it as a badge of honor and gain customers’ trust and loyalty, which is a big feat given the low switching costs for users among the plethora of options available.

One aspect of building internal AI governance measures is that it keeps the organizations on the path of a responsible AI framework, so they are prepared for easy adoption when the legal regulations are put in place.

Rules must be the same for everyone

Setting a precedent is good in theory. Practically speaking, no single organization is fully capable of foreseeing every risk and safeguarding itself.

Another argument against self-regulation is that everyone should adhere to the same rules. No one would wish to hinder their own business growth by over-regulating themselves in anticipation of upcoming regulation.

The Other Side of Privacy

Many actors can play their role in upholding high privacy standards, such as organizations and their employees. However, the users have an equally important role to play – it is time to raise your guard and develop a lens of awareness. Let’s discuss them in detail below:

Role of organizations and employees

Organizations have created responsibility frameworks to sensitize their teams and create awareness of the right ways to prompt a model. In sectors like healthcare and finance, any sensitive information shared through input prompts is also a breach of privacy, this time caused unknowingly by employees rather than by the model developers.
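One way to support such awareness is to strip obvious identifiers from a prompt before it leaves the organization. The following is only a minimal sketch under stated assumptions: the helper name `redact_prompt` and the two regular expressions are hypothetical, chosen for illustration, and cover just email addresses and phone-like numbers. Real PII detection needs far broader coverage (names, addresses, identifying codes) and is not a substitute for policy and training.

```python
import re

# Hypothetical, deliberately simple patterns for illustration only.
# They catch common email and phone formats, not every form of PII.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_prompt(prompt: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    redacted = EMAIL_RE.sub("[EMAIL]", prompt)
    redacted = PHONE_RE.sub("[PHONE]", redacted)
    return redacted


if __name__ == "__main__":
    raw = "Contact jane.doe@example.com or +1 415-555-0100 about the claim."
    print(redact_prompt(raw))
    # prints: Contact [EMAIL] or [PHONE] about the claim.
```

Placeholders are used instead of deleting the matches so that the prompt still reads naturally to the model while the identifying values stay inside the organization.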

Role of users

Essentially, we cannot expect privacy if we are feeding such data into these models ourselves.

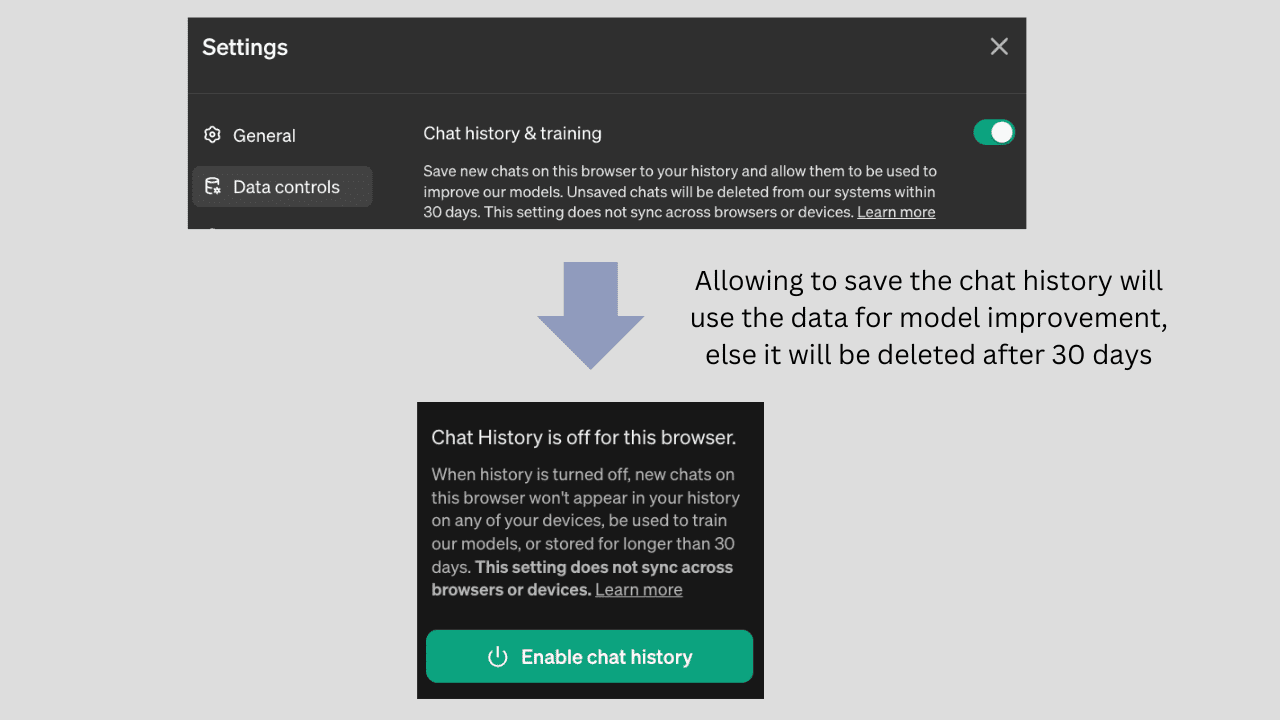

Image by Author

Most foundation models (similar to the example shown in the image above) state that chat history might be used to improve the model, so users must check the settings carefully and grant only the access that protects their data privacy.

Scale of AI

Users must visit and modify the consent controls in each browser on each device to stop such breaches. However, now think of large models that scan data across almost all of the internet, which effectively includes everybody's.

That scale becomes a problem!!!

The very thing that gives large language models their advantage, access to training data several orders of magnitude larger than that of traditional models, is the same scale that raises massive privacy concerns.

Deepfakes – A Disguised Form of Privacy Breach

Recently, an incident surfaced in which a company executive directed an employee to make a multi-million dollar transaction to a certain account. Being skeptical, the employee suggested arranging a call to discuss it, after which he made the transaction, only to learn later that everyone else on the call was a deepfake.

For the uninitiated, the Government Accountability Office defines a deepfake as “a video, photo, or audio recording that seems real but has been manipulated with AI. The underlying technology can replace faces, manipulate facial expressions, synthesize faces, and synthesize speech. Deepfakes can depict someone appearing to say or do something that they never said or did.”

Viewed this way, deepfakes are also a form of privacy breach equivalent to identity theft, where bad actors pretend to be someone they are not.

With such stolen identities, they can drive decisions and actions that would otherwise not have taken place.

This serves as a crucial reminder that bad actors, i.e., attackers, are often way ahead of the good actors on defense. Good actors are still scrambling to control the damage first while also putting robust measures in place to prevent future mishaps.

Vidhi Chugh is an AI strategist and a digital transformation leader working at the intersection of product, sciences, and engineering to build scalable machine learning systems. She is an award-winning innovation leader, an author, and an international speaker. She is on a mission to democratize machine learning and break the jargon for everyone to be a part of this transformation.

- Source: https://www.kdnuggets.com/understanding-data-privacy-in-the-age-of-ai?utm_source=rss&utm_medium=rss&utm_campaign=understanding-data-privacy-in-the-age-of-ai