Introduction

The world of 3D generation has taken a major step forward with Dual3D, a new framework that transforms text descriptions into high-quality 3D assets in under a minute. Built on a dual-mode multi-view latent diffusion model, Dual3D sets a new benchmark for text-to-3D creation. This article explains how Dual3D was developed, how it works, and how it can be applied to 3D model generation across industries.

Significance and Uses of Text-to-3D Generation

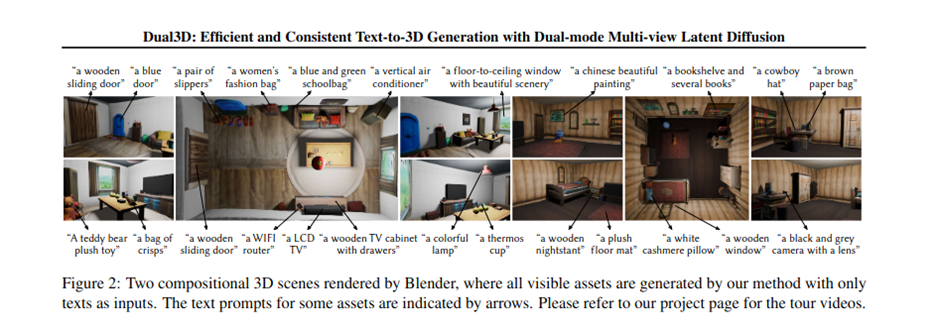

Text-to-3D generation is a significant development in computer vision and graphics, with uses in robotics, virtual reality (VR), augmented reality (AR), and gaming. Game developers, for example, can generate intricate 3D scenes from textual descriptions. In robotics, 3D models generated from text can help robots better understand and interact with their surroundings. In VR and AR, users get more vivid and engaging virtual worlds. Dual3D’s fast and dependable text-to-3D conversion could transform all of these areas.

Overview of Dual3D

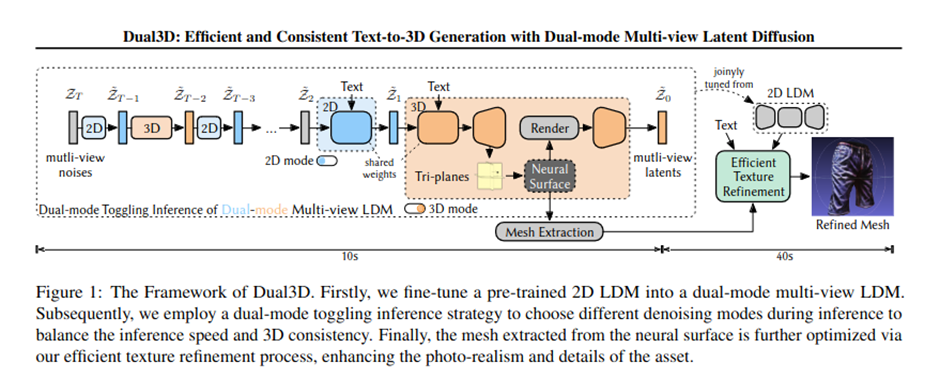

Dual3D is a novel framework designed to convert text descriptions into 3D models quickly and consistently. Its key innovation is a dual-mode multi-view latent diffusion model that operates in two modes: 2D and 3D. The 2D mode efficiently denoises noisy multi-view latents, while the 3D mode performs rendering-based denoising on a neural surface to enforce 3D consistency. By combining the two modes, Dual3D produces high-quality 3D assets with remarkable speed and accuracy.

Development and Evolution

The concept of Dual3D stemmed from the need for a fast, efficient, and consistent text-to-3D generation framework. Previous methods often struggled with low success rates and inconsistent quality because 2D diffusion models lack 3D priors. The creators of Dual3D set out to overcome these challenges with a dual-mode approach that combines the efficiency of 2D latent denoising with the consistency of 3D rendering-based denoising.

Key Milestones in Development

The development of Dual3D marked several significant milestones. Initially, the team focused on fine-tuning a pre-trained text-to-image latent diffusion model. This step allowed them to avoid the high costs associated with training from scratch. They then introduced the dual-mode toggling inference strategy. This strategy uses only a fraction of the denoising steps in 3D mode, reducing generation time without compromising quality. The addition of an efficient texture refinement process further enhanced the visual fidelity of the generated 3D assets. Extensive experiments validated Dual3D’s state-of-the-art performance, proving its capability to generate high-quality 3D assets rapidly.

Taking Dual3D from an idea to a fully functional framework reflects the team’s commitment to advancing text-to-3D generation. By addressing the drawbacks of earlier models with new solutions, they have raised the bar for the industry.

Dual3D Technology and Its Advantages

- Dual-mode Operation: Uses 2D and 3D modes for efficient denoising and rendering-based consistency.

- Speed: Completes 3D asset generation in just 50 seconds on an NVIDIA RTX 3090 GPU.

- Quality: Ensures 3D consistency, solving issues like incomplete geometry and blurry textures.

- Cost-effective: Uses pre-trained models to avoid costly training from scratch.

- Accessibility: More accessible and scalable due to efficient processing and lower training costs.

Dual3D’s Unique Features

Dual3D marks a leap ahead in 3D model generation. Here are some of its unique features and notable advancements.

Dual-mode Toggling Inference Strategy

One of Dual3D’s standout features is its dual-mode toggling inference strategy. This innovative approach toggles between the 2D and 3D modes during the denoising process. By using only 1/10 of the denoising steps in 3D mode, the model dramatically reduces the time required for 3D asset generation without sacrificing quality. This strategy allows Dual3D to generate a 3D asset in just 10 seconds of denoising time, a significant improvement over traditional methods.
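
The toggling idea can be illustrated with a small scheduling sketch. It assumes, purely for illustration, that 3D-mode steps are spaced evenly across the sampling trajectory and that the final step always runs in 3D mode so the output is a neural surface; the function `toggling_schedule` and its parameters are hypothetical and are not taken from Dual3D’s code.

```python
def toggling_schedule(num_steps: int, ratio_3d: float = 0.1) -> list[str]:
    """Return a per-step list of "2d"/"3d" modes for a denoising loop.

    Hypothetical scheme: 3D mode runs on evenly spaced steps so that roughly
    `ratio_3d` of all steps are 3D, counted back from the last step so the
    final step is always 3D.
    """
    if num_steps <= 0:
        raise ValueError("num_steps must be positive")
    if not 0 < ratio_3d <= 1:
        raise ValueError("ratio_3d must be in (0, 1]")
    period = max(1, round(1 / ratio_3d))
    modes = []
    for i in range(num_steps):
        steps_from_end = num_steps - 1 - i
        # Expensive rendering-based 3D step every `period` steps, else cheap 2D step.
        modes.append("3d" if steps_from_end % period == 0 else "2d")
    return modes


modes = toggling_schedule(50)
print(modes.count("3d"), modes.count("2d"))  # 5 45
```

With a hypothetical 50-step sampler and a ratio of 1/10, only 5 steps would invoke the costly rendering-based 3D denoiser, while the other 45 use the cheaper 2D latent denoiser. That imbalance is where the time savings come from.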

Efficient Texture Refinement Process

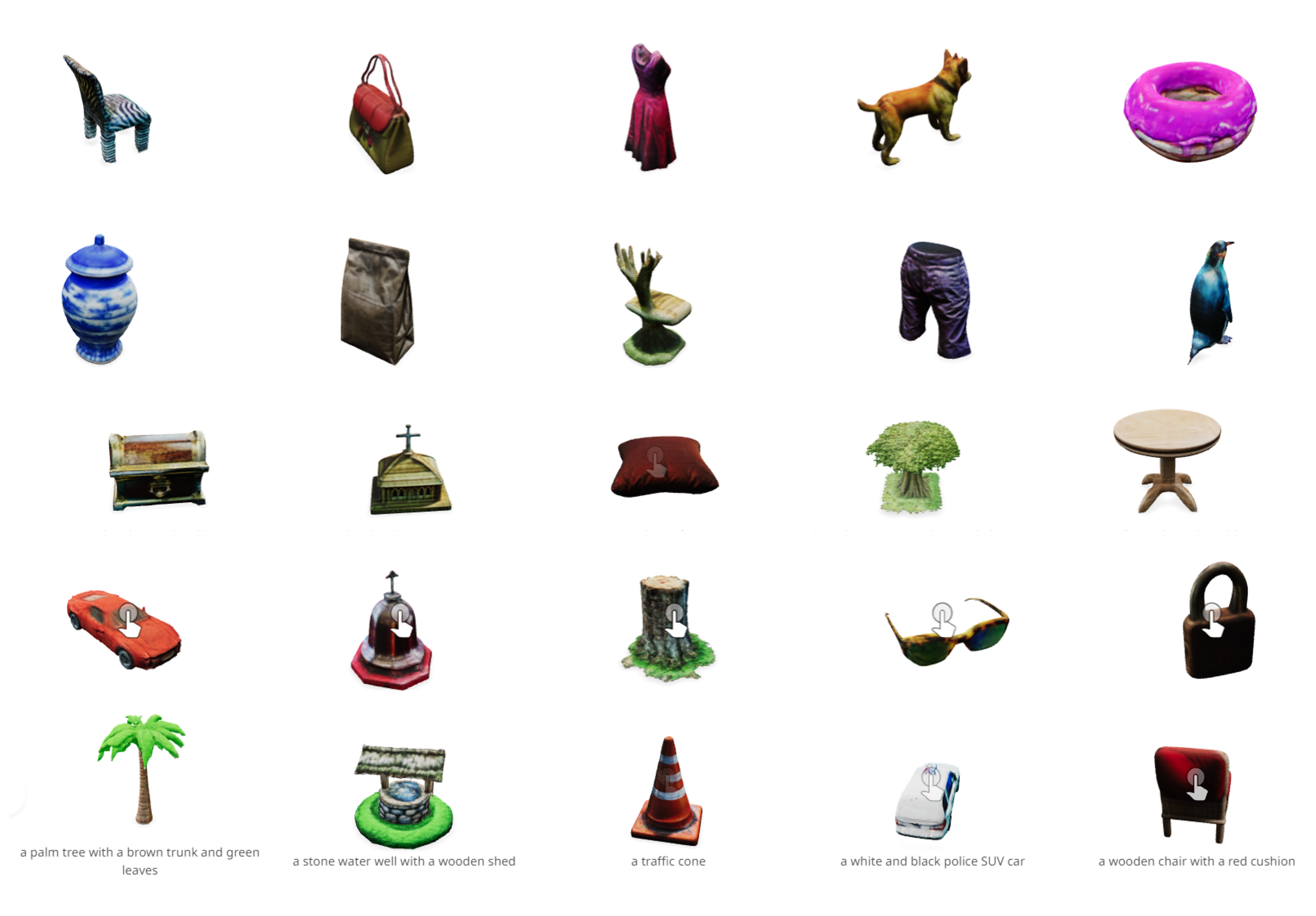

Dual3D also includes an efficient texture refinement process that enhances the visual quality of the generated 3D assets. During the denoising phase, the model addresses style differences between the synthetic multi-view datasets and real-world textures. The refinement process then optimizes the texture map of the mesh extracted from the 3D neural surface, producing realistic, detailed 3D assets with a high degree of photorealism.

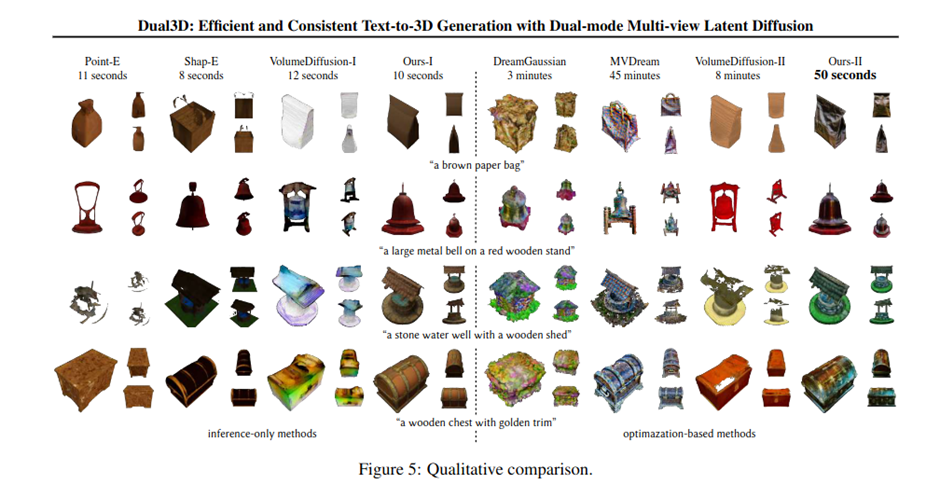

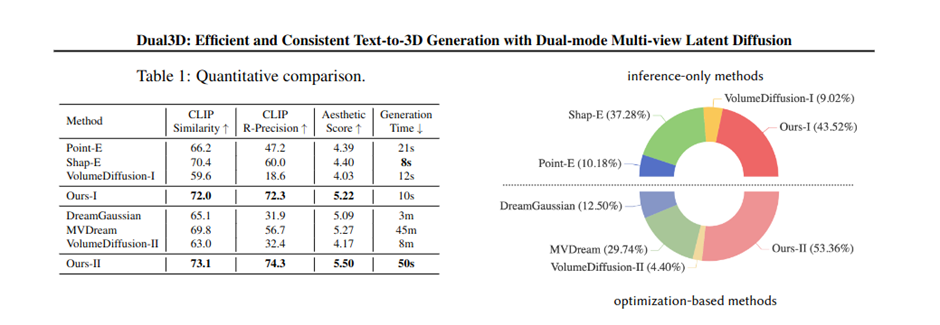

Comparison with Other Text-to-3D Models

When compared to other text-to-3D generation models, Dual3D exhibits superior performance in several areas. Models like DreamFusion and MVDream often struggle with the multi-face Janus problem, resulting in lower success rates and inconsistent quality. In contrast, Dual3D’s dual-mode approach ensures robust 3D consistency and high-quality output. Additionally, while methods like DMV3D require full-resolution rendering at every denoising step, Dual3D’s toggling inference strategy and fine-tuning of a pre-trained model significantly cut processing time and computational cost. These features make Dual3D a highly efficient and reliable solution for text-to-3D generation, setting a new standard in the field.

Technical Architecture

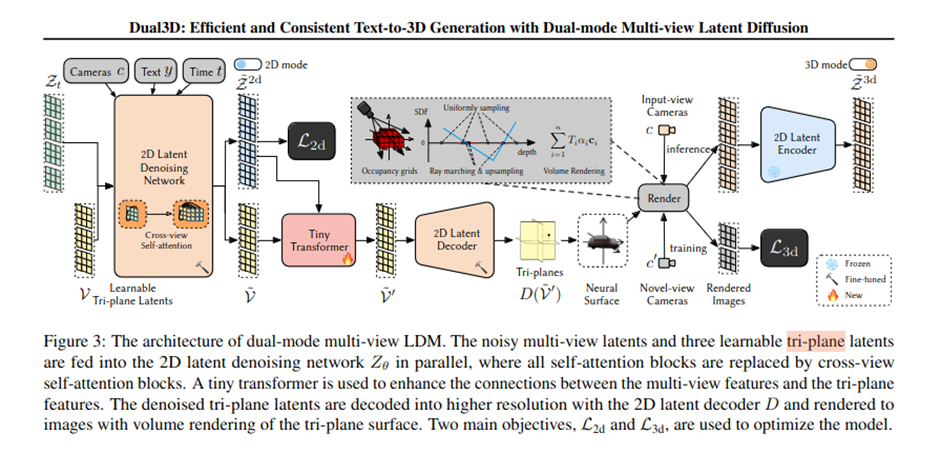

The Dual3D framework is designed to transform text descriptions into high-quality 3D assets efficiently. At its core is a dual-mode multi-view latent diffusion model, which operates in both 2D and 3D modes and draws on the strengths of each to achieve fast, consistent 3D generation. The framework consists of shared modules fine-tuned from a pre-trained text-to-image latent diffusion model, enabling efficient multi-view latent denoising and high-quality 3D surface generation.

What Are the 2D and 3D Modes of Dual3D?

In 2D mode, the framework employs a single latent denoising network to process noisy multi-view latents. This model is highly efficient and leverages pre-trained text-to-image diffusion models. The primary task in this mode is to denoise the latents, producing clear and consistent 2D images from multiple viewpoints.
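
One common way to let a single 2D denoising network see all views at once, used by multi-view diffusion models such as MVDream, is to flatten the tokens of every view into one sequence before self-attention. The article does not say whether Dual3D’s 2D mode works exactly this way, so treat the NumPy sketch below as an assumption; the projection matrices `w_q`, `w_k`, and `w_v` are random placeholders for learned weights, and the shapes are illustrative.

```python
import numpy as np


def multiview_self_attention(latents, w_q, w_k, w_v):
    """Single-head self-attention over the tokens of all views jointly.

    latents: (V, C, H, W) feature maps for V views of the same object.
    w_q, w_k, w_v: (C, C) projection matrices (placeholders for learned weights).
    Returns an array with the same shape as `latents`.
    """
    v, c, h, w = latents.shape
    # (V, C, H, W) -> (V*H*W, C): one token per spatial position per view.
    tokens = latents.transpose(0, 2, 3, 1).reshape(v * h * w, c)
    q, k, val = tokens @ w_q, tokens @ w_k, tokens @ w_v
    # Every token attends to every token in every view.
    scores = q @ k.T / np.sqrt(c)
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    out = weights @ val  # (V*H*W, C)
    # Restore the per-view (V, C, H, W) layout.
    return out.reshape(v, h, w, c).transpose(0, 3, 1, 2)


rng = np.random.default_rng(0)
latents = rng.standard_normal((4, 8, 4, 4))  # 4 views, 8 channels, 4x4 latents
w_q, w_k, w_v = (rng.standard_normal((8, 8)) * 0.1 for _ in range(3))
out = multiview_self_attention(latents, w_q, w_k, w_v)
print(out.shape)  # (4, 8, 4, 4)
```

Because tokens from one view attend to tokens from every other view, changing a single view changes the denoised features of the rest. This coupling is what lets a network built from 2D layers keep its views consistent.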

In 3D mode, the framework generates a tri-plane neural surface, which provides the basis for consistent rendering-based denoising. This mode ensures that the generated 3D asset maintains high fidelity and geometric accuracy. The tri-plane representation is treated as three special latents, and the network synchronizes the denoising process across these latents to produce a noise-free 3D neural surface.
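
A tri-plane stores 3D features on three axis-aligned 2D feature planes (XY, XZ, and YZ). To read the feature at a 3D point, the point is projected onto each plane, each plane is sampled bilinearly, and the three results are combined. The sketch below sums them, as EG3D-style tri-planes do; the article does not specify Dual3D’s aggregation rule or the small decoder that would map this feature to a signed distance and color, so those details are assumptions.

```python
import numpy as np


def bilinear_sample(plane, u, v):
    """Bilinearly sample a (C, H, W) feature plane at normalized coordinates.

    u (horizontal) and v (vertical) are in [-1, 1]; H and W must be >= 2.
    Returns a (C,) feature vector.
    """
    _, h, w = plane.shape
    # Map [-1, 1] to continuous pixel coordinates [0, W-1] and [0, H-1].
    x = (u + 1.0) / 2.0 * (w - 1)
    y = (v + 1.0) / 2.0 * (h - 1)
    x0 = int(np.clip(np.floor(x), 0, w - 2))
    y0 = int(np.clip(np.floor(y), 0, h - 2))
    dx, dy = x - x0, y - y0
    top = (1 - dx) * plane[:, y0, x0] + dx * plane[:, y0, x0 + 1]
    bottom = (1 - dx) * plane[:, y0 + 1, x0] + dx * plane[:, y0 + 1, x0 + 1]
    return (1 - dy) * top + dy * bottom


def query_triplane(planes, point):
    """Feature at a 3D point (x, y, z) in [-1, 1]^3, summed over the three planes."""
    x, y, z = point
    return (bilinear_sample(planes["xy"], x, y)
            + bilinear_sample(planes["xz"], x, z)
            + bilinear_sample(planes["yz"], y, z))


rng = np.random.default_rng(0)
planes = {name: rng.standard_normal((16, 32, 32)) for name in ("xy", "xz", "yz")}
feature = query_triplane(planes, (0.1, -0.4, 0.7))
print(feature.shape)  # (16,)
```

Each plane has the same (C, H, W) layout as an image latent, which is presumably what allows the tri-plane to be handled as "three special latents" by the same denoising network.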

The dual-mode toggling inference strategy allows the framework to switch between these two modes during the denoising process, optimizing for both speed and quality.

Multi-view Latent Diffusion Model in Dual3D

The multi-view latent diffusion model is the cornerstone of the Dual3D framework. It utilizes the strong priors of 2D latent diffusion models while incorporating multi-view image data to ensure 3D consistency. During training, the model adds noise to multi-view latents and employs a latent denoising network to process these noisy latents. A tiny transformer enhances the connections between multi-view features and tri-plane features, further improving the denoising process.
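
The "add noise" step of training follows the standard forward process of denoising diffusion models: a clean latent x0 becomes x_t = sqrt(ᾱ_t)·x0 + sqrt(1 − ᾱ_t)·ε, where ε is drawn from a standard normal distribution and ᾱ_t is the cumulative product of (1 − β) up to step t. The sketch applies this to a stack of multi-view latents, using one shared timestep for all views and a linear beta schedule. Both choices, and the latent shape of 4 views of 4×32×32, are illustrative assumptions rather than Dual3D’s documented settings.

```python
import numpy as np


def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal-retention factors (alpha-bar) for a linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)


def add_noise(latents, t, alpha_bars, rng):
    """Noise all views of `latents` to timestep t (one shared t for every view).

    Returns the noisy latents and the noise that was added, which the
    latent denoising network would be trained to predict or remove.
    """
    noise = rng.standard_normal(latents.shape)
    a_bar = alpha_bars[t]
    noisy = np.sqrt(a_bar) * latents + np.sqrt(1.0 - a_bar) * noise
    return noisy, noise


rng = np.random.default_rng(0)
alpha_bars = make_alpha_bars()
clean = rng.standard_normal((4, 4, 32, 32))  # (views, channels, height, width)
noisy, noise = add_noise(clean, t=500, alpha_bars=alpha_bars, rng=rng)
```

Sharing one timestep across views keeps every view at the same noise level, so the network denoises them together rather than at different stages.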

The model uses a combination of mean squared error (MSE) loss and Learned Perceptual Image Patch Similarity (LPIPS) loss to optimize the denoising process. Additionally, rendering techniques based on NeuS (Neural Surface) improve geometric quality, making the generated 3D assets more accurate and realistic.
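
NeuS renders a signed distance function (SDF) by converting SDF values along each camera ray into opacities. With the logistic function Φ_s(x) = 1 / (1 + e^(−s·x)), the opacity of the interval between samples p_i and p_(i+1) is α_i = max((Φ_s(f(p_i)) − Φ_s(f(p_(i+1)))) / Φ_s(f(p_i)), 0), and colors are alpha-composited front to back. The sketch below implements that discrete rule for a single ray; the sharpness s = 64 and the per-interval colors are illustrative assumptions. The MSE and LPIPS losses described above would then compare images rendered this way with target views.

```python
import numpy as np


def neus_render_ray(sdf, colors, s=64.0):
    """Volume-render one ray from SDF samples using the discrete NeuS opacity.

    sdf: (N,) signed distances at N ordered sample points along the ray.
    colors: (N-1, 3) RGB color for each interval between consecutive samples.
    s: sharpness of the logistic CDF (learnable in NeuS; fixed here).
    Returns the composited RGB color and the per-interval weights.
    """
    cdf = 1.0 / (1.0 + np.exp(-s * sdf))  # Phi_s(f(p_i))
    alpha = (cdf[:-1] - cdf[1:]) / np.maximum(cdf[:-1], 1e-10)
    alpha = np.clip(alpha, 0.0, 1.0)
    # Transmittance: probability that the ray reaches interval i unoccluded.
    transmittance = np.cumprod(np.concatenate([[1.0], 1.0 - alpha[:-1]]))
    weights = transmittance * alpha
    rgb = (weights[:, None] * colors).sum(axis=0)
    return rgb, weights


# A ray that crosses a surface halfway: the SDF goes from +1 (outside) to -1 (inside).
sdf = np.linspace(1.0, -1.0, 65)
colors = np.tile([1.0, 0.0, 0.0], (64, 1))  # a red surface
rgb, weights = neus_render_ray(sdf, colors)
```

The weights peak where the SDF crosses zero, so the rendered color is dominated by the surface itself. NeuS relies on this property to recover sharper geometry than density-based rendering.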

Performance and Efficiency

Dual3D excels in both the speed and the quality of 3D asset generation. On a single NVIDIA RTX 3090 GPU, the framework can generate a high-quality 3D asset in just 50 seconds. This speed comes from the dual-mode toggling inference strategy, which minimizes the number of costly 3D-mode denoising steps. By switching efficiently between 2D and 3D modes, Dual3D keeps output quality high while keeping generation time short.

Benchmarks and Performance Metrics

Extensive experiments demonstrate that Dual3D delivers state-of-the-art performance in text-to-3D generation. The framework significantly reduces the generation time compared to traditional models while ensuring high-quality, 3D-consistent assets. Benchmarks reveal that Dual3D can generate 3D assets in as little as 10 seconds of denoising time, a testament to its efficiency.

The texture refinement process gives the generated 3D objects a high level of visual realism. Dual3D’s ability to handle multi-view image data while maintaining geometric accuracy makes it a prominent solution in the text-to-3D generation space.

Dual3D’s performance metrics highlight its capability to deliver both speed and quality, revolutionizing how text descriptions are transformed into 3D assets.

Real-World Applications

Dual3D offers transformative potential in several key industries, including gaming, robotics, and VR/AR. In gaming, developers can use Dual3D to quickly create detailed and consistent 3D environments from textual descriptions, saving significant time and resources. In robotics, Dual3D can help robots turn text-based instructions into 3D representations, improving their ability to interact with and navigate difficult environments. Applications such as autonomous navigation and human-robot interaction, which demand precise 3D representations of the surroundings, depend on this capability.

Dual3D also makes it easier to build engaging virtual environments for VR and AR. Designers can turn straightforward text descriptions into 3D assets to prototype and build VR/AR experiences quickly. The framework’s efficient texture refinement process produces realistic, detailed virtual objects that improve the user experience.

Potential Impact on Various Industries

Beyond gaming, robotics, and VR/AR, Dual3D has the potential to transform a number of other industries. In healthcare, for instance, it might produce intricate 3D models of anatomical structures from medical descriptions to support surgical planning and teaching. In manufacturing, it can create 3D models of components from textual specifications, speeding up design and prototyping. In education, Dual3D can create 3D representations that improve comprehension of and engagement with text-based learning materials.

Challenges and Solutions

3D generation presents several technical challenges, including the need for high computational resources, maintaining geometric consistency, and achieving realistic textures. Traditional methods often struggle with these issues, resulting in incomplete or low-quality 3D assets.

Major Challenges

One significant challenge is the multi-face Janus problem: because 2D diffusion models lack the 3D priors needed for accurate 3D representation, a generated object may show its front (for example, a face) from several viewpoints. This issue leads to low success rates and inconsistencies in the generated models. Additionally, the high rendering cost during inference and the need for extensive per-asset optimization further complicate the 3D generation process.

Suggested Solutions

Dual3D addresses these challenges through its innovative dual-mode multi-view latent diffusion model. By combining 2D and 3D modes, the framework leverages the strengths of each to achieve consistent and high-quality 3D generation. The dual-mode toggling inference strategy significantly reduces the denoising steps required in 3D mode, cutting down on generation time and computational cost.

The framework’s efficient texture refinement process enhances the realism of the generated assets by addressing style differences between synthetic and real-world textures. Furthermore, the use of pre-trained models for fine-tuning avoids the high costs associated with training from scratch, making the framework more accessible and scalable.

Future Prospects

The Dual3D team plans to enhance the framework’s capabilities by expanding its ability to handle complex text inputs and improving visual quality with advanced rendering techniques. They aim to integrate more sophisticated texture refinement algorithms, making the generated models look indistinguishable from real-world objects. These improvements will reinforce Dual3D’s position as a leading text-to-3D generation solution.

In the long term, Dual3D aims to become an easily integrated platform for various industries, transforming text descriptions into 3D models. The team focuses on advancing 3D generation while improving precision, efficiency, and versatility. Their goal is to make high-quality 3D generation accessible to everyone, enabling quick and easy conversion of textual ideas into realistic 3D models. Ongoing research and development will keep Dual3D at the cutting edge of technology in computer vision and graphics.

Conclusion

Dual3D converts text descriptions into high-quality 3D models efficiently, a significant advance for the field of 3D generation. Built on a dual-mode multi-view latent diffusion model, it delivers speed, accuracy, and consistency, setting a new standard for text-to-3D generation. Its transformative potential is illustrated by its applications in robotics, gaming, VR/AR, and other industries. By overcoming previous challenges with efficient solutions, Dual3D offers a scalable and accessible tool for creating detailed 3D assets. As the framework continues to evolve, it promises to remain at the forefront of 3D generation technology.

- Source: https://www.analyticsvidhya.com/blog/2024/05/dual3d-new-diffusion-model-for-text-to-3d-model-generation/