Get ready to step into a world of pure imagination, because Google Genie has arrived to make your dreams a virtual reality!

Last week, OpenAI mesmerized all of us with its advanced video-generation tool, Sora AI, and now Google’s groundbreaking AI model transforms simple images into fully playable virtual environments.

Yes, you can now craft an entire 2D platformer game with a flick of your wrist (or a touch of your keyboard, at least).

Tim Rocktäschel, Open-Endedness Team Lead at Google DeepMind, announced Google Genie on X with the following words:

I am really excited to reveal what @GoogleDeepMind’s Open Endedness Team has been up to 🚀. We introduce Genie 🧞, a foundation world model trained exclusively from Internet videos that can generate an endless variety of action-controllable 2D worlds given image prompts. pic.twitter.com/TnQ8uv81wc

— Tim Rocktäschel (@_rockt) February 26, 2024

What is Google Genie?

Traditional game design often requires complex coding skills. With Google Genie, the technical barriers are significantly lowered. The AI handles the intricate processes of transforming your idea into a playable virtual environment, letting you focus on the pure joy of creation.

Google Genie sits at the forefront of AI technology and is classified as a “foundation world model”.

This means it has been trained on a massive dataset of internet videos, particularly those showcasing gameplay. Through this training, Genie develops a deep understanding of how environments function and how players typically interact with them.

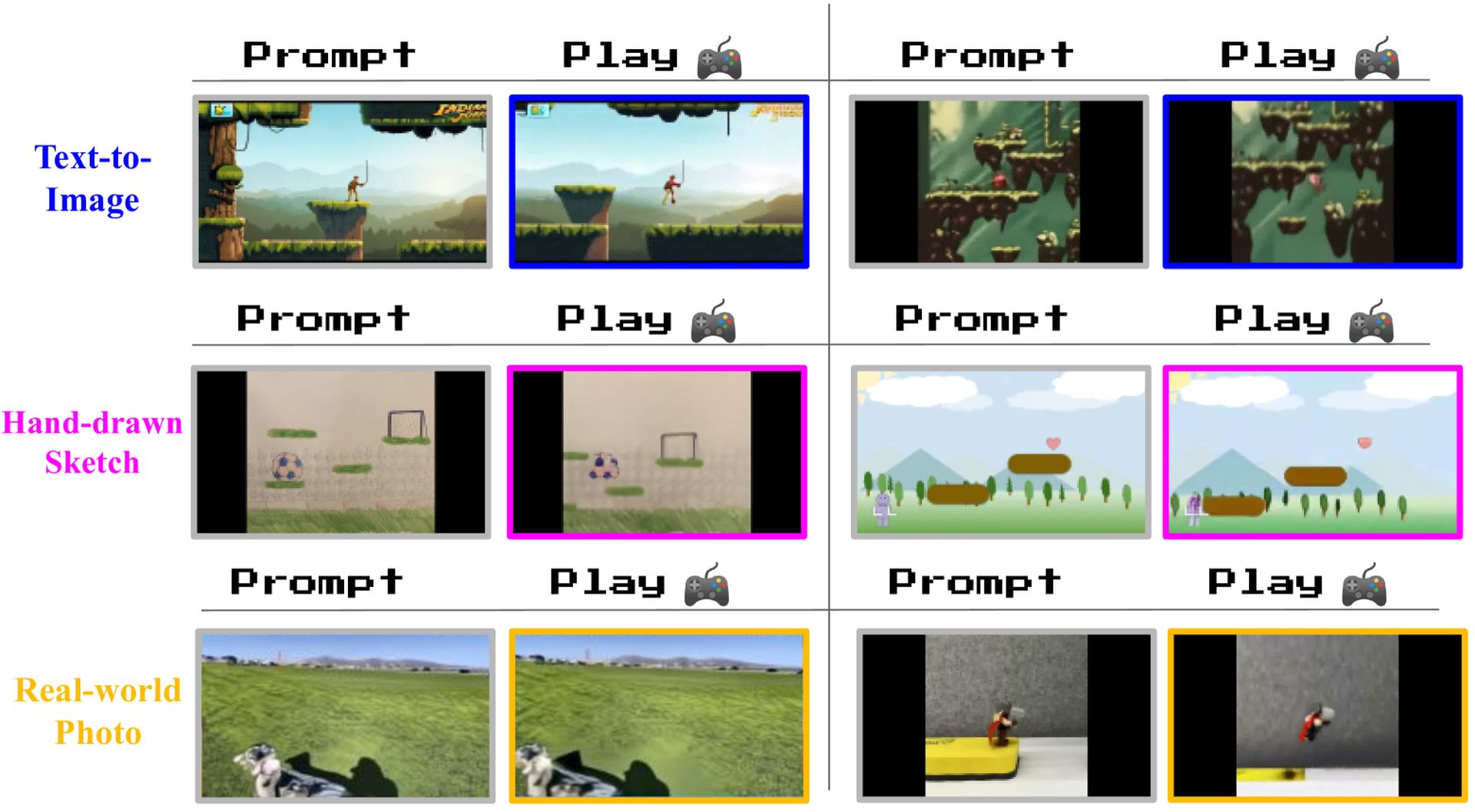

Think of Google Genie as your personal game development assistant. All you need to do is provide a starting point and this could be:

- an image

- a written description

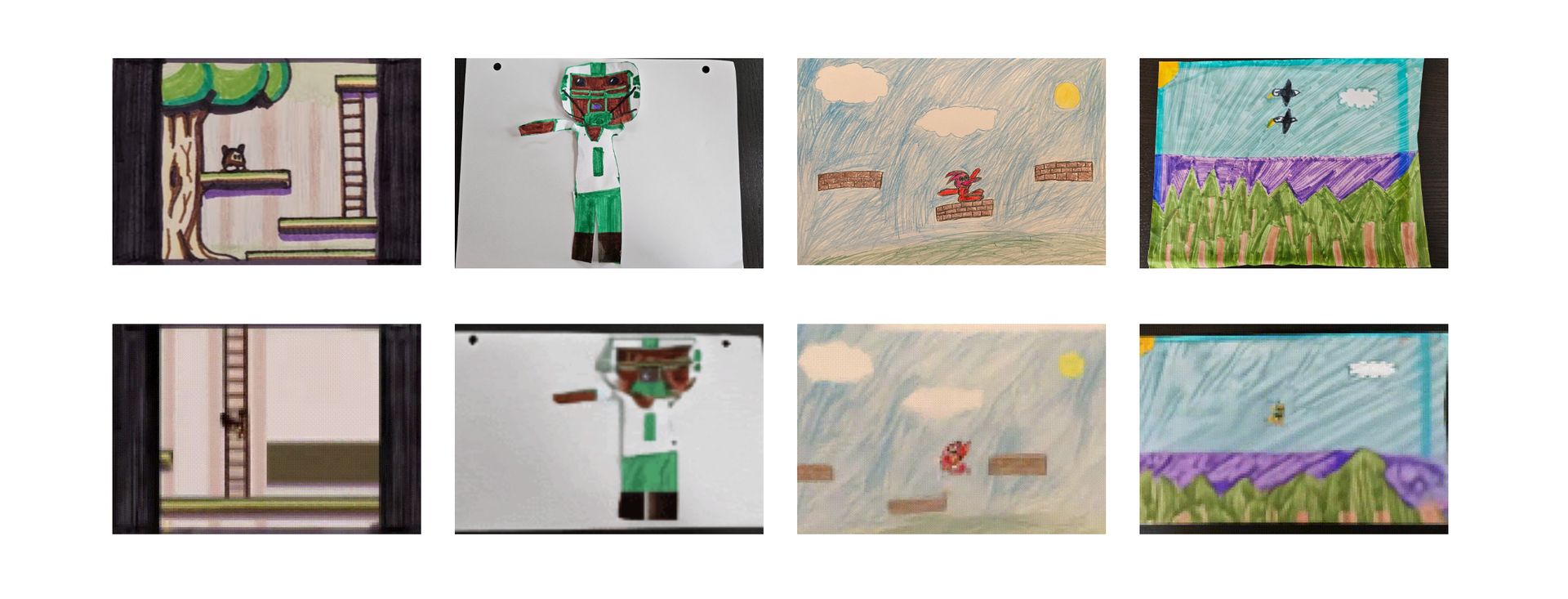

- a simple hand-drawn sketch

Google Genie then takes your input and uses its creative power to build a unique, fully playable virtual space.

The real magic here is that Google Genie learns to create controllable virtual worlds without specific gameplay instructions. It analyzes videos to understand the basic rules of environments and what players can interact with. Remarkably, this allows for consistent control schemes even across totally new, AI-generated worlds.

The magic of Google DeepMind

Google DeepMind manages to shock us with almost everything it does and Google Genie is no exception.

Google Genie’s brain is built on a special type of transformer called a spatiotemporal (ST) transformer. Unlike regular transformers designed for text, ST transformers are specifically tuned to understand videos. They pay attention to what’s happening within each individual frame (spatial attention) and also how things change across multiple frames over time (temporal attention). This makes them much better at handling the complex patterns found in moving images.
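To make the spatial/temporal split concrete, here is a minimal pure-Python sketch of one factorized attention block. It is an illustration only, not Genie's implementation: the function names are hypothetical, there are no learned projection weights (queries, keys, and values are the raw token vectors), and the causal masking in the temporal step is an assumption.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]

def spatial_attention(video):
    """Each token attends only to the tokens of its own frame."""
    return [[attend(tok, frame, frame) for tok in frame] for frame in video]

def temporal_attention(video):
    """Each token attends to the same position in this and earlier frames."""
    out = []
    for t in range(len(video)):
        frame_out = []
        for s in range(len(video[0])):
            history = [video[u][s] for u in range(t + 1)]  # assumed causal mask
            frame_out.append(attend(video[t][s], history, history))
        out.append(frame_out)
    return out

def st_block(video):
    """One toy ST block: spatial mixing followed by temporal mixing."""
    return temporal_attention(spatial_attention(video))

# video[t][s] is the feature vector of spatial token s in frame t
video = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[0.5, 0.5], [1.0, 1.0]],
    [[0.0, 2.0], [2.0, 0.0]],
]
mixed = st_block(video)
```

Splitting attention this way means each token is compared with the tokens of its own frame plus its own history, rather than with every token in the whole clip.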

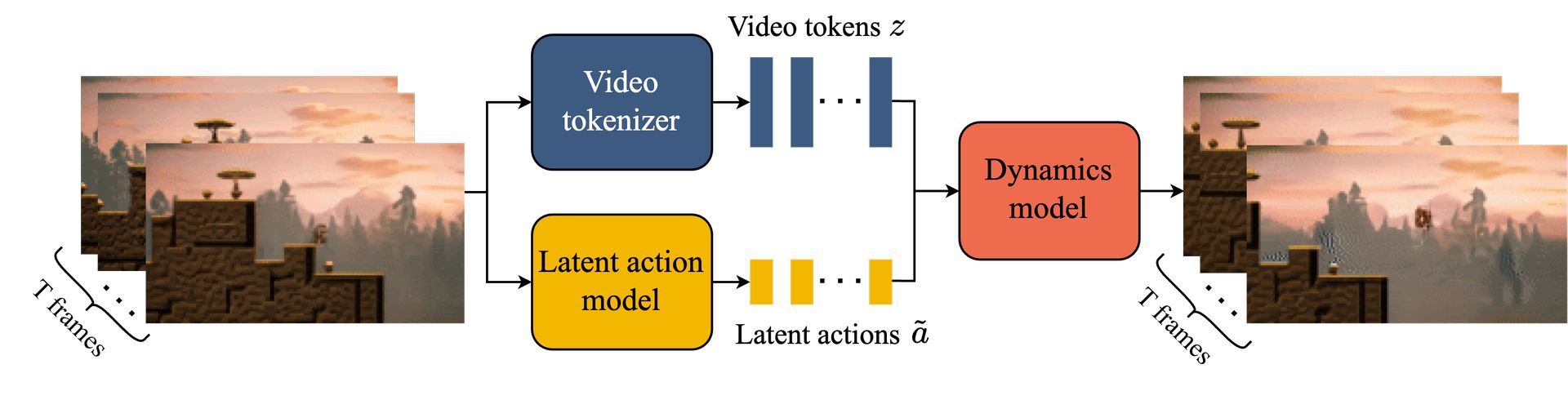

Videos are made up of a ton of pixels, which can be a lot for a model to handle. Genie uses a video tokenizer to squash those pixel-filled frames down into smaller, easier-to-process chunks called tokens. Think of it like translating a whole movie into a series of key symbols. This simplification makes the whole video generation process smoother and faster.
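As a rough illustration of how a frame gets broken into chunks, the sketch below cuts a tiny grayscale frame into non-overlapping patches. This covers only the slicing step commonly used in vision transformers; a real tokenizer would then pass these patches through a learned encoder. The `patchify` helper and the 4x4 frame are hypothetical.

```python
def patchify(frame, patch):
    """Split an H x W frame (list of rows) into flattened patch x patch blocks."""
    height, width = len(frame), len(frame[0])
    if height % patch or width % patch:
        raise ValueError("frame size must be divisible by patch size")
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            block = [
                frame[top + dy][left + dx]
                for dy in range(patch)
                for dx in range(patch)
            ]
            patches.append(block)
    return patches

# A 4x4 frame whose pixel values are simply 0..15
frame = [[row * 4 + col for col in range(4)] for row in range(4)]
patches = patchify(frame, 2)  # four patches of four pixels each
```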

The latent action model (LAM) is like a detective within Google Genie. It watches videos and tries to figure out the unspoken actions happening between the frames. This is important because if you want to control how a generated video plays out, you need to understand the actions that drive it. Since videos from the internet don’t come with action labels, the LAM has to learn to figure these things out on its own.
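The idea of labelling a clip without any action annotations can be sketched with a toy example. Here each frame is a one-dimensional strip with a single lit pixel, and the "latent action" is whichever code best explains the change from one frame to the next. Everything in this sketch is an assumption made for illustration: the real model learns its encoder and decoder, whereas `shift` is a hand-written stand-in and the three-entry `ACTIONS` table is invented.

```python
def shift(frame, offset):
    """Hand-written stand-in for a decoder: move pixels by `offset`, pad with 0."""
    width = len(frame)
    out = [0] * width
    for x, value in enumerate(frame):
        if 0 <= x + offset < width:
            out[x + offset] = value
    return out

# Hypothetical latent codes and the motion each one stands for
ACTIONS = {0: -1, 1: 0, 2: 1}

def infer_latent_action(prev_frame, next_frame):
    """Pick the code whose predicted next frame has the lowest squared error."""
    def error(code):
        predicted = shift(prev_frame, ACTIONS[code])
        return sum((p - n) ** 2 for p, n in zip(predicted, next_frame))
    return min(ACTIONS, key=error)

clip = [
    [0, 0, 1, 0, 0],
    [0, 0, 0, 1, 0],
    [0, 0, 0, 1, 0],
    [0, 0, 1, 0, 0],
]
labels = [infer_latent_action(a, b) for a, b in zip(clip, clip[1:])]
print(labels)  # [2, 1, 0]: right, stay, left
```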

The dynamics model is the heart of Google Genie’s video-making power. It takes the video tokens and the figured-out actions from the LAM and uses them to predict what the next frame of the video should look like. It’s like having a crystal ball that can show you the next step in a movie based on what’s happened so far and the action you want to take.
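A very small stand-in can show the shape of this prediction problem: given the previous token and an action, predict the next token. The sketch below just counts transitions in example episodes and returns the most frequent outcome. It is not the transformer Genie uses, and `fit_dynamics`, `predict_next`, and the episode data are hypothetical.

```python
from collections import Counter, defaultdict

def fit_dynamics(episodes):
    """Count (previous token, action) -> next token and keep the most common."""
    counts = defaultdict(Counter)
    for tokens, actions in episodes:
        for prev_tok, action, next_tok in zip(tokens, actions, tokens[1:]):
            counts[(prev_tok, action)][next_tok] += 1
    return {key: c.most_common(1)[0][0] for key, c in counts.items()}

def predict_next(model, token, action):
    """Predict the next token; unseen pairs fall back to 'nothing changes'."""
    return model.get((token, action), token)

# Each episode: one token per frame, one latent action between frames
episodes = [
    ([2, 3, 3, 2], [2, 1, 0]),
    ([0, 1, 2], [2, 2]),
]
model = fit_dynamics(episodes)
print(predict_next(model, 2, 2))  # 3
```

The fallback for unseen pairs is a choice made for this sketch; a learned model would generalize from similar situations instead.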

VQ-VAE (vector-quantized variational autoencoder) is a technique that helps Google Genie organize information. It’s kind of like giving both the video tokenizer and the LAM a special codebook to translate things into smaller, more manageable pieces. This makes learning and representing complex patterns in videos a lot more efficient.
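The codebook lookup at the core of vector quantization fits in a few lines: a continuous vector is replaced by the index of its nearest codebook entry. The sketch below shows only that lookup step, with an invented four-entry codebook; in a trained VQ-VAE the codebook entries are learned.

```python
def quantize(vector, codebook):
    """Return (index, entry) of the codebook entry closest to `vector`."""
    def sq_dist(code):
        return sum((v - c) ** 2 for v, c in zip(vector, code))
    index = min(range(len(codebook)), key=lambda i: sq_dist(codebook[i]))
    return index, codebook[index]

# Hypothetical codebook of four 2-D entries
codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
index, code = quantize([0.9, 0.2], codebook)
print(index, code)  # 1 [1.0, 0.0]
```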

Here is a summary of Google Genie’s workflow:

- Latent action inference:

  - Encoder: Takes in a video sequence. It generates continuous representations that relate to the actions occurring between frames

  - Decoder: This component exists only for training. It predicts the actual next frame using previous frames and the latent actions produced by the encoder. This helps train the LAM to generate meaningful action representations

  - VQ-VAE: The predicted latent actions are quantized into a small set of discrete codes. This ensures a limited action vocabulary, making human control during the generation process easier

- Video tokenization:

  - ST-Transformer Based Video Tokenizer (ST-ViViT): Incorporates both spatial and temporal information during the tokenization phase. This improves video generation quality compared to spatial-only tokenizers

- Dynamics modeling:

  - MaskGIT Transformer: Genie uses a decoder-only variant of the MaskGIT architecture

  - Input: At each step, it receives both the previous video tokens and the corresponding latent action

  - Output: Predicts the tokens representing the next frame

  - Training: Trained with a cross-entropy loss to align the predicted tokens with the real tokens from the video. Masking is used at training time to improve robustness

- Inference:

  - Initialization: The user provides an initial image frame, which is tokenized

  - Action Selection: The user chooses a desired action from the discrete vocabulary learned during the LAM phase

  - Prediction: The dynamics model generates the next frame’s tokens based on the initial frame tokens and the chosen action

  - Decoding: The video tokenizer’s decoder converts the predicted tokens back into a video frame

  - Autoregression: The process repeats, with the newly generated frame and a new user-specified action becoming the input for the next prediction
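The inference steps above can be sketched as a short loop. This is a toy under loud assumptions: a frame is a strip with one lit pixel, its "token" is that pixel's index, and the `dynamics` function is a hand-written rule rather than a trained model. It also looks only at the latest token, where the real dynamics model sees the earlier frame tokens as well. All names are hypothetical.

```python
def tokenize(frame):
    """Toy encoder: the token is the index of the lit pixel."""
    return frame.index(1)

def detokenize(token, width):
    """Toy decoder: rebuild the frame from its token."""
    frame = [0] * width
    frame[token] = 1
    return frame

# Hypothetical discrete action vocabulary: code -> horizontal move
SHIFTS = {0: -1, 1: 0, 2: 1}

def dynamics(token, action, width):
    """Hand-written stand-in for the dynamics model, clamped to the frame."""
    return min(max(token + SHIFTS[action], 0), width - 1)

def rollout(first_frame, actions):
    """Tokenize the prompt, then predict and decode one frame per action."""
    width = len(first_frame)
    token = tokenize(first_frame)                # initialization
    frames = [first_frame]
    for action in actions:                       # action selection
        token = dynamics(token, action, width)   # prediction
        frames.append(detokenize(token, width))  # decoding
    return frames                                # each step feeds the next

frames = rollout([0, 1, 0, 0], [2, 2, 2, 0])
for frame in frames:
    print(frame)
```

The third "move right" has no effect here because the toy rule clamps the character at the edge of the strip.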

Want to learn more? Here is Google Genie’s research paper.

How to use Google Genie

While Google Genie isn’t yet available for public use, you can find more info and fascinating demos on the official website. And keep an eye out: This technology has the potential to fundamentally change how we create and experience games!

Building the future of gaming

While still in its early stages, Google Genie showcases the astonishing power of AI-driven creativity. It blurs the line between our imagined worlds and the ones we play in, hinting at a future where sharing your game is as easy as sharing a photo.

However, there are challenges to overcome. Currently, Genie excels at 2D platformers, but scaling to complex 3D worlds remains difficult.

Additionally, the generated games have relatively simple controls; future research will likely focus on finer control and complex mechanics.

As a generative model, Genie can be surprising, for better or worse – finding ways to guide the generation process towards the creator’s intent is an area of active research.

Featured image credit: Oleg Gamulinskii/Pixabay.

- Source: https://dataconomy.com/2024/02/27/what-is-google-genie-and-how-to-use-it/