Wikipedia downgraded CNET’s reliability rating after the tech publication, in operation for 30 years, used AI to generate news stories that turned out to be plagiarised and fraught with errors.

CNET published more than 70 financial advice articles written by artificial intelligence between November 2022 and January 2023. The articles were published under the byline ‘CNET Money Staff.’

An audit confirmed that many of the stories contained factual errors, serious omissions, and plagiarised content. CNET stopped running AI-written stories after the news broke early in 2023, but the damage, according to Wikipedia, had already been done.


Demoting AI-driven CNET

“CNET, usually regarded as an ordinary tech RS [reliable source], has started experimentally running AI-generated articles, which are riddled with errors,” said Wikipedia editor David Gerard, as reported by Futurism.

“So far, the experiment is not going down well, as it shouldn’t. I haven’t found any yet, but any of these articles that make it into a Wikipedia article need to be removed.”

Gerard was speaking to kick-start a discussion among Wikipedia editors about CNET’s AI content in January 2023. Editors of the online encyclopedia maintain the Wikipedia Reliable Sources or Perennial Sources forum, where they meet to decide whether a news source can be trusted and used in citations.

The forum features a chart ranking news outlets according to their reliability. After many hours of debate, the editors agreed that the AI-driven version of CNET was not trustworthy and demoted content from the website to “generally unreliable.”

“Let’s take a step back and consider what we’ve witnessed here,” another Wikipedia editor who goes by the name “Bloodofox” said.

“CNET generated a bunch of content with AI, listed some of it as written by people (!), claimed it was all edited and vetted by people, and then, after getting caught, issued some ‘corrections’ followed by attacks on the journalists that reported on it,” they added.

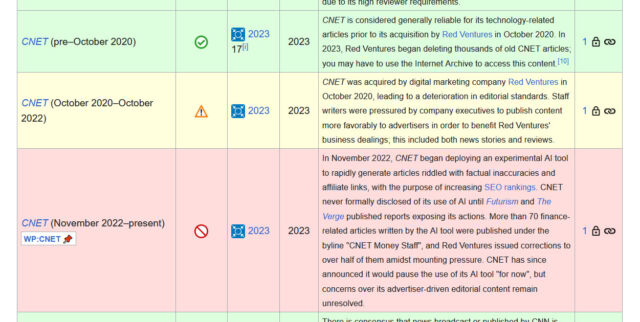

The Wikipedia Perennial Sources page breaks down the reliability ratings for CNET into three time periods. In the first, before October 2020, CNET was considered “generally reliable.” In the second, from October 2020 to October 2022, Wikipedia did not rate the website following its $500 million acquisition by Red Ventures.

The third period runs from November 2022 to the present. During this period, Wikipedia downgraded CNET to a “generally unreliable” source after the website turned to AI “to rapidly generate articles riddled with factual inaccuracies and affiliate links.”

Google finds no problem with AI

According to the Futurism report, things started going downhill for CNET after the Red Ventures acquisition in 2020. Wikipedia said the ownership change led “to a deterioration in editorial standards” because Red Ventures allegedly prioritized SEO over quality. CNET was not the only outlet in the Red Ventures stable secretly experimenting with AI.

Wikipedia editors also pointed to other reliability issues involving separate Red Ventures-owned websites, including Healthline and Bankrate. The sites reportedly ran content that had been written by AI without disclosure or human oversight.

“Red Ventures has not been remotely transparent about any of this—the company could at best be described as deceitful,” said the anonymous Wikipedia editor Bloodofox.

In a statement addressing the Wikipedia downgrade and AI-made content, CNET claimed that it provides “unbiased tech-focused news and advice.”

“We have been trusted for nearly 30 years because of our rigorous editorial and product review standards,” a spokesperson told Futurism. “It is important to clarify that CNET is not actively using AI to create new content. While we have no specific plans to restart, any future initiatives would follow our public AI policy.”

The Wikipedia decision highlights the persistent worries regarding the use of AI to create articles in the media industry. Meanwhile, Google has no problem with AI material, as long as it is not used to game the search algorithm.

In its guidance on AI-generated content, Google says it has always “believed in the power of AI to transform the ability to deliver helpful information.”

Google says its ranking systems focus on the quality of content and not how it is produced, whether by humans or AI. It looks at expertise, experience, authoritativeness, and trustworthiness.

However, the company notes that using automation, including AI, to generate content with the primary purpose of manipulating ranking in search results “is a violation of our spam policies.”

- Source: https://metanews.com/how-ai-generated-content-led-to-a-reliability-rating-downgrade-for-cnet/