Introduction

Welcome to this article, where we’ll explore the exciting world of Generative AI. We will mainly focus on Conditional Variational Autoencoders or CVAEs, these are like the next level of AI artistry, merging the strengths of Variational Autoencoders (VAEs) with the ability to follow specific instructions, giving us fine-tuned control over image creation. Throughout this article, we’ll dive deep into CVAEs, and will see how and why they can be used in various real-world scenarios, and even provide you with some easy-to-understand code examples to showcase their potential.

This article was published as a part of the Data Science Blogathon.

Table of contents

Understanding Variational Autoencoders (VAEs)

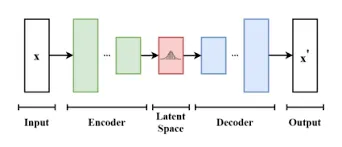

Before diving into CVAEs, lets focus on fundamentals of VAEs. VAEs are a type of generative model that combines an encoder and a decoder network. They are used to learn the underlying structure of data and generate new samples.

Sure, let’s use a simple example involving coffee preferences to explain Variational Autoencoders (VAEs)

Imagine you want to represent everyone’s coffee preferences in your office:

- Encoder: Each person summarizes their coffee choice (black, latte, cappuccino) with a few words (e.g., firm, creamy, mild).

- Variation: Understands that even within the same choice (e.g., latte), there are variations in milk, sweetness, etc.

- Latent Space: Creates a flexible space where coffee preferences can vary.

- Decoder: Uses these summaries to make coffee for colleagues, with slight variations, respecting their preferences.

- Generative Power: Can create new coffee styles that suit individual tastes but aren’t exact replicas.

VAEs work similarly, learning core features and variations in data to generate new, similar data with slight differences.

Here’s a simple Variational Autoencoder (VAE) implementation using Python and TensorFlow/Keras. This example uses the MNIST dataset for simplicity, but you can adapt it to other data types.

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers

import numpy as np # Load and preprocess the MNIST dataset

(x_train, _), (x_test, _) = keras.datasets.mnist.load_data()

x_train = x_train.astype('float32') / 255.0

x_test = x_test.astype('float32') / 255.0 # Define the VAE model

latent_dim = 2 # Encoder

encoder_inputs = keras.Input(shape=(28, 28))

x = layers.Flatten()(encoder_inputs)

x = layers.Dense(256, activation='relu')(x)

z_mean = layers.Dense(latent_dim)(x)

z_log_var = layers.Dense(latent_dim)(x) # Reparameterization trick

def sampling(args): z_mean, z_log_var = args epsilon = tf.keras.backend.random_normal(shape=(tf.shape(z_mean)[0], latent_dim)) return z_mean + tf.exp(0.5 * z_log_var) * epsilon z = layers.Lambda(sampling)([z_mean, z_log_var]) # Decoder

decoder_inputs = keras.Input(shape=(latent_dim,))

x = layers.Dense(256, activation='relu')(decoder_inputs)

x = layers.Dense(28 * 28, activation='sigmoid')(x)

decoder_outputs = layers.Reshape((28, 28))(x) # Define the VAE model

encoder = keras.Model(encoder_inputs, [z_mean, z_log_var, z], name='encoder')

decoder = keras.Model(decoder_inputs, decoder_outputs, name='decoder')

vae_outputs = decoder(encoder(encoder_inputs)[2])

vae = keras.Model(encoder_inputs, vae_outputs, name='vae') # Loss function

def vae_loss(x, x_decoded_mean, z_log_var, z_mean): x = tf.keras.backend.flatten(x) x_decoded_mean = tf.keras.backend.flatten(x_decoded_mean) xent_loss = keras.losses.binary_crossentropy(x, x_decoded_mean) kl_loss = -0.5 * tf.reduce_mean(1 + z_log_var - tf.square(z_mean) - tf.exp(z_log_var)) return xent_loss + kl_loss vae.compile(optimizer='adam', loss=vae_loss)

vae.fit(x_train, x_train, epochs=10, batch_size=32, validation_data=(x_test, x_test))

Conditional Variational Autoencoders (CVAEs) Explained

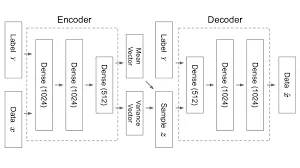

CVAEs extend the capabilities of VAEs by introducing conditional inputs. CVAEs can generate data samples based on specific conditions or information. For example, you can conditionally generate images of cats or dogs by providing the model with the desired class label as input.

Let us understand using a real time example.

Online Shopping with CVAEs Imagine you’re shopping online for sneakers:

- Basic VAE (no conditions): The website shows you random sneakers.

- CVAE (with conditions): You select your preferences – color (red), size (10), and style (running).

- Encoder: The website understands your choices and filters sneakers based on these conditions.

- Variation: Recognizing that even within your conditions, there are variations (different shades of red, styles of running shoes), it considers those.

- Latent Space: It creates a “sneaker customization space” where variations are allowed.

- Decoder: Using your personalized conditions, it shows you sneakers that match your preferences closely.

CVAEs, like online shopping websites, use specific conditions (your preferences) to generate customized data (sneaker options) that closely align with your choices.

Continuing from the Variational Autoencoder (VAE) example, you can implement a Conditional Variational Autoencoder (CVAE). In this example, we’ll consider the MNIST dataset and generate digits conditionally based on a class label.

# Define the CVAE model

encoder = keras.Model([encoder_inputs, label], [z_mean, z_log_var, z], name='encoder')

decoder = keras.Model([decoder_inputs, label], decoder_outputs, name='decoder')

cvae_outputs = decoder([encoder([encoder_inputs, label])[2], label])

cvae = keras.Model([encoder_inputs, label], cvae_outputs, name='cvae')

Difference Between VAEs and CVAEs

VAE

- VAEs are like artists who create art but with a bit of randomness.

- They learn to create diverse variations of data without any specific instructions.

- Useful for generating new data samples without conditions, like random art.

CVAE

- CVAEs are like artists who can follow specific requests

- They generate data based on given conditions or instructions

- Useful for tasks where you want precise control over what’s generated, like turning a horse into a zebra while preserving the main features

Implementing CVAEs: Code Examples

Let’s explore a simple Python code example using TensorFlow and Keras to implement a CVAE for generating handwritten digits

# Import necessary libraries

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers

from tensorflow.keras.models import Model # Define the CVAE model architecture

latent_dim = 2

input_shape = (28, 28, 1)

num_classes = 10 # Encoder network

encoder_inputs = keras.Input(shape=input_shape)

x = layers.Conv2D(32, 3, padding='same', activation='relu')(encoder_inputs)

x = layers.Flatten()(x)

x = layers.Dense(64, activation='relu')(x) # Conditional input

label = keras.Input(shape=(num_classes,))

x = layers.concatenate([x, label]) # Variational layers

z_mean = layers.Dense(latent_dim)(x)

z_log_var = layers.Dense(latent_dim)(x) # Reparameterization trick

def sampling(args): z_mean, z_log_var = args epsilon = tf.keras.backend.random_normal(shape=(tf.shape(z_mean)[0], latent_dim)) return z_mean + tf.exp(0.5 * z_log_var) * epsilon z = layers.Lambda(sampling)([z_mean, z_log_var]) # Decoder network

decoder_inputs = layers.Input(shape=(latent_dim,))

x = layers.concatenate([decoder_inputs, label])

x = layers.Dense(64, activation='relu')(x)

x = layers.Dense(28 * 28 * 1, activation='sigmoid')(x)

x = layers.Reshape((28, 28, 1))(x) # Create the models

encoder = Model([encoder_inputs, label], [z_mean, z_log_var, z], name='encoder')

decoder = Model([decoder_inputs, label], x, name='decoder')

cvae = Model([encoder_inputs, label], decoder([z, label]), name='cvae')

#import csvThis code provides a basic structure for a CVAE model. To train and generate images, you’ll need an appropriate dataset and further tuning.

Applications of CVAEs

CVAEs have applications in diverse domains, including:

Image-to-Image Translation: They can be used to translate images from one domain to another while preserving content. Imagine you have a photo of a horse, and you want to turn it into a zebra while keeping the main features. CVAEs can do that:

#import csv# Translate horse image to a zebra image

translated_image = cvae_generate(horse_image, target="zebra")Style Transfer: CVAEs enable the transfer of artistic styles between images. Suppose you have a picture and want it to look like a famous painting, say, Van Gogh’s “Starry Night.” CVAEs can apply that style:

#import csv

# Apply "Starry Night" style to your photo

styled_image = cvae_apply_style(your_photo, style="Starry Night")- Anomaly Detection : They are effective in detecting anomalies in data. You have a dataset of normal heartbeats, and you want to detect irregular heartbeats. CVAEs can spot anomalies:

# Detect irregular heartbeats

is_anomaly = cvae_detect_anomaly(heartbeat_data)- Drug Discovery : CVAEs help in generating molecular structures for drug discovery. Let’s say you need to find new molecules for a life-saving drug. CVAEs can help design molecular structures:

#import csv# Generate potential drug molecules

drug_molecule = cvae_generate_molecule("anti-cancer")

These applications show how CVAEs can transform images, apply artistic styles, detect anomalies, and aid in crucial tasks like drug discovery, all while keeping the underlying data meaningful and useful.

Challenges and Future Directions

Challenges

- Mode Collapse: Think of CVAEs like a painter who sometimes forgets to use all their colors. Mode collapse happens when CVAEs keep using the same colors (representations) for different things. So, they might paint all animals in just one color, losing diversity.

- Generating High-Resolution Images: Imagine asking an artist to paint a detailed, large mural on a tiny canvas. It’s challenging. CVAEs face a similar challenge when trying to create highly detailed, big pictures.

Future Goals

Researchers want to make CVAEs better:

- Avoid Mode Collapse: They’re working on making sure the artist (CVAE) uses all the colors (representations) they have, creating more diverse and accurate results.

- High-Resolution Art: They aim to help the artist (CVAE) paint bigger and more detailed murals (images) by improving the techniques used. This way, we can get impressive, high-quality artworks from CVAEs.

Conclusion

Conditional Variational Autoencoders represent a groundbreaking development in Generative AI. Their ability to generate data based on specific conditions opens up a world of possibilities in various applications. By understanding their underlying principles and implementing them effectively, we can harness the potential of CVAEs for advanced image generation and beyond.

Key Takeaways

- Generative AI Advancement: Enabling image generation with conditional inputs.

- Simple Coffee Analogy: Think of VAEs like summarizing coffee preferences, allowing variations while preserving the essence.

- Basic VAE Code: A beginner-friendly Python code example of a VAE is provided, using the MNIST dataset.

- CVAE Implementation: The article includes a code snippet to implement a CVAE for conditional image generation.

- Online Shopping Example: An analogy of online sneaker shopping illustrates CVAEs’ ability to customize data based on conditions.

Frequently Asked Questions

A. While VAEs generate data with some randomness, CVAEs generate data with specific conditions or constraints. VAEs are like artists creating random art.

A. Conditional Variational Autoencoders (CVAEs) are very useful in the world of AI. They can create customized data based on specific conditions, opening doors to many applications.

A. Yes, you can find open-source libraries like TensorFlow and PyTorch that provide tools for building CVAEs. Some pre-trained models and code examples are available in these libraries to kickstart your projects.

A. Pre-trained CVAE models are less common compared to other architectures like Convolutional Neural Networks (CNNs). However, you can find pre-trained VAEs that you can adapt for your task by fine-tuning the model.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.

Related

- SEO Powered Content & PR Distribution. Get Amplified Today.

- PlatoData.Network Vertical Generative Ai. Empower Yourself. Access Here.

- PlatoAiStream. Web3 Intelligence. Knowledge Amplified. Access Here.

- PlatoESG. Automotive / EVs, Carbon, CleanTech, Energy, Environment, Solar, Waste Management. Access Here.

- PlatoHealth. Biotech and Clinical Trials Intelligence. Access Here.

- ChartPrime. Elevate your Trading Game with ChartPrime. Access Here.

- BlockOffsets. Modernizing Environmental Offset Ownership. Access Here.

- Source: https://www.analyticsvidhya.com/blog/2023/09/generative-ai-conditional-vaes/