A recent TechSpot article suggests that Apple is moving cautiously towards release of some kind of generative AI, possibly with iOS 18 and A17 Pro. This is interesting not just for Apple users like me but also as broader validation of a real mobile opportunity for generative AI, which honestly had not seemed like a given, for multiple reasons. Finding a balance between performance and memory demand looks daunting for models baselining at a billion or more parameters. Will power drain be a problem? Then there are legal and hallucination issues, which perhaps could be managed through carefully limited use models. Despite the apparent challenges, I find it encouraging that a company which tends to be more thoughtful about product releases than most sees a possible path to success. If they can, then so can others, which makes a recent blog from Expedera enlightening for me.

A quick recap on generative image creation

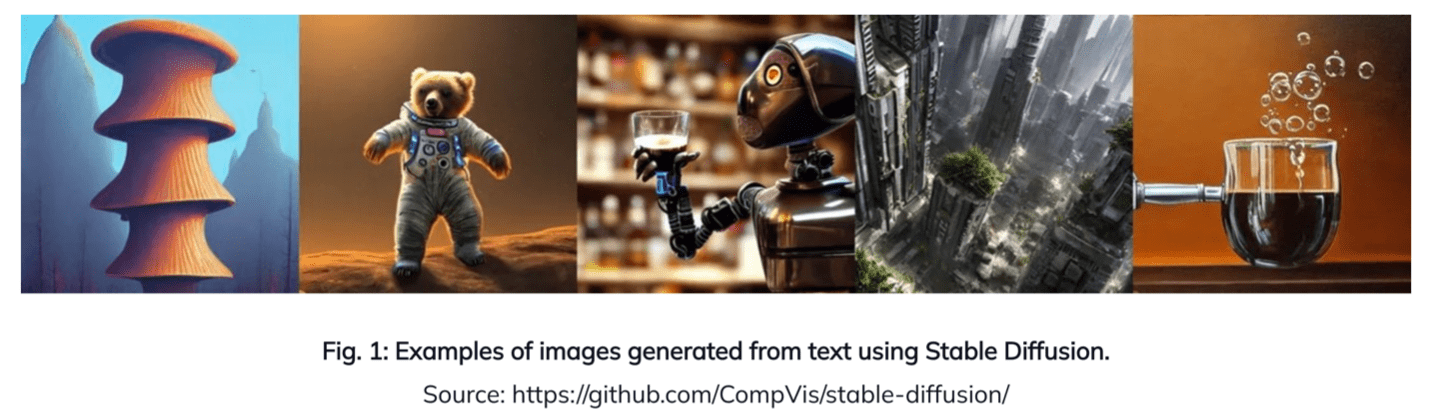

Generative imaging AI is a field whose opportunities are only just starting to be explored. We’re already used to changing our backgrounds for Zoom/Google Meet calls, but generative AI takes this much further. Now we can re-imagine ourselves in different costumes with different features in imaginary settings – a huge market for image-conscious consumers. More practically, we should be able to virtually try on clothing before we buy, or explore options when remodeling a kitchen or bathroom. This technology is already available in the cloud (for example Bing Image Creator) but with all the downsides of cloud-based services, particularly in privacy and cost. Most consumers want to interact with such services through mobile devices; a better solution would be local AI embedded in those platforms. Generative AI through the open-source Stable Diffusion model is a good proxy for testing how well hardware platforms can serve this need, and more generally how well they can serve LLMs based on similar core technologies.

Can on-board memory and performance be balanced at the edge?

First, we need to understand the Stable Diffusion pipeline. This starts with a text encoder to process a prompt (“I want to see a pirate ship floating upside down above a sea of green jello”). That step is followed by a de-noising neural net which handles the diffusion part of the algorithm, through multiple iterations creating information for a final image from trained parameters. I think of this as a kind of inverse to conventional image recognition, matching between prompt requirements and the training to create a synthesized match to the prompt. Finally, a decoder stage renders the image from the data constructed in the previous step. Each of these stages is a neural network in its own right: in Stable Diffusion 1.5 the text encoder is a CLIP transformer, the de-noiser is a U-Net with attention layers, and the decoder is the decoder half of a variational autoencoder (VAE).
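The three-stage flow described above can be sketched in a few lines of Python. This is a toy illustration of the structure only, not Stable Diffusion itself: the function names, the 8-element latent and the "nudge toward the conditioning" update are all hypothetical stand-ins for the real trained networks. What it does show is the shape of the workload: one encoder pass, many de-noiser iterations, one decoder pass.

```python
import random

LATENT_SIZE = 8  # hypothetical toy size; real latents are far larger


def encode_prompt(prompt):
    """Stand-in text encoder: map a prompt to a fixed-length conditioning vector."""
    rng = random.Random(prompt)  # seeded by the prompt so the toy is repeatable
    return [rng.uniform(-1.0, 1.0) for _ in range(LATENT_SIZE)]


def denoise_step(latent, conditioning, strength=0.2):
    """Stand-in de-noiser: move the latent a little toward the conditioning."""
    return [x + strength * (c - x) for x, c in zip(latent, conditioning)]


def decode(latent):
    """Stand-in decoder: map latent values to clamped 8-bit pixel values."""
    return [max(0, min(255, round((x + 1.0) * 127.5))) for x in latent]


def generate(prompt, steps=20, seed=0):
    conditioning = encode_prompt(prompt)          # stage 1: runs once
    rng = random.Random(seed)
    latent = [rng.gauss(0.0, 1.0) for _ in range(LATENT_SIZE)]  # start from noise
    for _ in range(steps):                        # stage 2: runs many times
        latent = denoise_step(latent, conditioning)
    return decode(latent)                         # stage 3: runs once


if __name__ == "__main__":
    print(generate("a pirate ship floating upside down above a sea of green jello"))
```

Because the middle stage is the only one that loops, it is the natural place to look first when asking where the operations and data moves pile up.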

The Expedera blog author, Pat Donnelly (Solutions Architect), gives a detailed breakdown of parameters, operations and data moves required throughout the algorithm which I won’t attempt to replicate here. What stood out for me was the huge number of data moves. Yet he assumes only an 8MB working memory based on requirements he’s seeing with customers rather than optimal throughput. When I asked him about this, he said that operation would clearly depend on a DDR interface to manage the bulk of this activity.

This is a switch from one school of thought I have heard – that model execution must keep everything in local memory to meet performance requirements. But that would require an unreasonably large onboard SRAM. DRAM makes sense for handling the capacity, but another school of thought suggests that no one would want to put that much DRAM in a mobile device: it would be too expensive, and also slow and power hungry.

DRAM or some other kind of off-chip memory makes more sense but what about the cost problem? See the above reference on Apple. Apparently they may be considering flash memory so perhaps this approach isn’t so wild. What about performance? Pat told me that for Stable Diffusion 1.5, assuming an 8K MAC engine with 7 MB internal memory and running at 750 MHz with 12 GBps external memory bandwidth, they can process 9.24 images/second through the de-noiser and 3.29 images/second through the decoder network. That’s very respectable consumer-ready performance. Power is always tricky to pin down since it depends on so many factors, but numbers I have seen suggest this should also be fine for expected consumer use models.
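To put the quoted figures in perspective, here is a back-of-envelope calculation in Python. It rests on several assumptions of mine, not statements from Expedera: that "8K" means 8192 MACs, that "12 GBps" means 12e9 bytes per second, that each quoted rate covers one complete image for that stage, and that the de-noiser and decoder run back to back on the same engine. The text encoder is left out because no figure is given for it.

```python
MACS = 8 * 1024        # "8K MAC engine", assuming 8K = 8192
CLOCK_HZ = 750e6       # 750 MHz
EXT_BW_BYTES = 12e9    # "12 GBps", assuming gigabytes per second
DENOISER_IPS = 9.24    # images/second through the de-noiser (quoted)
DECODER_IPS = 3.29     # images/second through the decoder (quoted)


def seconds_per_image(*rates_ips):
    """Time for one image when the stages run one after another."""
    return sum(1.0 / r for r in rates_ips)


# Peak arithmetic rate if every MAC fires on every cycle.
peak_macs_per_s = MACS * CLOCK_HZ

# Combined de-noiser + decoder time and rate under the assumptions above.
total_s = seconds_per_image(DENOISER_IPS, DECODER_IPS)
combined_ips = 1.0 / total_s

# Upper bound on off-chip traffic per image for each stage: the most the
# external interface could move in the time that stage takes.
max_bytes_denoiser = EXT_BW_BYTES / DENOISER_IPS
max_bytes_decoder = EXT_BW_BYTES / DECODER_IPS

if __name__ == "__main__":
    print(f"peak MAC rate:        {peak_macs_per_s:.3e} MAC/s")
    print(f"time per image:       {total_s:.3f} s")
    print(f"combined throughput:  {combined_ips:.2f} images/s")
    print(f"max traffic, denoise: {max_bytes_denoiser / 1e9:.2f} GB/image")
    print(f"max traffic, decode:  {max_bytes_decoder / 1e9:.2f} GB/image")
```

Under those assumptions the two stages together take a little over 0.4 seconds per image, so roughly 2.4 images per second, and the external interface could move at most about 1.3 GB during the de-noiser stage. These are bounds derived from the quoted numbers, not measurements.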

A very useful insight. It seems we should lay to rest the theory that big transformer AI for the edge cannot depend on off-chip memory. Again, the Expedera blog has the full breakdown.

- Source: https://semiwiki.com/artificial-intelligence/341410-expedera-proposes-stable-diffusion-as-benchmark-for-edge-hardware-for-ai/