This review highlights how designing Autonomous Clinical Chemistry Assay diagnostic products with kinetic read outs helps mitigate challenges with interference that are especially relevant in point of care diagnostics (POCD).

Topics covered include kinetic versus endpoint assays, point of care (POC) testing, the hook effect, Clinical and Laboratory Standards Institute (CLSI) guidance, and real-time laboratory diagnostics.

Recent advances in artificial intelligence, microfluidics and molecular biology have had a remarkable impact on POCD, effectively bringing the clinical laboratory to the site of treatment and enabling near-real-time laboratory diagnostics.

POCD can be defined as the provision of a test at the site of care, where the result will be used to make a decision and take appropriate action. POCD is increasingly expanding to encompass clinical chemistry assays that can take a clinical sample and run autonomously.

Despite these remarkable advances in technology, engineers with in-depth knowledge of clinical laboratory practice, chemistry, and biology are still required to successfully translate even the most basic traditional laboratory-based clinical chemistry assays and diagnostic tests into POC systems that function autonomously and deliver accurate, precise results.

Garbage in, garbage out. Arguably the most important aspect of any assay (whether conducted by an autonomous system or in a traditional clinical chemistry laboratory) is an appropriate specimen that yields an adequate test sample for the assay being performed. The specimen must be stored and handled properly to avoid degradation or contamination of the analytes being tested and, where possible, to exclude interfering agents.

If the sample is of poor quality, so too will be the results. When designing an Autonomous Clinical Chemistry Assay, interference must be considered from the outset and rigorously tested in the verification test plan. An excellent resource for designing these experiments is the Clinical and Laboratory Standards Institute (CLSI) guideline EP7-A2[1].
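To make interference testing concrete, the sketch below shows the basic paired-difference comparison that interference screening is built around: the same base sample is measured with and without a spiked interferent, and the mean difference is compared against an allowable bias. It is a minimal illustration only; the replicate values, units, and the 0.2 mmol/L allowable bias are hypothetical, and replicate counts and statistical criteria should be taken from the guideline itself.

```python
from statistics import mean


def interference_bias(control: list[float], test: list[float]) -> float:
    """Observed interference: mean of the interferent-spiked test pool minus
    the mean of the control pool prepared from the same base sample."""
    return mean(test) - mean(control)


def is_significant(control: list[float], test: list[float], allowable_bias: float) -> bool:
    """Flag the interferent if the absolute bias exceeds the allowable limit.
    The allowable limit is analyte-specific and must be set by the developer."""
    return abs(interference_bias(control, test)) > allowable_bias


# Hypothetical replicate results (mmol/L) for one analyte.
control_pool = [5.02, 4.98, 5.01, 4.99, 5.00]
spiked_pool = [5.31, 5.28, 5.35, 5.30, 5.29]

bias = interference_bias(control_pool, spiked_pool)
print(f"observed bias: {bias:.3f} mmol/L")
print("clinically significant:", is_significant(control_pool, spiked_pool, allowable_bias=0.2))
```

Keeping the control and test pools on the same base sample is what lets the difference be attributed to the interferent rather than to sample-to-sample variation.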

Developing an Autonomous Clinical Chemistry Assay with a Kinetic Readout

CLSI on Managing Interferents: analytical sample interference should be considered from two main perspectives, preanalytical effects and mechanisms of analytical interference. A change in the analyte or its concentration prior to analysis is commonly termed a “preanalytical effect.” Although such effects can compromise the clinical sample, they are not considered analytical interference.

Common examples of preanalytical effects include:

- in vivo (physiological) drug effects (e.g. a hormone response to a circulating drug)

- chemical alteration of the analyte (e.g. photodecomposition)

- physical alteration (e.g. enzyme denaturation)

- evaporation or dilution of the sample

- contamination of the sample

Mechanisms of Analytical Interference

- Chemical artefacts: the interferent may alter or suppress the chemical reaction (e.g. through absorbance, quenching of the indicator reaction, or competition for reagents).

- Detection artefacts: the interferent may share a detectable property with the analyte of interest (e.g. fluorescence).

- Physical artefacts/inhibition: the interferent alters a physical property of the sample (e.g. viscosity, turbidity, pH).

- Enzyme inhibition: the interferent may compete for the same substrate in an enzyme-based measurement or may alter the activity of an enzyme.

- Non-specificity: the interferent may react in the same manner as the analyte or bind non-specifically in the assay system.

- Cross-reactivity: the interferent may be structurally similar to the analyte of the assay (e.g. a similar antigen in an immunoassay).

Careful attention to pre-assay sample collection, handling, and preparation (e.g. sample exclusion through combined sample pad and filter design) is a critically important design consideration in Autonomous Clinical Chemistry Assay development. Effort spent ensuring that the assay receives the best possible sample will pay dividends in downstream verification and validation (V&V).

Fluorescent assays: Fluorescent assays are very useful in Autonomous Clinical Chemistry Assays. However, they are commonly susceptible to interference from compounds that either absorb light in the excitation or emission range of the assay (inner filter effects) or are themselves fluorescent, resulting in false negatives and false positives. At the low end of the concentration range, these artefacts can become very significant.

The sample use cases for most autonomous assays involve harsh, heterogeneous matrices (e.g. saliva, digestive fluids, fecal samples, and other biological fluids), so mitigating the effects of interferents in the environmental sample milieu is a priority.

Fluorescence gives the developer several mechanisms to limit interference effects. These include:

- Using longer-wavelength (red-shifted) fluorophores. This avoids much compound interference because most organic medicinal compounds absorb at shorter wavelengths.

- Using as ‘bright’ a fluorescent label as possible. Bright fluorophores combine efficient light absorption (a high molar extinction coefficient) with efficient emission (a high quantum yield), increasing the total signal of the assay. Assays with higher photon counts tend to be less sensitive to fluorescent artefacts from compounds than assays with lower photon counts.

- Minimizing the test compound concentration can also minimize these artefacts, but the risk of compound artefacts must be balanced against the need to find weaker inhibitors by screening at higher concentrations.
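The photon-count argument for bright labels can be illustrated with simple arithmetic. The sketch assumes, hypothetically, that an interfering compound adds the same fixed number of counts regardless of which label is used, and compares what fraction of the measured signal that artefact represents in a dim versus a bright assay. The count values are illustrative, not measured data.

```python
def artefact_fraction(assay_counts: float, artefact_counts: float) -> float:
    """Fraction of the total measured signal contributed by a fluorescent
    interferent, assuming its counts simply add to the assay's own counts."""
    total = assay_counts + artefact_counts
    if total <= 0:
        raise ValueError("total counts must be positive")
    return artefact_counts / total


# Hypothetical: the same compound contributes 500 counts in both assays.
ARTEFACT_COUNTS = 500.0
dim_assay = artefact_fraction(assay_counts=1_000.0, artefact_counts=ARTEFACT_COUNTS)
bright_assay = artefact_fraction(assay_counts=100_000.0, artefact_counts=ARTEFACT_COUNTS)

print(f"dim label:    artefact is {dim_assay:.1%} of the signal")
print(f"bright label: artefact is {bright_assay:.1%} of the signal")
```

Under these assumptions, an artefact that makes up a third of a dim assay's signal becomes a contribution of well under one percent in the bright assay.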

The Kinetic Advantage: A powerful method to reduce compound interference is a “kinetic readout,” which reads the sample repeatedly over time and looks for the effect of the test compound on the slope of the curve representing the change in signal over time.

Kinetic readouts can be used in assays in which an enzyme-catalyzed, linear increase in product formation over time correlates with an increase in fluorescent signal over time. The inner filter effects of compounds can thus be eliminated because they would not affect the slope of the reaction progress curve, whereas true inhibitors would.
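The slope argument can be checked with a small numerical sketch. It assumes, for illustration only, a linear enzyme-driven signal rise and an interferent whose own fluorescence adds a constant, time-invariant offset to every reading; the reaction rate, offset, and read times are hypothetical. An endpoint reading absorbs the offset, while the least-squares slope of the same readings does not. The sketch models only this additive case, not interferents that scale the signal proportionally.

```python
def ols_slope(times: list[float], signal: list[float]) -> float:
    """Ordinary least-squares slope of signal versus time."""
    n = len(times)
    if n < 2 or n != len(signal):
        raise ValueError("need at least two paired readings")
    t_mean = sum(times) / n
    s_mean = sum(signal) / n
    num = sum((t - t_mean) * (s - s_mean) for t, s in zip(times, signal))
    den = sum((t - t_mean) ** 2 for t in times)
    return num / den


# Hypothetical reaction: product fluorescence rises by 12 units per minute.
times = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]  # minutes
RATE = 12.0
clean = [RATE * t for t in times]

# Hypothetical interferent: its own fluorescence adds a constant 40 units.
OFFSET = 40.0
with_interferent = [s + OFFSET for s in clean]

# Endpoint readout: a single reading at the last time point.
endpoint_clean, endpoint_interf = clean[-1], with_interferent[-1]
# Kinetic readout: slope fitted across all readings.
slope_clean = ols_slope(times, clean)
slope_interf = ols_slope(times, with_interferent)

print(f"endpoint: {endpoint_clean} vs {endpoint_interf}")
print(f"slope:    {slope_clean} vs {slope_interf}")
```

Fitting across all readings, rather than differencing only the first and last, also averages out some of the read-to-read noise.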

Key differences between kinetic and endpoint assays

| Kinetic Assay | Endpoint Assay |
| --- | --- |
| Measures the change in analyte signal over time. | Measures the total analyte detected in a reaction. |
| Requires multiple measurements over time. | Can use a single measurement, or at least fewer measurements than a kinetic assay. |
| Allows discrimination by relative signal-to-noise (S/N) ratio. | Background effects in the reaction matrix must be well understood. |
| The slope of the reaction curve may provide additional information about analyte concentration or assay reaction conditions. | Binary endpoint data simplifies downstream analytics. |
| Data inputs must be processed over time to produce a result. | A single or binary data input can produce a result. |
| May accommodate variation in reader calibration through a baseline S/N ratio calibration step. | Tolerances on reader subsystems must be very well controlled. |
| May allow resolution of a possible hook effect. | Possible hook effects may not be resolved. |
| May accommodate variation in assay chemistries through a baseline S/N ratio calibration step. | Tolerances on assay chemistries must be very well controlled. |

A kinetic assay (i.e. one that looks at a signal change over time) is a good option when the target product profile (TPP)[2] of the autonomous assay allows enough time to accommodate the kinetic read.

It is important to note the potential drawback of the time a kinetic assay requires. For example, if the reaction takes several hours to develop, a kinetic assay would not be an appropriate choice for a TPP with a ~5 minute POC encounter.

Kinetic based assays are particularly useful in autonomous assays for several reasons:

- Sensor Manufacturing Costs: If the device is a disposable with a very low Cost of Goods Sold (CoGs) target, an expensive calibrated spectrophotometer will not be an option. Because the kinetic approach looks at a change over time and the amplification of a signal above noise, less complicated instrument subassemblies can be considered. This allows lower-sensitivity components to be selected and can potentially widen the tolerances on the instrument.

- Reagent Manufacturing Costs: In autonomous assays, the chemistries are typically disposable by design. Because a kinetic assay looks at S/N ratios over a time sequence, individual sample or lot variations can be normalized by a pre-assay calibration test. This helps when there are uncertainties in the manufacturing process, particularly in early verification work before the final design freeze, or when volume-manufacturing CoGs targets necessitate looser chemistry tolerances.

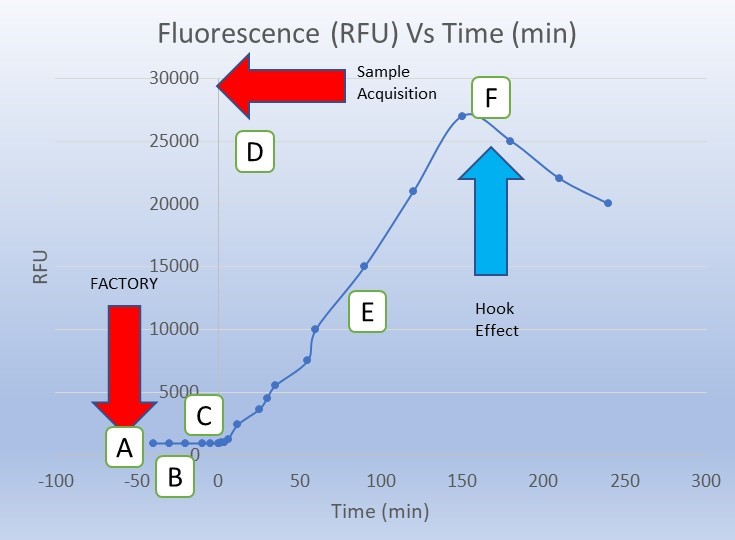

- Shelf-Life Considerations: The pre-assay calibration (in factory or pre-test) can help the developer determine or even extend assay subassembly shelf life. Since the assay presents data as an S/N ratio above the initial or baseline reading, time-induced variations in substrate or enzyme performance can be normalized by a kinetic readout. NOTE: this should not be considered a replacement for a proper shelf-life validation campaign, but the data and latitude this feature gives the early assay developer are an important asset of the kinetic assay approach.

- Managing the hook effect: The hook effect is a well-characterized phenomenon (particularly in immunoassays) in which falsely low results are reported in the presence of an excess of the analyte of interest. The excess analyte (or reaction byproduct) impairs the reaction of interest, producing a lower signal that corresponds to a falsely low analyte level. Endpoint assays are particularly susceptible to the hook effect. A properly designed kinetic assay can detect a peak and subsequent decline in signal and thereby rule out this common source of false negatives in autonomous POC assay systems. The hook effect, and how to account for it, is described in greater detail by the National Committee for Clinical Laboratory Standards (NCCLS, now CLSI) in guideline EP6-A[3].

- Determining Concentration: Although concentration is often best determined with endpoint assay designs, analyte concentrations can also be determined through careful design of assay stoichiometry and analysis of the slope of the reaction progress curve (e.g. a steeper slope indicates a faster reaction, from which the concentration of the analyte of interest can be inferred).

- In-Factory Calibration: For disposable reader/assay systems, it is best practice to implement a factory calibration step in which the instrument/assay pair is calibrated prior to release. Understanding the baseline signal-to-noise ratio can be an important built-in Quality Control (QC) step, and an important advantage both in initial development and in post-release, ongoing QC regimes.

- Assay “Squelch Control” (or built-in interferents): Where a known analytical interferent cannot be adequately prescreened or removed from the sample matrix, a thoughtful assay developer can exploit the S/N advantage of the kinetic assay system. In some cases, the assay matrix can be ‘spiked’ with a known, expected concentration of the analytical interferent (e.g. lipopolysaccharide (LPS) in an environmental sample), thereby calibrating the baseline noise level of the system. When the analyte of interest is present, the rise in signal above the interferent noise is detected, eliminating a potential false negative.
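Several items in the list above (sensor and reagent cost, shelf life, in-factory calibration, squelch control, and the hook effect) reduce to simple operations on the kinetic trace. The sketch below shows one possible way to express them, under stated assumptions: reader and chemistry variation are modelled as a purely multiplicative gain captured by a pre-assay baseline reading; the squelch check compares readings against the baseline set by the deliberately spiked interferent; and a possible hook effect is flagged when the trace peaks before the final reading and then drops by more than a chosen fraction. All function names, thresholds, and trace values are hypothetical and would need to be set and verified for a real assay.

```python
def normalize_to_baseline(readings: list[float], baseline: float) -> list[float]:
    """Express each reading as a ratio to a pre-assay baseline reading taken
    on the same reader/cartridge pair (hypothetical calibration step)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return [r / baseline for r in readings]


def exceeds_squelch(readings: list[float], squelch_baseline: float, margin: float) -> bool:
    """True if any reading rises more than `margin` (fractional) above the
    baseline produced by the deliberately spiked interferent."""
    return max(normalize_to_baseline(readings, squelch_baseline)) > 1.0 + margin


def shows_hook(readings: list[float], min_drop: float = 0.1) -> bool:
    """True if the trace peaks before the final reading and then falls by more
    than `min_drop` (fractional) from that peak; a rising trace returns False."""
    if not readings:
        raise ValueError("empty trace")
    peak_idx = max(range(len(readings)), key=readings.__getitem__)
    if peak_idx == len(readings) - 1:
        return False
    return readings[-1] < readings[peak_idx] * (1.0 - min_drop)


# Hypothetical: two readers whose detector gains differ by 30 %.
true_trace = [1.0, 1.2, 1.5, 1.9, 2.4]
REFERENCE = 1.0  # true value seen by the pre-assay calibration reading
GAIN_A, GAIN_B = 1.0, 1.3
reader_a = [GAIN_A * x for x in true_trace]
reader_b = [GAIN_B * x for x in true_trace]
norm_a = normalize_to_baseline(reader_a, baseline=GAIN_A * REFERENCE)
norm_b = normalize_to_baseline(reader_b, baseline=GAIN_B * REFERENCE)

# Hypothetical squelch traces: interferent only versus interferent plus analyte.
noise_only = [20.0, 21.0, 20.5, 21.5, 20.8]
with_analyte = [20.0, 23.0, 27.0, 32.0, 38.0]

# Hypothetical kinetic traces: normal rise versus peak-then-decline.
normal_trace = [10.0, 14.0, 19.0, 25.0, 32.0, 40.0]
hook_trace = [10.0, 30.0, 45.0, 41.0, 33.0, 26.0]

print("normalized traces match:", all(abs(a - b) < 1e-9 for a, b in zip(norm_a, norm_b)))
print("squelch (noise only):", exceeds_squelch(noise_only, squelch_baseline=20.0, margin=0.25))
print("squelch (with analyte):", exceeds_squelch(with_analyte, squelch_baseline=20.0, margin=0.25))
print("hook (normal trace):", shows_hook(normal_trace))
print("hook (hook trace):", shows_hook(hook_trace))
```

The `min_drop` tolerance keeps small read-to-read noise near a plateau from being mistaken for a hook; in practice its value would come from verification data rather than the placeholder used here.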

[1] Clinical and Laboratory Standards Institute. Interference Testing in Clinical Chemistry; Approved Guideline—Second Edition. CLSI document EP7-A2 [ISBN 1-56238-584-4]. Clinical and Laboratory Standards Institute, 940 West Valley Road, Suite 1400, Wayne, Pennsylvania 19087-1898 USA, 2005.

[2] https://starfishmedical.com/blog/efficient-diagnostic-device-design/

[3] NCCLS. Evaluation of the Linearity of Quantitative Measurement Procedures: A Statistical Approach; Approved Guideline. NCCLS document EP6-A [ISBN 1-56238-498-8]. NCCLS, 940 West Valley Road, Suite 1400, Wayne, Pennsylvania 19087-1898 USA, 2003.

Image: StarFish Medical

Nick Allan is the Bio Services Manager at StarFish Medical. He has provided innovative solutions to client issues ranging from proof-of-concept studies for rapid point of care detection assays to full-scale regulatory submission studies, and has designed and facilitated more than 500 unique research protocols.

- Source: https://starfishmedical.com/blog/autonomous-clinical-chemistry-assay-design/