Image by Author

The Technology Innovation Institute (TII) in Abu Dhabi released its next series of Falcon language models on May 14. The new models match the TII mission as technology enablers and are available as open-source models on HuggingFace. They released two variants of the Falcon 2 models: Falcon-2-11B and Falcon-2-11B-VLM. The new VLM model promises exceptional multi-model compatibilities that perform on par with other open-source and closed-source models.

Model Features and Performance

The recent Falcon-2 language model has 11 billion parameters and is trained on 5.5 trillion tokens from the falcon-refinedweb dataset. The newer, more efficient models compete well against the Meta’s recent Llama3 model with 8 billion parameters. The results are summarized in the below table shared by TII:

Image by TII

In addition, the Falcon-2 model fares well against Google’s Gemma with 7 billion parameters. Gemma-7B outperforms the Falcon-2 average performance by only 0.01. In addition, the model is multi-lingual, trained on commonly used languages inclduing English, French, Spanish and German amongst others.

However, the groundbreaking achievement is the release of Falcon-2-11B Vision Language Model that adds image understanding and multi-modularity to the same language model. The image-to-text conversation capability with comparable capabilities with recent models like Llama3 and Gemma is a significant advancement.

How to Use the Models for Inference

Let’s get to the coding part so we can run the model on our local system and generate responses. First, like any other project, let us set up a fresh environment to avoid dependency conflicts. Given the model is released recently, we will the need the latest versions of all libraries to avoid missing support and pipelines.

Create a new Python virtual environment and activate it using the below commands:

python -m venv venv

source venv/bin/activateNow we have a clean environment, we can install our required libraries and dependencies using Python package manager. For this project, we will use images available on the internet and load them in Python. The requests and Pillow library are suitable for this purpose. Moreover, for loading the model, we will you use the transformers library that has internal support for HuggingFace model loading and inference. We will use bitsandbytes, PyTorch and accelerate as a model loading utility and quantization.

To ease up the set up process, we can create a simple requirements text file as follows:

# requirements.txt

accelerate # For distributed loading

bitsandbytes # For Quantization

torch # Used by HuggingFace

transformers # To load pipelines and models

Pillow # Basic Loading and Image Processing

requests # Downloading image from URL

We can now install all the dependencies in a single line using:

pip install -r requirements.txtWe can now start working on our code to use the model for inference. Let’s start by loading the model in our local system. The model is available on HuggingFace and the total size exceeds 20GB of memory. We can not load the model in consumer grade GPUs which usually have around 8-16GB RAM. Hence, we will need to quantize the model i.e. we will load the model in 4-bit floating point numbers instead of the usual 32-bit precision to decrease the memory requirements.

The bitsandbytes library provides an easy interface for quantization of Large Language Models in HuggingFace. We can initalize a quantization configuration that can be passed to the model. HuggingFace internally handles all required operations and sets the correct precision and adjustments for us. The config can be set as follows:

from transformers import BitsAndBytesConfig

quantization_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type="nf4",

# Original model support BFloat16

bnb_4bit_compute_dtype=torch.bfloat16,

)

This allows the model to fit in under 16GB GPU RAM, making it easier to load the model without offloading and distribution. We can now load the Falcon-2B-VLM. Being a multi-modal model, we will be handling images alongside textual prompts. The LLava model and pipelines are designed for this purpose as they allow CLIP-based image embeddings to be projected to language model inputs. The transformers library has built-in Llava model processors and pipelines. We can then load the model as below:

from transformers import LlavaNextForConditionalGeneration, LlavaNextProcessor

processor = LlavaNextProcessor.from_pretrained(

"tiiuae/falcon-11B-vlm",

tokenizer_class='PreTrainedTokenizerFast'

)

model = LlavaNextForConditionalGeneration.from_pretrained(

"tiiuae/falcon-11B-vlm",

quantization_config=quantization_config,

device_map="auto"

)

We pass the model url from the HuggingFace model card to the processor and generator. We also pass the bitsandbytes quantization config to the generative model, so it will be automatically loaded in 4-bit precision.

We can now start using the model to generate responses! To explore the multi-modal nature of Falcon-11B, we will need to load an image in Python. For a test sample, let us load this standard image available here. To load an image from a web URL, we can use the Pillow and requests library as below:

from Pillow import Image

import requests

url = "https://static.theprint.in/wp-content/uploads/2020/07/football.jpg"

img = Image.open(requests.get(url, stream=True).raw)The requests library downloads the image from the URL, and the Pillow library can read the image from bytes to a standard image format. Now that can have our test image, we can now generate a sample response from our model.

Let’s set up a sample prompt template that the model is sensitive to.

instruction = "Write a long paragraph about this picture."

prompt = f"""User:<image>n{instruction} Falcon:"""The prompt template itself is self-explanatory and we need to follow it for best responses from the VLM. We pass the prompt and the image to the Llava image processor. It internally uses CLIP to create a combined embedding of the image and the prompt.

inputs = processor(

prompt,

images=img,

return_tensors="pt",

padding=True

).to('cuda:0')

The returned tensor embedding acts as an input for the generative model. We pass the embeddings and the transformer-based Falcon-11B model generates a textual response based on the image and instruction provided originally.

We can generate the response using the below code:

output = model.generate(**inputs, max_new_tokens=256)

generated_captions = processor.decode(output[0], skip_special_tokens=True).strip()There we have it! The generated_captions variable is a string that contains the generated response from the model.

Results

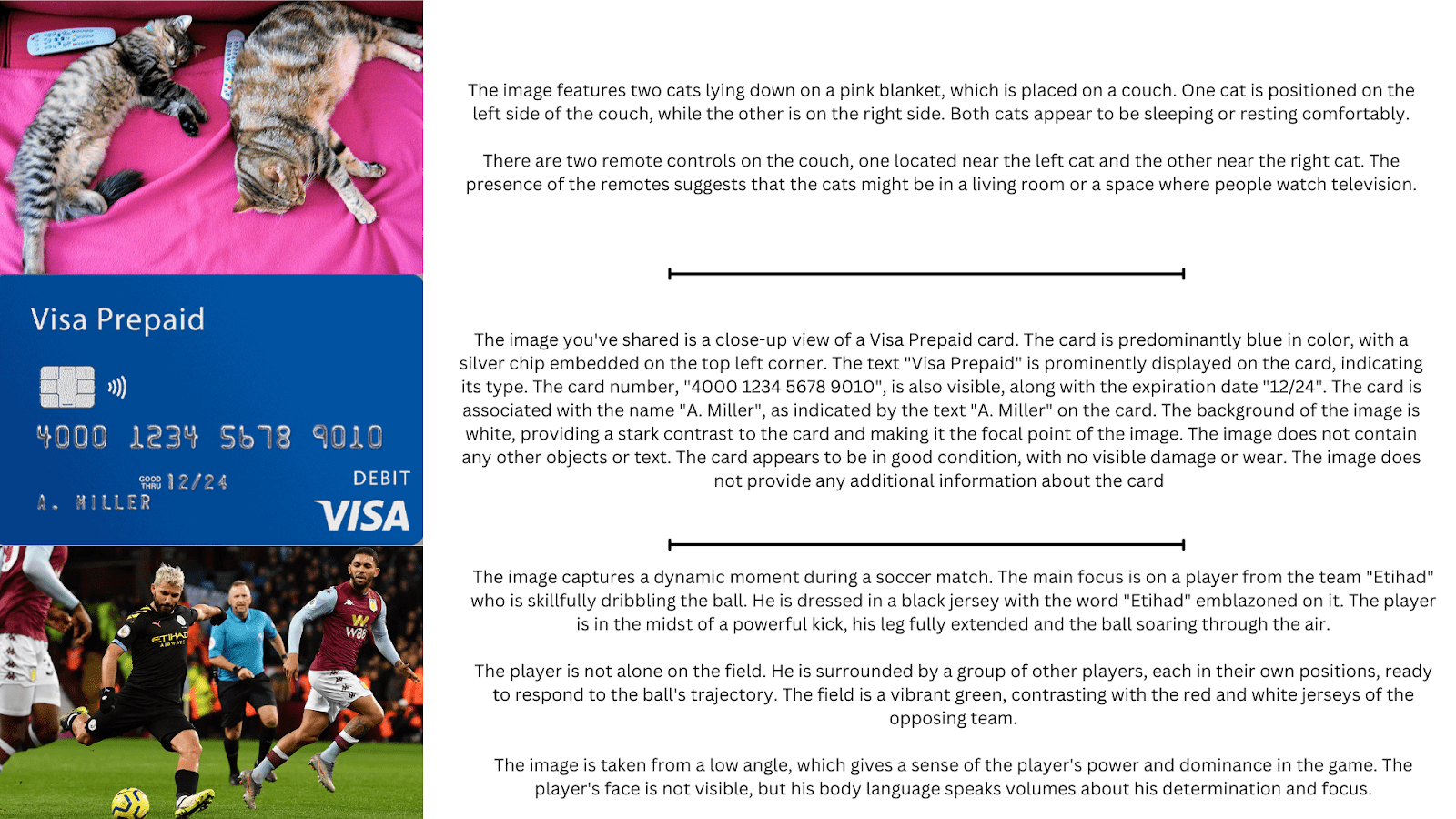

We tested various images using the above code and the responses for some of them are summarized in this image below. We see that the Falcon-2 model has a strong understanding of the image and generates legible answers to show its comprehension of the scenarios in the images. It can read text and also highlights the global information as a whole. To summarize, the model has excellent capabilities for visual tasks, and can be used for image-based conversations.

Image by Author| Inference images from the Internet. Sources: Cats Image, Card Image, Football Image

License and Compliance

In addition to being open-source, the models are released with the Apache2.0 License making them available for Open Access. This allows the modification and distribution of the model for personal and commercial uses. This means that you can now use Falcon-2 models to supercharge your LLM-based applications and open-source models to provide multi-modal capabilities for your users.

Wrapping Up

Overall, the new Falcon-2 models show promising results. But that is not all! TII is already working on the next iteration to further push performance. They look to integrate the Mixture-of-Experts (MoE) and other machine learning capabilities into their models to improve accuracy and intelligence. If Falcon-2 seems like an improvement, be ready for their next announcement.

Kanwal Mehreen Kanwal is a machine learning engineer and a technical writer with a profound passion for data science and the intersection of AI with medicine. She co-authored the ebook “Maximizing Productivity with ChatGPT”. As a Google Generation Scholar 2022 for APAC, she champions diversity and academic excellence. She’s also recognized as a Teradata Diversity in Tech Scholar, Mitacs Globalink Research Scholar, and Harvard WeCode Scholar. Kanwal is an ardent advocate for change, having founded FEMCodes to empower women in STEM fields.

- SEO Powered Content & PR Distribution. Get Amplified Today.

- PlatoData.Network Vertical Generative Ai. Empower Yourself. Access Here.

- PlatoAiStream. Web3 Intelligence. Knowledge Amplified. Access Here.

- PlatoESG. Carbon, CleanTech, Energy, Environment, Solar, Waste Management. Access Here.

- PlatoHealth. Biotech and Clinical Trials Intelligence. Access Here.

- Source: https://www.kdnuggets.com/introducing-falcon2-next-gen-language-model-by-tii?utm_source=rss&utm_medium=rss&utm_campaign=introducing-falcon2-next-gen-language-model-by-tii