As multimodal large language models (MLLMs) become more widespread, one of the obstacles in the path of AI is becoming clearer: AI hallucination. Woodpecker is the solution that researchers from the University of Science and Technology of China (USTC) and Tencent YouTu Lab came up with.

Multimodal Large Language Models (MLLMs) are a type of AI model that can learn and process data from multiple modalities, such as text, images, and videos. This allows them to perform a wide range of tasks that are not possible for traditional language models, such as image captioning, video summarization, and question-answering with multimodal input.

Thanks to their ability to produce a wide variety of outputs, MLLMs are being used in a growing number of “smart” devices. Many technological marvels, from smart cars to IoT devices such as Alexa, now run on such models. However, this does not mean that these models can avoid annoying and repetitive faults.

AI hallucination, like a squeaky door, is a problem of MLLMs that we tend to ignore but are still annoyed by. Think about it: how many times has a chatbot like ChatGPT failed to give a correct answer to your prompt, or Midjourney failed to generate the image you wanted? Why is prompt engineering a reputable job right now? Why are AI technologies not ready for real-world applications? It is all connected to the imperfections in MLLM technologies, and Woodpecker is a promising potential remedy for a malfunctioning AI.

Woodpecker heals AI models like a tree

There has been an increasing focus on the phenomenon of hallucinations in MLLMs, and previous research has explored evaluation, detection, and mitigation strategies. Woodpecker takes a different route: it aims to refine the responses of MLLMs by modifying the hallucinated parts, using a training-free framework. The use of knowledge to alleviate factual hallucinations in LLMs has also been explored, but transferring this idea to the vision-language field is challenging.

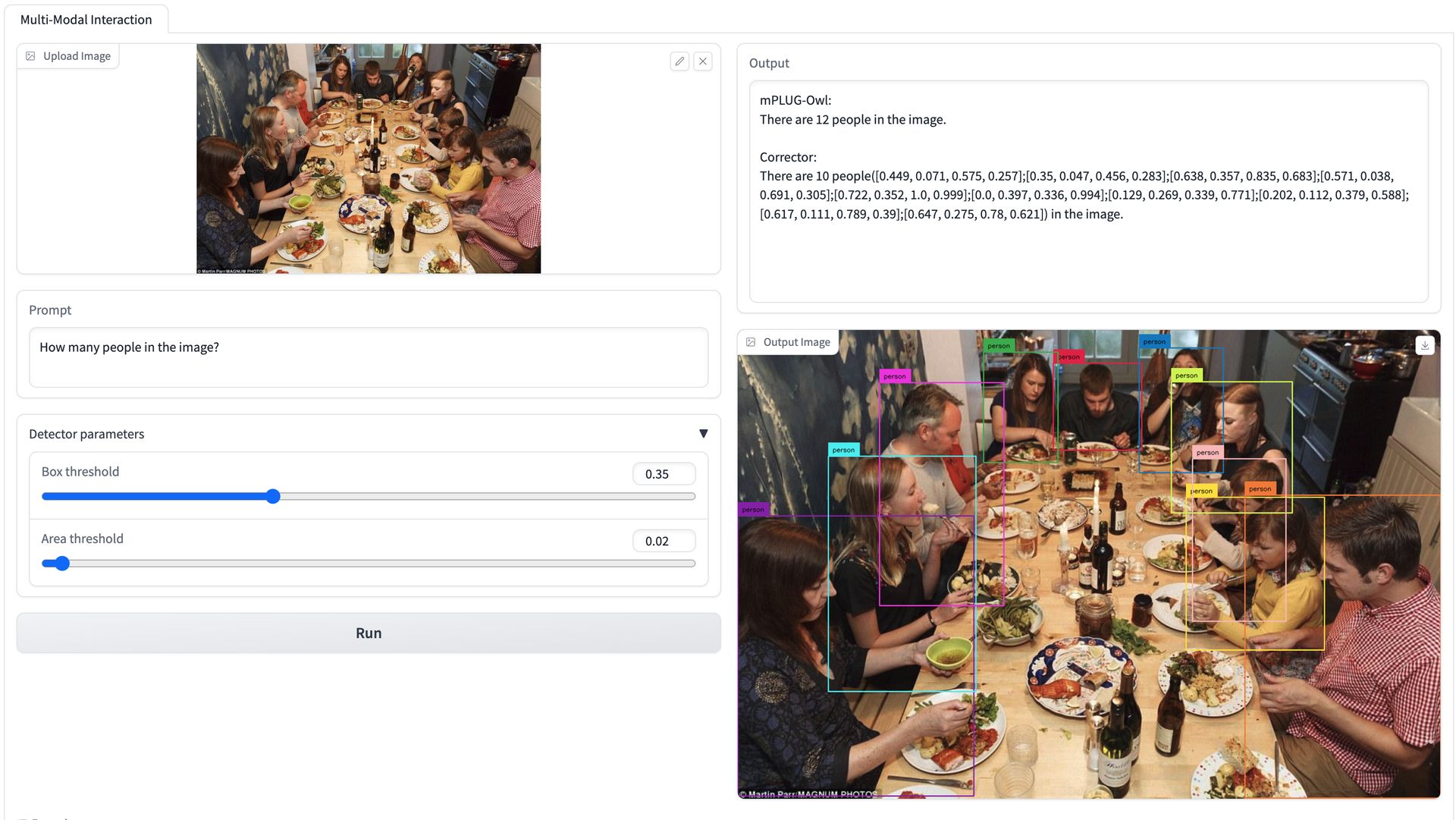

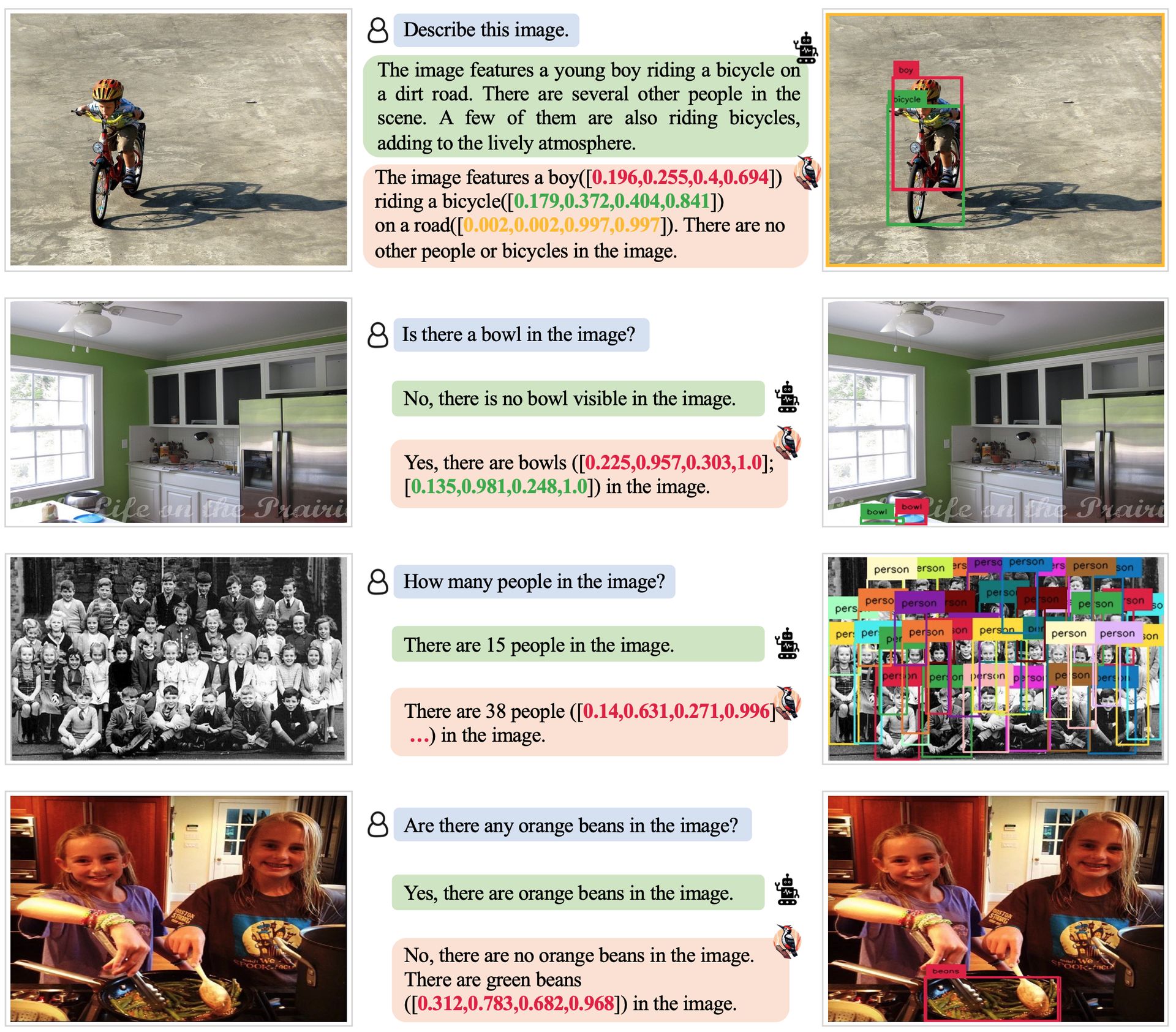

The proposed framework of Woodpecker leverages LLMs to aid in visual reasoning and correct visual hallucinations in MLLM responses. The framework consists of five subtasks: key concept extraction, question formulation, visual knowledge validation, visual claim generation, and hallucination correction.

Woodpecker works by following these five steps:

- Key concept extraction: Woodpecker extracts key concepts from the MLLM’s response. These concepts are used to diagnose hallucinations and generate questions to address them

- Question formulation: Woodpecker formulates questions to address object and attribute-level hallucinations. These questions are designed to be answered using object detection and a visual question answering (VQA) model

- Visual knowledge validation: Woodpecker uses object detection and a VQA model to answer the questions formulated in the previous step. This helps to validate the visual knowledge and identify potential hallucinations

- Visual claim generation: Woodpecker generates visual claims by combining the formulated questions with the answers obtained during validation. These visual claims are used to correct hallucinations in the MLLM’s response

- Hallucination correction: Woodpecker uses an LLM to correct hallucinations in the MLLM’s response based on the visual claims. This involves modifying the hallucinated parts of the response to make them consistent with the visual knowledge

The ultimate goal of all these steps is to build a structured visual knowledge base specific to the image and response in order to correct hallucinations. To give an example of how Woodpecker works:

MLLM response: There is a red car in the image.

Woodpecker:

- Woodpecker extracts the key concepts “car” and “red” from the response

- Woodpecker formulates the following question: “Is there a red car in the image?”

- Woodpecker uses object detection to identify all cars in the image. It then uses a VQA model to ask whether each car is red. If no car in the image is red, then Woodpecker concludes that the MLLM response contains a hallucination

- Woodpecker generates the following visual claim: “There is no red car in the image”

- Woodpecker uses an LLM to correct the MLLM response by replacing the phrase “a red car” with “no red car”

Corrected MLLM response: There is no red car in the image.
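The red car walkthrough can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers' implementation: the object detector and VQA model are replaced by hypothetical toy functions (`toy_detect`, `toy_vqa`) over a hand-written scene, the key concepts are supplied by hand rather than extracted by an LLM, and the final correction is a plain string replacement standing in for the LLM rewrite.

```python
from typing import Callable, Dict, List

Instance = Dict[str, str]                       # e.g. {"label": "car", "color": "blue"}
Detector = Callable[[str], List[Instance]]      # object name -> detected instances
AttributeVQA = Callable[[Instance, str], bool]  # (instance, attribute) -> yes/no


def formulate_question(obj: str, attr: str) -> str:
    """Step 2: turn the key concepts into a question (an LLM does this in Woodpecker)."""
    return f"Is there a {attr} {obj} in the image?"


def validate(obj: str, attr: str, detect: Detector, vqa: AttributeVQA) -> bool:
    """Step 3: detect every `obj`, then ask the VQA stand-in about each one."""
    return any(vqa(instance, attr) for instance in detect(obj))


def make_claim(obj: str, attr: str, holds: bool) -> str:
    """Step 4: combine the question and its answer into a visual claim."""
    article = "a" if holds else "no"
    return f"There is {article} {attr} {obj} in the image."


def correct(response: str, obj: str, attr: str, holds: bool) -> str:
    """Step 5: rewrite the hallucinated phrase (string replacement, not an LLM)."""
    if holds:
        return response
    return response.replace(f"a {attr} {obj}", f"no {attr} {obj}")


# Hypothetical scene used only for this example: two cars, neither of them red.
SCENE: List[Instance] = [
    {"label": "car", "color": "blue"},
    {"label": "car", "color": "white"},
]


def toy_detect(obj: str) -> List[Instance]:
    """Toy detector: return every scene instance with a matching label."""
    return [inst for inst in SCENE if inst["label"] == obj]


def toy_vqa(instance: Instance, attr: str) -> bool:
    """Toy VQA: answer a color question by looking it up in the scene."""
    return instance["color"] == attr


response = "There is a red car in the image."
obj, attr = "car", "red"                        # Step 1, done by hand here
question = formulate_question(obj, attr)
holds = validate(obj, attr, toy_detect, toy_vqa)
claim = make_claim(obj, attr, holds)
corrected = correct(response, obj, attr, holds)

print(question)
print(claim)
print(corrected)
```

Passing the detector and VQA model in as functions keeps the sketch independent of any particular model; real components could be swapped in without touching the five steps.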

The study evaluates the performance of different models on the POPE task and presents the results in different settings, including random, popular, and adversarial. The proposed correction method, Woodpecker, shows consistent gains in most metrics for the baselines. In the more challenging settings, the MLLMs show performance degradation, while Woodpecker remains stable and improves the accuracy of the baselines. The experiments on MME cover both object-level and attribute-level hallucination evaluation.
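POPE asks yes/no questions about whether an object is present in an image, so a model's answers can be scored like the output of a binary classifier. The sketch below assumes the standard accuracy, precision, recall, and F1 definitions with "yes" as the positive class. The article does not list the exact metrics, and the function name `yes_no_metrics` and the sample answers are made up for illustration.

```python
from typing import Dict, List


def yes_no_metrics(predictions: List[str], labels: List[str]) -> Dict[str, float]:
    """Score yes/no answers against ground truth, treating "yes" as positive."""
    if not predictions or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and equal in length")

    pairs = list(zip(predictions, labels))
    tp = sum(p == "yes" and y == "yes" for p, y in pairs)
    fp = sum(p == "yes" and y == "no" for p, y in pairs)
    fn = sum(p == "no" and y == "yes" for p, y in pairs)
    tn = sum(p == "no" and y == "no" for p, y in pairs)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up answers: the model says "yes" once for an object that is not there.
scores = yes_no_metrics(
    predictions=["yes", "yes", "no", "yes"],
    labels=["yes", "no", "no", "yes"],
)
print(scores)
```

A model that hallucinates objects tends to answer "yes" too often, which shows up as lower precision even when recall stays high.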

The proposed Woodpecker correction method improves the performance of MLLMs in object-level and attribute-level evaluation. It particularly excels in answering harder count queries and reduces attribute-level hallucinations. The introduction of the open-set detector improves results on existence and count questions, while the VQA model improves results on color questions. The full framework, combining both modules, achieves the best results. The correction method effectively addresses hallucinations in MLLM responses, resulting in more accurate and detailed answers.

Because there is little prior work on measuring correction behavior, the researchers break down the results after correction into three categories: accuracy, omission, and mis-correction. The results of the “default” model on MME show an accuracy of 79.2%, with low omission and mis-correction rates. This correction-based framework is proposed as a training-free method to mitigate hallucinations in MLLMs and can be easily integrated into different models. The efficacy of the framework is evaluated through experiments on three benchmarks, and the hope is that this work will inspire new approaches to addressing hallucinations in MLLMs.
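To make the three categories concrete, here is a small Python sketch of how such a breakdown could be computed. The definitions are assumptions rather than the study's exact wording: a response counts toward accuracy if it is correct after correction, toward omission if it was wrong before and is still wrong afterwards, and toward mis-correction if it was correct before but wrong afterwards. The sample data is invented.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def classify(correct_before: bool, correct_after: bool) -> str:
    """Assign one response to a category (assumed definitions, see above)."""
    if correct_after:
        return "accuracy"
    return "mis-correction" if correct_before else "omission"


def breakdown(results: Iterable[Tuple[bool, bool]]) -> Dict[str, float]:
    """Return the share of responses that falls into each category."""
    counts = Counter(classify(before, after) for before, after in results)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no results to summarize")
    return {name: counts[name] / total
            for name in ("accuracy", "omission", "mis-correction")}


# Invented sample: (correct before correction, correct after correction).
sample = [(True, True), (False, True), (False, False), (True, False)]
rates = breakdown(sample)
print(rates)
```

Because every response lands in exactly one category, the three rates always sum to 1.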

The question formulation step follows a set of guidelines. The entities involved in a sentence are specified so that the factuality of the sentence can be verified. Questions should be asked about basic attributes of the entities, such as colors and actions. Complex reasoning and semantically similar questions should be avoided. The position of the entities can be queried using “where” type questions. Uncertain or conjectural parts of the sentence should not be questioned. If there are no questions, then “None” should be output.

Why all the hassle?

MLLMs have the potential to perform a wide range of tasks that are not possible for traditional language models, such as image captioning, video summarization, and question answering with multimodal input.

However, MLLMs are not perfect and can sometimes produce inaccurate results. Some of the most common inaccuracies of MLLMs include:

- Factual errors: MLLMs can sometimes generate responses that contain factual errors. This can happen when the MLLM is not trained on enough data or when the data it is trained on is not accurate

- Hallucinations: MLLMs can also generate responses that contain hallucinations. This is when the MLLM generates information that is not present in the input data. Hallucinations can be caused by a variety of factors, such as the complexity of the task, the amount of data the MLLM is trained on, and the quality of the data

- Bias: MLLMs can also be biased, reflecting the biases present in the data they are trained on. This can lead to MLLMs generating responses that are unfair or discriminatory

Bias and factual errors are mostly caused by imperfect training data, while hallucinations are caused by errors in the working phase of these models. These errors, which appear to be algorithmic in origin, are the biggest obstacle to integrating AI technologies into real life. It is a fact that AI will open the door to the autonomous life we dream of, but the safety and accuracy of its use are still in serious doubt.

For example, in October 2023, a Tesla driver using Autopilot was involved in a fatal accident in Florida. The driver was using Autopilot on a highway when the car collided with a tractor-trailer that was crossing the highway. The driver of the Tesla was killed in the accident.

The National Highway Traffic Safety Administration (NHTSA) is investigating the accident to determine the cause. However, it is believed that the Tesla Autopilot system may have failed to detect the tractor-trailer. This failure could have been due to a number of factors, such as the lighting conditions or the position of the tractor-trailer. Yet to fully ensure the safety of such systems, we must be sure about their accuracy.

We are unlikely to get the right instructions from an MLLM that misinterprets what is going on around it, and we are not yet ready to use similar technologies in areas such as medicine, transport, and planning. The solution promised by Woodpecker may be a turning point for AI and for autonomous life. As technology develops, it continues to touch our lives, and we are trying to grasp all this as best we can. Let’s see where the future will take us all.

Featured image credit: Emre Çıtak/DALL-E 3.

- Source: https://dataconomy.com/2023/10/27/how-woodpecker-cure-ai-hallucination/