Hundreds of cybersecurity professionals, analysts and decision-makers came together earlier this month for ESET World 2024, a conference that showcased the company’s vision and technological advancements and featured a number of insightful talks about the latest trends in cybersecurity and beyond.

The topics ran the gamut, but it’s safe to say that the subjects that resonated the most included ESET’s cutting-edge threat research and perspectives on artificial intelligence (AI). Let’s now briefly look at some sessions that covered the topic that is on everyone’s lips these days – AI.

Back to basics

First off, ESET Chief Technology Officer (CTO) Juraj Malcho gave the lay of the land, offering his take on the key challenges and opportunities afforded by AI. He did not stop there, however, and went on to seek answers to some of the fundamental questions surrounding AI, including “Is it as revolutionary as it’s claimed to be?”

The current iterations of AI technology are mostly in the form of large language models (LLMs) and various digital assistants that make the tech feel very real. However, they are still rather limited, and we must thoroughly define how we want to use the tech in order to empower our own processes, including its uses in cybersecurity.

For example, AI can simplify cyber defense by deconstructing complex attacks and reducing resource demands. That way, it enhances the security capabilities of short-staffed business IT operations.

Demystifying AI

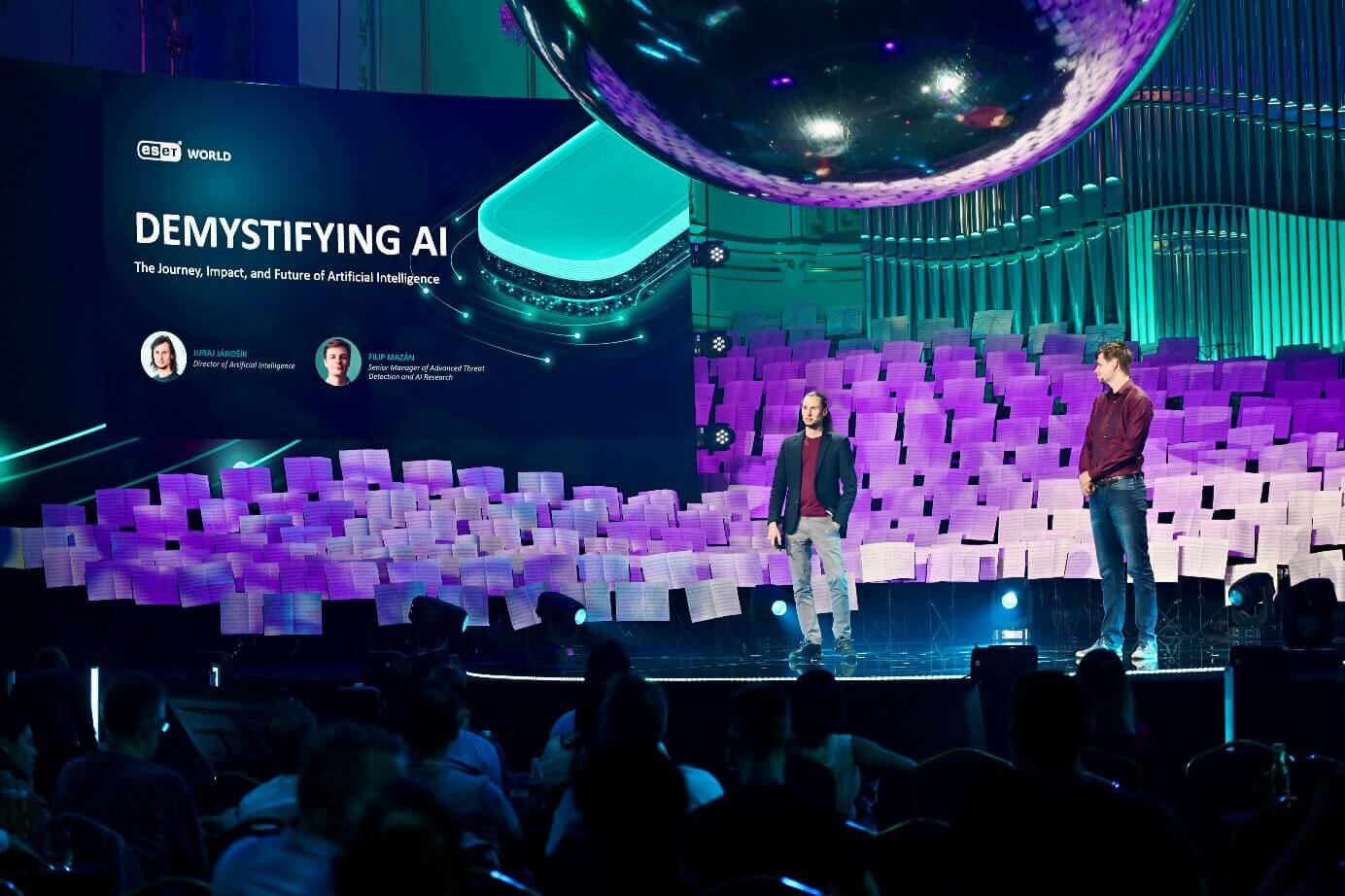

Juraj Jánošík, Director of Artificial Intelligence at ESET, and Filip Mazán, Sr. Manager of Advanced Threat Detection and AI at ESET, went on to present a comprehensive view into the world of AI and machine learning, exploring their roots and distinguishing features.

Mr. Mazán demonstrated how AI and machine learning are fundamentally inspired by human biology: artificial neural networks mimic some aspects of how biological neurons function and come with varying numbers of parameters. The more complex the network, the greater its predictive power, leading to advancements seen in digital assistants like Alexa and LLMs like ChatGPT or Claude.
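To make the idea of an artificial neuron and its parameters more concrete, here is a minimal sketch in Python. It is an illustration only and was not shown in the talk: the function names, the sigmoid activation and all weight values are assumptions chosen for readability.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: a weighted sum of inputs squashed by a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def tiny_network(inputs, hidden_layer, output_unit):
    """Two hidden neurons feeding a single output neuron."""
    hidden = [neuron(inputs, w, b) for w, b in hidden_layer]
    w_out, b_out = output_unit
    return neuron(hidden, w_out, b_out)

def count_parameters(hidden_layer, output_unit):
    """Every weight and every bias counts as one parameter."""
    hidden = sum(len(w) + 1 for w, _ in hidden_layer)
    return hidden + len(output_unit[0]) + 1

# Hypothetical, hand-picked values; a real model learns these from data.
HIDDEN = [([0.5, -0.6], 0.1), ([-0.3, 0.8], 0.0)]
OUTPUT = ([1.2, -0.7], 0.05)

score = tiny_network([1.0, 2.0], HIDDEN, OUTPUT)
total = count_parameters(HIDDEN, OUTPUT)
```

This toy network has only nine parameters, while modern LLMs are generally reported to have billions of them.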

Later, Mr. Mazán highlighted that as AI models become more complex, their utility can diminish. As we approach the recreation of the human brain, the increasing number of parameters necessitates thorough refinement. This process requires human oversight to constantly monitor and fine-tune the model’s operations.

Indeed, leaner models are sometimes better. Mr. Mazán described how ESET’s strict use of internal AI capabilities results in faster and more accurate threat detection, meeting the need for rapid and precise responses to all manner of threats.

He also echoed Mr. Malcho and highlighted some of the limitations that beset LLMs. These models work by predicting likely output and connecting meanings, which can easily get muddled and result in hallucinations. In other words, the utility of these models only goes so far.
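A toy example can show how pure prediction produces a fluent but wrong statement. The sketch below is a simple word-pair (bigram) counter, which is vastly simpler than a real LLM and serves only as an analogy; the training sentence and function names are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count which word follows which in the training text."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of a word, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def generate(counts, start, steps):
    """Extend a starting word by repeatedly picking the likeliest next word."""
    output = [start]
    for _ in range(steps):
        following = predict_next(counts, output[-1])
        if following is None:
            break
        output.append(following)
    return " ".join(output)

# Hypothetical training text: the only statement about a discount is a denial.
CORPUS = ("the refund is approved the refund is approved "
          "the discount is denied")

model = train_bigrams(CORPUS)
completion = generate(model, "discount", 2)
```

Here `completion` comes out as "discount is approved", even though the training text only ever said that the discount is denied. The counter simply follows the most common pattern after "is", with no notion of whether the result is true.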

Other limitations of current AI tech

Mr. Jánošík then went on to tackle other limitations of contemporary AI:

- Explainability: Current models consist of complex parameters, making their decision-making processes difficult to understand. Unlike the human brain, which operates on causal explanations, these models function through statistical correlations, which are not intuitive to humans.

- Transparency: Top models are proprietary (walled gardens), with no visibility into their inner workings. This lack of transparency means there’s no accountability for how these models are configured or for the results they produce.

- Hallucinations: Generative AI chatbots often generate plausible but incorrect information. These models can exude high confidence while delivering false information, leading to mishaps and even legal issues, as happened when Air Canada’s chatbot gave a passenger false information about a discount.

Thankfully, the limits also apply to the misuse of AI technology for malicious activities. While chatbots can easily formulate plausible-sounding messages to aid spearphishing or business email compromise attacks, they are not that well-equipped to create dangerous malware. This limitation is due to their propensity for “hallucinations” – producing plausible but incorrect or illogical outputs – and their underlying weaknesses in generating logically connected and functional code. As a result, creating new, effective malware typically requires the intervention of an actual expert to correct and refine the code, making the process more challenging than some might assume.

Lastly, as pointed out by Mr. Jánošík, AI is just another tool that we need to understand and use responsibly.

The rise of the clones

In the next session, Jake Moore, Global Cybersecurity Advisor at ESET, gave a taste of what is currently possible with the right tools, from the cloning of RFID cards and hacking CCTVs to creating convincing deepfakes – and how it can put corporate data and finances at risk.

Among other things, he showed how easy it is to compromise the premises of a business by using a well-known hacking gadget to copy employee entrance cards, or to hack (with permission!) a social media account belonging to the company’s CEO. He went on to use a tool to clone the CEO’s likeness, both face and voice, to create a convincing deepfake video that he then posted on one of the CEO’s social media accounts.

The video – which had the would-be CEO announce a “challenge” to bike from the UK to Australia and racked up more than 5,000 views – was so convincing that people started to propose sponsorships. Even the company’s CFO was fooled by the video and asked the CEO about his future whereabouts. Only a single person wasn’t fooled: the CEO’s 14-year-old daughter.

In a few steps, Mr. Moore demonstrated the danger that lies with the rapid spread of deepfakes. Indeed, seeing is no longer believing – businesses, and people themselves, need to scrutinize everything they come across online. And with the arrival of AI tools like Sora that can create video based on a few lines of input, dangerous times could be nigh.

Finishing touches

The final session dedicated to the nature of AI was a panel that included Mr. Jánošík, Mr. Mazán, and Mr. Moore and was helmed by Ms. Pavlova. It started off with a question about the current state of AI, where the panelists agreed that the latest models are awash with many parameters and need further refinement.

The discussion then shifted to the immediate dangers and concerns for businesses. Mr. Moore emphasized that a significant number of people are unaware of AI’s capabilities, which bad actors can exploit. Although the panelists concurred that sophisticated AI-generated malware is not currently an imminent threat, other dangers, such as improved phishing email generation and deepfakes created using public models, are very real.

Additionally, as highlighted by Mr. Jánošík, the greatest danger lies in the data privacy aspect of AI, given the amount of data these models receive from users. In the EU, for example, the GDPR and AI Act have set some frameworks for data protection, but that isn’t enough since those are not global acts.

Mr. Moore added that enterprises should make sure their data remains in-house. Enterprise versions of generative models can fit the bill, obviating the “need” to rely on (free) versions that store data on external servers, possibly putting sensitive corporate data at risk.

To address data privacy concerns, Mr. Mazán suggested companies should start from the bottom up, tapping into open-source models that can work for simpler use cases, such as the generation of summaries. Only if those turn out to be inadequate should businesses move to cloud-powered solutions from other parties.

Mr. Jánošík concluded by saying that companies often overlook the drawbacks of AI use. Guidelines for the secure use of AI are indeed needed, but even common sense goes a long way towards keeping their data safe. As encapsulated by Mr. Moore in an answer concerning how AI should be regulated, there’s a pressing need to raise awareness about AI’s potential, including its potential for harm. Encouraging critical thinking is crucial for ensuring safety in our increasingly AI-driven world.

- Source: https://www.welivesecurity.com/en/cybersecurity/eset-world-2024-big-prevention-bigger-ai/