In today’s cutthroat business world, quickly and accurately gathering information can give you the edge over your competition.

By 2025, the global Business Intelligence market is expected to grow to $33.3 billion. Companies of every size are increasingly eager to harvest and process data, which puts the web scraping industry in the spotlight. Likewise, according to Google Trends, interest in the term “web scraping” has increased almost fivefold since 2016.

As more and more people talk about web scraping, we can’t help but wonder: could artificial intelligence offer a better way to get data? Before answering this question, we need to look at the specific challenges of web scraping and the current limitations of AI systems.

In Need of An Alternative

Data harvesting is no easy task, as modern websites keep adding JavaScript-based interaction and anti-scraping technologies. Existing data scraping software also costs a pretty penny once the volume scales up, not to mention the need for professional developers to handle regular maintenance.

Why AI Alone May Fall Short

While there is potential to combine AI and robotic process automation to streamline repetitive tasks in web scraping, several challenges remain.

Data Accuracy And Privacy

First, the biggest problem is finding the correct data. This is difficult because of the volatile nature of the internet: websites continually change their structures and formats. Navigating a website without being detected and continuously picking the right data, without violating any existing data privacy laws or the site’s Terms of Service, requires sophisticated algorithms.
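To make the changing-structure problem concrete, here is a minimal Python sketch of one common mitigation: trying several candidate selectors in order instead of relying on a single one. It uses only the standard library. The class names `price` and `product-price` and both sample pages are hypothetical and chosen purely for illustration.

```python
from html.parser import HTMLParser

# Tags that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"br", "img", "hr", "input", "meta", "link"}


class ClassTextExtractor(HTMLParser):
    """Collect the text of the first element carrying a given class name."""

    def __init__(self, class_name):
        super().__init__()
        self.class_name = class_name
        self._depth = 0      # > 0 while inside the matched element
        self._done = False   # True once the matched element has closed
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if self._done or tag in VOID_TAGS:
            return
        if self._depth:
            self._depth += 1
        else:
            classes = (dict(attrs).get("class") or "").split()
            if self.class_name in classes:
                self._depth = 1

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self._depth:
            return
        self._depth -= 1
        if self._depth == 0:
            self._done = True

    def handle_data(self, data):
        if self._depth and not self._done:
            self.chunks.append(data)


def extract_first(html, class_names):
    """Try each candidate class in order; return (class_name, text) or None."""
    for name in class_names:
        parser = ClassTextExtractor(name)
        parser.feed(html)
        text = "".join(parser.chunks).strip()
        if text:
            return name, text
    return None


# Hypothetical "before" and "after" versions of the same product page.
old_page = '<div><span class="price">$19.99</span></div>'
new_page = '<div><p class="product-price"><b>$19.99</b></p></div>'
candidates = ["price", "product-price"]

print(extract_first(old_page, candidates))
print(extract_first(new_page, candidates))
```

A scraper that only knew the `price` class would return nothing for the redesigned page. A fallback list keeps it working, but someone still has to maintain that list, which is part of the manual effort described above.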

High Costs and Technical Challenges

Delegating the entire scraping task to AI, where AI operates the browser to collect data, is prohibitively expensive due to the need for advanced infrastructure and continuous updates to handle website changes.

Additionally, AI systems struggle with anti-blocking measures implemented by websites to prevent automated access. These systems also face scaling issues, where handling large volumes of data can lead to performance bottlenecks.

If we consider using AI to write scraping rules, we encounter further challenges. Current machine learning models often experience “hallucinations”, generating incorrect or nonsensical data that can undermine the reliability of the scraping process. Training these models to be accurate requires a vast amount of labeled data, which involves significant manual effort and time. Despite these challenges, there remains hope for advancement as AI technologies continue to evolve and improve.

Hope Remains With No Code Scraper Providing An Actionable Solution

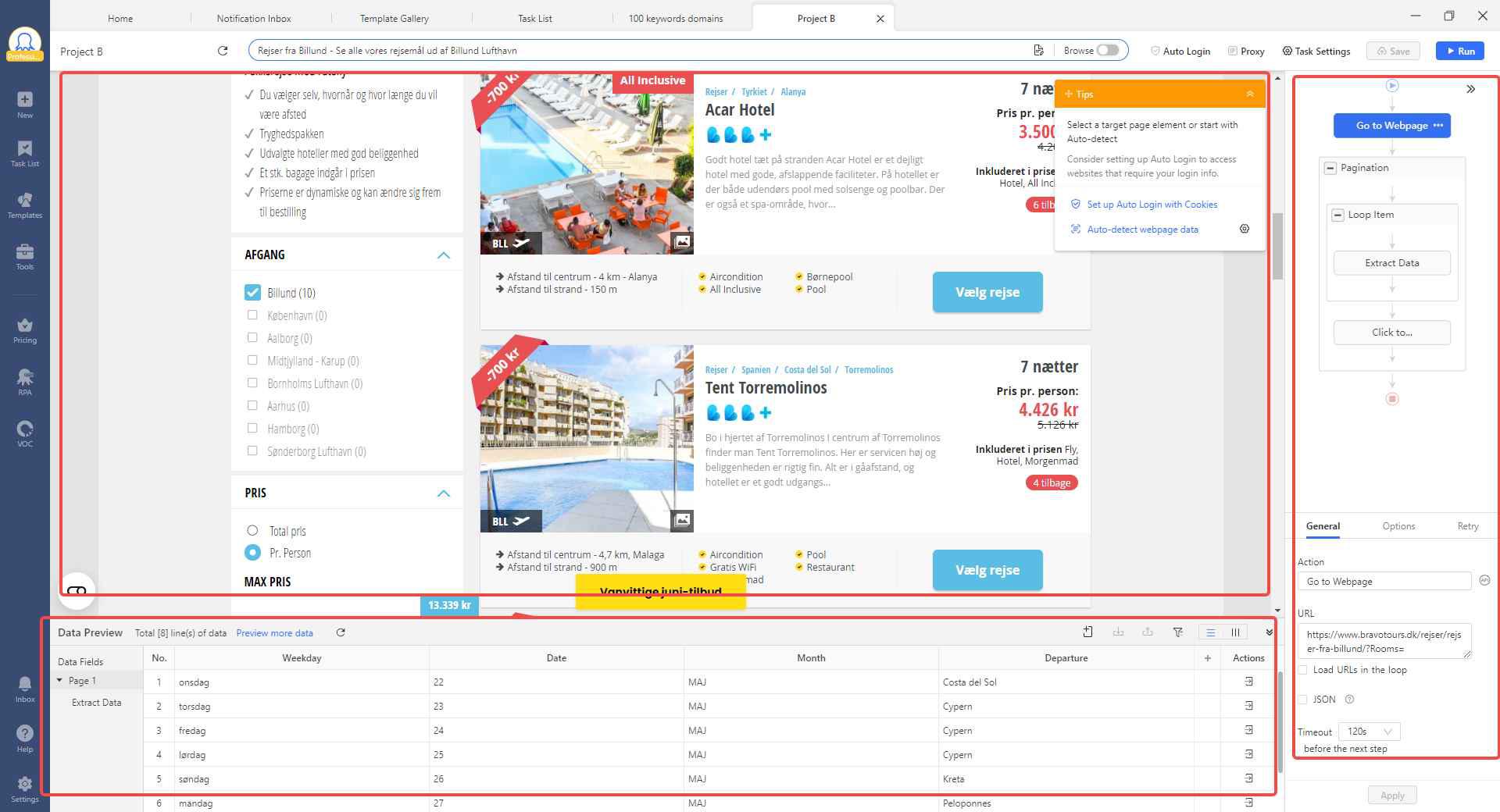

Though AI cannot provide a command-as-you-go solution for the time being, some tools free us from the manual work of writing scripts and move us to a stage where simple pointing and clicking can build an automated workflow. As a no-code web scraper, Octoparse offers a highly intuitive interface that lets users create an HTML-based scraping workflow the same way they browse a website: by clicking and moving the mouse.

During the workflow-creation process, users don’t see any code, since all the HTML changes happen behind the scenes. When you move modules within the workflow interface, the underlying HTML or configuration behind the workflow changes accordingly, so the sequence of actions and data extraction rules is updated to reflect the new order and connections of the modules. This dynamic adjustment allows users to customize their scrapers according to their needs.

However, HTML is not stable, as websites change often. Relying solely on an HTML-based workflow and manual settings such as AJAX, wait before action, and scroll action increases the possibility of bugs or workflow errors.
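As an illustration of why fixed manual waits are fragile, the sketch below polls for a condition instead of sleeping for a hard-coded time. It is plain Python, not Octoparse configuration: `wait_until` and `FakePage` are hypothetical names, and `FakePage` merely simulates content that shows up after a few checks.

```python
import time


def wait_until(condition, timeout=5.0, interval=0.1):
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


class FakePage:
    """Hypothetical stand-in for a page whose rows load after a few polls."""

    def __init__(self, ready_after):
        self.polls = 0
        self.ready_after = ready_after

    def rows(self):
        self.polls += 1
        return ["row 1", "row 2"] if self.polls >= self.ready_after else []


page = FakePage(ready_after=3)
rows = wait_until(page.rows, timeout=2.0, interval=0.01)
print(rows, page.polls)
```

A fixed delay is either too short (the data is missed) or too long (every page wastes time). Polling adapts to the page, although it still depends on a condition that matches the current HTML.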

However, changing the underlying scripts from HTML to Python and integrating Octoparse with LLMs (Large Language Models) may reduce these errors, as an LLM can help fill the code gaps between changed modules.

To put it another way, when you rearrange the modules in Octoparse’s workflow interface, an LLM can interpret the changes and modify the underlying scripts or configurations to ensure more robust and error-free task execution. This integration not only improves efficiency but also lays the groundwork for more advanced automation in web scraping.
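The idea can be sketched in a few lines of Python. This is not Octoparse’s implementation or API: `Step`, `check_order`, `regenerate`, and the script format are all hypothetical. No real LLM is called either; `rule_based_fix` is a simple stand-in for where a model’s suggestion would plug in. The point worth noting is that the suggested fix is validated before it is accepted, which guards against the hallucination problem mentioned earlier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    action: str  # e.g. "open", "click", "extract" (hypothetical action names)
    target: str  # URL or selector the action applies to


def render_script(steps):
    """Render an ordered list of steps as script lines (illustrative format)."""
    return [f"{i}. {s.action}({s.target!r})" for i, s in enumerate(steps, start=1)]


def check_order(steps):
    """Return a list of problems that a reordering may have introduced."""
    problems = []
    if not steps or steps[0].action != "open":
        problems.append("workflow must start by opening a page")
    return problems


def regenerate(steps, suggest_fix):
    """Re-render the script after a reorder, asking `suggest_fix` to repair problems."""
    problems = check_order(steps)
    if problems:
        steps = suggest_fix(steps, problems)
        remaining = check_order(steps)
        if remaining:
            # Never trust a suggested fix blindly; re-validate it first.
            raise ValueError(f"suggested fix is still invalid: {remaining}")
    return render_script(steps)


def rule_based_fix(steps, problems):
    """Stand-in for an LLM call: move "open" steps to the front, keep the rest in order."""
    opens = [s for s in steps if s.action == "open"]
    rest = [s for s in steps if s.action != "open"]
    return opens + rest


# A user dragged the "click" module above the "open" module.
reordered = [
    Step("click", "#next"),
    Step("open", "https://example.com/list"),
    Step("extract", ".title"),
]

for line in regenerate(reordered, rule_based_fix):
    print(line)
```

Keeping the validation step separate from the fix means the same checks apply whether the repair comes from a rule, a person, or a model.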

Conclusion

While AI shows promise in augmenting web scraping capabilities, complete replacement remains challenging due to the intricate nature of data extraction, privacy concerns, high cost, and technical problems. However, by leveraging AI to enhance no-code scraping solutions like Octoparse, businesses can achieve greater automation and accuracy in their data harvesting efforts, while paving the way for a more intelligent data-driven future.

- Source: Plato Data Intelligence.