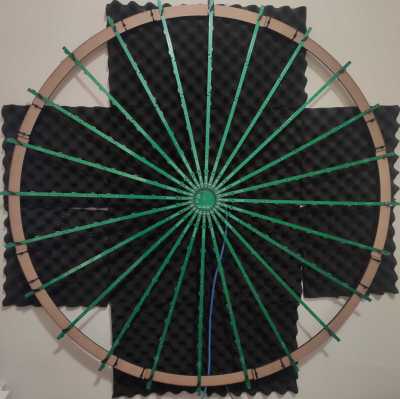

Remember the scene from Blade Runner, where Deckard puts a photograph into a Photo Inspector? The virtual camera can pan and move around the captured scene, pulling out impossible details. It seems that [Ben Wang] discovered how to make that particular trick a reality, but with audio instead of video. The secret sauce isn’t a sophisticated microphone, but a whole bunch of really simple ones. In this case, it’s 192 of them, arranged on long PCBs working as the spokes of a wall-art wheel. Quite the conversation piece.

You might imagine that capturing the data from 192 microphones all at once is a challenge in itself, and that seems to be an accurate assessment. The first data capture problem was due to the odd PCBs pushing the manufacturing process to its limits. About half of the spokes were dead on arrival, with the individual mics having a tendency to short the shared clock line to either ground or the power supply line. Then to pull all that data in, a Colorlight is used as a general purpose FPGA with a convenient form factor. This former pixel controller can be used for a wide variety of projects, thanks to an Open Sourced reverse engineering effort, and is even supported by the Project Trellis toolchain, which was used for this effort, too.

Packetizing all those microphones into UDP packets winds up pushing a whopping 715 Mbps, which manages to fit nicely on a Gigabit Ethernet connection. That data is fed into a GPU kernel written with Triton, an open source alternative to CUDA. This performs one of two beamforming operations. Near-field beamforming divides the space directly in front of the microphone array into a 64×64×64 grid of 5 cm voxels, and can locate a sound source in that 3D space. Alternatively, the system can run a far-field beamform, and locate a sound source as a 2D direction, on a 512×512 grid.
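For a feel of what near-field beamforming is doing, here is a minimal delay-and-sum sketch in plain Python. It is not [Ben Wang]'s Triton kernel: the 8-mic ring, the 48 kHz sample rate, the fixed 343 m/s speed of sound, the coarse 27-voxel grid, and every function name are assumptions made for illustration. The idea is to undo the propagation delay from a candidate voxel to each microphone, sum the channels, and pick the voxel where the sum comes out loudest.

```python
import math
import random

SPEED_OF_SOUND = 343.0  # m/s, assumed here; the real project fits this during calibration
SAMPLE_RATE = 48_000    # Hz, assumed for illustration


def sample_delays(mics, point):
    """Propagation delay from `point` to each mic, rounded to whole samples."""
    return [round(math.dist(m, point) / SPEED_OF_SOUND * SAMPLE_RATE) for m in mics]


def simulate(mics, source, n_samples, seed=0):
    """Fake recordings: white noise emitted at `source`, delayed per mic."""
    rng = random.Random(seed)
    emitted = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    return [[emitted[t - d] if t >= d else 0.0 for t in range(n_samples)]
            for d in sample_delays(mics, source)]


def beam_power(signals, mics, point):
    """Mean power of the delay-and-sum output when focused on `point`."""
    delays = sample_delays(mics, point)
    shifts = [d - min(delays) for d in delays]  # only relative delays matter
    n = min(len(s) - k for s, k in zip(signals, shifts))
    total = 0.0
    for t in range(n):
        summed = sum(s[t + k] for s, k in zip(signals, shifts))
        total += summed * summed
    return total / n


def locate(signals, mics, voxels):
    """Return the candidate voxel with the highest beamformed power."""
    return max(voxels, key=lambda v: beam_power(signals, mics, v))


def demo():
    # Hypothetical geometry: 8 mics on a 0.5 m radius ring in the z = 0 plane.
    mics = [(0.5 * math.cos(2 * math.pi * k / 8),
             0.5 * math.sin(2 * math.pi * k / 8), 0.0) for k in range(8)]
    # A coarse 3x3x3 grid of candidate positions in front of the array.
    voxels = [(x, y, z) for x in (-0.5, 0.0, 0.5)
              for y in (-0.5, 0.0, 0.5) for z in (0.5, 1.0, 1.5)]
    source = (0.5, 0.0, 1.0)
    signals = simulate(mics, source, 2000)
    return source, locate(signals, mics, voxels)


if __name__ == "__main__":
    true_source, found = demo()
    print("true source:", true_source, "located at:", found)
```

The same loop scaled up to 192 microphones and a 64×64×64 grid is a lot of multiply-accumulates per audio frame, which goes some way toward explaining why the real thing lives on a GPU.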

As part of the calibration, the speed of sound is also a parameter that is optimized to obtain the best model of the system. Since the speed of sound in air depends on temperature, this allows the whole procedure to act as a ridiculously overengineered thermometer.
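The thermometer trick follows from the usual dry-air approximation, where the speed of sound is about 331.3 m/s at 0 °C and scales with the square root of absolute temperature. The sketch below simply inverts that relation. The function names are made up for illustration, humidity is ignored, and nothing here is taken from the project's calibration code.

```python
import math

C0 = 331.3   # m/s, approximate speed of sound in dry air at 0 degrees C
T0 = 273.15  # K, 0 degrees C expressed in kelvin


def speed_from_temperature(temp_c):
    """Approximate speed of sound (m/s) in dry air at `temp_c` degrees C."""
    return C0 * math.sqrt(1.0 + temp_c / T0)


def temperature_from_speed(speed):
    """Invert the relation: air temperature (degrees C) from a fitted speed of sound."""
    return T0 * ((speed / C0) ** 2 - 1.0)


if __name__ == "__main__":
    # A fitted value of 343.2 m/s corresponds to roughly room temperature.
    print(round(temperature_from_speed(343.2), 1), "degrees C")
```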

The most impressive trick is to run the process the other way, and isolate the audio coming from a specific direction. The demo here was to play static from one source, and music from a second, nearby source. When listening from only one microphone, the result is a garbled mess. But applying the beamforming algorithm does an impressive job of isolating the directional audio. Click through to hear the results.
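Running the process the other way can be sketched with the same delay-and-sum idea: align every channel for the direction you care about and average, so sound from that direction adds up while everything else smears out. The example below is a toy far-field version with made-up parameters (a 16-mic line array at 10 cm spacing, 48 kHz sampling, a 440 Hz tone standing in for the music, white noise standing in for the static), not the project's actual algorithm.

```python
import math
import random

SPEED_OF_SOUND = 343.0  # m/s, assumed
SAMPLE_RATE = 48_000    # Hz, assumed


def unit(angle_deg):
    """Unit vector in the x-z plane, `angle_deg` off the array's broadside."""
    a = math.radians(angle_deg)
    return (math.sin(a), 0.0, math.cos(a))


def steering_delays(mics, direction):
    """Arrival delay (whole samples) at each mic for a plane wave from `direction`."""
    return [round(-sum(p * u for p, u in zip(m, direction))
                  / SPEED_OF_SOUND * SAMPLE_RATE) for m in mics]


def delay_and_sum(signals, delays, start, stop):
    """Average the channels after undoing each mic's steering delay."""
    n = len(signals)
    return [sum(s[t + d] for s, d in zip(signals, delays)) / n
            for t in range(start, stop)]


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def demo():
    rng = random.Random(0)
    mics = [(0.1 * i, 0.0, 0.0) for i in range(16)]  # hypothetical line array
    n, pad = 2000, 200                               # pad exceeds the largest delay
    music = [math.sin(2 * math.pi * 440 * t / SAMPLE_RATE) for t in range(n + 2 * pad)]
    static = [rng.gauss(0.0, 1.0) for _ in range(n + 2 * pad)]
    d_music = steering_delays(mics, unit(-20))
    d_static = steering_delays(mics, unit(45))
    # Each mic hears both sources, each with its own direction-dependent delay.
    signals = [[music[t + pad - dm] + static[t + pad - ds] for t in range(n)]
               for dm, ds in zip(d_music, d_static)]
    beam = delay_and_sum(signals, d_music, pad, n - pad)
    target = music[2 * pad:n]           # clean music, time-aligned with `beam`
    single = signals[0][pad:n - pad]    # one mic (at the origin, zero delay)
    return mse(single, target), mse(beam, target)


if __name__ == "__main__":
    single_err, beam_err = demo()
    print("single mic error:", single_err, "beamformed error:", beam_err)
```

With uncorrelated noise, averaging N aligned channels cuts the interfering power by roughly a factor of N, so more microphones buy a cleaner result. Integer-sample delays keep the sketch short; a real implementation would likely want fractional delays.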

And if that’s not enough, check out the details of another similar microphone array project.

- Source: https://hackaday.com/2023/07/04/3d-audio-imaging-with-a-phased-array-microphone/