Feb 15, 2024

(Nanowerk Spotlight) Effectively mimicking the unmatched visual capacities of the human brain while operating within stringent energy constraints poses a formidable challenge for artificial intelligence developers. The human visual system elegantly processes optical data using brief electrical pulses termed spikes, transmitted among neurons. This spiking neural code underpins our unparalleled pattern recognition using limited computational resources.

However, contemporary machine vision requires extensive processing to convert the output of power-hungry image sensors into representations that computer algorithms can digest. This computational burden limits the deployment of continuously operating vision systems in mobile and Internet-of-Things settings. Accordingly, the search for alternative, bioinspired architectures that better balance visual intelligence with energy efficiency has intensified.

Researchers have long struggled to translate the key advantages of biological vision into artificial systems. Custom spiking cameras and sensors often sacrifice image quality while requiring extra components to encode visual inputs as spikes. Meanwhile, algorithms mimicking spiking neurons rarely match the efficiency of their biological counterparts when running on conventional computer hardware. These limitations have stalled the development of artificial vision systems that combine the capabilities of state-of-the-art computer vision with the low energy consumption of spiking neural networks.

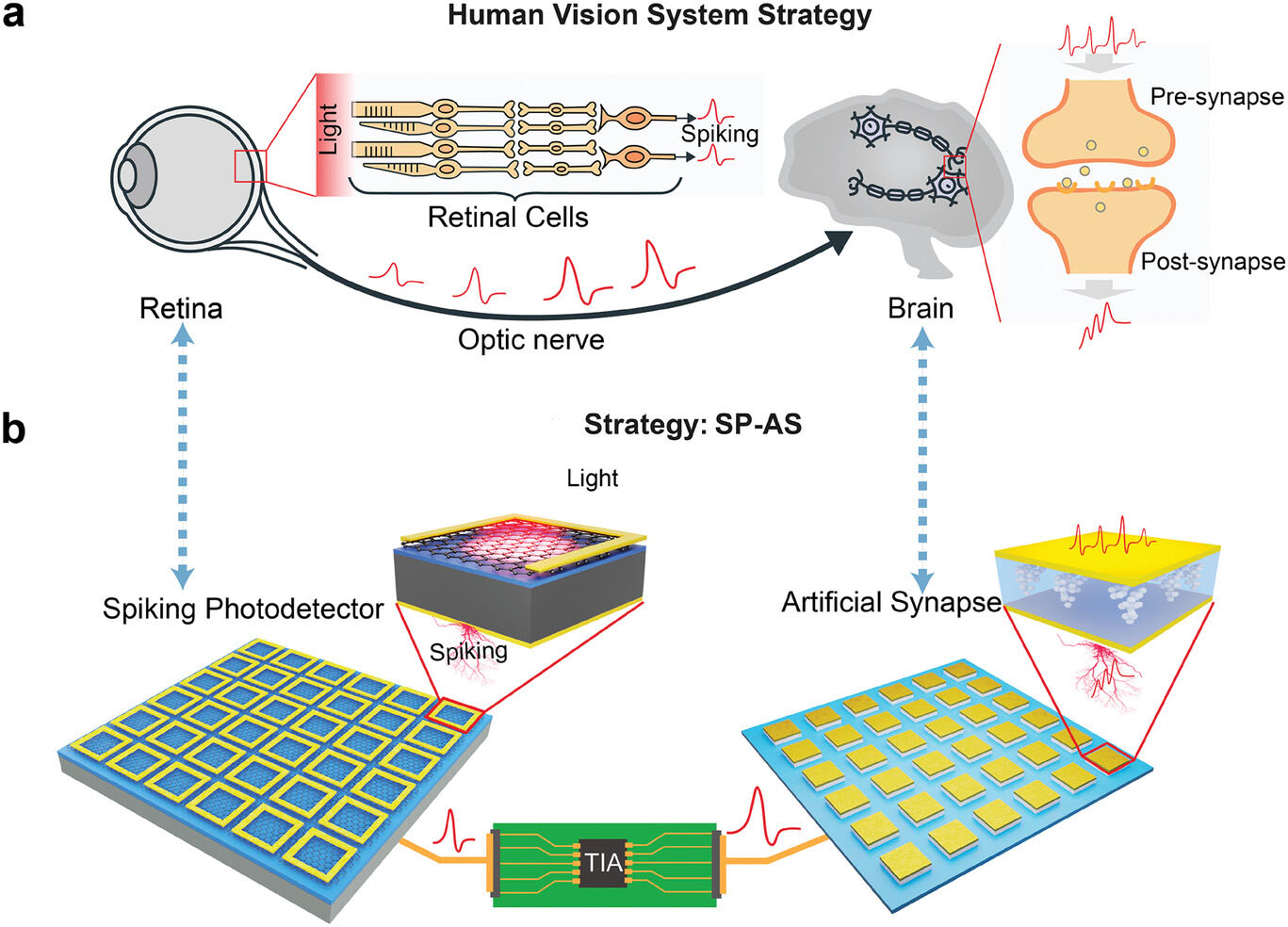

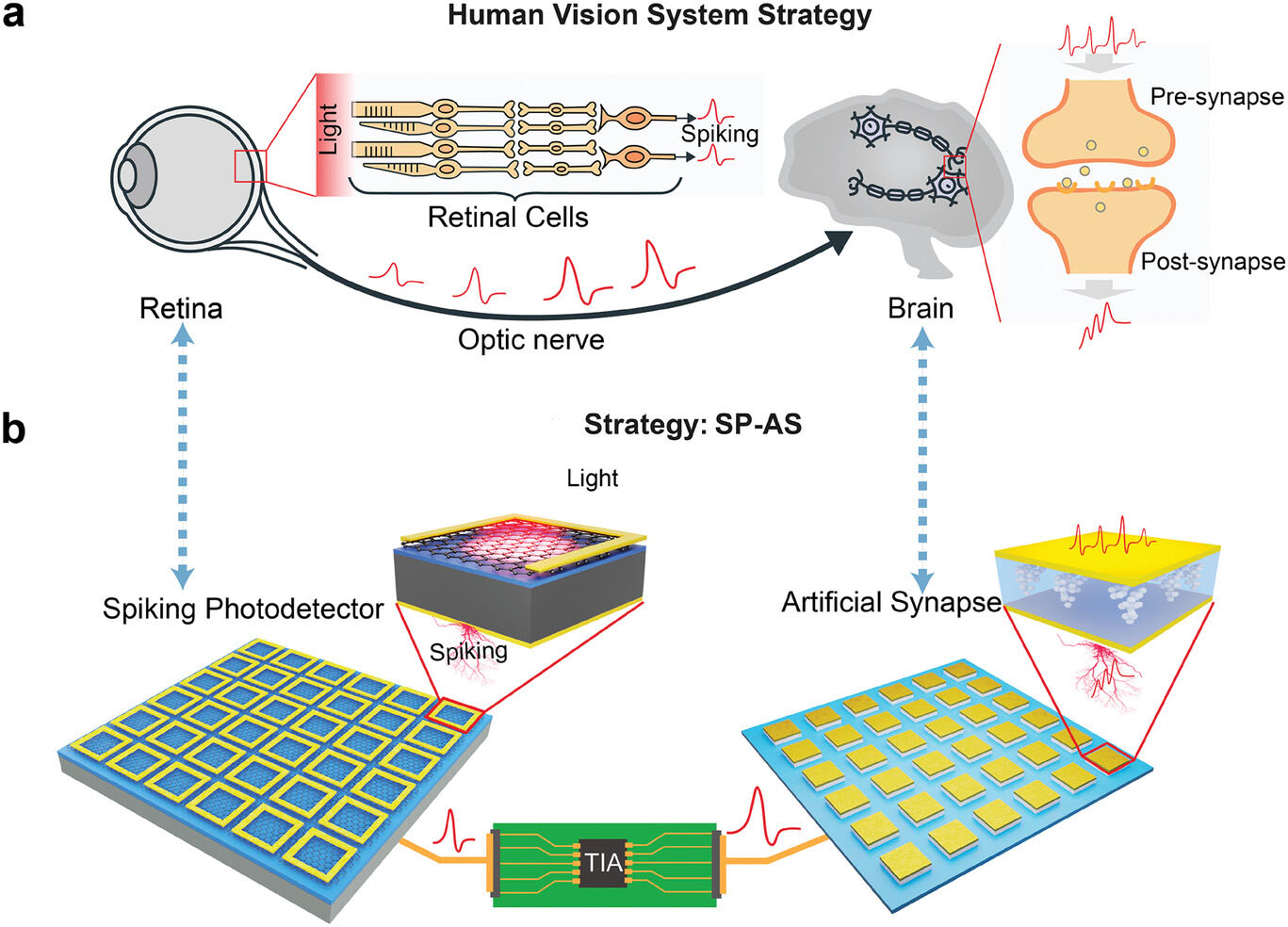

Now, researchers from Beijing University of Technology report (Advanced Materials, “A Spiking Artificial Vision Architecture Based on Fully Emulating the Human Vision”) a promising spiking-based artificial vision system that emulates key facets of biological vision in hardware. Their novel photoactive neural network chip converts light directly into spikes of electric current while reproducing the retinal cells’ selectivity for visual change over static inputs. Using this bioinspired approach to analyze live imagery, the group achieved over 90% accuracy in recognizing hand gestures with an elementary neural network after minimal training.

Artificial vision architectures based on fully copying and pasting the human vision. (a) The human visual system, consisting of the retina (spiking encoding) and the brain (information processing). (b) The novel spiking-based artificial vision strategy, consisting of the spiking photodetector (spiking encoding) and the artificial synapse (information processing). (Image: Reprinted with permission by Wiley-VCH Verlag)

At the core of this innovation lie specialized photodetector circuits that output spikes of electric current in response to changing light levels, emulating the retinal cells of the human eye. Unlike a typical digital camera, which outputs a constant stream of pixel data regardless of image content, these ‘spiking photodetectors’ stay inactive when viewing static scenes and fire spikes only for moving or newly visible objects that need encoding.

This selective spiking behavior allows efficient information representation similar to the human retina’s neural encoding of visual stimuli. Rather than capturing absolute light levels across a whole scene, the spiking photodetectors and their biological counterparts respond predominantly to light level changes within their receptive field.
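
To make the contrast with frame-based cameras concrete, here is a minimal Python sketch of change-driven spike encoding. It assumes a generic event-camera-style pixel model: each pixel remembers the light level it last reported and fires a +1 or -1 spike only when that level shifts by at least a threshold. The function name `encode_spikes`, the threshold value and the frame format are hypothetical illustrations, not the device physics of the photodetectors in the study.

```python
from typing import List, Tuple

Frame = List[List[float]]          # light intensity per pixel, row-major
Spike = Tuple[int, int, int, int]  # (time step, row, column, polarity)


def encode_spikes(frames: List[Frame], threshold: float = 0.2) -> List[Spike]:
    """Convert a sequence of intensity frames into sparse change-driven spikes.

    Hypothetical model: a pixel fires +1 (brighter) or -1 (darker) only when
    its intensity differs from the level it last reported by at least
    `threshold`; pixels viewing a static scene emit nothing.
    """
    if not frames:
        return []
    # Level each pixel last "reported"; initialised from the first frame.
    reference = [row[:] for row in frames[0]]
    spikes: List[Spike] = []
    for t, frame in enumerate(frames[1:], start=1):
        for r, row in enumerate(frame):
            for c, value in enumerate(row):
                delta = value - reference[r][c]
                if abs(delta) >= threshold:
                    spikes.append((t, r, c, 1 if delta > 0 else -1))
                    reference[r][c] = value  # update the reference only after firing
    return spikes


if __name__ == "__main__":
    static = [[0.5, 0.5], [0.5, 0.5]]
    moved = [[0.5, 0.9], [0.5, 0.1]]  # one pixel brightens, one darkens
    # Three identical frames produce no spikes; only the final change does.
    print(encode_spikes([static, static, static, moved]))
    # prints [(3, 0, 1, 1), (3, 1, 1, -1)]
```

In this toy model the output size scales with how much the scene changes rather than with the number of pixels times the number of frames, which is the property the spiking photodetectors exploit.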

The researchers suggest that filtering out unchanging and likely unimportant background image elements enables the exceptional pattern recognition of biological vision using limited neural resources.

In tests, the team illuminated arrays of these event-driven pixels with symbolic graphics and with hand gestures of varying dynamics. The resulting spike patterns contained enough information to be classified by a simple neural network.

For example, converting American Sign Language finger spellings into spikes allowed a neural network to rapidly identify four distinct letters using only 50 training samples per letter. Significantly, established deep learning techniques attained comparable accuracy only after processing extensive frame sequences from far more power-hungry digital cameras and graphics processing units.
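
The article does not detail the network that classified the spikes, so the following is only an assumed illustration of what an "elementary" network can look like: a single-layer multiclass perceptron trained on spike-count feature vectors (spikes summed per input region). The helper names `train_perceptron` and `predict`, the toy class labels and the learning rate are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]  # (spike counts per input region, class label)


def predict(weights: Dict[str, List[float]], features: Sequence[float]) -> str:
    """Return the class whose weight vector (last entry = bias) scores highest.

    Ties go to the label that comes first in the dict, i.e. alphabetical order.
    """
    x = list(features) + [1.0]
    return max(weights, key=lambda label: sum(w * xi for w, xi in zip(weights[label], x)))


def train_perceptron(samples: List[Sample], epochs: int = 5,
                     lr: float = 1.0) -> Dict[str, List[float]]:
    """Train one weight vector per class with the multiclass perceptron rule."""
    n_features = len(samples[0][0])
    labels = sorted({label for _, label in samples})
    weights = {label: [0.0] * (n_features + 1) for label in labels}
    for _ in range(epochs):
        for features, label in samples:
            guess = predict(weights, features)
            if guess != label:
                x = list(features) + [1.0]
                for i, xi in enumerate(x):
                    weights[label][i] += lr * xi  # pull the true class toward x
                    weights[guess][i] -= lr * xi  # push the wrong class away from x
    return weights


if __name__ == "__main__":
    # Toy spike-count vectors (four input regions) for four hypothetical classes.
    toy = [([3, 0, 0, 0], "g1"), ([2, 1, 0, 0], "g1"),
           ([0, 3, 0, 0], "g2"), ([1, 2, 0, 0], "g2"),
           ([0, 0, 3, 0], "g3"), ([0, 0, 2, 1], "g3"),
           ([0, 0, 0, 3], "g4"), ([0, 0, 1, 2], "g4")]
    w = train_perceptron(toy, epochs=5)
    print(predict(w, [4, 0, 0, 0]))  # prints g1
```

A single layer like this has only one weight vector per class, so it trains quickly; whether it suffices depends on how separable the spike patterns already are.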

Likewise, when the team evaluated their system on a standardized human activity dataset, the spiking photodetector pixels captured adequate posture and movement detail from just four sparse binary silhouette frames per video. Feeding these condensed spike representations of actions such as jumping and waving into a basic neural network classifier yielded 90% recognition accuracy after only five training epochs. Matching this benchmark typically requires analyzing thousands of high-resolution video frames with elaborately engineered deep neural networks.
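
The idea of condensing a clip into four binary silhouette frames can be sketched as follows. This is an assumed preprocessing pipeline, not the one used in the study: it thresholds grayscale frames into 0/1 silhouettes, picks four evenly spaced frames, and concatenates them into one binary vector that a basic classifier could consume. The names `to_silhouette`, `pick_key_frames` and `clip_features`, the 0.5 threshold and the even spacing are hypothetical choices.

```python
from typing import List

Frame = List[List[float]]  # grayscale intensities in [0, 1], row-major


def to_silhouette(frame: Frame, threshold: float = 0.5) -> List[List[int]]:
    """Mark pixels at or above the threshold as 1 (foreground), others as 0."""
    return [[1 if v >= threshold else 0 for v in row] for row in frame]


def pick_key_frames(frames: List[Frame], count: int = 4) -> List[Frame]:
    """Select `count` evenly spaced frames, including the first and the last."""
    if len(frames) < count:
        raise ValueError("clip is shorter than the requested number of frames")
    if count == 1:
        return [frames[0]]
    step = (len(frames) - 1) / (count - 1)
    return [frames[round(i * step)] for i in range(count)]


def clip_features(frames: List[Frame], count: int = 4) -> List[int]:
    """Concatenate the binary silhouettes of the key frames into one vector."""
    features: List[int] = []
    for frame in pick_key_frames(frames, count):
        for row in to_silhouette(frame):
            features.extend(row)
    return features
```

For a clip of H × W pixels the resulting vector always holds 4·H·W bits, however long the clip is, which illustrates why such condensed inputs are far lighter than full video sequences.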

To handle image recognition tasks, the team extended their bioinspired circuitry by integrating synaptic devices previously developed for spiking neuromorphic processors. These artificial synapses mimic the adjustable connection strength between biological neurons, providing tunable memory that enables learning. By applying programming pulses, the researchers set appropriate weights for the synapses receiving spikes from the photodetectors, teaching the network to classify combinations of basic shapes and motion patterns. After training, clear differences in synaptic conductance values corresponded to the unique identifying features of the optical stimuli.
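
Artificial synapses are often modeled phenomenologically as a conductance that each programming pulse nudges toward an upper or lower bound, with that conductance serving as the network weight. The sketch below assumes such a saturating update rule and an ohmic read-out (current = conductance × spike voltage); the class `Synapse` and all parameter values are hypothetical and do not describe the measured behavior of the devices in the study.

```python
from dataclasses import dataclass


@dataclass
class Synapse:
    g_min: float = 1.0   # lower conductance bound (arbitrary units, assumed)
    g_max: float = 10.0  # upper conductance bound (arbitrary units, assumed)
    alpha: float = 0.1   # fraction of the remaining headroom changed per pulse
    g: float = 1.0       # current conductance, i.e. the synaptic weight

    def potentiate(self, pulses: int = 1) -> float:
        """Apply potentiating pulses; each moves g a fraction closer to g_max."""
        for _ in range(pulses):
            self.g += self.alpha * (self.g_max - self.g)
        return self.g

    def depress(self, pulses: int = 1) -> float:
        """Apply depressing pulses; each moves g a fraction closer to g_min."""
        for _ in range(pulses):
            self.g -= self.alpha * (self.g - self.g_min)
        return self.g

    def current(self, spike_voltage: float) -> float:
        """Ohmic read-out: an input spike of this voltage yields I = g * V."""
        return self.g * spike_voltage
```

In this model a synapse that received more potentiating pulses passes a larger current for the same incoming spike, so after training the pattern of conductances encodes which input features matter for each class.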

Overall, the results showcase major strides towards efficient neuromorphic computing using the brain’s design principles. Event-driven information representation addresses key constraints for deploying artificial intelligence on mobile platforms and other power-limited contexts. Looking forward, the researchers aim to continue developing their spiking architecture for practical machine vision applications.

With expanded, higher-resolution arrays capturing richer visual data at frame rates matching human perception, biologically inspired vision systems could become ubiquitous.

Optimized spike-based data transmission from various existing sensors would further close the gap with biological capacities. For autonomous vehicle navigation, augmented reality interfaces, robotics, and other realms expected to drive future demand growth for computer vision hardware, simultaneous improvements to capability and efficiency remain imperative. This new architecture provides a promising template for the next generation of intelligent vision.

By Michael Berger

Michael is author of three books by the Royal Society of Chemistry:

Nano-Society: Pushing the Boundaries of Technology,

Nanotechnology: The Future is Tiny, and

Nanoengineering: The Skills and Tools Making Technology Invisible

Copyright © Nanowerk LLC

Source: https://www.nanowerk.com/spotlight/spotid=64666.php