CCC supported three scientific sessions at this year’s AAAS Annual Conference, and in case you weren’t able to attend in person, we will be recapping each session. This week, we will summarize the highlights of the session, “Generative AI in Science: Promises and Pitfalls.” In Part One, we will summarize the introduction and the presentation by Dr. Rebecca Willett.

CCC’s first AAAS panel of the 2024 annual meeting took place on Friday, February 16th, the second day of the conference. The panel, moderated by CCC’s own Dr. Matthew Turk, president of the Toyota Technological Institute at Chicago, was composed of experts who apply artificial intelligence to a variety of scientific fields. Dr. Rebecca Willett, professor of statistics and computer science at the University of Chicago, focused her presentation on how generative models can be used in the sciences and why off-the-shelf models are not sufficient for scientific research. Dr. Markus Buehler, professor of engineering at the Massachusetts Institute of Technology, spoke about generative models as applied to materials science, and Dr. Duncan Watson-Parris, assistant professor at Scripps Institution of Oceanography and the Halıcıoğlu Data Science Institute at UC San Diego, discussed how generative models can be used in climate science.

Dr. Turk, an expert in computer vision and human-computer interaction, began the panel by distinguishing generative AI from AI more broadly. “At the core of generative AI applications are generative models composed of deep neural networks that learn the structure of their voluminous training data and then generate new data based on what they’ve learned.”

Dr. Turk also outlined popular concerns with generative systems, both because of failures of the systems themselves, such as those that quote nonexistent legal briefs, and because of their use by bad actors to generate fake content, such as fake audio or video of politicians or celebrities.

“Specifically,” said Dr. Turk, “this session will focus on the use of generative AI in science, both as a transformative force in the pursuit of science and also as a potential risk of disruption.”

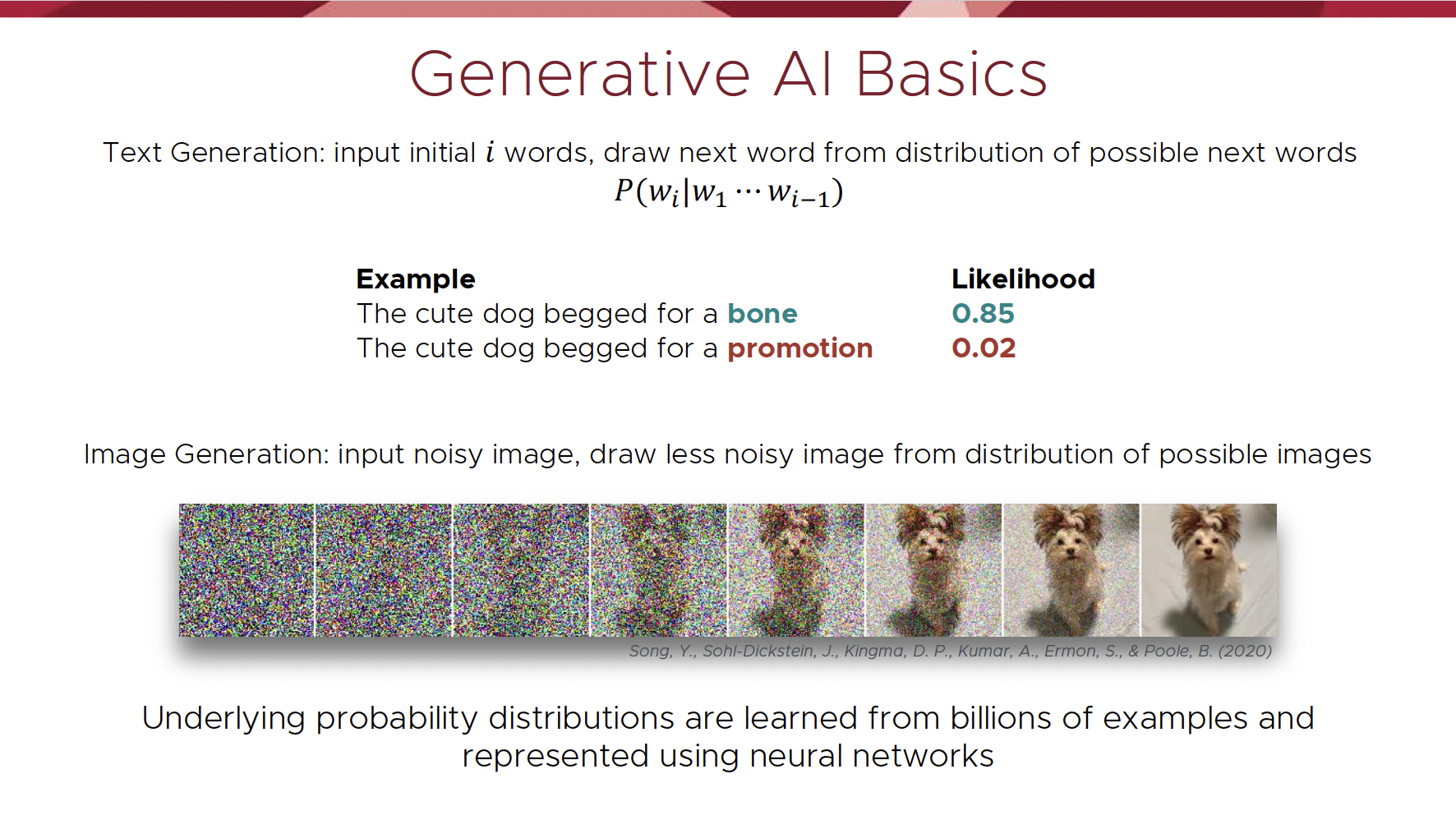

Dr. Rebecca Willett began her presentation by outlining how generative AI can be leveraged to support the scientific discovery process. She first focused on how generative models work. The image below from Dr. Willett’s slides displays how a language model, such as ChatGPT, evaluates the probability of a word occurring, given a previous set of words, and how an image generation model, such as DALL-E 2, generates an image from a given prompt using probability distributions learned from billions of images during training.
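The idea of scoring the next word given the previous words can be illustrated with a toy example. The sketch below is not how ChatGPT works internally (large language models use deep neural networks conditioned on long contexts); it is a minimal bigram model, built from a made-up one-sentence corpus, that only looks at the single previous word. The corpus and the function names `next_word_probs` and `generate` are assumptions made for illustration.

```python
import random
from collections import Counter, defaultdict

# Hypothetical toy corpus; a real model is trained on vastly more text.
corpus = "the model learns the data and the model generates new data".split()

# Count how often each word follows each previous word.
counts = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    counts[prev][nxt] += 1


def next_word_probs(prev):
    """Estimate P(next word | previous word) from the bigram counts."""
    total = sum(counts[prev].values())
    return {word: c / total for word, c in counts[prev].items()}


def generate(start, length, seed=0):
    """Generate text by repeatedly sampling from the learned distribution."""
    rng = random.Random(seed)
    words = [start]
    for _ in range(length - 1):
        probs = next_word_probs(words[-1])
        if not probs:  # no observed continuation for this word
            break
        choices, weights = zip(*probs.items())
        words.append(rng.choices(choices, weights=weights)[0])
    return words


print(next_word_probs("the"))
print(" ".join(generate("the", 6)))
```

Generation here is just repeated sampling from a learned conditional distribution, which is the principle the slide describes; the difference in a real system lies in how that distribution is represented and how much context it conditions on.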

“Using this principle of probability distributions, which underlies all generative models, these models can be applied to moonshot ideas in the sciences, such as generating possible climate scenarios given the current climate and potential policies, or generating new microbiomes with targeted functionality, such as one that is particularly efficacious at breaking down plastics”, says Dr. Willett.

However, it is not sufficient to use off-the-shelf generative tools, such as ChatGPT or DALL-E 2, for scientific research. These tools were created in a setting very different from the context in which scientists operate. One obvious difference between an off-the-shelf generative model and a scientific model is the data. In science, there is often very little data on which to base hypotheses. Scientific data typically comes out of simulations and experiments, both of which are often expensive and time-consuming. Because of these limitations, scientists have to carefully choose which experiments to run and how to maximize the efficiency and usefulness of these systems. Off-the-shelf models, in contrast, place far less importance on where data comes from, in favor of maximizing the amount of data they can operate on. In science, the accuracy of datasets and their origins are incredibly important, because scientists need to justify their research with robust empirical evidence.

“Additionally, in the sciences, our goals are different than merely producing things that are plausible”, says Dr. Willett. “We must understand the way things work outside of the range of what we have observed so far.” This approach is at odds with generative AI models that treat data as representative of the full range of likely observations. Incorporating physical models and constraints into generative AI helps ensure it will better represent physical phenomena.

Scientific models must also be capable of capturing rare events. “We can safely ignore a lot of rare events when we are training ChatGPT, but in contrast, rare events are often what we care about most in the context of the sciences, such as in a climate model that predicts rare weather events. If we use a generative model which avoids rare events and, for example, never predicts a hurricane, then this model will not be very useful in practice.”

A related challenge is developing generative AI models for chaotic processes, which are sensitive to initial conditions. Dr. Willett displayed the video below, which shows two particles moving in space according to the Lorenz 63 equations. These equations are deterministic, not random, but given two slightly different starting locations, you can see that at any given time the two particles may be in very different locations. Developing generative AI models that predict the exact course of such processes, which arise in climate science, turbulence, and network dynamics, is fundamentally hard, but novel approaches to generative modeling can ensure that generated processes share key statistical characteristics with real scientific data.

[Embedded video: two particles following the Lorenz 63 equations from slightly different starting locations]
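The sensitivity to initial conditions described above can be reproduced numerically. The following is a minimal sketch, assuming the classic Lorenz 63 parameter values (sigma = 10, rho = 28, beta = 8/3) and a hand-written fourth-order Runge-Kutta integrator; the starting points, step size, and function names are illustrative choices, not taken from Dr. Willett's presentation.

```python
import math


def lorenz63(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz 63 equations (classic parameters assumed)."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state by one fourth-order Runge-Kutta step."""
    def shifted(base, slope, scale):
        return tuple(b + scale * s for b, s in zip(base, slope))

    k1 = lorenz63(state)
    k2 = lorenz63(shifted(state, k1, dt / 2))
    k3 = lorenz63(shifted(state, k2, dt / 2))
    k4 = lorenz63(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def separation_over_time(start_a, start_b, dt=0.01, steps=4000):
    """Integrate two trajectories and record the distance between them."""
    a, b = start_a, start_b
    distances = []
    for _ in range(steps):
        a = rk4_step(a, dt)
        b = rk4_step(b, dt)
        distances.append(math.dist(a, b))
    return distances


# Two starting points that differ by one part in a hundred million.
distances = separation_over_time((1.0, 1.0, 1.0), (1.0, 1.0, 1.0 + 1e-8))
print(f"separation after first step: {distances[0]:.2e}")
print(f"largest separation reached:  {max(distances):.2e}")
```

The equations contain no randomness, so two identical starting points stay together forever, yet a tiny initial difference grows until the trajectories are as far apart as the attractor allows. This is why matching statistical characteristics, rather than exact paths, is the more realistic target for generative models of such systems.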

Finally, Dr. Willett addressed the fact that scientific data often spans an enormous range of spatial and temporal scales. For example, in materials science, researchers study materials from the nanometer scale of monomers all the way up to a large-scale system, such as an entire airplane. “That range of scales is very different from data used in off-the-shelf models, and we need to consider how we are building up these generative models in a way that accurately reflects these interactions between scales”.

“Generative models are the future of science”, says Dr. Willett, “but to ensure they are used effectively, we need to make fundamental advances in AI and go beyond plugging data into ChatGPT”.

Thank you so much for reading, and please tune in tomorrow to read the recap of Dr. Markus Buehler’s presentation on Generative AI in Mechanobiology.

- Source: https://feeds.feedblitz.com/~/873922907/0/cccblog~CCC-AAAS-Generative-AI-in-Science-Promises-and-Pitfalls-Recap-Part-One/