We are excited to announce a new version of the Amazon SageMaker Operators for Kubernetes using the AWS Controllers for Kubernetes (ACK). ACK is a framework for building Kubernetes custom controllers, where each controller communicates with one AWS service API. These controllers let Kubernetes users provision AWS resources such as buckets, databases, or message queues simply by using the Kubernetes API.

Release v1.2.9 of the SageMaker ACK Operators adds support for inference components, which until now were available only through the SageMaker API and the AWS Software Development Kits (SDKs). Inference components can help you optimize deployment costs and reduce latency. With the new inference component capabilities, you can deploy one or more foundation models (FMs) on the same Amazon SageMaker endpoint and control how many accelerators and how much memory are reserved for each FM. This improves resource utilization, reduces model deployment costs by 50% on average, and lets you scale endpoints together with your use cases. For more information, see Amazon SageMaker adds new inference capabilities to help reduce foundation model deployment costs and latency.

Because inference components are now available through the SageMaker controller, customers who use Kubernetes as their control plane can take advantage of inference components when deploying their models on SageMaker.

In this post, we show how to use the SageMaker ACK Operators to deploy SageMaker inference components.

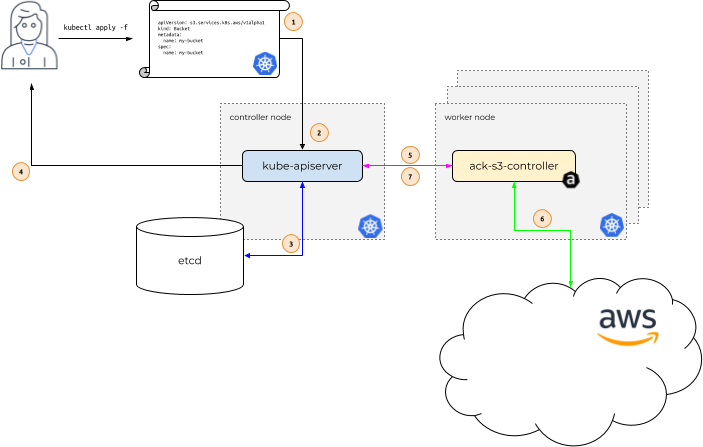

How ACK works

To demonstrate how ACK works, let's look at an example using Amazon Simple Storage Service (Amazon S3). In the following diagram, Alice is our Kubernetes user. Her application depends on the existence of an S3 bucket named my-bucket.

The workflow consists of the following steps:

- Alice calls `kubectl apply` and passes in a file that describes a Kubernetes custom resource representing her S3 bucket. `kubectl apply` sends this file, called a manifest, to the Kubernetes API server running on the Kubernetes controller node.
- The Kubernetes API server receives the manifest describing the S3 bucket. It determines whether Alice has permission to create a custom resource of kind `s3.services.k8s.aws/Bucket` and whether the custom resource is properly formatted.
- If Alice is authorized and the custom resource is valid, the Kubernetes API server writes the custom resource to its `etcd` data store.
- The API server then responds to Alice that the custom resource has been created.
- At this point, the ACK service controller for Amazon S3 is notified that a new custom resource of kind `s3.services.k8s.aws/Bucket` has been created. The controller runs on a Kubernetes worker node within the context of a normal Kubernetes Pod.
- The ACK service controller for Amazon S3 then communicates with the Amazon S3 API and calls the S3 CreateBucket API to create the bucket in AWS.
- After communicating with the Amazon S3 API, the ACK service controller calls the Kubernetes API server to update the custom resource's status with the information it received from Amazon S3.

Key components

The new inference capabilities build on SageMaker real-time inference endpoints. As before, you create a SageMaker endpoint with an endpoint configuration that defines the instance type and initial instance count for the endpoint. Models are configured in a new construct, the inference component. In an inference component, you specify the number of accelerators and the amount of memory to allocate to each copy of a model. You also specify the model artifacts, the container image, and the number of model copies to deploy.
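Each inference component reserves a fixed slice of the instance per model copy, so you can estimate up front how many copies fit on one instance. The following Python sketch does that arithmetic. The ml.g5.12xlarge figures (4 NVIDIA A10G GPUs, 48 vCPUs, 192 GiB of memory) are the EC2 g5.12xlarge specifications. The helper names `max_copies_per_instance` and `fits_together` are hypothetical. SageMaker may keep part of the host's CPU and memory for its own use, so treat the results as upper bounds rather than guarantees.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Resources:
    accelerators: int  # GPUs
    cpu_cores: float   # vCPUs
    memory_mb: int     # MiB


# Assumed capacity of one ml.g5.12xlarge: 4 GPUs, 48 vCPUs, 192 GiB of memory.
ML_G5_12XLARGE = Resources(accelerators=4, cpu_cores=48, memory_mb=192 * 1024)


def _limit(capacity: float, needed: float) -> Optional[int]:
    # A resource the copy does not request places no limit on the count.
    return None if needed == 0 else int(capacity // needed)


def max_copies_per_instance(instance: Resources, per_copy: Resources) -> int:
    """Upper bound on copies of one model that fit on a single instance."""
    limits = [
        x
        for x in (
            _limit(instance.accelerators, per_copy.accelerators),
            _limit(instance.cpu_cores, per_copy.cpu_cores),
            _limit(instance.memory_mb, per_copy.memory_mb),
        )
        if x is not None
    ]
    if not limits:
        raise ValueError("per-copy requirements must request some resource")
    # The scarcest resource decides how many copies can be placed.
    return min(limits)


def fits_together(instance: Resources, copies: List[Resources]) -> bool:
    """True if all listed model copies can be co-located on one instance."""
    return (
        sum(c.accelerators for c in copies) <= instance.accelerators
        and sum(c.cpu_cores for c in copies) <= instance.cpu_cores
        and sum(c.memory_mb for c in copies) <= instance.memory_mb
    )


if __name__ == "__main__":
    per_model = Resources(accelerators=2, cpu_cores=2, memory_mb=1024)
    print(max_copies_per_instance(ML_G5_12XLARGE, per_model))  # 2
    print(fits_together(ML_G5_12XLARGE, [per_model, per_model]))  # True
```

With the allocation used later in this post (2 GPUs, 2 CPUs, and 1,024 MB per model), GPUs are the binding constraint. One ml.g5.12xlarge can hold exactly two such copies, for example one Dolly v2 7B copy and one FLAN-T5 XXL copy.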

You can use the new inference capabilities from Amazon SageMaker Studio, the SageMaker Python SDK, the AWS SDKs, and the AWS Command Line Interface (AWS CLI). They are also supported by AWS CloudFormation. Now you can also use them with the SageMaker Operators for Kubernetes.

Solution overview

For this demo, we use the SageMaker controller and the new inference capabilities to deploy two models from the Hugging Face Model Hub on a single SageMaker real-time endpoint: one copy of the Dolly v2 7B model and one copy of the FLAN-T5 XXL model.

Prerequisites

To follow along, you need a Kubernetes cluster with the SageMaker ACK controller v1.2.9 or later installed. For instructions on provisioning an Amazon Elastic Kubernetes Service (Amazon EKS) cluster with Amazon Elastic Compute Cloud (Amazon EC2) Linux managed nodes using eksctl, see Getting started with Amazon EKS - eksctl. For instructions on installing the SageMaker controller, refer to Machine Learning with the ACK SageMaker Controller.

You need access to accelerated instances (GPUs) to host the LLMs. This solution uses one ml.g5.12xlarge instance. You can check the availability of these instances in your AWS account and, if needed, request more through a Service Quotas increase request, as shown in the following screenshot.
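You can also check the quota programmatically. The following sketch uses the boto3 `service-quotas` client: the `list_service_quotas` paginator with `ServiceCode="sagemaker"` and the `Quotas`, `QuotaName`, `QuotaCode`, and `Value` response fields. The quota-name pattern `"<instance type> for endpoint usage"` is an assumption based on how SageMaker quotas are usually labeled, so confirm the exact name in the Service Quotas console. The helper names are hypothetical, and the client is passed in so the logic can be tried without AWS credentials.

```python
from typing import Iterable, List, Optional


def list_sagemaker_quotas(client) -> List[dict]:
    """Collect every SageMaker quota via a boto3 'service-quotas' client."""
    quotas: List[dict] = []
    paginator = client.get_paginator("list_service_quotas")
    for page in paginator.paginate(ServiceCode="sagemaker"):
        quotas.extend(page["Quotas"])
    return quotas


def find_endpoint_quota(quotas: Iterable[dict], instance_type: str) -> Optional[dict]:
    """Return the endpoint-usage quota entry for instance_type, or None."""
    # Assumed naming pattern, for example "ml.g5.12xlarge for endpoint usage".
    wanted = f"{instance_type} for endpoint usage"
    for quota in quotas:
        if quota.get("QuotaName") == wanted:
            return quota
    return None
```

With credentials configured, `find_endpoint_quota(list_sagemaker_quotas(boto3.client("service-quotas")), "ml.g5.12xlarge")` returns a dictionary whose `Value` is the number of instances of that type you can run for endpoints. If the value is below 1, you can file an increase with the client's `request_service_quota_increase` method, passing the entry's `QuotaCode`.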

Create an inference component

To create your inference component, define the EndpointConfig, Endpoint, Model, and InferenceComponent YAML files, similar to the ones shown in this section. Then use `kubectl apply -f <yaml file>` to create the Kubernetes resources.

You can check the status of a resource with `kubectl describe <resource-type>`, for example, `kubectl describe inferencecomponent`.
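`kubectl describe` works well interactively. In scripts, it can be easier to fetch the resource as JSON with `kubectl get <kind> <name> -o json` and read its status conditions. ACK controllers report an `ACK.ResourceSynced` condition in `status.conditions`, and the following sketch polls it. It assumes, without verifying this for every SageMaker resource kind, that the condition turns `True` only once the SageMaker resource has reached a stable state. Also check the resource-specific status that `kubectl describe` shows. The function names are hypothetical, and `fetch` can be swapped out for testing.

```python
import json
import subprocess
import time
from typing import Callable, Optional


def get_resource(kind: str, name: str, namespace: str = "default") -> dict:
    """Fetch a Kubernetes resource as JSON (requires kubectl on PATH)."""
    out = subprocess.run(
        ["kubectl", "get", kind, name, "-n", namespace, "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)


def resource_synced(resource: dict) -> Optional[bool]:
    """Read the ACK.ResourceSynced condition; None if it is not reported yet."""
    for cond in resource.get("status", {}).get("conditions", []):
        if cond.get("type") == "ACK.ResourceSynced":
            return cond.get("status") == "True"
    return None


def wait_until_synced(kind: str, name: str, timeout_s: float = 1800,
                      interval_s: float = 30,
                      fetch: Callable[[str, str], dict] = get_resource) -> dict:
    """Poll until the resource reports ACK.ResourceSynced=True, or time out."""
    deadline = time.monotonic() + timeout_s
    while True:
        resource = fetch(kind, name)
        if resource_synced(resource):
            return resource
        if time.monotonic() >= deadline:
            raise TimeoutError(f"{kind}/{name} not synced after {timeout_s}s")
        time.sleep(interval_s)
```

For example, `wait_until_synced("inferencecomponent", "ic-dolly-v2-7b")` would block until that hypothetically named component reports as synced.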

You can also create an inference component without a Model resource. Refer to the guidance in the API documentation for more details.

EndpointConfig YAML

The following is the code for the EndpointConfig file:
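As an illustration, the following Python sketch builds an EndpointConfig manifest and prints it as JSON. `kubectl apply` accepts JSON as well as YAML, which keeps the example free of third-party dependencies. The `sagemaker.services.k8s.aws/v1alpha1` API version is the one ACK uses for SageMaker resources. The `spec` field names are assumed from ACK's convention of camel-casing SageMaker API parameters (`ExecutionRoleArn` becomes `executionRoleARN`), so verify them with `kubectl explain endpointconfig.spec`. The resource names and role ARN are hypothetical placeholders. As with inference-component endpoints in the SageMaker API, the production variant names no model, and the configuration carries an execution role.

```python
import json

# Hypothetical placeholders: replace with your own name and IAM role ARN.
ENDPOINT_CONFIG_NAME = "inference-component-endpoint-config"
EXECUTION_ROLE_ARN = "arn:aws:iam::111122223333:role/SageMakerExecutionRole"


def endpoint_config_manifest(name: str, role_arn: str,
                             instance_type: str = "ml.g5.12xlarge",
                             instance_count: int = 1) -> dict:
    """Build an ACK EndpointConfig manifest (spec field names are assumptions)."""
    return {
        "apiVersion": "sagemaker.services.k8s.aws/v1alpha1",
        "kind": "EndpointConfig",
        "metadata": {"name": name},
        "spec": {
            "endpointConfigName": name,
            # Role that SageMaker assumes to deploy the inference components.
            "executionRoleARN": role_arn,
            "productionVariants": [{
                # No model is named here: models arrive via inference components.
                "variantName": "AllTraffic",
                "instanceType": instance_type,
                "initialInstanceCount": instance_count,
            }],
        },
    }


if __name__ == "__main__":
    # Usage: python endpoint_config.py | kubectl apply -f -
    print(json.dumps(endpoint_config_manifest(ENDPOINT_CONFIG_NAME, EXECUTION_ROLE_ARN), indent=2))
```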

Endpoint YAML

The following is the code for the Endpoint file:
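The Endpoint resource only needs to name the endpoint and the endpoint configuration it uses. The following Python sketch emits that manifest as JSON. The `endpointName` and `endpointConfigName` field names are assumed from the ACK CRD, and the resource names are hypothetical placeholders that must match your EndpointConfig.

```python
import json


def endpoint_manifest(endpoint_name: str, endpoint_config_name: str) -> dict:
    """ACK Endpoint manifest that references an EndpointConfig by name."""
    return {
        "apiVersion": "sagemaker.services.k8s.aws/v1alpha1",
        "kind": "Endpoint",
        "metadata": {"name": endpoint_name},
        "spec": {
            "endpointName": endpoint_name,
            # Must match the endpointConfigName of an existing EndpointConfig.
            "endpointConfigName": endpoint_config_name,
        },
    }


if __name__ == "__main__":
    # Usage: python endpoint.py | kubectl apply -f -
    print(json.dumps(endpoint_manifest("inference-component-endpoint",
                                       "inference-component-endpoint-config"), indent=2))
```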

Model YAML

The following is the code for the Model file:
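The following Python sketch builds Model manifests for the two Hugging Face models in this demo and wraps them in a Kubernetes `v1` `List`, so both can be applied in one call. `databricks/dolly-v2-7b` and `google/flan-t5-xxl` are the Hugging Face Hub IDs of those models. Several parts are assumptions:

- The container image URI is a placeholder. Look up the Hugging Face text-generation-inference (TGI) container for your Region.
- The `primaryContainer` and `environment` field names are assumed from the ACK CRD.
- `HF_MODEL_ID` and `SM_NUM_GPUS` are environment variables that the Hugging Face TGI containers read. Setting `SM_NUM_GPUS` to the GPUs reserved per copy is our assumption.

```python
import json

# Hypothetical placeholders: substitute your IAM role and the TGI image for your Region.
EXECUTION_ROLE_ARN = "arn:aws:iam::111122223333:role/SageMakerExecutionRole"
TGI_IMAGE_URI = "<hugging-face-tgi-inference-image-uri>"

# Kubernetes object name -> Hugging Face Hub model ID.
MODELS = {
    "dolly-v2-7b": "databricks/dolly-v2-7b",
    "flan-t5-xxl": "google/flan-t5-xxl",
}


def model_manifest(name: str, hf_model_id: str, role_arn: str = EXECUTION_ROLE_ARN,
                   image_uri: str = TGI_IMAGE_URI, num_gpus: int = 2) -> dict:
    """ACK Model manifest for a Hugging Face model (field names are assumptions)."""
    return {
        "apiVersion": "sagemaker.services.k8s.aws/v1alpha1",
        "kind": "Model",
        "metadata": {"name": name},
        "spec": {
            "modelName": name,
            "executionRoleARN": role_arn,
            "primaryContainer": {
                "image": image_uri,
                # Container environment values must be strings.
                "environment": {
                    "HF_MODEL_ID": hf_model_id,
                    "SM_NUM_GPUS": str(num_gpus),
                },
            },
        },
    }


def models_list() -> dict:
    """Wrap all Model manifests in a v1 List so one kubectl call applies them."""
    return {"apiVersion": "v1", "kind": "List",
            "items": [model_manifest(n, h) for n, h in MODELS.items()]}


if __name__ == "__main__":
    # Usage: python models.py | kubectl apply -f -
    print(json.dumps(models_list(), indent=2))
```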

InferenceComponent YAMLs

In the following YAML files, because the ml.g5.12xlarge instance comes with 4 GPUs, we allocate 2 GPUs, 2 CPUs, and 1,024 MB of memory to each model:
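The following Python sketch expresses that allocation as InferenceComponent manifests, one per model, with one copy each. The field names are assumed from ACK's camel-casing of the SageMaker CreateInferenceComponent parameters (`ComputeResourceRequirements`, `RuntimeConfig.CopyCount`). Confirm them with `kubectl explain inferencecomponent.spec`. The endpoint and component names are hypothetical, and `variantName` assumes the endpoint configuration's variant is called `AllTraffic`.

```python
import json

ENDPOINT_NAME = "inference-component-endpoint"  # hypothetical
# Inference component name -> Model resource it serves (hypothetical names).
COMPONENTS = {"ic-dolly-v2-7b": "dolly-v2-7b", "ic-flan-t5-xxl": "flan-t5-xxl"}


def inference_component_manifest(name: str, model_name: str,
                                 endpoint_name: str = ENDPOINT_NAME,
                                 accelerators: int = 2, cpu_cores: float = 2,
                                 memory_mb: int = 1024, copy_count: int = 1) -> dict:
    """ACK InferenceComponent manifest; resources are reserved per model copy."""
    return {
        "apiVersion": "sagemaker.services.k8s.aws/v1alpha1",
        "kind": "InferenceComponent",
        "metadata": {"name": name},
        "spec": {
            "inferenceComponentName": name,
            "endpointName": endpoint_name,
            "variantName": "AllTraffic",  # assumed variant name
            "specification": {
                "modelName": model_name,
                "computeResourceRequirements": {
                    "numberOfAcceleratorDevicesRequired": accelerators,
                    "numberOfCPUCoresRequired": cpu_cores,
                    "minMemoryRequiredInMb": memory_mb,
                },
            },
            "runtimeConfig": {"copyCount": copy_count},
        },
    }


def components_list() -> dict:
    """Both inference components as a v1 List for a single kubectl apply."""
    return {"apiVersion": "v1", "kind": "List",
            "items": [inference_component_manifest(ic, m) for ic, m in COMPONENTS.items()]}


if __name__ == "__main__":
    # Usage: python inference_components.py | kubectl apply -f -
    print(json.dumps(components_list(), indent=2))
```

Two components at 2 GPUs each reserve all 4 GPUs of the single ml.g5.12xlarge instance.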

Invoke the models

You can now invoke the models using the following code:
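A minimal invocation sketch using the boto3 `sagemaker-runtime` client. Its `invoke_endpoint` call accepts an `InferenceComponentName` parameter that routes the request to one model on a shared endpoint. The JSON payload shape (`inputs` plus `parameters.max_new_tokens`) is an assumption based on the Hugging Face TGI container's request format. The endpoint and component names are hypothetical. The client is passed in so the request-building logic can be exercised without AWS access.

```python
import json
from typing import Any


def build_invoke_request(endpoint_name: str, component_name: str, prompt: str,
                         max_new_tokens: int = 128) -> dict:
    """Keyword arguments for invoke_endpoint, targeting one inference component."""
    # Assumed TGI-style payload; adjust to what your container expects.
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return {
        "EndpointName": endpoint_name,
        # Selects which model on the shared endpoint serves this request.
        "InferenceComponentName": component_name,
        "ContentType": "application/json",
        "Body": json.dumps(payload),
    }


def invoke(client: Any, endpoint_name: str, component_name: str, prompt: str) -> Any:
    """Send the prompt and decode the JSON the container returns."""
    response = client.invoke_endpoint(
        **build_invoke_request(endpoint_name, component_name, prompt))
    # Body is a streaming object; read it fully before decoding.
    return json.loads(response["Body"].read())
```

For example, with `runtime = boto3.client("sagemaker-runtime")`, calling `invoke(runtime, "inference-component-endpoint", "ic-flan-t5-xxl", "What is Kubernetes?")` sends the prompt only to the FLAN-T5 XXL component. Changing the component name targets Dolly v2 7B on the same endpoint.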

Update an inference component

To update an existing inference component, edit its YAML file and then run `kubectl apply -f <yaml file>`. The following is an example of an updated file:
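One common update is changing how many copies of a model an inference component runs, which is the `runtimeConfig.copyCount` field (name assumed from the ACK CRD). The following Python sketch applies that edit to a JSON manifest without mutating the original. `with_copy_count` is a hypothetical helper, and this sketch rejects counts below 1.

```python
import copy


def with_copy_count(manifest: dict, copy_count: int) -> dict:
    """Return a copy of an InferenceComponent manifest with a new copyCount."""
    if manifest.get("kind") != "InferenceComponent":
        raise ValueError("expected an InferenceComponent manifest")
    if copy_count < 1:
        raise ValueError("this sketch only accepts copy_count >= 1")
    updated = copy.deepcopy(manifest)  # leave the caller's manifest untouched
    updated.setdefault("spec", {}).setdefault("runtimeConfig", {})["copyCount"] = copy_count
    return updated
```

Write the result to a file and re-apply it with `kubectl apply -f`. With the allocation in this post (2 GPUs per copy, two models on one 4-GPU ml.g5.12xlarge), raising either model to two copies would need 6 GPUs in total. That is more than a single instance provides.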

Delete an inference component

To delete an existing inference component, run `kubectl delete -f <yaml file>`.

Availability and pricing

The new SageMaker inference capabilities are available today in the following AWS Regions: US East (Ohio, N. Virginia), US West (Oregon), Asia Pacific (Jakarta, Mumbai, Seoul, Singapore, Sydney, Tokyo), Canada (Central), Europe (Frankfurt, Ireland, London, Stockholm), Middle East (UAE), and South America (São Paulo). For pricing details, visit Amazon SageMaker Pricing.

Conclusion

In this post, we showed how to use the SageMaker ACK Operators to deploy SageMaker inference components. Fire up your Kubernetes cluster and deploy your FMs using the new SageMaker inference capabilities today!

About the authors

Rajesh Ramchander is a Principal ML Engineer in Professional Services at AWS. He helps customers at various stages of their AI/ML and generative AI journey, from those just getting started all the way to those leading their business with an AI-first strategy.

Amit Arora is an AI and ML Specialist Architect at Amazon Web Services, helping enterprise customers use cloud-based machine learning services to rapidly scale their innovations. He is also an adjunct lecturer in the MS data science and analytics program at Georgetown University in Washington, D.C.

Suryansh Singh is a Software Development Engineer at AWS SageMaker and works on developing ML distributed infrastructure solutions for AWS customers at scale.

Saurabh Trikande is a Senior Product Manager for Amazon SageMaker Inference. He is passionate about working with customers and is motivated by the goal of democratizing machine learning. He focuses on core challenges related to deploying complex ML applications, multi-tenant ML models, cost optimizations, and making deployment of deep learning models more accessible. In his spare time, Saurabh enjoys traveling, learning about innovative technologies, following TechCrunch, and spending time with his family.

Johna Liu is a Software Development Engineer on the Amazon SageMaker team. Her current work focuses on helping developers efficiently host machine learning models and improve inference performance. She is passionate about spatial data analysis and using AI to solve societal problems.

Source: https://aws.amazon.com/blogs/machine-learning/use-kubernetes-operators-for-new-inference-capabilities-in-amazon-sagemaker-that-reduce-llm-deployment-costs-by-50-on-average/