Amazon Managed Workflows for Apache Airflow (Amazon MWAA) is a managed service that lets you use a familiar Apache Airflow environment with improved scalability, availability, and security. You can enhance and scale your business workflows without the operational burden of managing the underlying infrastructure. In Airflow, Directed Acyclic Graphs (DAGs) are defined as Python code. Dynamic DAGs are DAGs that are generated on the fly at runtime, typically based on external conditions, configurations, or parameters. Dynamic DAGs help you create, schedule, and run tasks within a DAG based on data and configurations that can change over time.
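A minimal sketch of the idea follows. It builds one DAG definition per parameter value when the file is parsed. To keep it runnable without Airflow installed, `DagSpec` is a hypothetical stand-in for an Airflow `DAG` object, and the `process_country_*` naming scheme is made up for illustration. A common Airflow pattern is to assign each generated DAG into the module's `globals()` so that Airflow's DAG discovery can find it. Here a plain dict plays that role.

```python
from dataclasses import dataclass, field


@dataclass
class DagSpec:
    """Hypothetical stand-in for an Airflow DAG object (illustration only)."""

    dag_id: str
    schedule: str
    params: dict = field(default_factory=dict)


def generate_dags(country_codes, namespace, schedule="*/10 * * * *"):
    """Create one DAG spec per country code and bind it into `namespace` by dag_id."""
    for code in country_codes:
        dag_id = f"process_country_{code.lower()}"  # hypothetical naming scheme
        namespace[dag_id] = DagSpec(dag_id=dag_id, schedule=schedule, params={"country": code})
    return namespace


# In a real DAG file you would pass globals() here instead of a fresh dict.
registry = generate_dags(["IN", "US", "BR"], namespace={})
print(sorted(registry))  # ['process_country_br', 'process_country_in', 'process_country_us']
```

Adding a country then means changing the input list rather than copying a DAG file.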

There are multiple ways to introduce dynamism in Airflow DAGs (dynamic DAG generation), for example by using environment variables and external files. One approach is to use the DAG Factory YAML-based configuration file method. This library aims to make it easier to create and configure new DAGs by using parameters defined in YAML. It supports default customizations and is open source, which makes it straightforward to create and customize new functionality.
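The "default customizations" idea works roughly like this: a shared `default` block in the YAML supplies settings that every DAG entry inherits, and each DAG can override individual values. The sketch below approximates that merge on an already-parsed config (what `yaml.safe_load` might return). It is not dag-factory's own code, and the DAG names and values are illustrative assumptions.

```python
import copy


def deep_merge(defaults, overrides):
    """Return a new dict where `overrides` win over `defaults`, recursing into nested dicts."""
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


def resolve_dag_configs(parsed_yaml):
    """Apply the top-level `default` block to every other DAG entry (approximation)."""
    defaults = parsed_yaml.get("default", {})
    return {
        dag_id: deep_merge(defaults, dag_cfg)
        for dag_id, dag_cfg in parsed_yaml.items()
        if dag_id != "default"
    }


# Illustrative parsed config; keys and values are assumptions for this sketch.
config = {
    "default": {
        "default_args": {"owner": "airflow", "retries": 1},
        "schedule_interval": "*/10 * * * *",
    },
    "covid_deaths_in": {"default_args": {"retries": 3}},
    "covid_deaths_us": {},
}

resolved = resolve_dag_configs(config)
print(resolved["covid_deaths_in"]["default_args"])  # {'owner': 'airflow', 'retries': 3}
```

The merge copies the defaults before updating them, so one DAG's override cannot leak into another DAG's settings.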

In this post, we explore the process of creating dynamic DAGs with YAML files, using the DAG Factory library. Dynamic DAGs offer several benefits:

- Enhanced code reusability: By structuring DAGs through YAML files, we promote reusable components and reduce redundancy in your workflow definitions.

- Streamlined maintainability: YAML-based DAG generation simplifies modifying and updating workflows, which makes maintenance smoother.

- Flexible parameterization: With YAML, you can parameterize DAG configurations, which makes it easier to adjust workflows dynamically for varying requirements.

- Improved scheduling efficiency: Dynamic DAGs enable more efficient scheduling, optimizing resource allocation and improving overall workflow runs.

- Enhanced scalability: YAML-driven DAGs allow for parallel runs, enabling scalable workflows that can handle increased workloads efficiently.

By combining YAML files with the DAG Factory library, we get a versatile approach to building and managing DAGs. This helps you create robust, scalable, and maintainable data pipelines.

Solution overview

In this post, we use an example DAG file designed to process a COVID-19 dataset. The workflow processes an open source dataset offered by WHO-COVID-19-Global. After we install the DAG-Factory Python package, we create a YAML file that contains definitions of various tasks. We process the country-specific death count by passing Country as a variable, which creates an individual DAG for each country.
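The per-country processing can be sketched with the standard library. The post's `process_s3_data.py` reads the file from Amazon S3 with pandas. This version swaps in the `csv` module and an in-memory string so it runs without AWS access. The `Country_code` column and the `count_death_{COUNTRY_CODE}.csv` output name come from this post. The new column name `country`, the function name, and the sample rows are assumptions made for illustration.

```python
import csv
import io


def process_country(csv_text, country_code):
    """Keep one country's rows, renaming Country_code to `country` (assumed name).

    Returns a (file_name, csv_text) tuple.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    # Rename the header column; every other column keeps its name and position.
    fieldnames = ["country" if name == "Country_code" else name for name in reader.fieldnames]
    kept = []
    for row in reader:
        row["country"] = row.pop("Country_code")
        if row["country"] == country_code:
            kept.append(row)
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(kept)
    # Output name follows the count_death_{COUNTRY_CODE}.csv pattern from the post.
    return f"count_death_{country_code}.csv", out.getvalue()


# Made-up rows in the shape of the WHO file; not real figures.
sample = (
    "Date_reported,Country_code,New_deaths,Cumulative_deaths\n"
    "2020-03-01,IN,0,0\n"
    "2020-03-01,US,1,1\n"
    "2020-03-02,IN,1,1\n"
)
name, body = process_country(sample, "IN")
print(name)  # count_death_IN.csv
print(body)
```

Each country-specific DAG calls the same function with a different country code, which is what makes the YAML entries nearly identical.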

The following diagram illustrates the overall solution, along with the data flows between the logical blocks.

Prerequisites

For this walkthrough, you should have the following prerequisites:

Additionally, complete the following steps (run the setup in an AWS Region where Amazon MWAA is available):

- Create an Amazon MWAA environment (if you don't have one already). If this is your first time using Amazon MWAA, refer to Introducing Amazon Managed Workflows for Apache Airflow (MWAA).

Make sure the AWS Identity and Access Management (IAM) user or role used for setting up the environment has IAM policies attached for the following permissions:

The access policies mentioned here are only for the example in this post. In a production environment, grant only the granular permissions that are needed, following the principle of least privilege.

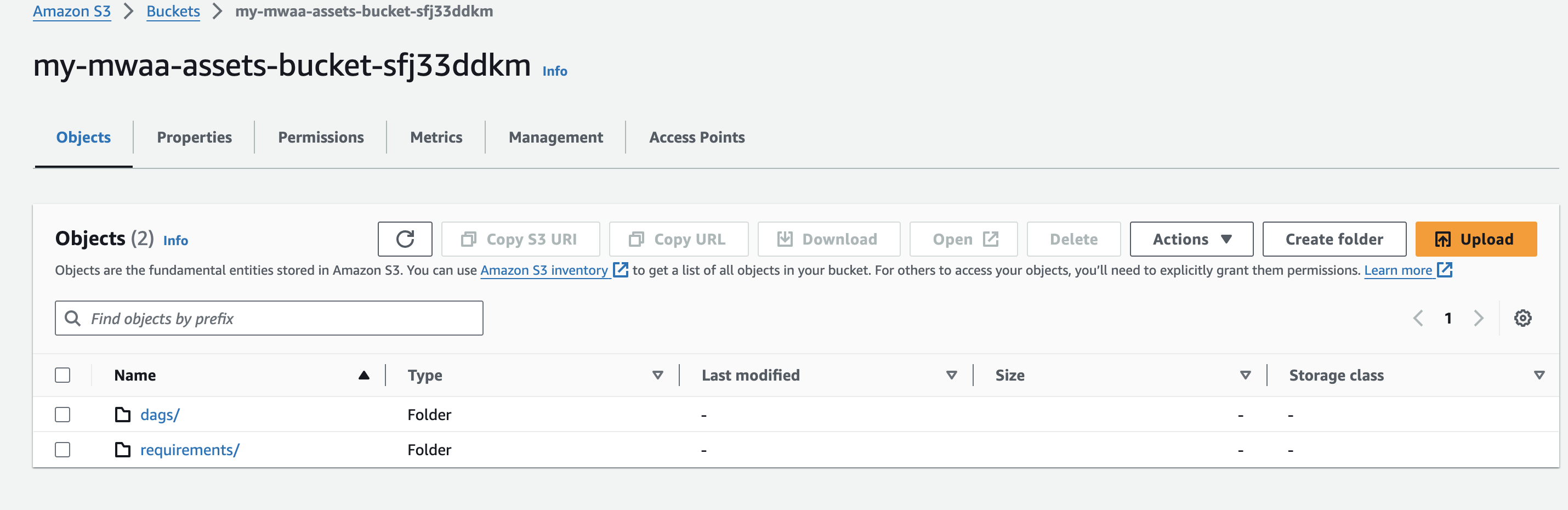

- Create a unique (within an account) Amazon S3 bucket name while creating your Amazon MWAA environment, and create folders called `dags` and `requirements`.

- Create a `requirements.txt` file with the following content and upload it to the `requirements` folder. Replace `{environment-version}` with your environment's version number, and `{Python-version}` with the version of Python that's compatible with your environment:

Pandas is needed only for the example use case described in this post, and dag-factory is the only required plug-in. We recommend checking that the latest version of dag-factory is compatible with Amazon MWAA. The boto and psycopg2-binary libraries are included with the Apache Airflow v2 base install, so you don't need to specify them in your requirements.txt file.
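The two placeholders suggest a constraints line at the top of the file. The helper below fills them in under that assumption. It uses the Apache Airflow constraints URL pattern (`constraints-{airflow_version}/constraints-{python_version}.txt`) and lists the two packages named above, unpinned. The function name, package versions, and example version numbers are assumptions. Check them against the Amazon MWAA User Guide for your environment.

```python
# Apache Airflow's published constraints files follow this URL pattern.
AIRFLOW_CONSTRAINTS_URL = (
    "https://raw.githubusercontent.com/apache/airflow/"
    "constraints-{airflow_version}/constraints-{python_version}.txt"
)


def render_requirements(airflow_version, python_version, packages=("dag-factory", "pandas")):
    """Return requirements.txt text: a --constraint line, then one package per line.

    Hypothetical helper; the package list follows the post, versions are left unpinned.
    """
    constraint = AIRFLOW_CONSTRAINTS_URL.format(
        airflow_version=airflow_version, python_version=python_version
    )
    lines = [f'--constraint "{constraint}"', *packages]
    return "\n".join(lines) + "\n"


# Example values only; replace them with your environment's Airflow and Python versions.
print(render_requirements("2.8.1", "3.11"))
```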

- Download the WHO-COVID-19-global data file to your local machine and upload it under the `dags` prefix of your S3 bucket.

Make sure your environment points to the latest version of your requirements.txt file in the Amazon S3 bucket so that the additional packages are installed. The update should take about 15–20 minutes, depending on your environment configuration.

Verify the DAGs

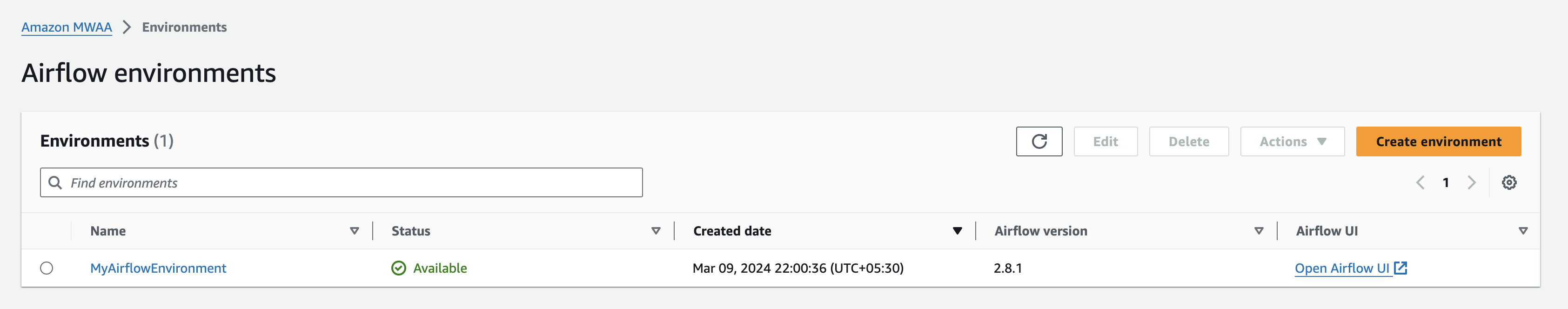

When your Amazon MWAA environment shows as Available on the Amazon MWAA console, navigate to the Airflow UI by choosing Open Airflow UI next to your environment.

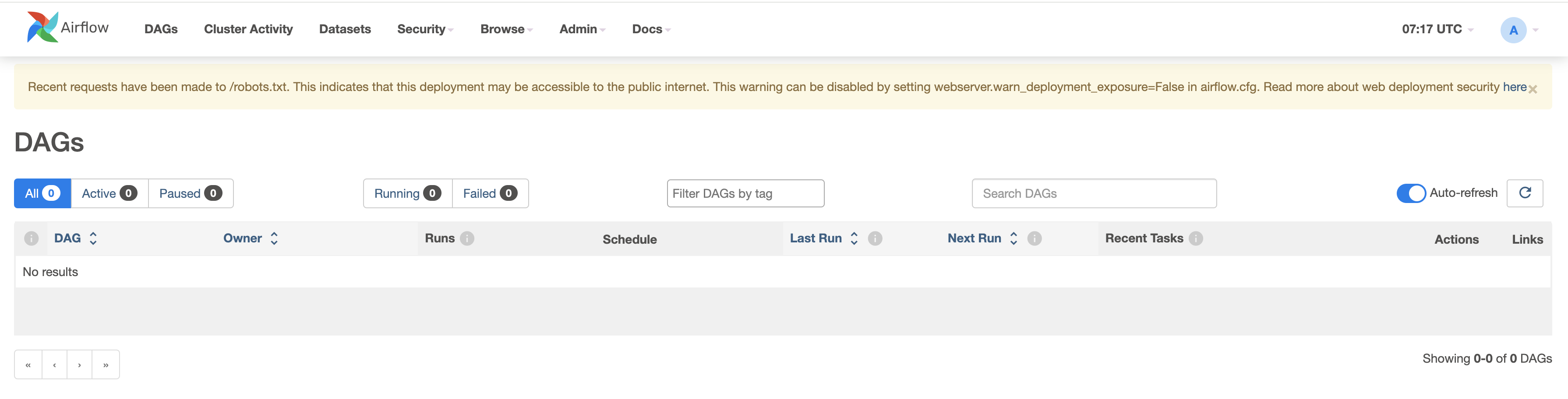

Verify the existing DAGs by navigating to the DAGs tab.

Configure your DAGs

Complete the following steps:

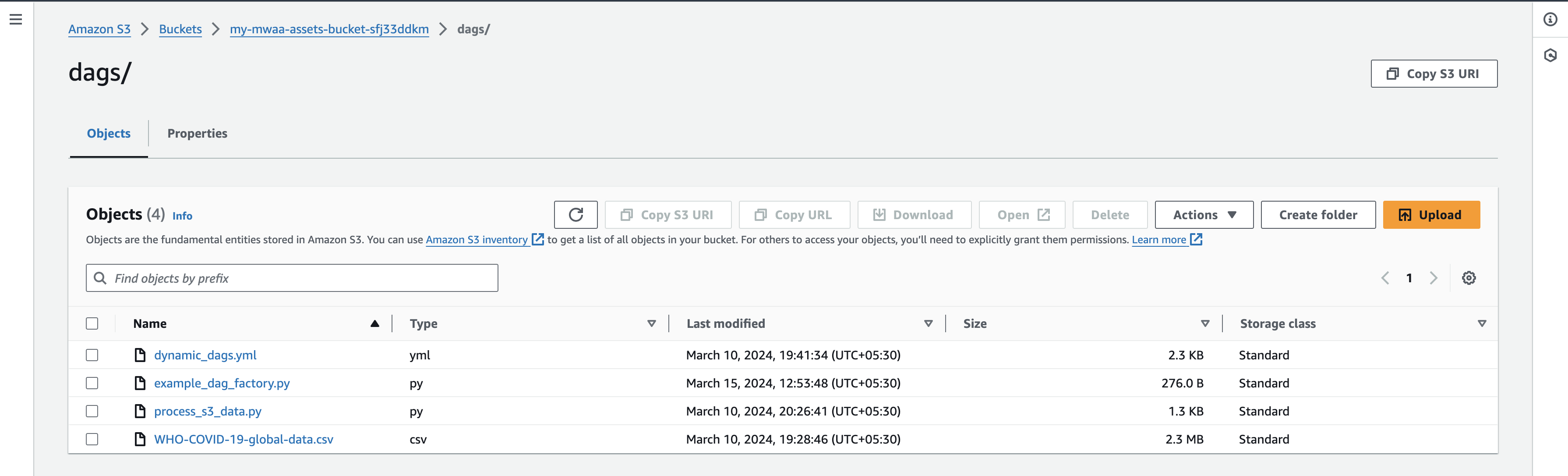

- Create empty files named `dynamic_dags.yml`, `example_dag_factory.py`, and `process_s3_data.py` on your local machine.

- Edit the `process_s3_data.py` file and save it with the following code content, then upload the file back to the `dags` folder of the Amazon S3 bucket. The code does some basic data processing:
  - Read the file from an Amazon S3 location.
  - Rename the `Country_code` column as appropriate for the country.
  - Filter the data by the given country.
  - Write the final processed data in CSV format and upload it back to the S3 prefix.

- Edit `dynamic_dags.yml` and save it with the following code content, then upload the file back to the `dags` folder. We define multiple DAGs based on the country as follows:
  - Define the default arguments that are passed to all DAGs.
  - Create a DAG definition for each country by passing `op_args`.
  - Map the `process_s3_data` function with `python_callable_name`.
  - Use the Python operator to process the CSV file data stored in the Amazon S3 bucket.
  - We have set `schedule_interval` to 10 minutes, but feel free to adjust this value as needed.

- Edit the `example_dag_factory.py` file and save it with the following code content, then upload the file back to the `dags` folder. The code cleans up the existing DAGs using the `clean_dags()` method and creates new DAGs using the `generate_dags()` method of the `DagFactory` instance.

- After you upload the files, go back to the Airflow UI console and navigate to the DAGs tab, where you will find the new DAGs.

You can enable the DAGs by making them active and testing them individually. On activation, an additional CSV file named count_death_{COUNTRY_CODE}.csv is generated in the dags folder.
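The per-country DAGs differ only in the country code passed through `op_args`. Because of this, `dynamic_dags.yml` can be generated from a list of country codes instead of being edited by hand as the list grows. The sketch below renders such a file as text. It assumes dag-factory's key names (`default`, `default_args`, `schedule_interval`, `tasks`, `operator`, `python_callable_name`, `python_callable_file`, `op_args`) and MWAA's local DAGs path `/usr/local/airflow/dags`. The DAG ids, task name, owner, and `start_date` are hypothetical. Verify the keys against the dag-factory version you install.

```python
DAGS_FOLDER = "/usr/local/airflow/dags"  # assumption: MWAA's local DAGs path


def render_dynamic_dags_yml(country_codes, start_date="2024-01-01"):
    """Return dynamic_dags.yml text: one shared `default` block plus one DAG per country.

    Key names are assumed dag-factory conventions; DAG ids and start_date are hypothetical.
    """
    lines = [
        "default:",
        "  default_args:",
        "    owner: airflow",
        f"    start_date: {start_date}",
        "  schedule_interval: '*/10 * * * *'  # every 10 minutes",
        "  catchup: false",
        "",
    ]
    for code in country_codes:
        lines += [
            f"covid_death_count_{code.lower()}:",  # hypothetical DAG id
            "  tasks:",
            "    process_data:",
            "      operator: airflow.operators.python.PythonOperator",
            "      python_callable_name: process_s3_data",
            f"      python_callable_file: {DAGS_FOLDER}/process_s3_data.py",
            "      op_args:",
            f'        - "{code}"',  # quoted so YAML 1.1 loaders don't read NO as false
            "",
        ]
    return "\n".join(lines)


print(render_dynamic_dags_yml(["IN", "US"]))
```

The country codes are quoted on purpose. YAML 1.1 loaders such as PyYAML resolve a bare `NO` (Norway) to the boolean `false`, which would silently break that country's DAG.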

Clean up

Using the AWS services discussed in this post can incur costs. To avoid future charges, delete the Amazon MWAA environment after you complete the tasks outlined in this post, and then empty and delete the S3 bucket.

Conclusion

In this blog post, we showed how to use the dag-factory library to create dynamic DAGs. Dynamic DAGs can produce different results each time the DAG file is parsed, based on configuration. Consider using dynamic DAGs in the following scenarios:

- Automating migration from a legacy system to Airflow, where flexibility in DAG generation is crucial

- Situations where only a single parameter changes between different DAGs, which streamlines workflow management

- Managing DAGs that depend on the evolving structure of a source system, which makes them more adaptable to changes

- Establishing standard practices for DAGs across your team or organization by creating these blueprints, which promotes consistency and efficiency

- Preferring YAML-based declarations over complex Python code, which simplifies DAG configuration and maintenance

- Creating data-driven workflows that adapt and evolve based on the data inputs, which enables efficient automation

By incorporating dynamic DAGs into your workflow, you can improve automation, adaptability, and standardization, and ultimately make your data pipeline management more efficient and effective.

To learn more about Amazon MWAA DAG Factory, visit Amazon MWAA for Analytics Workshop: DAG Factory. For additional details and code examples on Amazon MWAA, visit the Amazon MWAA User Guide and the Amazon MWAA examples GitHub repository.

About the Authors

Jayesh Shinde is a Sr. Application Architect with AWS ProServe India. He specializes in creating various cloud-based solutions using modern software development practices such as serverless, DevOps, and analytics.

Harshd Yeola is a Sr. Cloud Architect with AWS ProServe India, helping customers migrate and modernize their infrastructure on AWS. He specializes in building DevSecOps and scalable infrastructure using containers, AIOps, and AWS Developer Tools and services.

Source: https://aws.amazon.com/blogs/big-data/dynamic-dag-generation-with-yaml-and-dag-factory-in-amazon-mwaa/