Today, customers in every industry (financial services, healthcare and life sciences, travel and hospitality, media and entertainment, telecommunications, software as a service (SaaS), and even proprietary model providers) are using large language models (LLMs) to build applications such as question and answering (QnA) chatbots, search engines, and knowledge bases. These generative AI applications are not only used to automate existing business processes, but can also transform the experience of the customers who use them. With the advances being made in LLMs like Mixtral-8x7B Instruct, which derives from architectures such as the mixture of experts (MoE), customers are continuously looking for ways to improve the performance and accuracy of generative AI applications while making effective use of a wider range of closed and open source models.

Several techniques are commonly used to improve the accuracy and performance of an LLM's output, such as fine-tuning with parameter efficient fine-tuning (PEFT), reinforcement learning from human feedback (RLHF), and knowledge distillation. However, when building generative AI applications, you can use an alternative that dynamically incorporates external knowledge and lets you control the information used for generation without fine-tuning your existing foundation model. This is where Retrieval Augmented Generation (RAG) comes in, as a cheaper option for generative AI applications than the more expensive and robust fine-tuning alternatives just mentioned. If you're implementing complex RAG applications in your daily work, you may encounter common challenges such as inaccurate retrieval, increasing size and complexity of documents, and context overflow, all of which can significantly affect the quality and reliability of generated answers.

This post discusses RAG patterns that improve response accuracy using LangChain and tools such as the parent document retriever, along with techniques like contextual compression, so that developers can improve their existing generative AI applications.

Solution overview

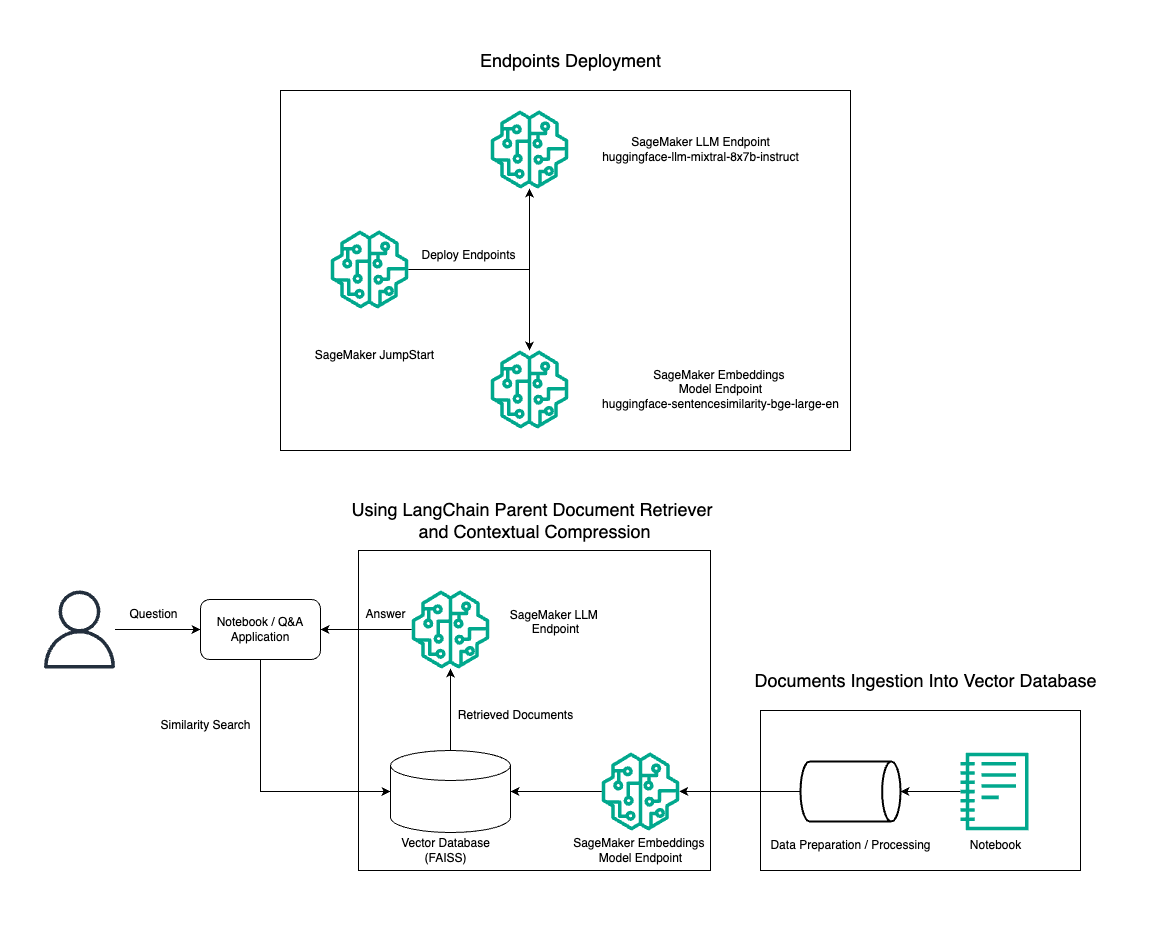

In this post, we demonstrate the use of Mixtral-8x7B Instruct text generation combined with the BGE Large En embedding model to efficiently build a RAG QnA system on an Amazon SageMaker notebook using the parent document retriever tool and the contextual compression technique. The diagram above illustrates the architecture of this solution.

You can deploy this solution with just a few clicks using Amazon SageMaker JumpStart, a fully managed platform that offers state-of-the-art foundation models for use cases such as content writing, code generation, question answering, copywriting, summarization, classification, and information retrieval. It provides a collection of pre-trained models that you can deploy quickly and easily, accelerating the development and deployment of machine learning (ML) applications. One of the key components of SageMaker JumpStart is the Model Hub, which offers a vast catalog of pre-trained models, such as Mixtral-8x7B, for a variety of tasks.

Mixtral-8x7B uses an MoE architecture. This architecture allows different parts of a neural network to specialize in different tasks, effectively dividing the workload among multiple experts. This approach enables efficient training and deployment of larger models compared to traditional architectures.

One of the main advantages of the MoE architecture is its scalability. By distributing the workload across multiple experts, MoE models can be trained on larger datasets and achieve better performance than traditional models of the same size. Additionally, MoE models can be more efficient during inference because only a subset of the experts needs to be activated for a given input.
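To make the "only a subset of experts is activated" point concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a toy under stated assumptions: the eight experts are simple multipliers, the router scores are hypothetical numbers rather than learned values, and `k=2` mirrors the two-experts-per-token routing that Mixtral-8x7B uses. It is not the model's actual implementation.

```python
import math

def softmax(scores):
    """Turn raw router scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k=2):
    """Return {expert_index: weight} for the k highest-scoring experts."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}

def moe_layer(x, experts, router_scores, k=2):
    """Run only the selected experts and mix their outputs by router weight."""
    weights = route_top_k(router_scores, k)
    mixed = sum(w * experts[i](x) for i, w in weights.items())
    return mixed, sorted(weights)

# Eight toy "experts": expert i multiplies its input by (i + 1).
experts = [lambda x, m=i + 1: m * x for i in range(8)]
# Hypothetical router scores for one token; a real router learns these.
scores = [0.1, 2.0, -1.0, 0.3, 1.5, 0.0, -0.5, 0.2]

output, active = moe_layer(1.0, experts, scores)
print(active)  # indices of the experts that actually ran
```

Six of the eight experts are never called for this input, which is where the inference savings come from.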

For more information on Mixtral-8x7B Instruct on AWS, refer to Mixtral-8x7B is now available in Amazon SageMaker JumpStart. The Mixtral-8x7B model is available under the permissive Apache 2.0 license, which allows both commercial and non-commercial use.

In this post, we discuss how you can use LangChain to create effective and more efficient RAG applications. LangChain is an open source Python library designed for building applications with LLMs. It provides a modular and flexible framework for combining LLMs with other components, such as knowledge bases, retrieval systems, and other AI tools, to create powerful and customizable applications.

We walk through building a RAG pipeline on SageMaker with Mixtral-8x7B. We use the Mixtral-8x7B Instruct text generation model with the BGE Large En embedding model to create an efficient QnA system using RAG on a SageMaker notebook. We use an ml.t3.medium notebook instance to demonstrate deploying LLMs through SageMaker JumpStart; the deployed models are accessed through a SageMaker-generated API endpoint. This setup allows for exploration, experimentation, and optimization of advanced RAG techniques with LangChain. We also illustrate the integration of the FAISS embedding store into the RAG workflow, highlighting its role in storing and retrieving embeddings to improve the system's performance.

We give a brief walkthrough of the SageMaker notebook. For more detailed, step-by-step instructions, refer to the Advanced RAG Patterns with Mixtral on SageMaker Jumpstart GitHub repo.

The need for advanced RAG patterns

Advanced RAG patterns are essential for improving on the current capabilities of LLMs in processing, understanding, and generating human-like text. As the size and complexity of documents increase, representing multiple facets of a document in a single embedding can lead to a loss of specificity. Although it's essential to capture the general essence of a document, it's equally important to recognize and represent the varied sub-contexts within it. This is a challenge you often face when working with larger documents. Another challenge with RAG is that, at ingestion time, you don't know the specific queries that your document storage system will have to handle. This can lead to the information most relevant to a query being buried under surrounding text (context overflow). To mitigate these failures and improve on the existing RAG architecture, you can use advanced RAG patterns (parent document retriever and contextual compression) to reduce retrieval errors, enhance answer quality, and handle complex questions.
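The loss of specificity from a single embedding can be illustrated with a toy example. The sketch below assumes a bag-of-words count vector as a stand-in for a real embedding model such as BGE Large En, and the three sentences are made-up sample data. It compares how well a query matches one embedding of the whole document versus separate embeddings of each section.

```python
import math
from collections import Counter

def embed(text):
    """Toy embedding: a bag-of-words count vector (an assumption, not BGE)."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Made-up sections of one long document.
sections = [
    "aws launched ec2 in 2006 with one instance size",
    "the fulfillment network expanded to cut delivery times",
    "kindle and alexa opened new device categories",
]
query = embed("when was ec2 launched")

# One embedding for the whole document versus one embedding per section.
whole_doc_score = cosine(query, embed(" ".join(sections)))
section_scores = [cosine(query, embed(s)) for s in sections]
best_section = max(range(len(sections)), key=lambda i: section_scores[i])

print(round(whole_doc_score, 3), [round(s, 3) for s in section_scores])
```

The relevant section scores noticeably higher on its own than the document does as a whole, because the unrelated sections dilute the single document-level vector.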

With the techniques discussed in this post, you can address key challenges associated with external knowledge retrieval and integration, enabling your application to deliver more precise and contextually aware responses.

In the following sections, we explore how parent document retrievers and contextual compression can help you deal with some of the problems we've discussed.

Parent document retriever

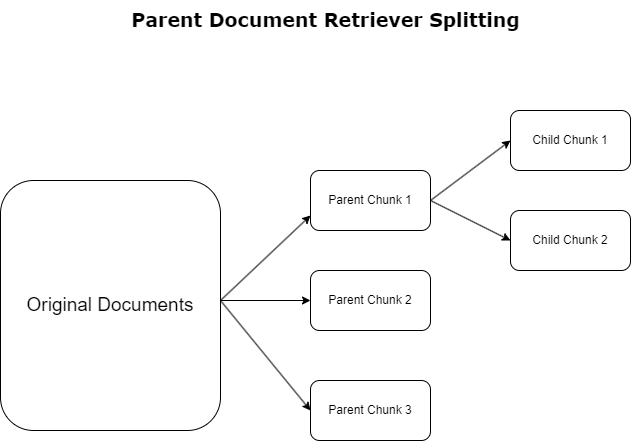

In the previous section, we highlighted challenges that RAG applications encounter when dealing with extensive documents. To address these challenges, parent document retrievers categorize and designate incoming documents as parent documents. These documents are recognized for their comprehensive nature but aren't used directly in their original form for embeddings. Rather than compressing an entire document into a single embedding, parent document retrievers split these parent documents into child documents. Each child document captures distinct aspects or topics from the broader parent document. Once these child segments are identified, each is assigned its own embedding, capturing its specific thematic essence (see the diagram above). During retrieval, the query is matched against the child embeddings, and the parent document of each matching child is returned. This technique provides targeted yet broad-ranging search capabilities, giving the LLM a wider perspective. Parent document retrievers offer LLMs a twofold advantage: the specificity of child document embeddings for precise and relevant retrieval, coupled with the use of parent documents for response generation, which enriches the LLM's outputs with layered and thorough context.

Contextual compression

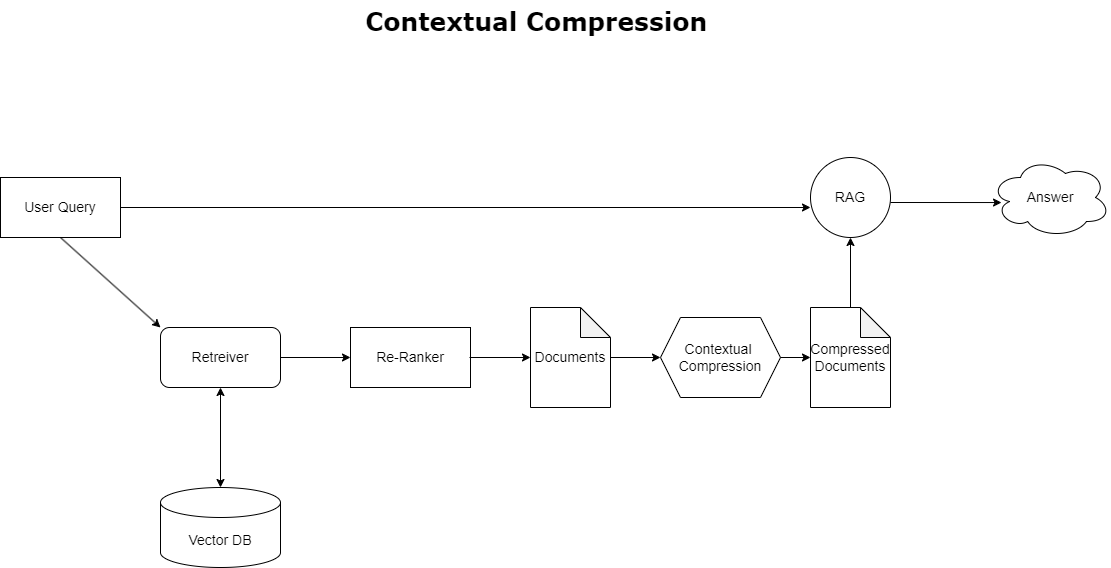

To address the issue of context overflow discussed earlier, you can use contextual compression to compress and filter the retrieved documents in line with the query's context, so that only pertinent information is kept and processed. This is achieved through a combination of a base retriever for the initial document fetch and a document compressor that refines these documents by paring down their content or excluding them entirely based on relevance, as illustrated in the diagram above. This streamlined approach, provided by the contextual compression retriever, improves RAG application efficiency by giving you a way to extract and use only what's essential from a mass of information. It tackles information overload and the processing of irrelevant data, leading to improved response quality, more cost-effective LLM operations, and a smoother overall retrieval process. In essence, it's a filter that tailors the information to the query at hand, which makes it a valuable tool for developers who want to optimize their RAG applications for better performance and user satisfaction.

Prerequisites

If you're new to SageMaker, refer to the Amazon SageMaker Development Guide.

Before you get started with the solution, create an AWS account. When you create an AWS account, you get a single sign-on (SSO) identity that has complete access to all the AWS services and resources in the account. This identity is called the AWS account root user.

Signing in to the AWS Management Console using the email address and password that you used to create the account gives you complete access to all the AWS resources in your account. We strongly recommend that you do not use the root user for everyday tasks, even administrative ones.

Instead, adhere to the security best practices in AWS Identity and Access Management (IAM), and create an administrative user and group. Then securely lock away the root user credentials and use them only for the few account and service management tasks that require them.

The Mixtral-8x7B model requires an ml.g5.48xlarge instance. SageMaker JumpStart provides a simplified way to access and deploy over 100 different open source and third-party foundation models. To launch an endpoint to host Mixtral-8x7B from SageMaker JumpStart, you may need to request a service quota increase for ml.g5.48xlarge instances for endpoint usage. You can request service quota increases through the console, the AWS Command Line Interface (AWS CLI), or the API to gain access to those additional resources.

Set up a SageMaker notebook instance and install dependencies

To get started, create a SageMaker notebook instance and install the required dependencies. Refer to the GitHub repo to ensure a successful setup. After you set up the notebook instance, you can deploy the model.

You can also run the notebook locally in your preferred integrated development environment (IDE). Make sure that you have JupyterLab installed.

Deploy the model

Deploy the Mixtral-8X7B Instruct LLM model on SageMaker JumpStart:

Deploy the BGE Large En embedding model on SageMaker JumpStart:
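A minimal sketch of both deployments, assuming the SageMaker Python SDK's `JumpStartModel` class. The two model IDs are assumptions, so confirm them in the SageMaker JumpStart Model Hub before use. The `model_cls` parameter is a hypothetical hook added here only so the function can be dry-run without AWS access.

```python
# Model IDs are assumptions; confirm them in the SageMaker JumpStart Model Hub.
LLM_MODEL_ID = "huggingface-llm-mixtral-8x7b-instruct"
EMBEDDING_MODEL_ID = "huggingface-sentencesimilarity-bge-large-en"

def deploy_jumpstart_model(model_id, model_cls=None):
    """Deploy a JumpStart model to a real-time endpoint and return its predictor.

    model_cls is a hypothetical hook for dry runs; by default the SageMaker
    Python SDK's JumpStartModel class is used.
    """
    if model_cls is None:
        # Imported lazily so the sketch can be read without the SDK installed.
        from sagemaker.jumpstart.model import JumpStartModel
        model_cls = JumpStartModel
    model = model_cls(model_id=model_id)
    return model.deploy()  # creates a billable endpoint

# In the notebook (requires AWS credentials and sufficient service quota):
# llm_predictor = deploy_jumpstart_model(LLM_MODEL_ID)
# embedding_predictor = deploy_jumpstart_model(EMBEDDING_MODEL_ID)
```

Each call creates an endpoint that accrues charges until it is deleted, so keep the returned predictors for the cleanup step.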

Set up LangChain

After importing all the necessary libraries and deploying the Mixtral-8x7B model and the BGE Large En embedding model, you can set up LangChain. For step-by-step instructions, refer to the GitHub repo.

Data preparation

In this post, we use several years of Amazon's Letters to Shareholders as the text corpus to perform QnA on. For more detailed steps to prepare the data, refer to the GitHub repo.

Question answering

Once the data is prepared, you can use the wrapper provided by LangChain, which wraps around the vector store and takes input for the LLM. This wrapper performs the following steps:

- Take the input question.

- Create a question embedding.

- Fetch the relevant documents.

- Incorporate the documents and the question into a prompt.

- Invoke the model with the prompt and generate the answer in a readable format.
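The five steps above can be sketched end to end in plain Python. This is a toy, not the LangChain wrapper itself: `embed` is a bag-of-words stand-in for the BGE Large En endpoint, `fake_llm` is a stand-in for the Mixtral endpoint, the in-memory list replaces the FAISS store, and the two documents are made-up sample data.

```python
import math
from collections import Counter

def embed(text):
    """Toy embedding (bag of words); the post uses BGE Large En instead."""
    return Counter(text.lower().replace("?", "").split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def fake_llm(prompt):
    """Stand-in for the Mixtral endpoint: echoes the first context line."""
    context = prompt.split("Context:\n", 1)[1].split("\n\nQuestion:", 1)[0]
    return context.splitlines()[0]

def answer(question, index, k=1, llm=fake_llm):
    query_vector = embed(question)                      # steps 1-2: take and embed the question
    ranked = sorted(index, key=lambda item: cosine(query_vector, item[0]), reverse=True)
    documents = [text for _, text in ranked[:k]]        # step 3: fetch relevant documents
    prompt = "Context:\n" + "\n".join(documents) + "\n\nQuestion: " + question  # step 4
    return llm(prompt).strip()                          # step 5: invoke the model

# Made-up corpus; documents are embedded once, at indexing time.
corpus = ["EC2 launched in 2006.", "Kindle is a digital reading device."]
index = [(embed(text), text) for text in corpus]

print(answer("When was EC2 launched?", index))
```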

Now that the vector store is in place, you can start asking questions:

Regular retriever chain

In the preceding scenario, we explored the quick and straightforward way to get a context-aware answer to your question. Now let's look at a more customizable option with the help of RetrievalQA, where you can customize how the fetched documents are added to the prompt using the chain_type parameter. To control how many relevant documents are retrieved, you can change the retriever's k parameter and compare the outputs. In many scenarios, you might also want to know which source documents the LLM used to generate the answer. You can get those documents in the output using return_source_documents, which returns the documents that were added to the context of the LLM prompt. RetrievalQA also lets you provide a custom prompt template that can be specific to the model.
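To show what these options control, the following sketch mimics a "stuff"-style chain in plain Python: the top k documents are concatenated into a single prompt and also returned as sources. The template is a hypothetical example built around Mixtral's `[INST] ... [/INST]` instruction markers, and `build_stuff_prompt` is an illustrative helper, not a LangChain function.

```python
# Hypothetical template using Mixtral's [INST] ... [/INST] instruction markers.
PROMPT_TEMPLATE = (
    "<s>[INST] Use the context to answer the question. "
    "If the answer is not in the context, say you don't know.\n\n"
    "{context}\n\nQuestion: {question} [/INST]"
)

def build_stuff_prompt(question, ranked_documents, k=3):
    """'Stuff' the top-k documents into one prompt and keep them as sources."""
    source_documents = ranked_documents[:k]
    prompt = PROMPT_TEMPLATE.format(
        context="\n\n".join(source_documents), question=question
    )
    return {"prompt": prompt, "source_documents": source_documents}

ranked = ["doc A", "doc B", "doc C", "doc D"]  # assumed already sorted by relevance
result = build_stuff_prompt("How did AWS evolve?", ranked, k=2)
print(result["prompt"])
```

Raising k gives the model more context at the cost of a longer, more expensive prompt, which is the trade-off the later sections address.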

Let's ask a question:

Parent document retriever chain

Let's look at a more advanced RAG option with the help of ParentDocumentRetriever. When working with document retrieval, you may encounter a trade-off between storing small chunks of a document for accurate embeddings and storing larger documents to preserve more context. The parent document retriever strikes that balance by splitting documents and storing small chunks of data.

We use a parent_splitter to divide the original documents into larger chunks called parent documents, and a child_splitter to create smaller child documents from the original documents.

The child documents are then indexed in a vector store using embeddings. This enables efficient retrieval of relevant child documents based on similarity. To retrieve relevant information, the parent document retriever first fetches the child documents from the vector store. It then looks up the parent IDs for those child documents and returns the corresponding larger parent documents.
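The split, index, and lookup flow can be sketched with a toy retriever in plain Python. Everything here is an assumption made for illustration: the word-count splitter stands in for the parent_splitter and child_splitter, shared-word counting stands in for embedding similarity, and the document is made-up sample text. It shows the mechanism, not the ParentDocumentRetriever API.

```python
def split(text, chunk_words):
    """Naive splitter: fixed number of words per chunk."""
    words = text.split()
    return [" ".join(words[i:i + chunk_words]) for i in range(0, len(words), chunk_words)]

def build_index(documents, parent_words=12, child_words=4):
    """Return (parents by ID, list of (child_text, parent_id) pairs)."""
    parents, children = {}, []
    for document in documents:
        for parent in split(document, parent_words):
            parent_id = len(parents)
            parents[parent_id] = parent
            children += [(child, parent_id) for child in split(parent, child_words)]
    return parents, children

def score(query, text):
    """Toy similarity: number of shared lowercase words (stand-in for embeddings)."""
    return len(set(query.lower().split()) & set(text.lower().split()))

def retrieve_parents(query, parents, children, k=1):
    """Rank child chunks, then return their de-duplicated parent chunks."""
    ranked = sorted(children, key=lambda child: score(query, child[0]), reverse=True)
    parent_ids = []
    for _, parent_id in ranked[:k]:
        if parent_id not in parent_ids:
            parent_ids.append(parent_id)
    return [parents[i] for i in parent_ids]

# Made-up sample document.
document = (
    "EC2 launched in 2006 with one instance size in one region. Monitoring "
    "and load balancing came later. Kindle and Alexa opened new device categories."
)
parents, children = build_index([document])
result = retrieve_parents("when was ec2 launched", parents, children)
print(result)
```

The query matches only the four-word child chunk "EC2 launched in 2006", but the caller receives the whole twelve-word parent chunk, so the surrounding detail reaches the LLM as context.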

Let's ask a question:

Contextual compression chain

Let's look at another advanced RAG option called contextual compression. One challenge with retrieval is that you usually don't know the specific queries your document storage system will face when you ingest data into it. This means that the information most relevant to a query may be buried in a document with a lot of irrelevant text. Passing that full document through your application can lead to more expensive LLM calls and poorer responses.

The contextual compression retriever addresses the challenge of retrieving relevant information from a document storage system in which the pertinent data may be buried within documents containing a lot of text. By compressing and filtering the retrieved documents based on the given query context, it returns only the most relevant information.

To use the contextual compression retriever, you need:

- A base retriever: the initial retriever that fetches documents from the storage system based on the query

- A document compressor: the component that takes the initially retrieved documents and shortens them, either by reducing the contents of individual documents or by dropping irrelevant documents altogether, using the query context to determine relevance

Adding contextual compression with an LLM chain extractor

First, wrap your base retriever with a ContextualCompressionRetriever. You add an LLMChainExtractor, which iterates over the initially returned documents and extracts from each only the content that is relevant to the query.

Initialize the chain using the ContextualCompressionRetriever with an LLMChainExtractor, and pass the prompt in through the chain_type_kwargs argument.
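As a rough illustration of what an extractor-style compressor does, the sketch below trims each retrieved document down to its relevant sentences. It is a toy under stated assumptions: a keyword match stands in for the LLM call that LLMChainExtractor makes, `extract` is a hypothetical helper rather than a LangChain function, and the documents are made-up sample data.

```python
import re

def relevant(query, text):
    """Toy relevance test: the text shares a word of 3+ characters with the query."""
    keywords = {w for w in re.findall(r"[a-z0-9]+", query.lower()) if len(w) >= 3}
    return bool(keywords & set(re.findall(r"[a-z0-9]+", text.lower())))

def extract(query, documents):
    """Keep only the relevant sentences of each document; drop empty documents."""
    compressed = []
    for document in documents:
        sentences = re.split(r"(?<=[.!?])\s+", document)
        kept = [s for s in sentences if relevant(query, s)]
        if kept:
            compressed.append(" ".join(kept))
    return compressed

retrieved = [  # pretend output of the base retriever
    "EC2 launched in 2006. The letter also thanks employees.",
    "Kindle is a reading device.",
]
extracted = extract("When was EC2 launched?", retrieved)
print(extracted)
```

The first document is shortened to its one relevant sentence and the second is dropped, so the prompt that reaches the LLM is smaller and more focused.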

Let's ask a question:

Filtering documents with an LLM chain filter

The LLMChainFilter is a slightly simpler but more robust compressor that uses an LLM chain to decide which of the initially retrieved documents to filter out and which to return, without manipulating the document contents.

Initialize the chain using the ContextualCompressionRetriever with an LLMChainFilter, and pass the prompt in through the chain_type_kwargs argument.
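The difference from the extractor is that a filter keeps or drops whole documents and never edits them. The following toy sketch makes that visible; it assumes a word-overlap threshold as a stand-in for the LLM's relevance decision, and `filter_documents` is a hypothetical helper, not part of LangChain.

```python
def overlap(query, document):
    """Toy relevance score: fraction of query words found in the document."""
    query_words = set(query.lower().replace("?", "").split())
    document_words = set(document.lower().replace(".", "").split())
    return len(query_words & document_words) / len(query_words)

def filter_documents(query, documents, threshold=0.5):
    """Return relevant documents unchanged; drop the rest entirely."""
    return [d for d in documents if overlap(query, d) >= threshold]

retrieved = [  # pretend output of the base retriever
    "EC2 launched in 2006. The letter also thanks employees.",
    "Kindle is a reading device.",
]
kept = filter_documents("When was EC2 launched?", retrieved)
print(kept)
```

The surviving document still contains its off-topic second sentence, which is the behavior the text describes: documents are filtered, not rewritten.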

Let's ask a question:

Compare results

The following table compares the results from different queries by technique.

| Technique | Query 1: How did AWS evolve? | Query 2: Why is Amazon successful? | Comparison |
| --- | --- | --- | --- |
| Regular Retriever Chain Output | AWS (Amazon Web Services) evolved from an initially unprofitable investment to an $85B annual revenue run rate business with strong profitability, offering a wide range of services and features, and becoming a significant part of Amazon's portfolio. Despite facing skepticism and short-term headwinds, AWS continued to innovate, attract new customers, and migrate active customers, offering benefits such as agility, innovation, cost-efficiency, and security. AWS also expanded its long-term investments, including chip development, to provide new capabilities and change what's possible for its customers. | Amazon is successful due to its continuous innovation and expansion into new areas such as technology infrastructure services, digital reading devices, voice-driven personal assistants, and new business models like the third-party marketplace. Its ability to scale operations quickly, as seen in the rapid expansion of its fulfillment and transportation networks, also contributes to its success. Additionally, Amazon's focus on optimization and efficiency gains in its processes has resulted in productivity improvements and cost reductions. The example of Amazon Business highlights the company's ability to leverage its e-commerce and logistics strengths in different sectors. | Based on the responses from the regular retriever chain, we notice that although it provides long answers, it suffers from context overflow and fails to mention any significant details from the corpus when responding to the query. The regular retriever chain is not able to capture the nuances with depth or contextual insight, potentially missing critical aspects of the document. |
| Parent Document Retriever Output | AWS (Amazon Web Services) started with a feature-poor initial launch of the Elastic Compute Cloud (EC2) service in 2006, providing only one instance size, in one data center, in one region of the world, with Linux operating system instances only, and without many key features like monitoring, load balancing, auto-scaling, or persistent storage. However, AWS's success allowed them to quickly iterate and add the missing capabilities, eventually expanding to offer various flavors, sizes, and optimizations of compute, storage, and networking, and developing their own chips (Graviton) to push price and performance further. AWS's iterative innovation process required significant investments in financial and people resources over 20 years, often well in advance of when it would pay out, to meet customer needs and improve long-term customer experiences, loyalty, and returns for shareholders. | Amazon is successful due to its ability to constantly innovate, adapt to changing market conditions, and meet customer needs in various market segments. This is evident in the success of Amazon Business, which has grown to drive roughly $35B in annualized gross sales by delivering selection, value, and convenience to business customers. Amazon's investments in ecommerce and logistics capabilities have also enabled the creation of services like Buy with Prime, which helps merchants with direct-to-consumer websites drive conversion from views to purchases. | The parent document retriever delves deeper into the specifics of AWS's growth strategy, including the iterative process of adding new features based on customer feedback and the detailed journey from a feature-poor initial launch to a dominant market position, while providing a context-rich response. Responses cover a wide range of aspects, from technical innovations and market strategy to organizational efficiency and customer focus, providing a holistic view of the factors contributing to success along with examples. This can be attributed to the parent document retriever's targeted yet broad-ranging search capabilities. |
| LLM Chain Extractor: Contextual Compression Output | AWS evolved by starting as a small project inside Amazon, requiring significant capital investment and facing skepticism from both inside and outside the company. However, AWS had a head start on potential competitors and believed in the value it could bring to customers and Amazon. AWS made a long-term commitment to continue investing, resulting in over 3,300 new features and services launched in 2022. AWS has transformed how customers manage their technology infrastructure and has become an $85B annual revenue run rate business with strong profitability. AWS has also continuously improved its offerings, such as enhancing EC2 with additional features and services after its initial launch. | Based on the provided context, Amazon's success can be attributed to its strategic expansion from a book-selling platform to a global marketplace with a vibrant third-party seller ecosystem, early investment in AWS, innovation in introducing the Kindle and Alexa, and substantial growth in annual revenue from 2019 to 2022. This growth led to the expansion of the fulfillment center footprint, creation of a last-mile transportation network, and building a new sortation center network, which were optimized for productivity and cost reductions. | The LLM chain extractor maintains a balance between covering key points comprehensively and avoiding unnecessary depth. It dynamically adjusts to the query's context, so the output is directly relevant and comprehensive. |
| LLM Chain Filter: Contextual Compression Output | AWS (Amazon Web Services) evolved by initially launching feature-poor but iterating quickly based on customer feedback to add necessary capabilities. This approach allowed AWS to launch EC2 in 2006 with limited features and then continuously add new functionalities, such as additional instance sizes, data centers, regions, operating system options, monitoring tools, load balancing, auto-scaling, and persistent storage. Over time, AWS transformed from a feature-poor service to a multi-billion-dollar business by focusing on customer needs, agility, innovation, cost-efficiency, and security. AWS now has an $85B annual revenue run rate and offers over 3,300 new features and services each year, catering to a wide range of customers from start-ups to multinational companies and public sector organizations. | Amazon is successful due to its innovative business models, continuous technological advancements, and strategic organizational changes. The company has consistently disrupted traditional industries by introducing new ideas, such as an ecommerce platform for various products and services, a third-party marketplace, cloud infrastructure services (AWS), the Kindle e-reader, and the Alexa voice-driven personal assistant. Additionally, Amazon has made structural changes to improve its efficiency, such as reorganizing its US fulfillment network to decrease costs and delivery times, further contributing to its success. | Similar to the LLM chain extractor, the LLM chain filter makes sure that although the key points are covered, the output is efficient for customers looking for concise and contextual answers. |

Comparing these techniques, we can see that in contexts like detailing AWS's transition from a simple service to a complex, multi-billion-dollar entity, or explaining Amazon's strategic successes, the regular retriever chain lacks the precision that the more sophisticated techniques offer, which leads to less targeted information. Although few differences are visible between the advanced techniques discussed, they are far more informative than the regular retriever chain.

For customers in industries such as healthcare, telecommunications, and financial services who are looking to implement RAG in their applications, the limitations of the regular retriever chain in providing precision, avoiding redundancy, and compressing information effectively make it less suited to these needs than the more advanced parent document retriever and contextual compression techniques. These techniques can distill vast amounts of information into the concentrated, impactful insights that you need, while helping improve price-performance.

Clean up

When you're done running the notebook, delete the resources you created to avoid accruing charges for resources that are still in use:
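A minimal cleanup sketch, assuming you still hold the predictor objects returned when the two endpoints were deployed. `delete_model` and `delete_endpoint` are methods on SageMaker predictors; the helper function and the variable names in the usage comment are hypothetical.

```python
def delete_endpoint_resources(*predictors):
    """Delete the model and endpoint behind each predictor; return how many were cleaned up."""
    for predictor in predictors:
        predictor.delete_model()
        predictor.delete_endpoint()
    return len(predictors)

# In the notebook, with the predictors returned by the deployment step
# (variable names are assumptions):
# delete_endpoint_resources(llm_predictor, embedding_predictor)
```

Afterward, it is worth confirming in the SageMaker console that no endpoints remain in service.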

Conclusion

In this post, we presented a solution that lets you implement the parent document retriever and contextual compression chain techniques to enhance the ability of LLMs to process and generate information. We tested these advanced RAG techniques with the Mixtral-8x7B Instruct and BGE Large En models available through SageMaker JumpStart. We also explored using persistent storage for embeddings and document chunks, and integration with enterprise data stores.

The techniques we used not only refine the way LLMs access and incorporate external knowledge, but also improve the quality, relevance, and efficiency of their outputs. By combining retrieval from large text corpora with language generation capabilities, these advanced RAG techniques enable LLMs to produce more factual, coherent, and context-appropriate responses, improving their performance across a variety of natural language processing tasks.

SageMaker JumpStart is at the center of this solution. With SageMaker JumpStart, you gain access to an extensive assortment of open and closed source models, which streamlines getting started with ML and enables rapid experimentation and deployment. To get started deploying this solution, navigate to the notebook in the GitHub repo.

About the authors

Niithiyn Vijeaswaran is a Solutions Architect at AWS. His area of focus is generative AI and AWS AI Accelerators. He holds a bachelor's degree in Computer Science and Bioinformatics. Niithiyn works closely with the Generative AI GTM team to enable AWS customers on multiple fronts and accelerate their adoption of generative AI. He's an avid fan of the Dallas Mavericks and enjoys collecting sneakers.

Sebastian Bustillo is a Solutions Architect at AWS. He focuses on AI/ML technologies with a deep passion for generative AI and compute accelerators. At AWS, he helps customers unlock business value through generative AI. When he's not at work, he enjoys brewing a perfect cup of specialty coffee and exploring the world with his wife.

Armando Diaz is a Solutions Architect at AWS. He focuses on generative AI, AI/ML, and data analytics. At AWS, Armando helps customers integrate cutting-edge generative AI capabilities into their systems, fostering innovation and competitive advantage. When he's not at work, he enjoys spending time with his wife and family, hiking, and traveling the world.

Dr. Farooq Sabir is a Senior Artificial Intelligence and Machine Learning Specialist Solutions Architect at AWS. He holds PhD and MS degrees in Electrical Engineering from the University of Texas at Austin and an MS in Computer Science from Georgia Institute of Technology. He has over 15 years of work experience and also likes to teach and mentor college students. At AWS, he helps customers formulate and solve their business problems in data science, machine learning, computer vision, artificial intelligence, numerical optimization, and related domains. Based in Dallas, Texas, he and his family love to travel and go on long road trips.

Marco Punio is a Solutions Architect focused on generative AI strategy, applied AI solutions, and conducting research to help customers hyper-scale on AWS. Marco is a digital native cloud advisor with experience in the FinTech, Healthcare & Life Sciences, Software-as-a-service, and, most recently, Telecommunications industries. He is a qualified technologist with a passion for machine learning, artificial intelligence, and mergers & acquisitions. Marco is based in Seattle, WA, and enjoys writing, reading, exercising, and building applications in his free time.

AJ Dhimine is a Solutions Architect at AWS. He specializes in generative AI, serverless computing, and data analytics. He is an active member and mentor in the Machine Learning Technical Field Community and has published several scientific papers on various AI/ML topics. He works with customers, ranging from start-ups to enterprises, to develop AWSome generative AI solutions. He is particularly passionate about using large language models for advanced data analytics and exploring practical applications that address real-world challenges. Outside of work, AJ enjoys traveling; he has visited 53 countries so far, with the goal of visiting every country in the world.

- Source: https://aws.amazon.com/blogs/machine-learning/advanced-rag-patterns-on-amazon-sagemaker/