Always-sensing cameras are a relatively new method for users to interact with their smartphones, home appliances, and other consumer devices. Like always-listening audio-based Siri and Alexa, always-sensing cameras enable a seamless, more natural user experience. However, always-sensing camera subsystems require specialized processing due to the quantity and complexity of data generated.

But how can always-sensing subsystems be architected to meet the stringent power, latency, and privacy needs of the user? Despite ongoing improvements in energy storage density, next-generation devices always place increased demands on batteries. Even wall-powered devices face scrutiny, with consumers, businesses, and governments demanding lower power consumption. Latency is a huge factor as well; for the best user experience, devices must react instantly to user inputs, so always-sensing systems cannot afford to compete with other processes that add unneeded latency and slow responses. Privacy and data security are also significant concerns; always-sensing systems need to be architected to securely capture and process data from the camera without storing or exposing it.

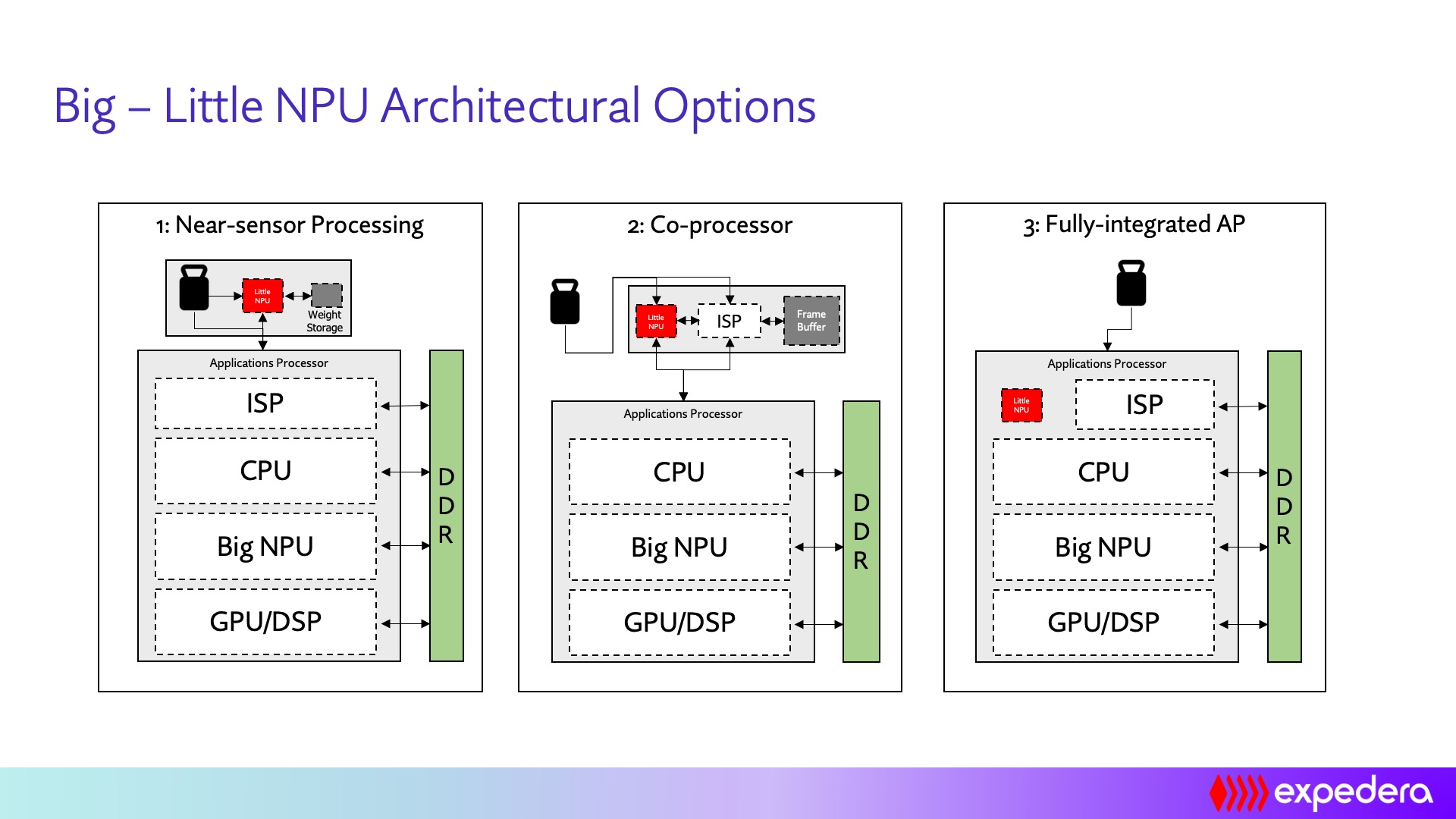

So how can always-sensing be enabled in a power-, latency-, and privacy-friendly manner? While many existing Application Processors (APs) have NPUs inside of them, those NPUs aren’t the ideal vehicle for always-sensing. A typical AP is a mix of heterogeneous computing cores, including CPUs, ISPs, GPUs/DSPs, and NPUs. Each processor is designed for specific computing tasks and potentially large processing loads. For example, a typical general-purpose NPU might provide 5-10 TOPS of performance, with a typical power efficiency of around 4 TOPS/W and about 40% utilization. However, it is inefficient because it must be somewhat overdesigned to handle worst-case workloads.

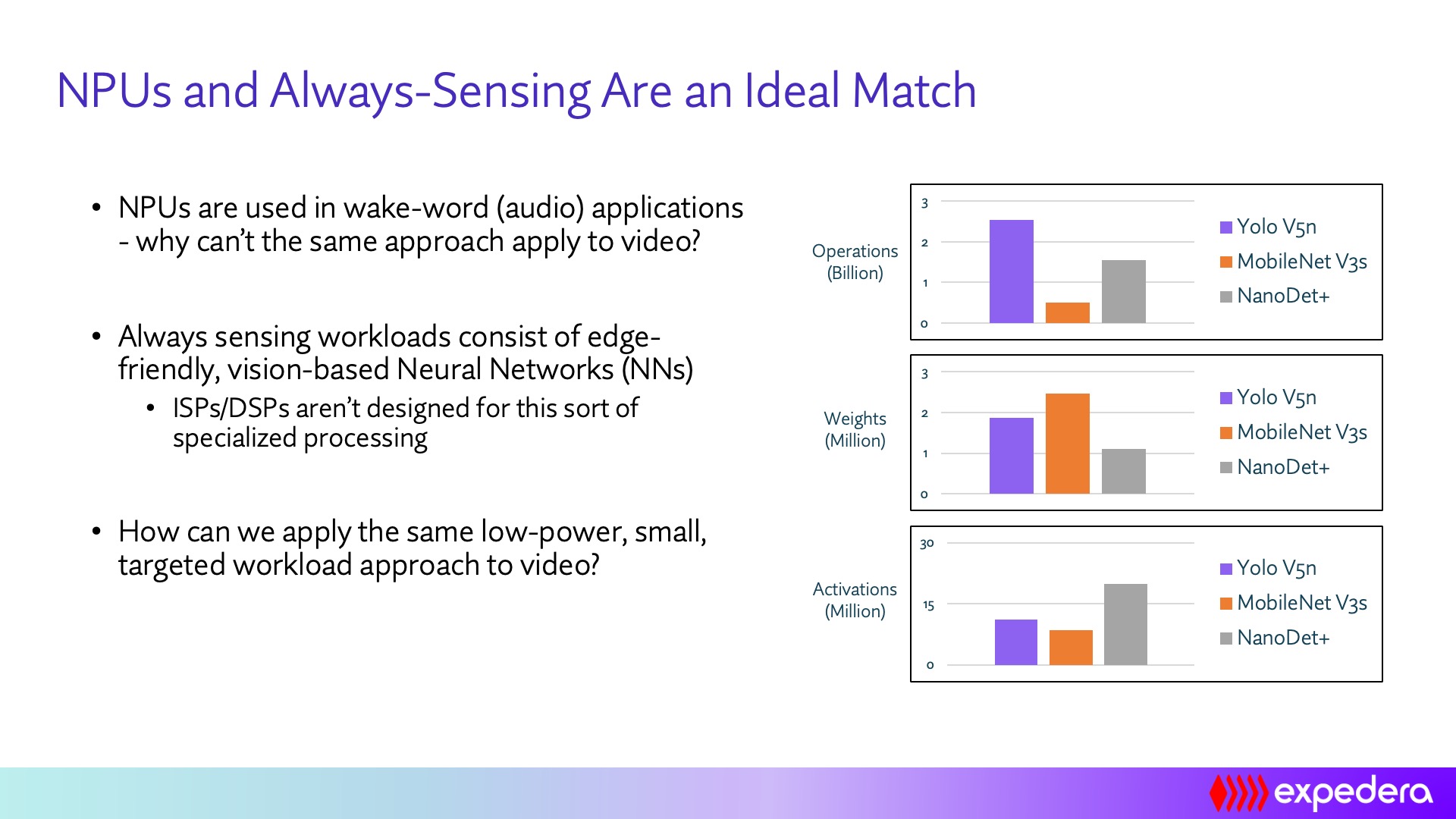

Always-sensing neural networks are specifically created to require minimal processing, typically measured in GOPS (one GOPS is one-thousandth of a TOPS). While the NPU in an existing AP is capable of always-sensing AI processing, it’s not the right choice for several reasons. First, power consumption will be significant, which is a non-starter for an always-on feature since it translates directly to reduced battery life. Second, since the AP-based NPU is typically busy with other tasks, those other processes can increase latency and negatively impact the user experience. Finally, privacy concerns essentially preclude using the application processor, because the always-sensing camera data needs to be isolated from the rest of the system and must not be stored within the device or transmitted off the device. This isolation limits the exposure of that data and reduces the chances of a nefarious party stealing it.
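To put the scale mismatch in numbers, here is a rough back-of-the-envelope sketch in Python. It uses the article's figures for a general-purpose NPU (5-10 TOPS at around 4 TOPS/W); the 50 GOPS always-sensing workload is a hypothetical value chosen purely for illustration, and the calculation makes the simplifying assumption that power at peak is just throughput divided by efficiency.

```python
# Figures quoted in the article for a general-purpose NPU inside an AP.
PEAK_TOPS_RANGE = (5.0, 10.0)   # peak throughput, in TOPS
EFFICIENCY_TOPS_PER_W = 4.0     # efficiency, in TOPS per watt

# Hypothetical always-sensing workload. This number is an assumption
# chosen for illustration; it does not come from the article.
ALWAYS_SENSING_GOPS = 50.0

GOPS_PER_TOPS = 1000.0  # 1 TOPS = 1000 GOPS


def power_at_peak_watts(peak_tops: float, tops_per_watt: float) -> float:
    """Power drawn if the NPU ran at its peak rate at the quoted efficiency."""
    return peak_tops / tops_per_watt


def fraction_of_peak(workload_gops: float, peak_tops: float) -> float:
    """Share of the NPU's peak throughput that the workload needs."""
    return (workload_gops / GOPS_PER_TOPS) / peak_tops


for peak in PEAK_TOPS_RANGE:
    watts = power_at_peak_watts(peak, EFFICIENCY_TOPS_PER_W)
    share = fraction_of_peak(ALWAYS_SENSING_GOPS, peak)
    print(f"{peak:.0f} TOPS NPU: {watts:.2f} W at peak; "
          f"a {ALWAYS_SENSING_GOPS:.0f} GOPS workload needs {share:.1%} of peak")
```

Under these assumptions, the always-sensing workload would occupy only around one percent or less of a multi-TOPS NPU, which illustrates why hardware sized for worst-case loads is a poor fit for a task measured in GOPS.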

The solution, then, is a dedicated NPU specifically designed and implemented to process always-sensing networks with an absolute minimum of area, power, and latency: the LittleNPU.

In this webinar, Expedera and SemiWiki explore how a dedicated always-sensing subsystem with a dedicated LittleNPU can address the power, latency, and privacy needs while providing an incredible user experience.

Presented by

Sharad Chole, Expedera Chief Scientist and Co-founder

About this talk

Always-sensing cameras are emerging in smartphones, home appliances, and other consumer devices, much like the always-listening Siri or Google voice assistants. Always-on technologies enable a more natural and seamless user experience, allowing such features as automatic locking and unlocking of the device or display adjustment based on the user’s gaze. However, camera data raises quality, richness, and privacy concerns that require specialized Artificial Intelligence (AI) processing, and existing system processors are ill-suited for always-sensing applications.

Without careful attention to Neural Processing Unit (NPU) design, an always-sensing sub-system will consume excessive power, suffer from excessive latency, or risk the privacy of the user, all leading to an unsatisfactory user experience. To process always-sensing data in a power, latency, and privacy-friendly manner, OEMs are turning to specialized “LittleNPU” AI processors. In this webinar, we’ll explore the architecture of always-sensing, discuss use cases, and provide tips for how OEMs, chipmakers, and system architects can successfully evaluate, specify, and deploy an NPU in an always-on camera sub-system.

Expedera provides scalable neural engine semiconductor IP that enables major improvements in performance, power, and latency while reducing cost and complexity in AI-inference applications. Third-party silicon-validated, Expedera’s solutions produce superior performance and are scalable to a wide range of applications from edge nodes and smartphones to automotive and data centers. Expedera’s Origin™ deep learning accelerator products are easily integrated, readily scalable, and can be customized to application requirements. The company is headquartered in Santa Clara, California. Visit expedera.com

Also Read:

Deep thinking on compute-in-memory in AI inference

Area-optimized AI inference for cost-sensitive applications

Ultra-efficient heterogeneous SoCs for Level 5 self-driving

CEO Interview: Da Chuang of Expedera

- Source: https://semiwiki.com/ip/expedera/329804-webinar-an-ideal-neural-processing-engine-for-always-sensing-deployments/