Account reconciliation is an important step to ensure the completeness and accuracy of financial statements. Specifically, companies must reconcile balance sheet accounts that could contain significant or material misstatements. Accountants go through each account in the general ledger and verify that the balance listed is complete and accurate. When discrepancies are found, accountants investigate and take appropriate corrective action.

As part of Amazon’s FinTech organization, we offer a software platform that empowers the internal accounting teams at Amazon to conduct account reconciliations. To optimize the reconciliation process, these users require high-performance transformation that scales on demand and can process variable file sizes, ranging from a few MB to more than 100 GB. It’s not always possible to fit data onto a single machine or process it with a single program in a reasonable time frame. The computation has to be fast enough to provide a practical service, and the programming logic has to be kept separate from the underlying details (data distribution, fault tolerance, and scheduling).

Distributed data processing solutions let us run the same function simultaneously, on multiple machines or threads, across groups of elements of a dataset. This encouraged us to reinvent our reconciliation service powered by AWS services, including Amazon EMR and the Apache Spark distributed processing framework, which uses PySpark. This service enables users to process files over 100 GB containing up to 100 million transactions in less than 30 minutes. The reconciliation service has become a powerhouse for data processing, and now users can seamlessly perform a variety of operations, such as Pivot, JOIN (like an Excel VLOOKUP operation), arithmetic operations, and more, providing a versatile and efficient solution for reconciling vast datasets. This enhancement is a testament to the scalability and speed achieved through the adoption of distributed data processing solutions.

In this post, we explain how we integrated Amazon EMR to build a highly available and scalable system that enabled us to run a high-volume financial reconciliation process.

Architecture before migration

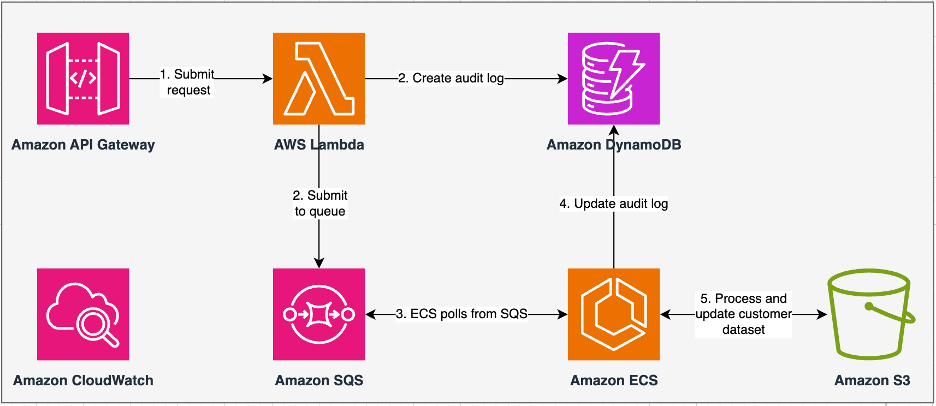

The following diagram illustrates our previous architecture.

Our legacy service was built with Amazon Elastic Container Service (Amazon ECS) on AWS Fargate. We processed the data sequentially using Python. However, due to its lack of parallel processing capability, we frequently had to increase the cluster size vertically to support larger datasets. For context, 5 GB of data with 50 operations took around 3 hours to process. This service was configured to scale horizontally to five ECS instances that polled messages from Amazon Simple Queue Service (Amazon SQS), which fed the transformation requests. Each instance was configured with 4 vCPUs and 30 GB of memory to allow horizontal scaling. However, we couldn’t improve its performance by adding capacity because the process ran sequentially, picking chunks of data from Amazon Simple Storage Service (Amazon S3) for processing. For example, a VLOOKUP operation that joins two files required both files to be read into memory chunk by chunk to obtain the output. This became an obstacle for users because they had to wait for long periods of time to process their datasets.

As part of our re-architecture and modernization, we wanted to achieve the following:

- High availability – The data processing clusters should be highly available, providing three 9s of availability (99.9%)

- Throughput – The service should handle 1,500 runs per day

- Latency – It should be able to process 100 GB of data within 30 minutes

- Heterogeneity – The cluster should be able to support a wide variety of workloads, with files ranging from a few MB to hundreds of GB

- Query concurrency – The implementation demands the ability to support a minimum of 10 degrees of concurrency

- Reliability of jobs and data consistency – Jobs need to run reliably and consistently to avoid breaking Service Level Agreements (SLAs)

- Cost-effective and scalable – It must be scalable based on the workload, making it cost-effective

- Security and compliance – Given the sensitivity of data, it must support fine-grained access control and appropriate security implementations

- Monitoring – The solution must offer end-to-end monitoring of the clusters and jobs

Why Amazon EMR

Amazon EMR is the industry-leading cloud big data solution for petabyte-scale data processing, interactive analytics, and machine learning (ML) using open source frameworks such as Apache Spark, Apache Hive, and Presto. With these frameworks and related open-source projects, you can process data for analytics purposes and BI workloads. Amazon EMR lets you transform and move large amounts of data in and out of other AWS data stores and databases, such as Amazon S3 and Amazon DynamoDB.

A notable advantage of Amazon EMR lies in its effective use of parallel processing with PySpark, marking a significant improvement over traditional sequential Python code. This approach streamlines the deployment and scaling of Apache Spark clusters, allowing for efficient parallelization on large datasets. The distributed computing infrastructure not only enhances performance, but also enables the processing of vast amounts of data at high speed. PySpark’s libraries facilitate Excel-like operations on DataFrames, and the higher-level abstraction of DataFrames simplifies intricate data manipulations, reducing code complexity. Combined with automatic cluster provisioning, dynamic resource allocation, and integration with other AWS services, Amazon EMR proves to be a versatile solution suitable for diverse workloads, ranging from batch processing to ML. The inherent fault tolerance in PySpark and Amazon EMR promotes robustness, even in the event of node failures, making it a scalable, cost-effective, and high-performance choice for parallel data processing on AWS.
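To make the idea of Excel-like operations on DataFrames concrete, here is a minimal sketch of how a VLOOKUP-style join, an arithmetic column, and a pivot might look with the PySpark DataFrame API. It is not the service's actual code: the helper names (`vlookup`, `add_difference`, `pivot_sum`), the column names, and the sample rows in `demo` are all made up for illustration.

```python
"""Excel-like operations expressed with the PySpark DataFrame API (illustrative)."""


def vlookup(left, right, key, value_cols):
    """Left-join the requested columns from `right` onto `left`, like an Excel VLOOKUP.

    Unlike VLOOKUP, a join returns one row per match, so duplicate keys
    in `right` multiply rows in the result.
    """
    lookup = right.select(key, *value_cols)
    return left.join(lookup, on=key, how="left")


def add_difference(df, new_col, minuend, subtrahend):
    """Add a column holding `minuend - subtrahend` (an arithmetic operation)."""
    return df.withColumn(new_col, df[minuend] - df[subtrahend])


def pivot_sum(df, row_key, pivot_col, value_col):
    """Pivot: one row per `row_key`, one column per distinct `pivot_col` value."""
    return df.groupBy(row_key).pivot(pivot_col).sum(value_col)


def demo():
    """Run the three operations on tiny made-up data with a local Spark session."""
    # Imported here so the helpers above carry no import-time dependency.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.master("local[1]").appName("recon-sketch").getOrCreate()
    ledger = spark.createDataFrame(
        [("A1", "2024-01", 100.0, 40.0), ("A2", "2024-01", 75.0, 75.0)],
        ["account_id", "period", "debit", "credit"],
    )
    owners = spark.createDataFrame(
        [("A1", "payments"), ("A2", "retail")], ["account_id", "owner"]
    )
    joined = vlookup(ledger, owners, "account_id", ["owner"])
    with_net = add_difference(joined, "net", "debit", "credit")
    with_net.show()
    pivot_sum(with_net, "owner", "period", "net").show()
    spark.stop()
```

Calling `demo()` requires a local PySpark installation. Because each helper returns a new DataFrame without triggering computation, Spark can plan the chained operations together and run them in parallel across partitions once an action such as `show()` is called.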

Amazon EMR extends its capabilities beyond the basics, offering a variety of deployment options to cater to diverse needs. Whether it’s Amazon EMR on EC2, Amazon EMR on EKS, Amazon EMR Serverless, or Amazon EMR on AWS Outposts, you can tailor your approach to specific requirements. For those seeking a serverless environment for Spark jobs, integrating AWS Glue is also a viable option. In addition to supporting various open-source frameworks, including Spark, Amazon EMR provides flexibility in choosing deployment modes, Amazon Elastic Compute Cloud (Amazon EC2) instance types, scaling mechanisms, and numerous cost-saving optimization techniques.

Amazon EMR stands as a dynamic force in the cloud, delivering unmatched capabilities for organizations seeking robust big data solutions. Its seamless integration, powerful features, and adaptability make it an indispensable tool for navigating the complexities of data analytics and ML on AWS.

Redesigned architecture

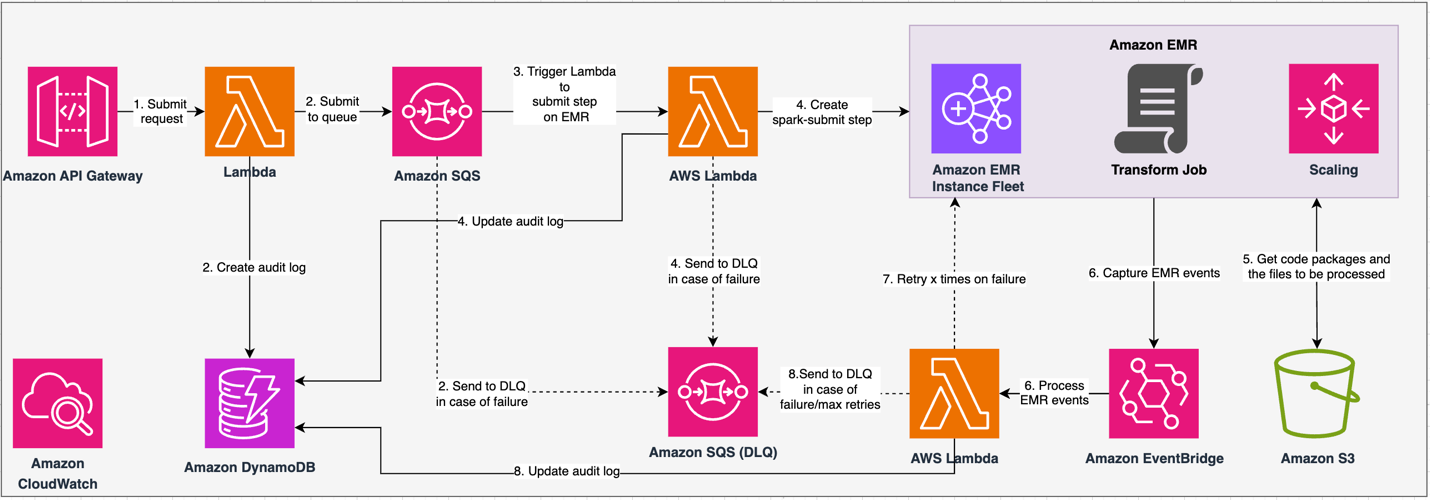

The following diagram illustrates our redesigned architecture.

The solution operates under an API contract, where clients can submit transformation configurations that define the set of operations alongside the S3 dataset location for processing. The request is queued through Amazon SQS, then directed to Amazon EMR via a Lambda function. This process initiates the creation of an Amazon EMR step that runs the Spark implementation on a dedicated EMR cluster. Although Amazon EMR accommodates an unlimited number of steps over a long-running cluster’s lifetime, only 256 steps can be running or pending simultaneously. For optimal parallelization, the step concurrency is set at 10, allowing 10 steps to run concurrently. In case of request failures, the Amazon SQS dead-letter queue (DLQ) retains the event. Spark processes the request, translating Excel-like operations into PySpark code for an efficient query plan. Resilient DataFrames store input, output, and intermediate data in memory, which speeds up processing, reduces disk I/O cost, and improves workload performance. The final output is delivered to the specified Amazon S3 location.
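The hand-off from Amazon SQS to Amazon EMR described above could look roughly like the following Lambda handler. This is a sketch under assumptions, not the service's implementation: the message fields (`request_id`, `config_s3_uri`), the environment variable names, and the script location are hypothetical. The `add_job_flow_steps` call and the `command-runner.jar` convention are standard parts of the boto3 EMR client and Amazon EMR.

```python
import json
import os

# Hypothetical location of the PySpark entry point; not taken from the post.
DEFAULT_SCRIPT_URI = "s3://example-bucket/jobs/reconcile.py"


def build_spark_step(request_id, config_s3_uri, script_s3_uri):
    """Build one EMR step definition that runs spark-submit via command-runner.jar."""
    return {
        "Name": f"reconcile-{request_id}",
        # CONTINUE keeps the shared, long-running cluster alive if this step fails.
        "ActionOnFailure": "CONTINUE",
        "HadoopJarStep": {
            "Jar": "command-runner.jar",
            "Args": [
                "spark-submit",
                "--deploy-mode", "cluster",
                script_s3_uri,
                "--config", config_s3_uri,
            ],
        },
    }


def handler(event, context, emr_client=None, cluster_id=None):
    """Lambda entry point: turn each SQS record into an EMR step.

    Any exception raised here fails the invocation, so SQS retries the
    message and, per the queue's redrive policy, eventually moves it to the DLQ.
    """
    if emr_client is None:
        import boto3  # available in the Lambda Python runtime

        emr_client = boto3.client("emr")
    if cluster_id is None:
        cluster_id = os.environ["EMR_CLUSTER_ID"]  # hypothetical variable name
    script_uri = os.environ.get("SPARK_SCRIPT_URI", DEFAULT_SCRIPT_URI)

    steps = []
    for record in event["Records"]:
        body = json.loads(record["body"])
        steps.append(
            build_spark_step(body["request_id"], body["config_s3_uri"], script_uri)
        )

    response = emr_client.add_job_flow_steps(JobFlowId=cluster_id, Steps=steps)
    return response["StepIds"]
```

Keeping `build_spark_step` separate from the handler makes the step definition easy to unit test without AWS credentials, and the optional `emr_client` argument allows a stub client to be injected.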

We define our SLA in two dimensions: latency and throughput. Latency is defined as the amount of time taken to perform one job against a deterministic dataset size and the number of operations performed on the dataset. Throughput is defined as the maximum number of simultaneous jobs the service can perform without breaching the latency SLA of one job. The overall scalability SLA of the service depends on the balance of horizontal scaling of elastic compute resources and vertical scaling of individual servers.

Because we had to run 1,500 processes per day with minimal latency and high performance, we chose the Amazon EMR on EC2 deployment mode with managed scaling enabled to support processing variable file sizes.
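As a back-of-the-envelope check on these targets, the short calculation below relates the 1,500 runs per day to the step concurrency of 10, and the 100 GB in 30 minutes latency goal to a sustained data rate. It assumes requests arrive evenly over 24 hours and that 1 GB is 1,024 MB. Neither assumption comes from the post, and real traffic is likely burstier.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def average_step_budget_seconds(runs_per_day, concurrent_steps):
    """Average time each run may take before a queue builds up,
    assuming evenly spread arrivals and all step slots in use."""
    return concurrent_steps * SECONDS_PER_DAY / runs_per_day


def required_throughput_mb_per_s(size_gb, minutes):
    """Sustained rate needed to process `size_gb` within `minutes`."""
    return size_gb * 1024 / (minutes * 60)


print(average_step_budget_seconds(1500, 10))            # 576.0
print(round(required_throughput_mb_per_s(100, 30), 1))  # 56.9
```

Under these assumptions, each run has an average budget of about 576 seconds when 10 steps run concurrently, and the largest jobs need roughly 57 MB/s of end-to-end processing throughput.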

The EMR cluster configuration provides many different selections:

- EMR node types – Primary, core, or task nodes

- Instance purchasing options – On-Demand Instances, Reserved Instances, or Spot Instances

- Configuration options – EMR instance fleet or uniform instance group

- Scaling options – Auto scaling or Amazon EMR managed scaling

Based on our variable workload, we configured an EMR instance fleet (for best practices, see Reliability). We also decided to use Amazon EMR managed scaling to scale the core and task nodes (for scaling scenarios, refer to Node allocation scenarios). Lastly, we chose memory-optimized AWS Graviton instances, which provide up to 30% lower cost and up to 15% improved performance for Spark workloads.
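To show how these choices can be combined in a single request, the sketch below builds keyword arguments for the boto3 EMR `run_job_flow` call with instance fleets, a managed scaling policy, and a step concurrency of 10. It is not the authors' actual configuration: the cluster name, release label, instance types, capacities, and scaling limits are placeholder assumptions chosen only to illustrate the shape of the request.

```python
def build_cluster_request(name="reconciliation-sketch"):
    """Return keyword arguments for boto3's EMR `run_job_flow` call.

    Every value below is an illustrative placeholder, not a recommendation.
    """
    return {
        "Name": name,
        "ReleaseLabel": "emr-6.15.0",
        "Applications": [{"Name": "Spark"}],
        "JobFlowRole": "EMR_EC2_DefaultRole",  # default EC2 instance profile name
        "ServiceRole": "EMR_DefaultRole",      # default EMR service role name
        "StepConcurrencyLevel": 10,            # 10 steps may run at the same time
        "Instances": {
            # Long-running cluster: stay up while waiting for new steps.
            "KeepJobFlowAliveWhenNoSteps": True,
            "InstanceFleets": [
                {
                    "Name": "primary",
                    "InstanceFleetType": "MASTER",
                    "TargetOnDemandCapacity": 1,
                    "InstanceTypeConfigs": [{"InstanceType": "r6g.xlarge"}],
                },
                {
                    "Name": "core",
                    "InstanceFleetType": "CORE",
                    "TargetOnDemandCapacity": 2,
                    # Several memory-optimized Graviton types give EMR more
                    # capacity pools to choose from.
                    "InstanceTypeConfigs": [
                        {"InstanceType": "r6g.2xlarge", "WeightedCapacity": 1},
                        {"InstanceType": "r6g.4xlarge", "WeightedCapacity": 2},
                    ],
                },
                {
                    "Name": "task",
                    "InstanceFleetType": "TASK",
                    "TargetSpotCapacity": 2,
                    "InstanceTypeConfigs": [
                        {"InstanceType": "r6g.2xlarge", "WeightedCapacity": 1},
                        {"InstanceType": "r6g.4xlarge", "WeightedCapacity": 2},
                    ],
                },
            ],
        },
        # Managed scaling resizes core and task capacity within these limits.
        "ManagedScalingPolicy": {
            "ComputeLimits": {
                "UnitType": "InstanceFleetUnits",
                "MinimumCapacityUnits": 2,
                "MaximumCapacityUnits": 20,
                "MaximumOnDemandCapacityUnits": 10,
                "MaximumCoreCapacityUnits": 6,
            }
        },
    }
```

The result could be passed as `boto3.client("emr").run_job_flow(**build_cluster_request())`. A real launch would also need networking settings such as `Ec2SubnetIds` under `Instances`, and the referenced IAM roles must exist in the account.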

The following code provides a snapshot of our cluster configuration:

Performance

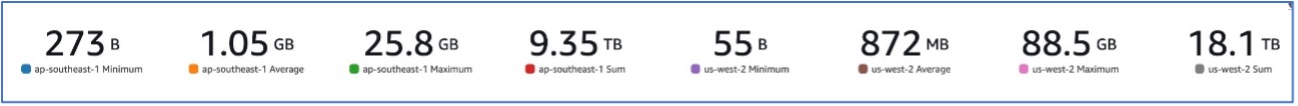

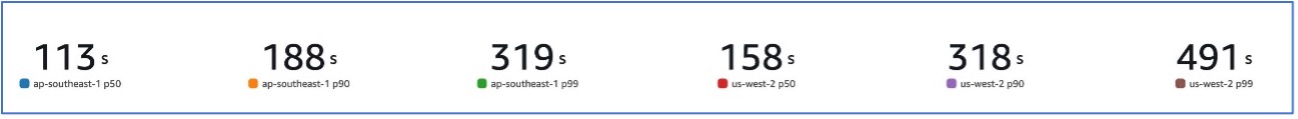

With our migration to Amazon EMR, we were able to achieve a system performance capable of handling a variety of datasets, ranging from as low as 273 B to as high as 88.5 GB with a p99 of 491 seconds (approximately 8 minutes).

The following figure illustrates the variety of file sizes processed.

The following figure shows our latency.

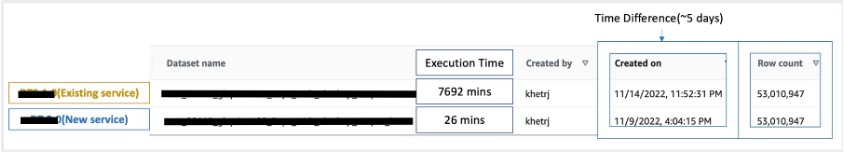

To compare against sequential processing, we took two datasets containing 53 million records and ran a VLOOKUP operation against each other, along with 49 other Excel-like operations. This took 26 minutes to process in the new service, compared to 5 days in the legacy service. That is 7,200 minutes versus 26 minutes, a speedup of roughly 277 times, or almost 300 times faster than the previous architecture.

Considerations

Keep in mind the following when considering this solution:

- Right-sizing clusters – Although Amazon EMR is resizable, it’s important to right-size the clusters. An undersized cluster runs slowly, and an oversized cluster costs more than necessary. To anticipate these issues, you can calculate the number and type of nodes that will be needed for the workloads.

- Parallel steps – Running steps in parallel allows you to run more advanced workloads, increase cluster resource utilization, and reduce the amount of time taken to complete your workload. The number of steps allowed to run at one time is configurable and can be set when a cluster is launched and any time after the cluster has started. You need to consider and optimize the CPU/memory usage per job when multiple jobs are running in a single shared cluster.

- Job-based transient EMR clusters – If applicable, it is recommended to use a job-based transient EMR cluster, which delivers superior isolation, ensuring that each task operates within its dedicated environment. This approach optimizes resource utilization, helps prevent interference between jobs, and enhances overall performance and reliability. The transient nature enables efficient scaling, providing a robust and isolated solution for diverse data processing needs.

- EMR Serverless – EMR Serverless is the ideal choice if you prefer not to handle the management and operation of clusters. It allows you to run applications using the open-source frameworks available within EMR Serverless, offering a straightforward and hassle-free experience.

- Amazon EMR on EKS – Amazon EMR on EKS offers distinct advantages, such as faster startup times and improved scalability, resolving compute capacity challenges, which is particularly beneficial for Graviton and Spot Instance users. The inclusion of a broader range of compute types enhances cost-efficiency, allowing tailored resource allocation. Furthermore, Multi-AZ support provides increased availability. These features provide a robust solution for managing big data workloads with improved performance, cost optimization, and reliability across various computing scenarios.
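Regarding the parallel steps consideration above, the step concurrency of a running cluster can be changed through the boto3 EMR client's `modify_cluster` call. The helper below is a minimal sketch. The function name and the client-side range check are our own additions, and the cluster ID shown in the usage note is a placeholder.

```python
def set_step_concurrency(emr_client, cluster_id, level):
    """Change how many steps a running EMR cluster may execute in parallel.

    `emr_client` is expected to be a boto3 EMR client (or a compatible stub).
    Amazon EMR accepts a step concurrency level between 1 and 256.
    """
    if not 1 <= level <= 256:
        raise ValueError("step concurrency level must be between 1 and 256")
    response = emr_client.modify_cluster(
        ClusterId=cluster_id, StepConcurrencyLevel=level
    )
    # The service echoes back the level that is now in effect.
    return response["StepConcurrencyLevel"]
```

For example, `set_step_concurrency(boto3.client("emr"), "j-EXAMPLE", 10)` would request 10 parallel steps. Raising the level only helps if the cluster has enough CPU and memory for the additional concurrent jobs.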

Conclusion

In this post, we explained how Amazon optimized its high-volume financial reconciliation process with Amazon EMR for higher scalability and performance. If you have a monolithic application that’s dependent on vertical scaling to process additional requests or datasets, then migrating it to a distributed processing framework such as Apache Spark and choosing a managed service such as Amazon EMR for compute may help reduce the runtime to lower your delivery SLA, and also may help reduce the Total Cost of Ownership (TCO).

As we embrace Amazon EMR for this particular use case, we encourage you to explore further possibilities in your data innovation journey. Consider evaluating AWS Glue, along with other dynamic Amazon EMR deployment options such as EMR Serverless or Amazon EMR on EKS, to discover the best AWS service tailored to your unique use case. The future of the data innovation journey holds exciting possibilities and advancements to be explored further.

About the Authors

Jeeshan Khetrapal is a Sr. Software Development Engineer at Amazon, where he develops fintech products based on cloud computing serverless architectures that are responsible for companies’ IT general controls, financial reporting, and controllership for governance, risk, and compliance.

Shakti Mishra is a Principal Solutions Architect at AWS, where he helps customers modernize their data architecture and define their end-to-end data strategy, including data security, accessibility, governance, and more. He is also the author of the book Simplify Big Data Analytics with Amazon EMR. Outside of work, Shakti enjoys learning new technologies, watching movies, and visiting places with family.

Source: https://aws.amazon.com/blogs/big-data/how-amazon-optimized-its-high-volume-financial-reconciliation-process-with-amazon-emr-for-higher-scalability-and-performance/