Today, customers in all industries (financial services, healthcare and life sciences, travel and hospitality, media and entertainment, telecommunications, software as a service (SaaS), and even proprietary model providers) are using large language models (LLMs) to build applications such as question and answering (QnA) chatbots, search engines, and knowledge bases. These generative AI applications are used not only to automate existing business processes, but also to transform the experience of the customers who use them. With the advances being made in LLMs such as Mixtral-8x7B Instruct, which builds on architectures such as the mixture of experts (MoE), customers are continuously looking for ways to improve the performance and accuracy of generative AI applications while making effective use of a wider range of closed and open source models.

A number of techniques are typically used to improve the accuracy and performance of an LLM's output, such as fine-tuning with parameter efficient fine-tuning (PEFT), reinforcement learning from human feedback (RLHF), and knowledge distillation. However, when building generative AI applications, you can use an alternative that dynamically incorporates external knowledge and lets you control the information used for generation without fine-tuning your existing foundation model. This is where Retrieval Augmented Generation (RAG) comes in, as opposed to the more expensive and involved fine-tuning alternatives just mentioned. If you're implementing complex RAG applications in your daily tasks, you may encounter common challenges such as inaccurate retrieval, increasing size and complexity of documents, and context overflow, all of which can significantly affect the quality and reliability of generated answers.

This post discusses RAG patterns that improve response accuracy using LangChain and tools such as the parent document retriever, in addition to techniques such as contextual compression, so that developers can improve existing generative AI applications.

Solution overview

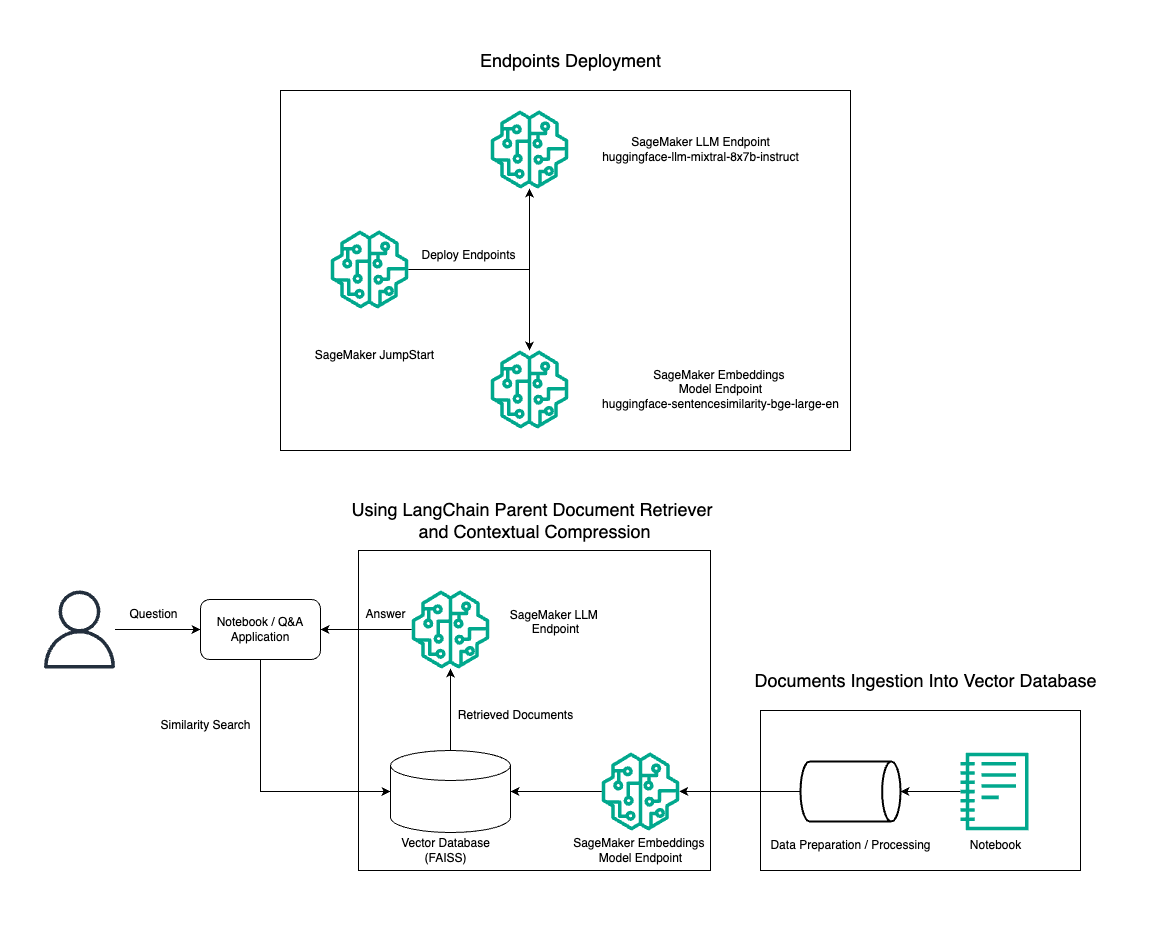

In this post, we demonstrate the use of Mixtral-8x7B Instruct text generation combined with the BGE Large En embedding model to efficiently build a RAG QnA system on an Amazon SageMaker notebook using the parent document retriever tool and the contextual compression technique. The accompanying diagram illustrates the architecture of this solution.

You can deploy this solution with just a few clicks using Amazon SageMaker JumpStart, a fully managed platform that offers state-of-the-art foundation models for use cases such as content writing, code generation, question answering, copywriting, summarization, classification, and information retrieval. It provides a collection of pre-trained models that you can deploy quickly and easily, accelerating the development and deployment of machine learning (ML) applications. One of the key components of SageMaker JumpStart is the Model Hub, which offers a vast catalog of pre-trained models, such as Mixtral-8x7B, for a variety of tasks.

Mixtral-8x7B uses an MoE architecture. This architecture allows different parts of a neural network to specialize in different tasks, effectively dividing the workload among multiple experts. This approach enables efficient training and deployment of larger models compared to traditional architectures.

One of the main advantages of the MoE architecture is its scalability. By distributing the workload across multiple experts, MoE models can be trained on larger datasets and achieve better performance than traditional models of the same size. Additionally, MoE models can be more efficient during inference because only a subset of experts needs to be activated for a given input.
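To make the "only a subset of experts is activated" idea concrete, here is a minimal pure-Python sketch of sparse expert routing. It assumes top-2 routing over eight experts, which is how Mixtral-8x7B is commonly described; the toy experts, the gate scores, and the function names are all hypothetical and stand in for learned network components.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(gate_scores, top_k=2):
    """Return {expert_index: weight} for the top_k highest-scoring experts."""
    top = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)[:top_k]
    weights = softmax([gate_scores[i] for i in top])
    return dict(zip(top, weights))

def moe_layer(x, experts, gate_scores, top_k=2):
    """Weighted sum of the outputs of the selected experts only; the rest are skipped."""
    routing = route_token(gate_scores, top_k)
    return sum(weight * experts[i](x) for i, weight in routing.items())

# Eight toy "experts": each one just scales its input by a different factor.
experts = [lambda x, k=k: x * (k + 1) for k in range(8)]
# Hypothetical gate scores for one token; a real model computes these with a learned router.
gate_scores = [0.1, 2.0, -1.0, 0.3, 1.5, 0.0, -0.5, 0.2]

routing = route_token(gate_scores)
output = moe_layer(1.0, experts, gate_scores)
```

Only experts 1 and 4 run for this token, so the compute cost per token depends on `top_k` rather than on the total number of experts.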

For more information on Mixtral-8x7B Instruct on AWS, refer to Mixtral-8x7B is now available in Amazon SageMaker JumpStart. The Mixtral-8x7B model is made available under the permissive Apache 2.0 license, for use without restrictions.

In this post, we discuss how you can use LangChain to create effective and more efficient RAG applications. LangChain is an open source Python library designed for building applications with LLMs. It provides a modular and flexible framework for combining LLMs with other components, such as knowledge bases, retrieval systems, and other AI tools, to create powerful and customizable applications.

We walk through building a RAG pipeline on SageMaker with Mixtral-8x7B. We use the Mixtral-8x7B Instruct text generation model with the BGE Large En embedding model to create an efficient QnA system using RAG on a SageMaker notebook. The notebook itself runs on an ml.t3.medium instance, while the LLMs are deployed through SageMaker JumpStart and accessed through SageMaker-generated API endpoints. This setup allows for exploration, experimentation, and optimization of advanced RAG techniques with LangChain. We also illustrate the integration of the FAISS embedding store into the RAG workflow, highlighting its role in storing and retrieving embeddings to enhance the system's performance.

We give a brief walkthrough of the SageMaker notebook. For more detailed, step-by-step instructions, refer to the Advanced RAG Patterns with Mixtral on SageMaker JumpStart GitHub repo.

The need for advanced RAG patterns

Advanced RAG patterns are essential for improving on the current capabilities of LLMs in processing, understanding, and generating human-like text. As the size and complexity of documents increase, representing multiple facets of a document in a single embedding can lead to a loss of specificity. Although it's essential to capture the general essence of a document, it's equally important to recognize and represent the varied sub-contexts within it. This is a challenge you often face when working with larger documents. Another challenge with RAG is that, at ingestion time, you don't know the specific queries your document storage system will have to handle. As a result, the information most relevant to a query can end up buried under surrounding text (context overflow). To mitigate these failures and improve on the existing RAG architecture, you can use advanced RAG patterns (parent document retriever and contextual compression) to reduce retrieval errors, enhance answer quality, and enable complex question handling.

With the techniques discussed in this post, you can address key challenges associated with external knowledge retrieval and integration, enabling your application to deliver more precise and contextually aware responses.

In the following sections, we explore how parent document retrievers and contextual compression can help you deal with some of the problems we've discussed.

Parent document retriever

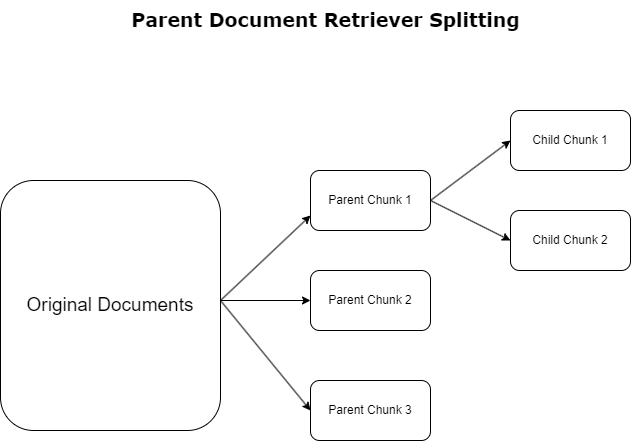

In the previous section, we highlighted challenges that RAG applications encounter when dealing with extensive documents. To address these challenges, parent document retrievers categorize and designate incoming documents as parent documents. These documents are recognized for their comprehensive nature but aren't used directly in their original form for embeddings. Rather than compressing an entire document into a single embedding, parent document retrievers split these parent documents into child documents. Each child document captures distinct aspects or topics from the broader parent document. After these child segments are identified, each one is assigned its own embedding, capturing its specific thematic essence (see the accompanying diagram). During retrieval, the parent document is returned. This technique provides targeted yet broad-ranging search capabilities, furnishing the LLM with a wider perspective. Parent document retrievers give LLMs a twofold advantage: the specificity of child document embeddings for precise and relevant information retrieval, coupled with the invocation of parent documents for response generation, which enriches the LLM's outputs with a layered and thorough context.
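The child-to-parent lookup described above can be sketched without any RAG library. The following is an illustrative toy, not the LangChain implementation: the bag-of-words `embed` function stands in for a real embedding model, and the sample documents, chunk size, and function names are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy embedding: a term-count vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def build_index(parents, child_size=8):
    """Split each parent into child chunks of child_size words and embed only the children."""
    index = []  # entries are (child_embedding, parent_id)
    for parent_id, text in parents.items():
        words = text.split()
        for start in range(0, len(words), child_size):
            child = " ".join(words[start:start + child_size])
            index.append((embed(child), parent_id))
    return index

def retrieve_parents(query, index, parents, k=2):
    """Rank children by similarity, then return their parent documents without duplicates."""
    query_vector = embed(query)
    ranked = sorted(index, key=lambda entry: cosine(query_vector, entry[0]), reverse=True)[:k]
    seen, results = set(), []
    for _, parent_id in ranked:
        if parent_id not in seen:
            seen.add(parent_id)
            results.append(parents[parent_id])
    return results

parents = {
    "letter_a": "The cloud business launched a compute service with one instance size. "
                "Later it added monitoring, load balancing, and persistent storage.",
    "letter_b": "The retail business expanded its fulfillment network. "
                "It also built a last mile transportation network for faster delivery.",
}
index = build_index(parents)
hits = retrieve_parents("load balancing and monitoring", index, parents, k=1)
```

The query matches a small child chunk, but the caller receives the whole parent, including the earlier sentence about the compute service that the matching chunk does not contain.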

Contextual compression

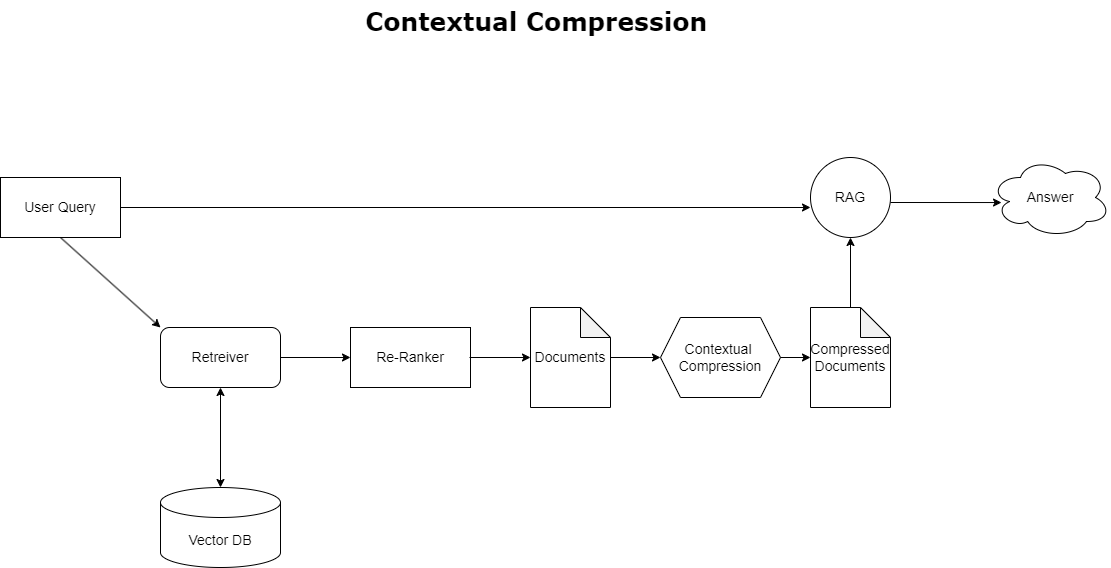

To address the issue of context overflow discussed earlier, you can use contextual compression to compress and filter the retrieved documents in line with the query's context, so that only pertinent information is kept and processed. This is achieved by combining a base retriever, which fetches the initial documents, with a document compressor, which refines those documents by paring down their content or excluding them entirely based on relevance, as illustrated in the accompanying diagram. This streamlined approach, facilitated by the contextual compression retriever, improves RAG application efficiency by extracting and using only what's essential from a mass of information. It tackles information overload and irrelevant data processing head-on, leading to improved response quality, more cost-effective LLM operations, and a smoother overall retrieval process. Essentially, it's a filter that tailors the information to the query at hand, which makes it a valuable tool for developers who want to optimize their RAG applications for better performance and user satisfaction.
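As a rough illustration of the two compressor behaviors (paring down a document or excluding it entirely), the sketch below uses simple keyword overlap where a real pipeline would ask an LLM to judge relevance. The stopword list, the sample documents, and the function names are hypothetical.

```python
import re

# A deliberately tiny stopword list for the toy relevance check.
STOPWORDS = {"the", "a", "an", "of", "and", "to", "in", "is", "did", "how", "why", "it", "its"}

def terms(text):
    """Lowercased content words of a text, ignoring stopwords."""
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS}

def compress(query, documents):
    """Keep only sentences that share a term with the query; drop documents left empty."""
    query_terms = terms(query)
    compressed = []
    for doc in documents:
        sentences = re.split(r"(?<=[.!?])\s+", doc.strip())
        kept = [s for s in sentences if terms(s) & query_terms]
        if kept:
            compressed.append(" ".join(kept))
    return compressed

# Pretend these came back from the base retriever.
retrieved = [
    "AWS launched EC2 in 2006. The cafeteria menu changed that year. EC2 later gained auto-scaling.",
    "The company picnic was held in July. Attendance was high.",
]
context = compress("How did EC2 evolve?", retrieved)
```

The first document is shortened to its two relevant sentences and the second is dropped, so the prompt sent to the LLM carries less irrelevant text.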

Prerequisites

If you're new to SageMaker, refer to the Amazon SageMaker Development Guide.

Before you get started with the solution, create an AWS account. When you create an AWS account, you get a single sign-on (SSO) identity that has complete access to all the AWS services and resources in the account. This identity is called the AWS account root user.

Signing in to the AWS Management Console using the email address and password that you used to create the account gives you complete access to all the AWS resources in your account. We strongly recommend that you do not use the root user for everyday tasks, even administrative ones.

Instead, adhere to the security best practices in AWS Identity and Access Management (IAM), and create an administrative user and group. Then securely lock away the root user credentials and use them to perform only a few account and service management tasks.

The Mixtral-8x7B model requires an ml.g5.48xlarge instance. SageMaker JumpStart provides a simplified way to access and deploy over 100 different open source and third-party foundation models. To launch an endpoint that hosts Mixtral-8x7B from SageMaker JumpStart, you may need to request a service quota increase for ml.g5.48xlarge instances for endpoint usage. You can request service quota increases through the console, the AWS Command Line Interface (AWS CLI), or the API to gain access to those additional resources.

Set up a SageMaker notebook instance and install dependencies

To get started, create a SageMaker notebook instance and install the required dependencies. Refer to the GitHub repo to ensure a successful setup. After you set up the notebook instance, you can deploy the model.

You can also run the notebook locally in your preferred integrated development environment (IDE). Make sure that Jupyter Notebook or JupyterLab is installed.

Deploy the model

Deploy the Mixtral-8x7B Instruct LLM on SageMaker JumpStart.

Deploy the BGE Large En embedding model on SageMaker JumpStart.
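Both deployments can be sketched with the SageMaker Python SDK as follows. This is a minimal sketch under stated assumptions, not the notebook's exact code: the two model IDs are assumed and should be confirmed in the SageMaker JumpStart catalog, and the helper names are hypothetical. Calling `deploy_all()` creates billable endpoints and requires AWS credentials and sufficient service quota.

```python
# Assumed JumpStart model IDs; confirm them in the SageMaker JumpStart catalog before use.
LLM_MODEL_ID = "huggingface-llm-mixtral-8x7b-instruct"
EMBEDDING_MODEL_ID = "huggingface-sentencesimilarity-bge-large-en"

def deploy_jumpstart_model(model_id):
    """Deploy one JumpStart model to a real-time endpoint and return its predictor."""
    # Imported here so this module can be loaded without the SageMaker SDK installed.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(model_id=model_id)
    # With no arguments, deploy() uses the default instance type for the model.
    return model.deploy()

def deploy_all():
    """Deploy the text generation model and the embedding model."""
    llm_predictor = deploy_jumpstart_model(LLM_MODEL_ID)
    embedding_predictor = deploy_jumpstart_model(EMBEDDING_MODEL_ID)
    return llm_predictor, embedding_predictor
```

Keeping the returned predictors around is useful later, both for wiring the endpoints into LangChain and for deleting them during cleanup.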

Set up LangChain

After importing all the necessary libraries and deploying the Mixtral-8x7B model and the BGE Large En embedding model, you can set up LangChain. For step-by-step instructions, refer to the GitHub repo.

Data preparation

In this post, we use several years of Amazon's Letters to Shareholders as the text corpus for QnA. For more detailed steps to prepare the data, refer to the GitHub repo.

Question answering

Once the data is prepared, you can use the wrapper provided by LangChain, which wraps around the vector store and takes input for the LLM. This wrapper performs the following steps:

- Take the input question.
- Create a question embedding.
- Fetch relevant documents.
- Incorporate the documents and the question into a prompt.
- Invoke the model with the prompt and generate the answer in a readable manner.

Now that the vector store is in place, you can start asking questions.
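The wrapper's steps can be sketched in plain Python as follows. The `[INST] ... [/INST]` tags follow the instruction format used by Mixtral-8x7B Instruct; the instruction wording, the function names, and the stand-in retriever and model are hypothetical and only there so the sketch runs without a vector store or an endpoint.

```python
def build_prompt(question, documents):
    """Stuff the retrieved documents and the question into one Mixtral-style instruction."""
    context = "\n\n".join(documents)
    instruction = (
        "Use the following context to answer the question. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
    return f"<s>[INST] {instruction} [/INST]"

def answer(question, retrieve, generate, k=3):
    """Run the pipeline: retrieve(question, k) -> list of str, generate(prompt) -> str."""
    documents = retrieve(question, k)  # embeds the question and fetches similar documents
    return generate(build_prompt(question, documents))

def fake_retrieve(question, k):
    """Stand-in for a vector store similarity search."""
    return ["AWS launched EC2 in 2006."][:k]

def fake_generate(prompt):
    """Stand-in for a call to the deployed LLM endpoint."""
    return f"(model output for a prompt of {len(prompt)} characters)"

prompt = build_prompt("How did AWS evolve?", fake_retrieve("How did AWS evolve?", 3))
reply = answer("How did AWS evolve?", fake_retrieve, fake_generate)
```

In a real deployment, `retrieve` would query the FAISS store with BGE embeddings and `generate` would invoke the Mixtral endpoint.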

Regular retriever chain

In the preceding scenario, we explored a quick and straightforward way to get a context-aware answer to your question. Now let's look at a more customizable option with the help of RetrievalQA, where you can customize how the fetched documents are added to the prompt using the chain_type parameter. To control how many relevant documents are retrieved, you can change the k parameter and compare the outputs. In many scenarios, you might also want to know which source documents the LLM used to generate the answer. You can get those documents in the output using return_source_documents, which returns the documents that were added to the context of the LLM prompt. RetrievalQA also lets you provide a custom prompt template that can be specific to the model.
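A configuration along these lines might look like the sketch below. It assumes a LangChain release that still exposes `RetrievalQA` under `langchain.chains` (import paths have moved between versions), and it takes an already constructed LangChain LLM and vector store as arguments. The prompt wording, the default `k`, and the helper name are illustrative rather than taken from the notebook.

```python
# Illustrative Mixtral-style prompt; {context} and {question} are filled in by the chain.
PROMPT_TEMPLATE = """<s>[INST]
Use the following context to answer the question at the end.
If you don't know the answer, say so instead of guessing.

{context}

Question: {question}
[/INST]"""

def build_retrieval_qa(llm, vectorstore, k=3):
    """Build a RetrievalQA chain over an existing LangChain LLM and vector store."""
    # Imported here so this module loads even where LangChain is not installed.
    from langchain.chains import RetrievalQA
    from langchain.prompts import PromptTemplate

    prompt = PromptTemplate(template=PROMPT_TEMPLATE, input_variables=["context", "question"])
    return RetrievalQA.from_chain_type(
        llm=llm,
        chain_type="stuff",  # place all retrieved documents into a single prompt
        retriever=vectorstore.as_retriever(search_kwargs={"k": k}),  # top-k documents
        return_source_documents=True,  # include the supporting documents in the output
        chain_type_kwargs={"prompt": prompt},
    )
```

Calling the resulting chain with a dictionary such as `{"query": "How did AWS evolve?"}` should return the answer under `result` and the retrieved documents under `source_documents`.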

With the chain in place, you can ask it a question such as "How did AWS evolve?"

Parent document retriever chain

Let's look at a more advanced RAG option with the help of ParentDocumentRetriever. When working with document retrieval, you may encounter a trade-off between storing small chunks of a document for accurate embeddings and storing larger documents to preserve more context. The parent document retriever strikes that balance by splitting documents and storing small chunks of data.

We use a parent_splitter to divide the original documents into larger chunks called parent documents, and a child_splitter to create smaller child documents from the original documents.
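The two splitters and the retriever might be wired together as in the following sketch. It assumes a LangChain release where these classes live under `langchain.retrievers`, `langchain.storage`, and `langchain.text_splitter`, and it takes an existing vector store and a list of LangChain documents as inputs. The chunk sizes and the helper name are illustrative, not values from the post.

```python
# Illustrative chunk sizes in characters; tune them for your own corpus.
PARENT_CHUNK_SIZE = 2000
CHILD_CHUNK_SIZE = 400

def build_parent_retriever(vectorstore, documents):
    """Index child chunks in the vector store and keep parent chunks in a document store."""
    # Imported here so this module loads even where LangChain is not installed.
    from langchain.retrievers import ParentDocumentRetriever
    from langchain.storage import InMemoryStore
    from langchain.text_splitter import RecursiveCharacterTextSplitter

    parent_splitter = RecursiveCharacterTextSplitter(chunk_size=PARENT_CHUNK_SIZE)
    child_splitter = RecursiveCharacterTextSplitter(chunk_size=CHILD_CHUNK_SIZE)
    retriever = ParentDocumentRetriever(
        vectorstore=vectorstore,   # holds the child-chunk embeddings
        docstore=InMemoryStore(),  # holds the parent chunks, keyed by ID
        child_splitter=child_splitter,
        parent_splitter=parent_splitter,
    )
    retriever.add_documents(documents)  # splits, embeds, and stores in one call
    return retriever
```

The returned retriever can be passed to a RetrievalQA chain in place of a plain vector store retriever.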

The child documents are then indexed in a vector store using embeddings. This enables efficient retrieval of relevant child documents based on similarity. To retrieve relevant information, the parent document retriever first fetches the child documents from the vector store. It then looks up the parent IDs of those child documents and returns the corresponding larger parent documents.

You can now ask the same kind of question, for example "Why is Amazon successful?"

Contextual compression chain

Let's look at another advanced RAG option called contextual compression. One challenge with retrieval is that you usually don't know the specific queries your document storage system will face when you ingest data into the system. This means that the information most relevant to a query may be buried in a document with a lot of irrelevant text. Passing that full document through your application can lead to more expensive LLM calls and poorer responses.

The contextual compression retriever addresses the challenge of retrieving relevant information from a document storage system where the pertinent data may be buried within documents that contain a lot of text. By compressing and filtering the retrieved documents based on the context of the given query, it returns only the most relevant information.

To use the contextual compression retriever, you need:

- Base retriever: the initial retriever that fetches documents from the storage system based on the query.
- Document compressor: the component that takes the initially retrieved documents and shortens them by reducing the contents of individual documents or dropping irrelevant documents altogether, using the query context to determine relevance.

Add contextual compression with an LLM chain extractor

First, wrap your base retriever with a ContextualCompressionRetriever. You add an LLMChainExtractor, which iterates over the initially returned documents and extracts from each only the content that is relevant to the query.

Initialize the chain using the ContextualCompressionRetriever with an LLMChainExtractor, and pass the prompt in through the chain_type_kwargs argument.
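A sketch of both steps follows. It assumes a LangChain release where `ContextualCompressionRetriever` and `LLMChainExtractor` are importable from the paths shown, and it takes an existing LLM, base retriever, and prompt template as arguments. The helper names are hypothetical.

```python
def build_extractor_retriever(llm, base_retriever):
    """Wrap a base retriever so each retrieved document is trimmed to query-relevant text."""
    # Imported here so this module loads even where LangChain is not installed.
    from langchain.retrievers import ContextualCompressionRetriever
    from langchain.retrievers.document_compressors import LLMChainExtractor

    compressor = LLMChainExtractor.from_llm(llm)  # uses the LLM to extract relevant passages
    return ContextualCompressionRetriever(
        base_compressor=compressor,
        base_retriever=base_retriever,
    )

def build_compression_chain(llm, compression_retriever, prompt):
    """Create a RetrievalQA chain on top of the compression retriever."""
    from langchain.chains import RetrievalQA

    return RetrievalQA.from_chain_type(
        llm=llm,
        retriever=compression_retriever,
        chain_type_kwargs={"prompt": prompt},  # custom prompt template for the model
    )
```

Because the extractor calls the LLM once per retrieved document, it trades extra inference calls for a shorter final prompt.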

You can then query this chain in the same way as the previous ones.

Filter documents with an LLM chain filter

The LLMChainFilter is a slightly simpler but more robust compressor that uses an LLM chain to decide which of the initially retrieved documents to filter out and which ones to return, without manipulating the document contents.

Initialize the chain using the ContextualCompressionRetriever with an LLMChainFilter, and pass the prompt in through the chain_type_kwargs argument.
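The filter variant differs only in the compressor that is plugged in. As before, this is a sketch that assumes a LangChain release exposing `LLMChainFilter` under `langchain.retrievers.document_compressors`; the helper name is hypothetical, and the LLM and base retriever are supplied by the caller.

```python
def build_filter_retriever(llm, base_retriever):
    """Wrap a base retriever so irrelevant documents are dropped and the rest kept intact."""
    # Imported here so this module loads even where LangChain is not installed.
    from langchain.retrievers import ContextualCompressionRetriever
    from langchain.retrievers.document_compressors import LLMChainFilter

    return ContextualCompressionRetriever(
        base_compressor=LLMChainFilter.from_llm(llm),  # keep-or-drop decision per document
        base_retriever=base_retriever,
    )
```

The returned retriever can be handed to `RetrievalQA.from_chain_type` together with the prompt in `chain_type_kwargs`, exactly as with any other retriever.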

As with the other chains, you can now ask a question and inspect the answer.

Compare results

The following table compares the results of different queries by technique.

| Technique | Query 1: How did AWS evolve? | Query 2: Why is Amazon successful? | Comparison |
| --- | --- | --- | --- |
| Regular Retriever Chain Output | AWS (Amazon Web Services) evolved from an initially unprofitable investment to an $85B annual revenue run rate business with strong profitability, offering a wide range of services and features, and becoming a significant part of Amazon's portfolio. Despite facing skepticism and short-term headwinds, AWS continued to innovate, attract new customers, and migrate active customers, offering benefits such as agility, innovation, cost-efficiency, and security. AWS also expanded its long-term investments, including chip development, to provide new capabilities and change what's possible for its customers. | Amazon is successful due to its continuous innovation and expansion into new areas such as technology infrastructure services, digital reading devices, voice-driven personal assistants, and new business models like the third-party marketplace. Its ability to scale operations quickly, as seen in the rapid expansion of its fulfillment and transportation networks, also contributes to its success. Additionally, Amazon's focus on optimization and efficiency gains in its processes has resulted in productivity improvements and cost reductions. The example of Amazon Business highlights the company's ability to leverage its e-commerce and logistics strengths in different sectors. | Based on the responses from the regular retriever chain, we notice that although it provides long answers, it suffers from context overflow and fails to mention any significant details from the corpus in response to the query. The regular retrieval chain is not able to capture nuances with depth or contextual insight, potentially missing critical aspects of the document. |
| Parent Document Retriever Output | AWS (Amazon Web Services) started with a feature-poor initial launch of the Elastic Compute Cloud (EC2) service in 2006, providing only one instance size, in one data center, in one region of the world, with Linux operating system instances only, and without many key features like monitoring, load balancing, auto-scaling, or persistent storage. However, AWS's success allowed them to quickly iterate and add the missing capabilities, eventually expanding to offer various flavors, sizes, and optimizations of compute, storage, and networking, as well as developing their own chips (Graviton) to push price and performance further. AWS's iterative innovation process required significant investments in financial and people resources over 20 years, often well in advance of when it would pay out, to meet customer needs and improve long-term customer experiences, loyalty, and returns for shareholders. | Amazon is successful due to its ability to constantly innovate, adapt to changing market conditions, and meet customer needs in various market segments. This is evident in the success of Amazon Business, which has grown to drive roughly $35B in annualized gross sales by delivering selection, value, and convenience to business customers. Amazon's investments in ecommerce and logistics capabilities have also enabled the creation of services like Buy with Prime, which helps merchants with direct-to-consumer websites drive conversion from views to purchases. | The parent document retriever delves deeper into the specifics of AWS's growth strategy, including the iterative process of adding new features based on customer feedback and the detailed journey from a feature-poor initial launch to a dominant market position, while providing a context-rich response. Responses cover a wide range of aspects, from technical innovations and market strategy to organizational efficiency and customer focus, providing a holistic view of the factors contributing to success along with examples. This can be attributed to the parent document retriever's targeted yet broad-ranging search capabilities. |
| LLM Chain Extractor: Contextual Compression Output | AWS evolved by starting as a small project inside Amazon, requiring significant capital investment and facing skepticism from both inside and outside the company. However, AWS had a head start on potential competitors and believed in the value it could bring to customers and Amazon. AWS made a long-term commitment to continue investing, resulting in over 3,300 new features and services launched in 2022. AWS has transformed how customers manage their technology infrastructure and has become an $85B annual revenue run rate business with strong profitability. AWS has also continuously improved its offerings, such as enhancing EC2 with additional features and services after its initial launch. | Based on the provided context, Amazon's success can be attributed to its strategic expansion from a book-selling platform to a global marketplace with a vibrant third-party seller ecosystem, early investment in AWS, innovation in introducing the Kindle and Alexa, and substantial growth in annual revenue from 2019 to 2022. This growth led to the expansion of the fulfillment center footprint, the creation of a last-mile transportation network, and the building of a new sortation center network, which were optimized for productivity and cost reduction. | The LLM chain extractor maintains a balance between covering key points comprehensively and avoiding unnecessary depth. It dynamically adjusts to the query's context, so the output is directly relevant and comprehensive. |
| LLM Chain Filter: Contextual Compression Output | AWS (Amazon Web Services) evolved by initially launching feature-poor but iterating quickly based on customer feedback to add necessary capabilities. This approach allowed AWS to launch EC2 in 2006 with limited features and then continuously add new functionality, such as additional instance sizes, data centers, regions, operating system options, monitoring tools, load balancing, auto-scaling, and persistent storage. Over time, AWS transformed from a feature-poor service to a multi-billion-dollar business by focusing on customer needs, agility, innovation, cost-efficiency, and security. AWS now has an $85B annual revenue run rate and offers over 3,300 new features and services each year, catering to a wide range of customers from start-ups to multinational companies and public sector organizations. | Amazon is successful due to its innovative business models, continuous technological advancements, and strategic organizational changes. The company has consistently disrupted traditional industries by introducing new ideas, such as an ecommerce platform for various products and services, a third-party marketplace, cloud infrastructure services (AWS), the Kindle e-reader, and the Alexa voice-driven personal assistant. Additionally, Amazon has made structural changes to improve its efficiency, such as reorganizing its US fulfillment network to decrease costs and delivery times, further contributing to its success. | Similar to the LLM chain extractor, the LLM chain filter makes sure that although the key points are covered, the output is efficient for customers looking for concise and contextual answers. |

Comparing these techniques, we can see that in contexts such as detailing AWS's transition from a simple service to a complex, multi-billion-dollar entity, or explaining Amazon's strategic successes, the regular retriever chain lacks the precision that the more sophisticated techniques offer, which leads to less targeted information. Although few differences are visible between the advanced techniques discussed, they are far more informative than the regular retriever chain.

For customers in industries such as healthcare, telecommunications, and financial services who are looking to implement RAG in their applications, the limitations of the regular retriever chain in providing precision, avoiding redundancy, and effectively compressing information make it less suited to these needs than the more advanced parent document retriever and contextual compression techniques. These techniques can distill vast amounts of information into the concentrated, impactful insights that you need, while helping improve price-performance.

Clean up

When you're done running the notebook, delete the resources you created to avoid accruing charges for resources that are still in use.
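Assuming you still hold the predictor objects returned when the endpoints were deployed with the SageMaker Python SDK, cleanup can be sketched as follows. The helper name is hypothetical; `delete_model` and `delete_endpoint` are methods of the SDK's predictor class.

```python
def delete_endpoints(predictors):
    """Delete the model and then the endpoint behind each SageMaker predictor."""
    for predictor in predictors:
        predictor.delete_model()     # remove the model while the endpoint config still exists
        predictor.delete_endpoint()  # remove the endpoint to stop instance charges
```

For example, `delete_endpoints([llm_predictor, embedding_predictor])` would remove both the text generation and the embedding endpoints, where the two variable names stand for whatever predictors you created.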

Conclusion

In this post, we presented a solution that lets you implement the parent document retriever and contextual compression chain techniques to enhance the ability of LLMs to process and generate information. We tested these advanced RAG techniques with the Mixtral-8x7B Instruct and BGE Large En models available in SageMaker JumpStart. We also explored using persistent storage for embeddings and document chunks, and integration with enterprise data stores.

The techniques we applied not only refine the way LLMs access and incorporate external knowledge, but also improve the quality, relevance, and efficiency of their outputs. By combining retrieval from large text corpora with language generation capabilities, these advanced RAG techniques enable LLMs to produce more factual, coherent, and context-appropriate responses, improving their performance across a range of natural language processing tasks.

SageMaker JumpStart is at the center of this solution. With SageMaker JumpStart, you gain access to an extensive assortment of open and closed source models, which streamlines getting started with ML and enables rapid experimentation and deployment. To get started deploying this solution, navigate to the notebook in the GitHub repo.

About the Authors

Niithiyn Vijeaswaran is a Solutions Architect at AWS. His area of focus is generative AI and AWS AI Accelerators. He holds a Bachelor's degree in Computer Science and Bioinformatics. Niithiyn works closely with the Generative AI GTM team to enable AWS customers on multiple fronts and accelerate their adoption of generative AI. He's an avid fan of the Dallas Mavericks and enjoys collecting sneakers.

Sebastian Bustillo is a Solutions Architect at AWS. He focuses on AI/ML technologies with a profound passion for generative AI and compute accelerators. At AWS, he helps customers unlock business value through generative AI. When he's not at work, he enjoys brewing a perfect cup of specialty coffee and exploring the world with his wife.

Armando Diaz is a Solutions Architect at AWS. He focuses on generative AI, AI/ML, and Data Analytics. At AWS, Armando helps customers integrate cutting-edge generative AI capabilities into their systems, fostering innovation and competitive advantage. When he's not at work, he enjoys spending time with his wife and family, hiking, and traveling the world.

Dr. Farooq Sabir is a Senior Specialist Solutions Architect at AWS. He holds PhD and MS degrees in Electrical Engineering from the University of Texas at Austin and an MS in Computer Science from the Georgia Institute of Technology. He has over 15 years of work experience and also likes to teach and mentor college students. At AWS, he helps customers formulate and solve their business problems in data science, machine learning, computer vision, artificial intelligence, numerical optimization, and related domains. Based in Dallas, Texas, he and his family love to travel and go on long road trips.

Marco Punio is a Solutions Architect focused on generative AI strategy, applied AI solutions, and conducting research to help customers hyperscale on AWS. Marco is a digital native cloud advisor with experience in the FinTech, Healthcare & Life Sciences, and Software-as-a-service industries and, most recently, in Telecommunications. He is a qualified technologist with a passion for machine learning, artificial intelligence, and mergers & acquisitions. Marco is based in Seattle, WA and enjoys writing, reading, exercising, and building applications in his free time.

AJ Dhimin is a Solutions Architect at AWS. He specializes in generative AI, serverless computing, and data analytics. He is an active member and mentor in the Machine Learning Technical Field Community and has published several scientific papers on various AI/ML topics. He works with customers, ranging from start-ups to enterprises, to develop AWSome generative AI solutions. He is particularly passionate about using large language models for advanced data analytics and exploring practical applications that address real-world challenges. Outside of work, AJ enjoys traveling, and has so far visited 53 countries with a goal of visiting every country in the world.

- Source: https://aws.amazon.com/blogs/machine-learning/advanced-rag-patterns-on-amazon-sagemaker/