Gabatarwa

Koyo game da Ayyukan Window a PySpark na iya zama ƙalubale amma ya cancanci ƙoƙarin. Ayyukan Window kayan aiki ne mai ƙarfi don nazarin bayanai kuma suna iya taimaka muku samun bayanan da ƙila ba ku taɓa gani ba. Ta hanyar fahimtar yadda ake amfani da Ayyukan Window a cikin Spark; zaka iya daukar naka bincike bayanai basira zuwa mataki na gaba kuma ku yanke shawara mai zurfi. Ko kana aiki da babba ko ƙarami datasets, Koyan Ayyukan Window a cikin Spark zai ba ku damar sarrafa da kuma nazarin bayanai ta sababbin hanyoyi masu ban sha'awa.

A cikin wannan shafin, za mu fara fahimtar manufar ayyukan taga sannan mu tattauna yadda ake amfani da su tare da Spark SQL da PySpark DataFrame API. Don haka a ƙarshen wannan labarin, zaku fahimci yadda ake amfani da ayyukan taga tare da bayanan bayanan gaske kuma ku sami mahimman bayanai don kasuwanci.

makasudin

- Fahimtar manufar ayyukan taga.

- Yin aiki tare da ayyukan taga ta amfani da saitin bayanai.

- Nemo abubuwan fahimta ta amfani da ayyukan taga.

- Yi amfani da Spark SQL da DataFrame API don aiki tare da ayyukan taga.

An buga wannan labarin a matsayin wani ɓangare na Bayanan Kimiyya Blogathon.

Table da ke ciki

Menene Ayyukan Taga?

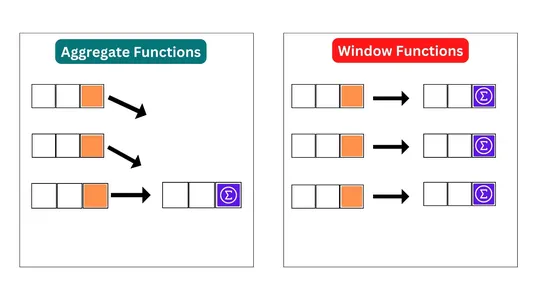

Ayyukan taga suna taimakawa tantance bayanai a cikin rukunin layuka waɗanda ke da alaƙa da juna. Suna baiwa masu amfani damar yin hadaddun sauye-sauye akan layuka na tsarin bayanai ko saitin bayanai masu alaƙa da juna dangane da wasu sharuɗɗan rarrabawa da oda.

Ayyukan taga suna aiki akan takamaiman yanki na tsarin bayanai ko saitin bayanai da aka ayyana ta hanyar saitin ginshiƙan rarrabawa. The KARANTA DA juzu'i yana raba bayanan a cikin aikin taga don tsara shi cikin takamaiman tsari. Ayyukan taga sai su yi lissafin akan tagar layuka masu zamewa waɗanda suka haɗa da jeri na yanzu da juzu'in na baya ko dai 'da'/'ko' na gaba, kamar yadda aka ƙayyade a firam ɗin taga.

Wasu misalan gama-gari na ayyukan taga sun haɗa da ƙididdige matsakaita masu motsi, matsayi ko rarraba layuka bisa takamaiman shafi ko rukuni na ginshikan, ƙididdige jimlar gudu, da gano ƙimar farko ko ta ƙarshe a cikin rukunin layuka. Tare da ayyukan taga mai ƙarfi na Spark, masu amfani za su iya yin nazari mai rikitarwa da tari manyan bayanai tare da sauƙi mai sauƙi, yana mai da shi kayan aiki mai mahimmanci ga manyan sarrafa bayanai da nazari.

Ayyukan Window a cikin SQL

Spark SQL yana goyan bayan nau'ikan ayyukan taga guda uku:

- Ayyukan Matsayi:- Waɗannan ayyuka suna ba da matsayi ga kowane jere a cikin ɓangaren saitin sakamako. Misali, aikin ROW_NUMBER() yana ba da lamba ta musamman ga kowane jere a cikin sashin.

- Ayyukan Nazari:- Waɗannan ayyuka suna lissafta jimillar ƙima akan tagar layuka. Misali, aikin SUM() yana lissafin jimlar shafi akan tagar layuka.

- Ayyukan ƙima:- Waɗannan ayyuka suna ƙididdige ƙimar nazari ga kowane jeri a cikin bangare, dangane da ƙimar sauran layuka a cikin bangare ɗaya. Misali, aikin LAG() yana dawo da ƙimar shafi daga jeren baya a cikin ɓangaren.

Ƙirƙirar DataFrame

Za mu ƙirƙiri wani samfurin dataframe don haka, cewa za mu iya a zahiri aiki tare da daban-daban taga ayyuka. Hakanan za mu yi ƙoƙarin amsa wasu tambayoyi tare da taimakon wannan bayanan da ayyukan taga.

Tsarin bayanan yana da cikakkun bayanai na ma'aikata kamar Sunan su, Naɗi, Lambar Ma'aikata, Kwanan Aikin Hayar, Albashi da sauransu. Gabaɗaya muna da ginshiƙai 8 waɗanda ke kamar haka:

- 'empno': Wannan shafi ya ƙunshi lambar ma'aikaci.

- 'ename': Wannan shafi yana da sunayen ma'aikata.

- 'aiki': Wannan shafi yana ƙunshe da bayanai game da sunayen ayyukan ma'aikata.

- 'hayar': Wannan shafi yana nuna kwanan watan hayar ma'aikaci.

- 'sal': Bayanin albashi ya ƙunshi a cikin wannan shafi.

- 'comm': Wannan shafi yana da cikakkun bayanan hukumar ma'aikata, idan akwai.

- 'deptno': Lambar sashen da ma'aikaci ke cikinta tana cikin wannan shafi.

# Create Sample Dataframe

employees = [

(7369, "SMITH", "CLERK", "17-Dec-80", 800, 20, 10),

(7499, "ALLEN", "SALESMAN", "20-Feb-81", 1600, 300, 30),

(7521, "WARD", "SALESMAN", "22-Feb-81", 1250, 500, 30),

(7566, "JONES", "MANAGER", "2-Apr-81", 2975, 0, 20),

(7654, "MARTIN", "SALESMAN", "28-Sep-81", 1250, 1400, 30),

(7698, "BLAKE", "MANAGER", "1-May-81", 2850, 0, 30),

(7782, "CLARK", "MANAGER", "9-Jun-81", 2450, 0, 10),

(7788, "SCOTT", "ANALYST", "19-Apr-87", 3000, 0, 20),

(7629, "ALEX", "SALESMAN", "28-Sep-79", 1150, 1400, 30),

(7839, "KING", "PRESIDENT", "17-Nov-81", 5000, 0, 10),

(7844, "TURNER", "SALESMAN", "8-Sep-81", 1500, 0, 30),

(7876, "ADAMS", "CLERK", "23-May-87", 1100, 0, 20)

]# create dataframe

emp_df = spark.createDataFrame(employees,

["empno", "ename", "job", "hiredate", "sal", "comm", "deptno"])

emp_df.show()

# Output:

+-----+------+---------+---------+----+----+------+

|empno| ename| job| hiredate| sal|comm|deptno|

+-----+------+---------+---------+----+----+------+

| 7369| SMITH| CLERK|17-Dec-80| 800| 20| 10|

| 7499| ALLEN| SALESMAN|20-Feb-81|1600| 300| 30|

| 7521| WARD| SALESMAN|22-Feb-81|1250| 500| 30|

| 7566| JONES| MANAGER| 2-Apr-81|2975| 0| 20|

| 7654|MARTIN| SALESMAN|28-Sep-81|1250|1400| 30|

| 7698| BLAKE| MANAGER| 1-May-81|2850| 0| 30|

| 7782| CLARK| MANAGER| 9-Jun-81|2450| 0| 10|

| 7788| SCOTT| ANALYST|19-Apr-87|3000| 0| 20|

| 7629| ALEX| SALESMAN|28-Sep-79|1150|1400| 30|

| 7839| KING|PRESIDENT|17-Nov-81|5000| 0| 10|

| 7844|TURNER| SALESMAN| 8-Sep-81|1500| 0| 30|

| 7876| ADAMS| CLERK|23-May-87|1100| 0| 20|

+-----+------+---------+---------+----+----+------+Yanzu za mu duba tsarin:

# Checking the schema

emp_df.printSchema()

# Output:-

root

|-- empno: long (nullable = true)

|-- ename: string (nullable = true)

|-- job: string (nullable = true)

|-- hiredate: string (nullable = true)

|-- sal: long (nullable = true)

|-- comm: long (nullable = true)

|-- deptno: long (nullable = true)Ƙirƙiri ra'ayi na ɗan lokaci na DataFrame 'emp_df' tare da sunan "emp". Yana ba mu damar bincika DataFrame ta amfani da SQL syntax a cikin Spark SQL kamar tebur. Duban ɗan lokaci yana aiki ne kawai na tsawon lokacin Zama Spark.

emp_df.createOrReplaceTempView("emp")Magance Maganganun Matsala Ta Amfani da Ayyukan Taga

Anan za mu magance maganganun matsaloli da yawa ta amfani da ayyukan windows:

Q1. Sanya albashi a cikin kowane sashe.

# Using spark sql

rank_df = spark.sql(

"""SELECT empno, ename, job, deptno, sal,

RANK() OVER (PARTITION BY deptno ORDER BY sal DESC) AS rank FROM emp""")

rank_df.show()

# Using PySpark

windowSpec = Window.partitionBy(col('deptno')).orderBy(col('sal').desc())

ranking_result_df = emp_df.select('empno', 'ename', 'job', 'deptno', 'sal',

F.rank().over(windowSpec).alias('rank'))

ranking_result_df.show()

# Output:-

+-----+------+---------+------+----+----+

|empno| ename| job|deptno| sal|rank|

+-----+------+---------+------+----+----+

| 7839| KING|PRESIDENT| 10|5000| 1|

| 7782| CLARK| MANAGER| 10|2450| 2|

| 7369| SMITH| CLERK| 10| 800| 3|

| 7788| SCOTT| ANALYST| 20|3000| 1|

| 7566| JONES| MANAGER| 20|2975| 2|

| 7876| ADAMS| CLERK| 20|1100| 3|

| 7698| BLAKE| MANAGER| 30|2850| 1|

| 7499| ALLEN| SALESMAN| 30|1600| 2|

| 7844|TURNER| SALESMAN| 30|1500| 3|

| 7521| WARD| SALESMAN| 30|1250| 4|

| 7654|MARTIN| SALESMAN| 30|1250| 4|

| 7629| ALEX| SALESMAN| 30|1150| 6|

+-----+------+---------+------+----+----+Hanyar don lambar PySpark

- Aikin Window yana raba bayanai ta lambar sashen ta amfani da partitionBy(col('deptno')) sannan ya ba da umarnin bayanan cikin kowane bangare ta hanyar albashi ta hanyar saukowa ta amfani da orderBy('sal').desc()). WindowSpec mai canzawa yana riƙe da ƙayyadaddun taga na ƙarshe.

- 'emp_df' shine tsarin data wanda ya ƙunshi bayanan ma'aikata, gami da ginshiƙan empno, ename, aiki, deptno da sal.

- Ana amfani da aikin daraja zuwa ginshiƙin albashi ta amfani da 'F.rank() .over(windowSpec)' a cikin zaɓaɓɓen bayanin. Shagon da aka samu yana da sunan laƙabi a matsayin 'rank'.

- Zai ƙirƙiri tsarin bayanai, 'ranking_result_df', wanda ya haɗa da empno, ename, aiki, deptno, da albashi. Hakanan yana da sabon ginshiƙi, 'rank', wanda ke wakiltar matsayin albashin ma'aikaci a cikin sashinsu.

fitarwa:

Sakamakon yana da matsayi na albashi a kowane sashe.

Q2. Babban darajar albashi a cikin kowane sashe.

# Using Spark SQL

dense_df = spark.sql(

"""SELECT empno, ename, job, deptno, sal,

DENSE_RANK() OVER (PARTITION BY deptno ORDER BY sal DESC)

AS dense_rank FROM emp""")

dense_df.show()

# Using PySpark

windowSpec = Window.partitionBy(col('deptno')).orderBy(col('sal').desc())

dense_ranking_df=emp_df.select('empno', 'ename', 'job', 'deptno', 'sal',

F.dense_rank().over(windowSpec).alias('dense_rank'))

dense_ranking_df.show()

# Output:-

+-----+------+---------+------+----+----------+

|empno| ename| job|deptno| sal|dense_rank|

+-----+------+---------+------+----+----------+

| 7839| KING|PRESIDENT| 10|5000| 1|

| 7782| CLARK| MANAGER| 10|2450| 2|

| 7369| SMITH| CLERK| 10| 800| 3|

| 7788| SCOTT| ANALYST| 20|3000| 1|

| 7566| JONES| MANAGER| 20|2975| 2|

| 7876| ADAMS| CLERK| 20|1100| 3|

| 7698| BLAKE| MANAGER| 30|2850| 1|

| 7499| ALLEN| SALESMAN| 30|1600| 2|

| 7844|TURNER| SALESMAN| 30|1500| 3|

| 7521| WARD| SALESMAN| 30|1250| 4|

| 7654|MARTIN| SALESMAN| 30|1250| 4|

| 7629| ALEX| SALESMAN| 30|1150| 5|

+-----+------+---------+------+----+----------+Hanyar don lambar PySpark

- Da farko, ƙirƙiri ƙayyadaddun tagar ta amfani da aikin Window, wanda ya raba 'emp_df' DataFrame ta deptno kuma ya ba da umarni ta hanyar saukowa shafi na 'sal'.

- Sannan, ana amfani da aikin dense_rank() akan ƙayyadaddun taga, wanda ke ba da matsayi mai yawa ga kowane jere a cikin kowane bangare dangane da tsarin da aka tsara.

- A ƙarshe, an ƙirƙiri sabon DataFrame da ake kira 'dense_ranking_df' ta zaɓi takamaiman ginshiƙai daga emp_df (watau 'empno', 'ename', 'aikin', 'deptno', da 'sal') da ƙara sabon shafi 'dense_rank' cewa ya ƙunshi ƙima mai yawa da aka ƙididdige su ta aikin taga.

- A ƙarshe, nuna sakamakon DataFrame a cikin tsarin tabular.

fitarwa:

Sakamakon yana da darajar albashi mai yawa.

Q3. Lamba layi a cikin kowane sashe.

# Using Spark SQL

row_df = spark.sql(

"""SELECT empno, ename, job, deptno, sal,

ROW_NUMBER() OVER (PARTITION BY deptno ORDER BY sal DESC)

AS row_num FROM emp """)

row_df.show()

# Using PySpark code

windowSpec = Window.partitionBy(col('deptno')).orderBy(col('sal').desc())

row_num_df = emp_df.select('empno', 'ename', 'job', 'deptno', 'sal',

F.row_number().over(windowSpec).alias('row_num'))

row_num_df.show()

# Output:-

+-----+------+---------+------+----+-------+

|empno| ename| job|deptno| sal|row_num|

+-----+------+---------+------+----+-------+

| 7839| KING|PRESIDENT| 10|5000| 1|

| 7782| CLARK| MANAGER| 10|2450| 2|

| 7369| SMITH| CLERK| 10| 800| 3|

| 7788| SCOTT| ANALYST| 20|3000| 1|

| 7566| JONES| MANAGER| 20|2975| 2|

| 7876| ADAMS| CLERK| 20|1100| 3|

| 7698| BLAKE| MANAGER| 30|2850| 1|

| 7499| ALLEN| SALESMAN| 30|1600| 2|

| 7844|TURNER| SALESMAN| 30|1500| 3|

| 7521| WARD| SALESMAN| 30|1250| 4|

| 7654|MARTIN| SALESMAN| 30|1250| 5|

| 7629| ALEX| SALESMAN| 30|1150| 6|

+-----+------+---------+------+----+-------+Hanyar don lambar PySpark

- Layin farko yana bayyana ƙayyadaddun taga don lissafin ta amfani da ayyukan Window.partitionBy () da Window.orderBy(). Wannan taga an raba ta da ginshiƙin deptno kuma an yi oda ta hanyar sal column a cikin tsari mai saukowa.

- Layi na biyu ya ƙirƙiri sabon DataFrame mai suna 'row_num_df', hasashe na 'emp_df' tare da ƙarin ginshiƙi mai suna 'row_num' kuma yana ɗauke da bayanan lambobi.

- Ayyukan nuni() yana nuna sakamakon DataFrame, wanda ke nuna empno, ename, job, deptno, sal, da row_num ginshiƙan kowane ma'aikaci.

fitarwa:

Fitowar za ta sami lambar layin kowane ma'aikaci a cikin sashinsu bisa ladan albashi.

Q4. Gudun jimlar adadin albashi a cikin kowane sashe.

# Using Spark SQL

running_sum_df = spark.sql(

"""SELECT empno, ename, job, deptno, sal,

SUM(sal) OVER (PARTITION BY deptno ORDER BY sal DESC)

AS running_total FROM emp

""")

running_sum_df.show()

# Using PySpar

windowSpec = Window.partitionBy(col('deptno')).orderBy(col('sal').desc())

running_sum_sal_df= emp_df.select('empno', 'ename', 'job', 'deptno', 'sal',

F.sum('sal').over(windowSpec).alias('running_total'))

running_sum_sal_df.show()

# Output:-

+-----+------+---------+------+----+-------------+

|empno| ename| job|deptno| sal|running_total|

+-----+------+---------+------+----+-------------+

| 7839| KING|PRESIDENT| 10|5000| 5000|

| 7782| CLARK| MANAGER| 10|2450| 7450|

| 7369| SMITH| CLERK| 10| 800| 8250|

| 7788| SCOTT| ANALYST| 20|3000| 3000|

| 7566| JONES| MANAGER| 20|2975| 5975|

| 7876| ADAMS| CLERK| 20|1100| 7075|

| 7698| BLAKE| MANAGER| 30|2850| 2850|

| 7499| ALLEN| SALESMAN| 30|1600| 4450|

| 7844|TURNER| SALESMAN| 30|1500| 5950|

| 7521| WARD| SALESMAN| 30|1250| 8450|

| 7654|MARTIN| SALESMAN| 30|1250| 8450|

| 7629| ALEX| SALESMAN| 30|1150| 9600|

+-----+------+---------+------+----+-------------+Approach don lambar PySpark

- Da farko, an bayyana takamaiman taga ta amfani da hanyoyin "Window.partitionBy()" da "Window.orderBy()". Hanyar "partitionBy()" tana raba bayanan ta hanyar "deptno", yayin da hanyar "orderBy()" tana ba da odar bayanai ta hanyar "sal" a cikin tsari mai saukowa.

- Bayan haka, ana amfani da aikin “ jimla ()” zuwa ginshiƙin “sal” ta amfani da hanyar “over()” don ƙididdige yawan adadin albashin da ke cikin kowane sashe. Sakamakon zai kasance a cikin sabon DataFrame mai suna "running_sum_sal_df", wanda ya ƙunshi ginshiƙan 'empno', 'ename', 'aiki', 'deptno', 'sal', da 'running_total'.

- A ƙarshe, ana kiran hanyar “show()” akan “running_sum_sal_df”DataFrame don nuna fitowar tambayar. Sakamakon DataFrame yana nuna jimlar albashin kowane ma'aikaci da sauran bayanai kamar suna, lambar sashe, da aiki.

fitarwa:

Fitowar za ta sami jimillar bayanan albashin kowane sashe.

Q5: Albashi na gaba a cikin kowane sashe.

Don nemo albashi na gaba a cikin kowane sashe muna amfani da aikin LEAD.

Ayyukan taga jagora () yana taimakawa don samun ƙimar magana a jere na gaba na ɓangaren taga. Yana mayar da ginshiƙi ga kowane ginshiƙin shigarwa, inda kowane shafi zai ƙunshi ƙimar ginshiƙin shigarwa don layin daidaitawa sama da layin na yanzu a cikin ɓangaren taga. Ma'anar aikin jagoran shine: - gubar (col, offset=1, default=Babu).

# Using Spark SQL

next_sal_df = spark.sql(

"""SELECT empno, ename, job, deptno, sal, LEAD(sal, 1)

OVER (PARTITION BY deptno ORDER BY sal DESC) AS next_val FROM emp

""")

next_sal_df.show()

# Output:-

+-----+------+---------+------+----+--------+

|empno| ename| job|deptno| sal|next_val|

+-----+------+---------+------+----+--------+

| 7839| KING|PRESIDENT| 10|5000| 2450|

| 7782| CLARK| MANAGER| 10|2450| 800|

| 7369| SMITH| CLERK| 10| 800| null|

| 7788| SCOTT| ANALYST| 20|3000| 2975|

| 7566| JONES| MANAGER| 20|2975| 1100|

| 7876| ADAMS| CLERK| 20|1100| null|

| 7698| BLAKE| MANAGER| 30|2850| 1600|

| 7499| ALLEN| SALESMAN| 30|1600| 1500|

| 7844|TURNER| SALESMAN| 30|1500| 1250|

| 7521| WARD| SALESMAN| 30|1250| 1250|

| 7654|MARTIN| SALESMAN| 30|1250| 1150|

| 7629| ALEX| SALESMAN| 30|1150| null|

+-----+------+---------+------+----+--------+

# Using PySpark

windowSpec = Window.partitionBy(col('deptno')).orderBy(col('sal').desc())

next_salary_df = emp_df.select('empno', 'ename', 'job', 'deptno', 'sal',

F.lead('sal', offset=1, default=0).over(windowSpec).alias('next_val'))

next_salary_df.show()

# Output:-

+-----+------+---------+------+----+--------+

|empno| ename| job|deptno| sal|next_val|

+-----+------+---------+------+----+--------+

| 7839| KING|PRESIDENT| 10|5000| 2450|

| 7782| CLARK| MANAGER| 10|2450| 800|

| 7369| SMITH| CLERK| 10| 800| 0|

| 7788| SCOTT| ANALYST| 20|3000| 2975|

| 7566| JONES| MANAGER| 20|2975| 1100|

| 7876| ADAMS| CLERK| 20|1100| 0|

| 7698| BLAKE| MANAGER| 30|2850| 1600|

| 7499| ALLEN| SALESMAN| 30|1600| 1500|

| 7844|TURNER| SALESMAN| 30|1500| 1250|

| 7521| WARD| SALESMAN| 30|1250| 1250|

| 7654|MARTIN| SALESMAN| 30|1250| 1150|

| 7629| ALEX| SALESMAN| 30|1150| 0|

+-----+------+---------+------+----+--------+Hanyar don lambar PySpark

- Na farko, aikin taga yana taimakawa wajen raba layuka na DataFrame ta lambar sashen (deptno) da kuma ba da odar albashi a cikin tsari mai saukowa a cikin kowane bangare.

- Daga nan ana amfani da aikin gubar () zuwa ginshiƙin 'sal' da aka ba da odar a cikin kowane bangare don dawo da albashin ma'aikaci mai zuwa (tare da kashe 1), kuma ƙimar da ta gabata ita ce 0 idan babu ma'aikaci na gaba.

- Sakamakon DataFrame 'next_salary_df' ya ƙunshi ginshiƙai don lambar ma'aikaci (empno), suna (ename), taken aiki (aiki), lambar sashe (deptno), albashi na yanzu (sal), da albashi na gaba (na gaba_val).

fitarwa:

Fitowar ta ƙunshi albashin ma'aikaci na gaba a sashen bisa tsarin albashin da ya ragu.

Q6. Albashin da ya gabata a cikin kowane sashe.

Don lissafin albashin da ya gabata, muna amfani da aikin LAG.

Ayyukan lag ɗin yana dawo da ƙimar magana a ɓangarorin da aka bayar kafin layin na yanzu a cikin ɓangaren taga. Ma'anar aikin lag shine:- lag(expr, offset=1, default=Babu).over(windowSpec).

# Using Spark SQL

preious_sal_df = spark.sql(

"""SELECT empno, ename, job, deptno, sal, LAG(sal, 1)

OVER (PARTITION BY deptno ORDER BY sal DESC)

AS prev_val FROM emp

""")

preious_sal_df.show()

# Output:-

+-----+------+---------+------+----+--------+

|empno| ename| job|deptno| sal|prev_val|

+-----+------+---------+------+----+--------+

| 7839| KING|PRESIDENT| 10|5000| null|

| 7782| CLARK| MANAGER| 10|2450| 5000|

| 7369| SMITH| CLERK| 10| 800| 2450|

| 7788| SCOTT| ANALYST| 20|3000| null|

| 7566| JONES| MANAGER| 20|2975| 3000|

| 7876| ADAMS| CLERK| 20|1100| 2975|

| 7698| BLAKE| MANAGER| 30|2850| null|

| 7499| ALLEN| SALESMAN| 30|1600| 2850|

| 7844|TURNER| SALESMAN| 30|1500| 1600|

| 7521| WARD| SALESMAN| 30|1250| 1500|

| 7654|MARTIN| SALESMAN| 30|1250| 1250|

| 7629| ALEX| SALESMAN| 30|1150| 1250|

+-----+------+---------+------+----+--------+

# Using PySpark

windowSpec = Window.partitionBy(col('deptno')).orderBy(col('sal').desc())

prev_sal_df = emp_df.select('empno', 'ename', 'job', 'deptno', 'sal',

F.lag('sal', offset=1, default=0).over(windowSpec).alias('prev_val'))

prev_sal_df.show()

# Output:-

+-----+------+---------+------+----+--------+

|empno| ename| job|deptno| sal|prev_val|

+-----+------+---------+------+----+--------+

| 7839| KING|PRESIDENT| 10|5000| 0|

| 7782| CLARK| MANAGER| 10|2450| 5000|

| 7369| SMITH| CLERK| 10| 800| 2450|

| 7788| SCOTT| ANALYST| 20|3000| 0|

| 7566| JONES| MANAGER| 20|2975| 3000|

| 7876| ADAMS| CLERK| 20|1100| 2975|

| 7698| BLAKE| MANAGER| 30|2850| 0|

| 7499| ALLEN| SALESMAN| 30|1600| 2850|

| 7844|TURNER| SALESMAN| 30|1500| 1600|

| 7521| WARD| SALESMAN| 30|1250| 1500|

| 7654|MARTIN| SALESMAN| 30|1250| 1250|

| 7629| ALEX| SALESMAN| 30|1150| 1250|

+-----+------+---------+------+----+--------+Hanyar don lambar PySpark

- Window.partitionBy(col('deptno')) yana ƙayyadadden ɓangaren taga. Yana nufin cewa aikin taga yana aiki daban don kowane sashe.

- Sa'an nan orderBy ('sal').desc())) ya ƙayyade tsarin albashi kuma zai ba da umarnin albashi a cikin kowane sashe a cikin tsari mai saukowa.

- F.lag ('sal', kashewa = 1, tsoho = 0).over(windowSpec).alias('prev_val') yana ƙirƙirar sabon shafi mai suna prev_val a cikin DataFrame 'prev_sal_df'.

- Ga kowane layi, wannan shafi yana ƙunshe da ƙimar ginshiƙin 'sal' daga layin da ya gabata a cikin taga da tagaSpec ya ayyana.

- Ma'auni = 1 siga yana nuna cewa layin da ya gabata yakamata ya zama layi daya kafin layin na yanzu, kuma tsoho = 0 yana ƙayyadaddun ƙimar ƙimar layin farko a kowane bangare (tunda babu layin baya na layin farko).

- A ƙarshe, prev_sal_df.show() yana nuna sakamakon DataFrame.

fitarwa:

Fitowar tana wakiltar albashin da ya gabata ga kowane ma'aikaci a cikin kowane sashe, dangane da ba da odar albashin cikin tsari.

Q7. Albashi na farko a cikin kowane sashe da kwatanta da kowane memba a cikin kowane sashe.

# Using Spark SQL

first_val_df = spark.sql("""SELECT empno, ename, job, deptno, sal,

FIRST_VALUE(sal) OVER (PARTITION BY deptno ORDER BY sal DESC)

AS first_val FROM emp """)

first_val_df.show()

# Using PySpark

windowSpec = Window.partitionBy(col('deptno')).orderBy(col('sal').desc())

first_value_df = emp_df.select('empno', 'ename', 'job', 'deptno', 'sal',

F.first('sal').over(windowSpec).alias('first_val'))

first_value_df.show()

# Output:-

+-----+------+---------+------+----+---------+

|empno| ename| job|deptno| sal|first_val|

+-----+------+---------+------+----+---------+

| 7839| KING|PRESIDENT| 10|5000| 5000|

| 7782| CLARK| MANAGER| 10|2450| 5000|

| 7369| SMITH| CLERK| 10| 800| 5000|

| 7788| SCOTT| ANALYST| 20|3000| 3000|

| 7566| JONES| MANAGER| 20|2975| 3000|

| 7876| ADAMS| CLERK| 20|1100| 3000|

| 7698| BLAKE| MANAGER| 30|2850| 2850|

| 7499| ALLEN| SALESMAN| 30|1600| 2850|

| 7844|TURNER| SALESMAN| 30|1500| 2850|

| 7521| WARD| SALESMAN| 30|1250| 2850|

| 7654|MARTIN| SALESMAN| 30|1250| 2850|

| 7629| ALEX| SALESMAN| 30|1150| 2850|

+-----+------+---------+------+----+---------+Hanyar don lambar PySpark

- Da farko, ƙirƙiri wani abu na WindowSpec wanda ke raba bayanan ta lambar sashe (deptno) kuma a yi oda ta hanyar albashi (sal) cikin tsari mai saukowa.

- Sa'an nan kuma aiwatar da aikin na farko () na nazari zuwa ginshiƙin 'sal' akan taga da aka ayyana ta windowSpec. Wannan aikin yana dawo da ƙimar farko na ginshiƙin 'sal' a cikin kowane bangare (watau kowace ƙungiyar deptno) da aka yi oda ta hanyar saukowa 'sal'. Shagon da aka samu yana da sabon suna, 'first_val'.

- Yanzu sanya sakamakon DataFrame, wanda ya ƙunshi ginshiƙai da aka zaɓa da sabon ginshiƙi, 'first_val', wanda ke nuna mafi girman albashi na farko ga kowane sashe bisa ƙimar ƙimar albashi, zuwa sabon canji mai suna 'first_value_df'.

fitarwa:

Fitowar ta nuna mafi girman albashi na farko ga kowane sashe a cikin ma'aikaci DataFrame.

Kammalawa

A cikin wannan labarin, mun koyi game da ayyukan taga. Spark SQL yana da nau'ikan ayyukan taga guda uku: Ayyuka masu ƙima, Ayyukan Tari da Ayyukan Ƙimar. Yin amfani da wannan aikin, mun yi aiki a kan tsarin bayanai don nemo wasu mahimman bayanai masu mahimmanci. Ayyukan Window Spark suna ba da kayan aikin bincike masu ƙarfi kamar matsayi, nazari, da ƙididdige ƙima. Ko ana nazarin fahimtar albashi ta sashen ko yin amfani da misalai masu amfani tare da PySpark & SQL, waɗannan ayyukan suna ba da kayan aiki masu mahimmanci don ingantaccen sarrafa bayanai da bincike a cikin Spark.

Maɓallin Takeaways

- Mun koyi game da ayyukan taga kuma mun yi aiki tare da su ta amfani da Spark SQL da PySpark DataFrame API.

- Muna amfani da ayyuka kamar matsayi, dense_rank, row_number, lag, lead, groupBy, partitionBy, da sauran ayyuka don samar da ingantaccen bincike.

- Mun kuma ga dalla-dalla matakan magance matsalar kuma mun bincika abubuwan da aka fitar a ƙarshen kowace bayanin matsala.

Wannan binciken yana taimaka muku fahimtar ayyukan PySpark. Idan kuna da wata ra'ayi ko tambayoyi, to kuyi sharhi a ƙasa. Haɗa ni akan LinkedIn domin cigaba da tattaunawa. Ci gaba da Koyo!!!

Kafofin watsa labaru da aka nuna a cikin wannan labarin ba mallakin Vidhya Analytics bane kuma ana amfani dashi bisa ga ra'ayin Mawallafin.

- Ƙunshin Ƙarfafa SEO & Rarraba PR. Samun Girma a Yau.

- PlatoData.Network Tsaye Generative Ai. Karfafa Kanka. Shiga Nan.

- PlatoAiStream. Web3 Mai hankali. Ilmi Ya Faru. Shiga Nan.

- PlatoESG. Carbon, CleanTech, Kuzari, Muhalli, Solar, Gudanar da Sharar gida. Shiga Nan.

- PlatoHealth. Biotech da Clinical Trials Intelligence. Shiga Nan.

- Source: https://www.analyticsvidhya.com/blog/2024/03/working-with-window-functions-in-pyspark/