In the realm of generative AI, open-source projects have emerged as powerful tools that democratize access to cutting-edge models and foster collaborative development. They enable researchers, developers, and enthusiasts to experiment, improve upon existing models, and create novel applications that benefit society as a whole.

In this article, I briefly review the key open-source projects and discuss their potential as well as their safety risks.

Hugging Face: A Hub of Open-Source AI Models

Hugging Face stands out as a prime hub for open-source models in various AI domains. Hosting over 200,000 models, it bridges the gap between academic research and industry applications. The models are contributed by tech leaders like Meta, Microsoft, Google, and OpenAI, as well as researchers across the globe.

Notably, Hugging Face extends beyond hosting models. It has a suite of its own libraries, such as the Transformers and Diffusers libraries, catering to an array of tasks.

- The Transformers library provides APIs and tools to easily download and train state-of-the-art pretrained models. These models support common tasks in different modalities, such as:

- Natural Language Processing: text classification, named entity recognition, question answering, language modeling, summarization, translation, multiple choice, and text generation.

- Computer Vision: image classification, object detection, and segmentation.

- Audio: automatic speech recognition and audio classification.

- Multimodal: table question answering, optical character recognition, information extraction from scanned documents, video classification, and visual question answering.
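As a rough illustration of how the task list above maps onto code, the sketch below groups a subset of the task identifiers accepted by the Transformers `pipeline` function by modality. The dictionary `PIPELINE_TASKS` and the helpers `modality_of` and `load_pipeline` are names made up for this example and are not part of the library. Loading a pipeline assumes that `transformers` and a backend such as PyTorch are installed and that a default model can be downloaded.

```python
# Subset of task identifiers accepted by transformers.pipeline,
# grouped by the modalities listed in the article (illustrative, not exhaustive).
PIPELINE_TASKS = {
    "nlp": [
        "text-classification",
        "token-classification",  # named entity recognition
        "question-answering",
        "summarization",
        "text-generation",
    ],
    "vision": ["image-classification", "object-detection", "image-segmentation"],
    "audio": ["automatic-speech-recognition", "audio-classification"],
    "multimodal": [
        "table-question-answering",
        "document-question-answering",
        "visual-question-answering",
        "video-classification",
    ],
}


def modality_of(task):
    """Return the modality group of a task identifier (hypothetical helper)."""
    for modality, tasks in PIPELINE_TASKS.items():
        if task in tasks:
            return modality
    raise ValueError(f"Task not in this example's table: {task!r}")


def load_pipeline(task, model=None):
    """Build a pipeline for a listed task (hypothetical helper).

    With model=None the library picks a default checkpoint for the task,
    which is downloaded on first use.
    """
    modality_of(task)  # reject tasks that are not in the table above
    from transformers import pipeline  # imported lazily: heavy dependency

    return pipeline(task, model=model)


print(modality_of("summarization"))
```

With the dependencies installed, `load_pipeline("text-classification")("Open-source models are great")` should return a list of dictionaries with `label` and `score` keys. The exact labels and scores depend on the default model the library selects.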

- The Diffusers library provides pretrained vision and audio diffusion models, and serves as a modular toolbox for inference and training. More precisely, Diffusers offers:

- state-of-the-art diffusion pipelines that can be run in inference with just a couple of lines of code;

- various noise schedulers that can be used interchangeably for the preferred speed vs. quality trade-off in inference;

- multiple types of models, such as UNet, that can be used as building blocks in an end-to-end diffusion system;

- training examples that show how to train models for the most popular diffusion tasks.
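To make the speed vs. quality trade-off of noise schedulers more concrete, here is a minimal pure-Python sketch of the idea. It does not use the Diffusers API. It assumes a DDPM-style linear noise schedule with commonly used values (1,000 training steps, betas from 1e-4 to 0.02), and the function names are invented for this example.

```python
def linear_beta_schedule(num_train_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, linearly spaced (assumed DDPM-style values)."""
    step = (beta_end - beta_start) / (num_train_steps - 1)
    return [beta_start + i * step for i in range(num_train_steps)]


def cumulative_signal(betas):
    """alpha_bar[t] = product of (1 - beta_s) for s <= t.

    This is the fraction of the original signal variance left at step t.
    """
    kept, product = [], 1.0
    for beta in betas:
        product *= 1.0 - beta
        kept.append(product)
    return kept


def inference_timesteps(num_train_steps, num_inference_steps):
    """Evenly strided subset of training timesteps, noisiest first."""
    if not 0 < num_inference_steps <= num_train_steps:
        raise ValueError("num_inference_steps must be in 1..num_train_steps")
    stride = num_train_steps // num_inference_steps
    return [i * stride for i in range(num_inference_steps)][::-1]


betas = linear_beta_schedule()
alpha_bar = cumulative_signal(betas)

# Fewer inference steps means fewer model evaluations, but larger jumps.
for steps in (20, 100):
    timesteps = inference_timesteps(len(betas), steps)
    print(f"{steps} steps, first timesteps: {timesteps[:3]}")
```

Each selected timestep costs one model evaluation, so the 20-step run is roughly five times cheaper than the 100-step run but takes coarser jumps through the noise levels. Real schedulers differ mainly in how they compute each jump, which is why they can be swapped to suit the preferred trade-off.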

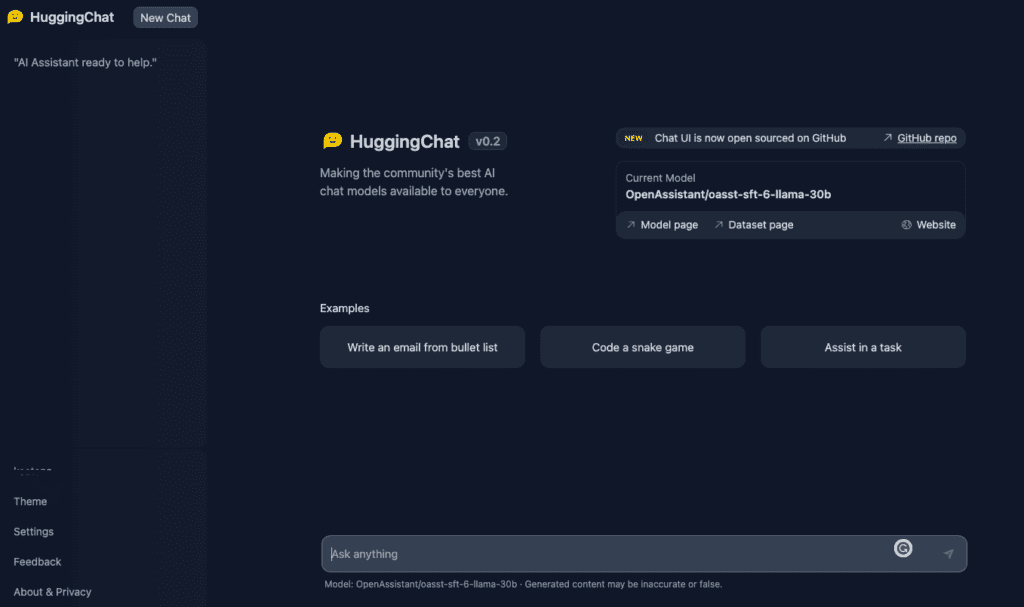

- In its latest development, Hugging Face has introduced HuggingChat, its own chatbot. The interface is very similar to ChatGPT, but it also links to the model behind the chatbot (LLaMA-based) and to the corresponding dataset page. Furthermore, the Chat UI is open-sourced on GitHub.

- Note that many models on Hugging Face are gated: users must request access and receive approval before they can download them. The aim is to limit access to those who can provide a valid rationale for obtaining the model.
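In practice, gating means that a download request has to carry the access token of an approved account. The sketch below only builds such a request with Python's standard library and does not send it. The repository name, file name, and token are placeholders, and the helper `gated_file_request` is a name invented for this example.

```python
import urllib.request


def gated_file_request(repo_id, filename, token, revision="main"):
    """Build (but do not send) an authenticated request for a file in a model repo.

    The token is passed as a Bearer token in the Authorization header.
    """
    url = f"https://huggingface.co/{repo_id}/resolve/{revision}/{filename}"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


# Placeholder values: not a real repository or token.
request = gated_file_request(
    repo_id="some-org/some-gated-model",
    filename="config.json",
    token="hf_example_token",
)
print(request.full_url)
```

Sending the request with `urllib.request.urlopen(request)` should only succeed if the account behind the token has been granted access to the repository. Otherwise the server is expected to reject it with an HTTP error.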

Stability AI: The New Frontier

Stability AI, another crucial player in the open-source AI realm, offers a set of open-source models for text and image generation.

- Stable Diffusion – a set of open-source models for text-to-image generation.

- DeepFloyd IF – a powerful text-to-image model that can smartly integrate text into images.

- StableLM – an open-source LLM. The alpha version of the model is available with 3 billion and 7 billion parameters, with 15B to 65B parameter models to follow. Developers can freely inspect, use, and adapt the StableLM base models for commercial or research purposes.

- StableVicuna – the first large-scale open-source chatbot trained via reinforcement learning from human feedback (RLHF). StableVicuna is based on a fine-tuned LLaMA 13B model.

- Stable Animation – a text-to-animation tool, currently available to developers only because it doesn’t have a user-friendly interface yet. The tool offers three ways to create animations:

- Text to animation: Users input a text prompt and tweak various parameters to produce an animation.

- Text input + initial image input: Users provide an initial image that acts as the starting point of their animation. A text prompt is used in conjunction with the image to produce the final output animation.

- Input video + text input: Users provide an initial video to base their animation on. By tweaking various parameters, they arrive at a final output animation that is additionally guided by a text prompt.


Other Open-Source Models

Numerous other open-source models have been released recently. Standout models include:

- Alpaca from a team at Stanford University,

- Dolly from the software firm Databricks, and

- Cerebras-GPT from AI firm Cerebras.

These models are important additions to the open-source AI ecosystem, further enriching its diversity.

However, it’s important to note that many open-source models are built upon the foundational models released by tech giants like Meta and OpenAI.

- For instance, HuggingChat and StableVicuna are based on Meta’s open-sourced LLaMA model.

- Furthermore, the open-source community has also benefited from the expansive public dataset called the Pile, compiled by EleutherAI, a nonprofit organization. The Pile was made possible largely due to OpenAI’s openness, which allowed a group of coders to reverse-engineer how GPT-3 was made.

Now, the open-source policies of these tech giants are evolving.

- OpenAI is reconsidering its previous open policy due to competition fears.

- Meta is seeking to balance transparency and safety, implementing measures like click-through licenses and restrictions on data usage.

Leading tech companies like Meta, Google, or Microsoft should be especially cautious about reputational risks, and thus take very seriously all the safety concerns related to open-sourcing their models.

Safety Concerns in Open-Source AI Projects

Despite their immense potential, open-source AI projects aren’t without risks. These include:

- Model Misuse: The accessibility of open-source AI models also means they can be misused by malicious actors for purposes such as deepfakes, automated spam, or disinformation campaigns.

- For example, many people have modified the open-source code of Stable Diffusion models to remove the safety filters and generate pornography and harmful images.

- Bias and Fairness: Open-source models trained on public data can reflect and propagate biases present in that data. They may also be fine-tuned in ways that introduce new biases, leading to outputs that are unfair or discriminatory.

- Lack of Oversight and Accountability: In an open-source environment, it can be challenging to maintain oversight of how models are being used and modified. This could lead to situations where it’s unclear who is responsible if something goes wrong.

- Data Security and Privacy: Open-source projects can be vulnerable to data security issues if not properly managed. Sensitive data could potentially be exposed during the development process, and there may also be risks associated with data used to train, test, or fine-tune models.

In conclusion, open-source projects in generative AI offer immense potential for advancing AI technology and its applications. However, it is crucial to acknowledge the responsibility that comes with this freedom. As the field continues to evolve, it becomes increasingly important to ensure that ethical considerations are prioritized. Safeguarding against potential misuse and unintended consequences is paramount.

- Source: https://www.topbots.com/open-source-projects-generative-ai/